The ElasticSearch+Grafana combination described in this article gives you visualization of your application performance, trend analysis, capacity projection, monitoring and alerting, all for free.

Introduction

Neither IIS nor Apache comes with a monitoring dashboard that shows graphs of requests/sec, response times, slow URLs, failed requests and so on. You need external tools to visualize that. ElasticSearch and Grafana are two such tools: together they let you collect logs from web servers, then parse, filter, sort and analyze them, and build dashboards from the results. ElasticSearch is a distributed JSON document store, much like a NoSQL database, so you can use it to store logs as JSON documents. Grafana then fetches those documents from ElasticSearch and renders them as dashboards. Both are free and open source.

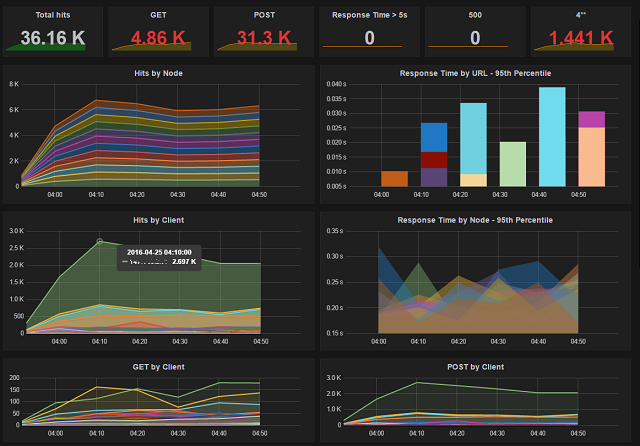

Using ElasticSearch and Grafana, I have built the above dashboard to monitor IIS and Apache websites. I use it to measure web server performance, identify slow URLs, see how many requests are failing, and spot overloaded servers, 404s and so on. You can also query for specific conditions, for example, when the average response time is over 5 seconds. Using some simple command line scripts, you can build alerts from those query results. This combination gives you visualization of your application performance, trend analysis, capacity projection, and near real-time monitoring and alerting, all for free.
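The alerting idea above can be sketched in PowerShell, the scripting language used later in this article. This is a minimal sketch rather than a finished alerting tool. It assumes that the `responsetime` field produced by the Logstash configuration shown later holds the IIS time-taken value in milliseconds and is mapped as a number, that the standard Logstash `@timestamp` field is present, and that ElasticSearch listens on 127.0.0.1:9200. The function names are made up for illustration.

```powershell
# Build the search body: average of the (assumed numeric) 'responsetime'
# field over the last $Minutes minutes, returning no individual documents.
function New-AvgResponseTimeQuery([int]$Minutes = 5) {
    $body = @{
        size  = 0
        query = @{ range = @{ '@timestamp' = @{ gte = "now-${Minutes}m" } } }
        aggs  = @{ avg_response = @{ avg = @{ field = 'responsetime' } } }
    }
    return ($body | ConvertTo-Json -Depth 6)
}

# Return $true when the average in a search response exceeds the threshold.
# The aggregation value is null when no documents matched; that is not an alert.
function Test-SlowResponse($Response, [double]$ThresholdMs = 5000) {
    $avg = $Response.aggregations.avg_response.value
    return ($null -ne $avg) -and ([double]$avg -gt $ThresholdMs)
}

# Usage against a running node (commented out so the sketch loads without one):
# $r = Invoke-RestMethod -Method Post -ContentType 'application/json' `
#        -Uri 'http://127.0.0.1:9200/logstash-*/_search' -Body (New-AvgResponseTimeQuery 5)
# if (Test-SlowResponse $r) { Write-Warning 'Average response time is over 5 seconds' }
```

Run from a scheduled task, the commented lines would turn the query into a simple alert; replacing `Write-Warning` with an email or chat notification is left to the reader.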


Architecture

You need the following products to build such dashboards:

- ElasticSearch to store the logs as JSON documents and make them searchable. Free and open source.

- Grafana to view the logs from ElasticSearch and create beautiful dashboards. Free and open source.

- Filebeat - a tool that is part of the ElasticSearch ecosystem. It ships logs from servers to ElasticSearch. You need to install it on each server and tell it which log files to ship. Free and open source.

- Logstash - a tool that is part of the ElasticSearch ecosystem. It receives the logs sent by Filebeat clients, parses them and stores them in ElasticSearch. Free and open source.

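The architecture is like this: Filebeat runs on each web server and ships log lines to Logstash; Logstash parses them and stores them in ElasticSearch; Grafana queries ElasticSearch to draw the dashboards.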

That sounds like a lot of software to install, but you can put ElasticSearch, Logstash and Grafana on a single server. Filebeat runs on each server you want to ship logs from. Depending on the volume of logs your web servers generate, you can scale out easily by adding more ElasticSearch nodes. The only resource-hungry component here is ElasticSearch; the rest consume little.

Automatic Installation

First, you need to install Java 8. ElasticSearch and Logstash also work with Java 7, but I am told Java 8 gives better performance.

Once you have installed Java, you can use the PowerShell script I have made to download the necessary software, then configure and run it in one shot on a Windows PC or server. It will start shipping logs from your local IIS log folder to the newly installed ElasticSearch node. For this to work, you need IIS 7+ and a local website already running. Otherwise, there will be nothing to show.

You need PowerShell 3.0+ for this.

Launch a PowerShell command prompt, go to the folder where you want everything to be downloaded and installed, and run these:

Invoke-WebRequest -Uri http://bit.ly/1pks5SY -OutFile SetupELKG.ps1

powershell -File SetupELKG.ps1

The script does the following:

$elasticsearch="https://download.elastic.co/elasticsearch/...

$logstash="https://download.elastic.co/logstash/logstash/...

$filebeat="https://download.elastic.co/beats/filebeat/...

$grafana="https://grafanarel.s3.amazonaws.com/winbuilds/...

$logstashconf="https://gist.githubusercontent.com/oazabir/...

$logstashtemplate="https://gist.githubusercontent.com/oazabir/...

$filebeatconf="https://gist.githubusercontent.com/oazabir/...

$grafanatemplate="https://gist.githubusercontent.com/oazabir/...

if (-not (Test-Path "Elastic")) { ni Elastic -ItemType "Directory" }

cd Elastic | Out-Null

$path=(pwd).ToString()

function Expand-ZIPFile($file, $destination)

{

Write-Host "Extracting $file -> $destination ...";

$shell = new-object -com shell.application

$zip = $shell.NameSpace($file)

foreach($item in $zip.items())

{

$shell.Namespace($destination).copyhere($item)

}

}

function DownloadAndUnzip($url, $path, $file) {

Write-Host "Downloading $url -> $path ...";

$fullpath = Join-Path $path $file;

Invoke-WebRequest -Uri $url -OutFile $fullpath

Expand-ZIPFile $fullpath $path

}

DownloadAndUnzip $filebeat $path "filebeat.zip"

DownloadAndUnzip $grafana $path "grafana.zip"

DownloadAndUnzip $logstash $path "logstash.zip"

DownloadAndUnzip $elasticsearch $path "elasticsearch.zip"

Write-Host "All Downloaded."

Write-Host "Start ElasticSearch..."

cd elasticsearch*

start cmd "/c .\bin\elasticsearch.bat"

cd ..

sleep 5

Write-Host "Start Logstash..."

cd logstash-*

if (-not (Test-Path "conf")) { ni conf -ItemType "Directory" }

cd conf

Invoke-WebRequest -Uri $logstashconf -OutFile logstash.yml

Invoke-WebRequest -Uri $logstashtemplate -OutFile logstash-template.json

cd ..

start cmd "/c .\bin\logstash.bat -f .\conf\logstash.yml"

cd ..

sleep 5

Write-Host "Start Grafana..."

cd grafana*

start cmd "/c bin\grafana-server.exe"

cd ..

sleep 5

Write-Host "Start Filebeat..."

cd filebeat*

Invoke-WebRequest -Uri $filebeatconf -OutFile filebeat_original.yml

$SystemDrive = [system.environment]::getenvironmentvariable("SystemDrive")

$logfolder = resolve-path "$SystemDrive\inetpub\logs\LogFiles\"

$iisLogFolders=@()

gci $logfolder | %{ $iisLogFolders += $_.FullName + "\*" }

if ($iisLogFolders.Length -eq 0) {

Write-Host "*** There is no IIS Folder configured. You need an IIS website. ***"

}

# put them all on filebeat.yml

$filebeatconfig = [IO.File]::ReadAllText((Join-Path (pwd) "filebeat_original.yml"));

$padding = " - "

$logpaths = [string]::Join([Environment]::NewLine + $padding, $iisLogFolders);

$pos = $filebeatconfig.IndexOf("paths:");

# keep everything up to and including "paths:", then splice in the IIS log folders

$filebeatconfig = $filebeatconfig.Substring(0, $pos+6) +

[Environment]::NewLine + $padding + $logpaths + $filebeatconfig.Substring($pos+6);

[IO.File]::WriteAllText((Join-Path (pwd) "filebeat.yml"), $filebeatconfig);

start cmd "/c filebeat.exe"

cd ..

sleep 5

$templatePath = Join-Path (pwd) "GrafanaWebLogTemplate.json"

Invoke-WebRequest $grafanatemplate -OutFile $templatePath

Write-Host ""

Write-Host "I am about to launch Grafana. This is what you need to do:"

Write-Host "1. Login to grafana using admin/admin"

Write-Host "2. Create a new Data source exactly named 'Elasticsearch Logstash'"

Write-Host " Name: Elasticsearch Logstash"

Write-Host " Type: ElasticSearch"

Write-Host " Url: http://127.0.0.1:9200"

Write-Host " Pattern: Daily"

Write-Host " Index name: (It will come automatically when you select pattern)"

Write-Host " Version: 2.x"

Write-Host " Click Add"

Write-Host "3. Click on Dashboards. Click Import. Import the file: $templatePath"

Sleep 5

pause

start "http://127.0.0.1:3000"

Write-Host "All done."

Once the script has run, you will get all four applications configured and running in four command prompt windows. If you close any of those windows, that application will stop running. So, you need to keep those command prompt windows open.
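To confirm that the windows are not just open but actually serving, you can probe the ports that appear in this article: 9200 for ElasticSearch, 5045 for the Logstash beats input and 3000 for Grafana. The following is a minimal sketch; `Test-TcpPort` is a helper name made up for illustration, and the one-second timeout is an arbitrary choice.

```powershell
# Try to open a TCP connection; return $true if it succeeds within the timeout.
function Test-TcpPort([string]$HostName, [int]$Port, [int]$TimeoutMs = 1000) {
    $client = New-Object System.Net.Sockets.TcpClient
    try {
        $pending = $client.BeginConnect($HostName, $Port, $null, $null)
        if (-not $pending.AsyncWaitHandle.WaitOne($TimeoutMs)) { return $false }
        $client.EndConnect($pending)   # throws if the connection was refused
        return $true
    } catch {
        return $false
    } finally {
        $client.Close()
    }
}

# Ports taken from this article's configuration.
$services = @{ ElasticSearch = 9200; Logstash = 5045; Grafana = 3000 }
foreach ($name in $services.Keys) {
    $state = if (Test-TcpPort '127.0.0.1' $services[$name]) { 'listening' } else { 'NOT listening' }
    Write-Host "$name (port $($services[$name])): $state"
}
```

Filebeat is not in the list because it only makes outbound connections to Logstash and does not listen on a port of its own in this setup.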

Manual Installation

You can follow the instructions below to download and configure them individually.

ElasticSearch

Visit https://www.elastic.co/downloads/elasticsearch and download the latest binary.

You can then run bin/elasticsearch on Unix or bin\elasticsearch.bat on Windows to get the ElasticSearch server up and running.

Once it is running properly, logs are being shipped and Grafana is showing data, configure it as a service on Windows or a daemon on Unix.

Logstash

Visit https://www.elastic.co/downloads/logstash and download the latest binary.

Once you have downloaded and extracted it, create a conf folder inside the extracted logstash folder, at the same level as the bin folder. Then download the logstash.yml file and the logstash-template.json file into that folder.

Then you need to run logstash using:

.\bin\logstash.bat -f .\conf\logstash.yml

If there's no scary looking error message, then it is running and has picked up the right configuration.

Filebeat

Download this from https://www.elastic.co/downloads/beats/filebeat.

Extract it. There will be a filebeat.yml file. Delete that. Download my filebeat.yml instead.

Open the file and add the path of the IIS log folders in it. By default, the file looks like this:

filebeat:

  prospectors:

    -

      paths:

        - /var/log/*.log

Once you have added a path, it will look like this:

filebeat:

  prospectors:

    -

      paths:

        - C:\inetpub\logs\LogFiles\W3SVC1\*

This is the tricky part. When you are indenting the lines, make sure you use SPACE, not TAB. Most editors will put TAB to indent the line. Filebeat will not work with TABs. It has to be SPACE. And make sure there's a hyphen before each path.
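Because a stray TAB is hard to spot by eye, a few lines of PowerShell can find it for you. This is a minimal sketch; `Find-TabIndentedLines` is a name made up for illustration, and it only checks leading whitespace, not YAML validity in general.

```powershell
# Return the 1-based numbers of lines whose leading whitespace contains a TAB.
function Find-TabIndentedLines([string]$Text) {
    $lines = $Text -split "`r?`n"
    for ($i = 0; $i -lt $lines.Count; $i++) {
        if ($lines[$i] -match '^\s*\t') { $i + 1 }
    }
}

# Usage (run from the Filebeat folder):
# Find-TabIndentedLines (Get-Content .\filebeat.yml -Raw)
```

If the function prints nothing, the indentation contains only spaces.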

Just run filebeat.exe and it will do the job.

When things are working well, install it as a service.

Grafana

- Download Grafana, extract and run bin\grafana-server.exe.

- Then open http://127.0.0.1:3000/.

- Login using admin, admin.

- Click Data Sources.

- Add a new data source exactly named Elasticsearch Logstash.

- Select Type as ElasticSearch.

- Put URL as http://127.0.0.1:9200.

- Select Pattern as Daily.

- Select Version as 2.x.

- Index name will automatically come as [logstash-]YYYY.MM.DD, which matches the daily index names Logstash creates. Leave it as it is.

- Click Add.

- Now download the Dashboard template I have made.

- On Grafana, click on Dashboards, and Import the Dashboard template.

- You are ready to rock!

If you see no graphs, then no IIS logs are being shipped. Check the Filebeat, Logstash and ElasticSearch console windows for errors. If there are no errors, then the log files have no data in them.
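To check the last possibility, you can count the real entries in the IIS log files, ignoring the `#` header lines that the Logstash filter drops anyway. A minimal sketch follows; `Measure-IisLogEntries` is a name made up for illustration.

```powershell
# Count non-empty lines that are not W3C header lines (those start with '#').
function Measure-IisLogEntries([string[]]$Lines) {
    return @($Lines | Where-Object { $_ -and -not $_.StartsWith('#') }).Count
}

# Usage against the default IIS log location used by the setup script:
# Get-ChildItem "$env:SystemDrive\inetpub\logs\LogFiles" -Recurse -Filter *.log |
#     ForEach-Object { '{0}: {1} entries' -f $_.Name, (Measure-IisLogEntries (Get-Content $_.FullName)) }
```

A file that reports zero entries contains only headers, so there is nothing for Filebeat to ship from it.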

Points of Interest

ElasticSearch

Once you have a local installation running, you have a single-node setup. You can deploy ElasticSearch on as many servers as you want. With very simple configuration changes, the ElasticSearch nodes will detect each other and run as a cluster. Detailed guidance on configuring a cluster is available in the ElasticSearch documentation.

When you have a cluster, the Kopf plugin is very handy for monitoring the cluster nodes, making ad hoc config changes, and manipulating data stored in the cluster. Here, you can see a three node cluster.
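If you prefer a script to a plugin, the cluster health endpoint of ElasticSearch (`/_cluster/health`) reports a `status` of green, yellow or red together with `number_of_nodes`. The sketch below only interprets such a response; the function name and the rule "not red and at least the expected number of nodes" are my own assumptions, not an official health policy.

```powershell
# Decide whether a /_cluster/health response looks acceptable.
function Test-ClusterHealthy($Health, [int]$ExpectedNodes = 1) {
    return ($Health.status -ne 'red') -and ([int]$Health.number_of_nodes -ge $ExpectedNodes)
}

# Usage against a running node (commented out so the sketch loads without one):
# $h = Invoke-RestMethod -Uri 'http://127.0.0.1:9200/_cluster/health'
# if (-not (Test-ClusterHealthy $h 3)) { Write-Warning "Cluster status: $($h.status), nodes: $($h.number_of_nodes)" }
```

Yellow is accepted here because a single-node setup like the one in this article cannot allocate replica shards and therefore normally reports yellow.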

There's a bit of a learning curve to getting comfortable with ElasticSearch, but the documentation is very useful. At a minimum, you need to understand indexes, templates, documents, and clustering.

The ElasticSearch ecosystem has its own dashboard tool called Kibana. Personally, I find it visually unappealing and it has no access control whatsoever. Thus I go for Grafana.

Logstash

Logstash receives data in various formats from Filebeat and other tools, then parses and formats it and saves it in the proper index in ElasticSearch. You can think of Logstash as a central server that processes all incoming logs and other data. Using Logstash, you get control over what you accept into ElasticSearch.

Logstash parses the IIS logs and converts each line into an ElasticSearch document. If you look into the config, you will see how it does the parsing:

input {

beats {

port => 5045

type => 'iis'

}

}

filter {

if [message] =~ "^#" {

drop {}

}

grok {

patterns_dir => "./patterns"

match => [

"message", "%{TIMESTAMP_ISO8601:timestamp} %{IPORHOST:serverip} %{WORD:verb} %{NOTSPACE:request} %{NOTSPACE:querystring} %{NUMBER:port} %{NOTSPACE:auth} %{IPORHOST:clientip} %{NOTSPACE:agent} %{NOTSPACE:referrer} %{NUMBER:response} %{NUMBER:sub_response} %{NUMBER:sc_status} %{NUMBER:responsetime}",

"message", "%{TIMESTAMP_ISO8601:timestamp} %{IPORHOST:serverip} %{WORD:verb} %{NOTSPACE:request} %{NOTSPACE:querystring} %{NUMBER:port} %{NOTSPACE:auth} %{IPORHOST:clientip} %{NOTSPACE:agent} %{NUMBER:response} %{NUMBER:sub_response} %{NUMBER:sc_status} %{NUMBER:responsetime}",

"message", "%{TIMESTAMP_ISO8601:timestamp} %{IPORHOST:serverip} %{WORD:verb} %{NOTSPACE:request} %{NOTSPACE:querystring} %{NUMBER:port} %{NOTSPACE:auth} %{IPORHOST:clientip} %{NOTSPACE:agent} %{NUMBER:response} %{NUMBER:sub_response} %{NUMBER:sc_status}"

]

}

date {

match => [ "timestamp", "yyyy-MM-dd HH:mm:ss" ]

locale => "en"

}

}

filter {

if "_grokparsefailure" in [tags] {

} else {

mutate {

remove_field => ["message", "timestamp"]

}

}

}

output {

elasticsearch {

hosts => ["127.0.0.1:9200"]

index => "logstash-%{+YYYY.MM.dd}"

template => "./conf/logstash-template.json"

template_name => "logstash"

document_type => "iis"

template_overwrite => true

manage_template => true

}

}

The input section specifies the port to listen on.

The filter section is where the work happens. It applies regular expressions to IIS log lines and converts them to JSON. You will see multiple regular expressions that handle the common W3C log formats in various IIS versions.

If you add or remove log fields in IIS, then you need to update these regular expressions. The three I have configured cover the default W3C formats of IIS log files.
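To get a feel for what the first of those patterns extracts, here is a rough PowerShell equivalent that you can try on a log line without starting Logstash. It is a sketch under simplifying assumptions: `\S+` stands in for grok's stricter IPORHOST and NOTSPACE patterns, `\d+` for NUMBER, only a few of the captured fields are returned, and the function name is made up for illustration.

```powershell
# Parse one IIS W3C log line the way the first grok pattern does (simplified).
function ConvertFrom-IisLogLine([string]$Line) {
    # Header lines start with '#'; the Logstash filter drops them as well.
    if ($Line.StartsWith('#')) { return $null }

    $pattern = '^(?<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}) (?<serverip>\S+) ' +
               '(?<verb>\w+) (?<request>\S+) (?<querystring>\S+) (?<port>\d+) ' +
               '(?<auth>\S+) (?<clientip>\S+) (?<agent>\S+) (?<referrer>\S+) ' +
               '(?<response>\d+) (?<sub_response>\d+) (?<sc_status>\d+) (?<responsetime>\d+)$'
    $m = [regex]::Match($Line, $pattern)
    if (-not $m.Success) { return $null }   # comparable to a _grokparsefailure

    # Same timestamp format as the date filter in the Logstash config.
    $culture = [System.Globalization.CultureInfo]::InvariantCulture
    $stamp = [datetime]::ParseExact($m.Groups['timestamp'].Value, 'yyyy-MM-dd HH:mm:ss', $culture)

    return [pscustomobject]@{
        timestamp    = $stamp
        verb         = $m.Groups['verb'].Value
        request      = $m.Groups['request'].Value
        clientip     = $m.Groups['clientip'].Value
        response     = [int]$m.Groups['response'].Value
        responsetime = [int]$m.Groups['responsetime'].Value
    }
}

# Usage with a made-up log line (not taken from a real server):
# ConvertFrom-IisLogLine '2016-05-30 10:15:42 10.0.0.5 GET /home/index - 80 - 192.168.1.20 Mozilla/5.0+(Windows+NT+10.0) - 200 0 0 1532'
```

A line that returns nothing here has a different field layout from the first pattern, which is the situation in which you would need to adjust the grok expressions.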

I have added a second filter to remove the message field, which contains the full log line as it is received. This helps save storage in ElasticSearch. If parsing is successful, there's no need to keep the original message.

The output section defines where Logstash sends the logs. It is configured to connect to ElasticSearch on localhost. The template file logstash-template.json holds the index template for IIS logs.
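The `index` setting in the output section means that one index is created per day, named after the UTC date of each event. The sketch below computes those names in PowerShell, which is handy when you want to query or clean up specific days. `Get-LogstashIndexName` is a name made up for illustration.

```powershell
# Name of the daily index that "logstash-%{+YYYY.MM.dd}" produces for a given time.
function Get-LogstashIndexName([datetime]$Date) {
    $utc = $Date.ToUniversalTime()
    $culture = [System.Globalization.CultureInfo]::InvariantCulture
    return 'logstash-' + $utc.ToString('yyyy.MM.dd', $culture)
}

# Usage: index names for the last seven days.
# 0..6 | ForEach-Object { Get-LogstashIndexName ([datetime]::UtcNow.AddDays(-$_)) }
```

Daily indexes are also what the Daily pattern in the Grafana data source expects.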

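Logstash has a learning curve, and you need to get the configuration right. Although the file here is named logstash.yml, the pipeline configuration shown above is written in Logstash's own configuration syntax, not YAML, so a YAML validator will not help with it. Logstash can check the file for you before launch through its config test option (-t). The Filebeat configuration, on the other hand, is YAML, and an online YAML validator is useful for checking it.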

The grok filter is the parser for incoming data. You can test the regular expressions a grok filter needs using the Grok Debugger tool.

There is also detailed documentation on the many ways you can receive data into Logstash, parse it and feed it into ElasticSearch. Logstash can also load balance across multiple ElasticSearch nodes.

Conclusion

ElasticSearch is a very powerful product. It is a multi-purpose distributed JSON document store and also a powerful search engine. The most frequent use cases for ElasticSearch are creating searchable documents, implementing auto-completion, and aggregating and analyzing logs. Grafana is a beautiful dashboard tool that takes ElasticSearch, among many others, as a data source. Combining these two, you can build sophisticated monitoring and reporting tools to get a holistic view of how your application is performing and where the issues are.

History

- 30th May, 2016: Initial version