Introduction

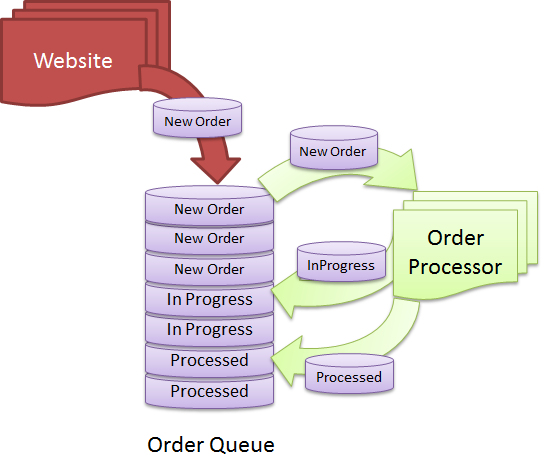

We have Queues everywhere. There are queues for asynchronously sending notifications like email and SMS in most websites. E-Commerce sites have queues for storing orders, processing and dispatching them. Factory Assembly line automation systems have queues for running tasks in parallel, in a certain order. Queue is a widely used data structure that sometimes have to be created in a database instead of using specialized queue technologies like MSMQ. Running a high performance and highly scalable queue using database technologies is a big challenge and it’s hard to maintain when the queue starts to get millions of rows queue and dequeued per day. Let me show you some common design mistakes made in designing Queue-like tables and how to get maximum performance and scalability from a queue implemented using simple database features.

Let’s first identify the challenges you have in such queue tables:

- The table is both read and write. Thus queuing and dequeuing impact each other and cause lock contention, transaction deadlocks, IO timeouts, etc. under heavy load.

- When multiple receivers try to read from the same queue, they randomly get duplicate items picked out of the queue, thus resulting in duplicate processing. You need to implement some kind of high performance row lock on the queue so that the same item never gets picked up by concurrent receivers.

- The Queue table needs to store rows in a certain order and read in a certain order, which is an index design challenge. It’s not always first in and first out. Sometimes Orders have higher priority and need to be processed regardless of when they are queued.

- The Queue table needs to store serialized objects in XML or binary form, which becomes a storage and index rebuild challenge. You can’t rebuild index on the Queue table because it contains text and/or binary fields. Thus the tables keep getting slower and slower every day and eventually queries start timing out until you take a downtime and rebuild the indexes.

- During dequeue, a batch of rows are selected, updated and then returned for processing. You have a “

State” column that defines the state of the items. During dequeue, you select items of certain state. Now State only has a small set of values, e.g. PENDING, PROCESSING, PROCESSED, ARCHIVED. As a result, you cannot create index on “State” column because that does not give you enough selectivity. There can be thousands of rows having the same state. As a result, any dequeue operation results in a clustered index scan that’s both CPU and IO intensive and produces lock contention. - During dequeue, you cannot just remove the rows from table because that causes fragmentation in the table. Moreover, you need to retry orders/jobs/notification N times in case they fail on the first attempt. This means rows are stored for longer period, indexes keep growing and dequeue gets slower day by day.

- You have to archive processed items from the Queue table to a different table or database, in order to keep the main Queue table small. That means moving large amount of rows of some particular status to another database. Such large data removal leaves the table highly defragmented causing poor queue/dequeue performance.

- You have a 24x7 business. You have no maintenance window where you can take a downtime and archive large number of rows. This means you have to continuously archive rows without affecting production queue-dequeue traffic.

If you have implemented such queue tables, you might have suffered from one or more of the above challenges. Let me give you some tips on how to overcome these challenges and how to design and maintain a high performance queue table.

Building a Typical Queue in SQL Server

Let’s take a typical Queue table as an example and we will see how well it works under concurrent load.

CREATE TABLE [dbo].[QueueSlow](

[QueueID] [int] IDENTITY(1,1) NOT NULL,

[QueueDateTime] [datetime] NOT NULL,

[Title] [nvarchar](255) NOT NULL,

[Status] [int] NOT NULL,

[TextData] [nvarchar](max) NOT NULL

) ON [PRIMARY]

GO

CREATE UNIQUE CLUSTERED INDEX [PK_QueueSlow] ON [dbo].[QueueSlow]

(

[QueueID] ASC

)

GO

CREATE NONCLUSTERED INDEX [IX_QuerySlow] ON [dbo].[QueueSlow]

(

[QueueDateTime] ASC,

[Status] ASC

)

INCLUDE ( [Title])

GO

In this queue tables, items are dequeued using QueueDateTime as the sort order in order to simulate first-in-first-out or a priority queue. The QueueDateTime is not necessarily the time when the item is queued. It’s the time when it should be processed. So, item with the earliest time gets picked up early. The TextData is the large character field to store the payload.

The table has a non-clustered index on QueueDateTime in order to make the sorting using QueueDateTime faster during dequeue.

First, let’s populate this table with around 40K rows worth 500MB data, where each row has varying size of payload.

set nocount on

declare @counter int

set @counter = 1

while @counter < @BatchSize

begin

insert into [QueueSlow] (QueueDateTime, Title, Status, TextData)

select GETDATE(), 'Item no: ' + CONVERT(varchar(10), @counter), 0,

REPLICATE('X', RAND() * 16000)

set @counter = @counter + 1

end

Then we will dequeue 10 items at a time. During dequeue, it will pick first 10 items based on QueueDateTime and Status = 0 and then it will update the Status to 1 to mark the items as being processed. We aren’t deleting the rows from the queue table during dequeue because we want to make sure the items aren’t lost forever in case the application that receives them fails to process them.

CREATE procedure [dbo].[DequeueSlow]

AS

set nocount on

declare @BatchSize int

set @BatchSize = 10

declare @Batch table (QueueID int, QueueDateTime datetime, _

Title nvarchar(255), TextData nvarchar(max) )

begin tran

insert into @Batch

select Top (@BatchSize) QueueID, QueueDateTime, Title, TextData from QueueSlow

WITH (UPDLOCK, HOLDLOCK)

where Status = 0

order by QueueDateTime ASC

declare @ItemsToUpdate int

set @ItemsToUpdate = @@ROWCOUNT

update QueueSlow

SET Status = 1

WHERE QueueID IN (select QueueID from @Batch)

AND Status = 0

if @@ROWCOUNT = @ItemsToUpdate

begin

commit tran

select * from @Batch

print 'SUCCESS'

end

else

begin

rollback tran

print 'FAILED'

end

The above query first selects 10 rows from the QueueSlow table, and then it stores in a temporary table variable. It then changes the status of the selected rows to make sure no one else takes those rows again. If it is able to successfully update the same 10 rows and no one else has taken them by this time, then it commits the transaction, which makes those items marked as done and are not picked up on subsequent calls. But if it cannot update the same 10 rows that it picked with the right status, someone else has taken them in the meantime and it aborts in order to preserve consistency.

Let’s measure the IO performance:

set statistics IO on

exec dequeueslow

The output of this is:

Table '#3B75D760'. Scan count 0, logical reads 112, physical reads 0, read-ahead reads 0,

lob logical reads 83, lob physical reads 0, lob read-ahead reads 0.

Table 'QueueSlow'. Scan count 1, logical reads 651, physical reads 0, read-ahead reads 0,

lob logical reads 166, lob physical reads 0, lob read-ahead reads 166.

Table 'QueueSlow'. Scan count 0, logical reads 906, physical reads 0, read-ahead reads 0,

lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Table '#3B75D760'. Scan count 1, logical reads 11, physical reads 0, read-ahead reads 0,

lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Table '#3B75D760'. Scan count 1, logical reads 11, physical reads 0, read-ahead reads 0,

lob logical reads 464, lob physical reads 0, lob read-ahead reads 0.

Let’s sum it up:

- Total Logical Read = 1695

- Total LOB Logical Read = 675

- LOB Logical Read Count = 3

We will compare these with a faster solution and see how much improvement we get. One thing to notice is the high LOB Logical Read and the number of times LOB Logical Read is performed. There should be no reason to read Large Objects thrice as we need to load them only once. This clearly shows that SQL Server has to unnecessarily read large objects in order to satisfy the queries.

As queue and dequeue happens, and rows are moved out of the table for archival, the table gets fragments day by day. You can’t rebuild the clustered index online in order to eliminate the fragmentation because it has a varchar(max) field. So, you have no choice but to take a downtime and then rebuild the indexes. Downtimes are always expensive, even if you don’t have a 24x7 system.

Building a Faster Queue

First you need to reduce the high logical read. In order to do that, you need to split the QueueSlow table into two tables – QueueMeta and QueueData. QueueMeta contains only the fields that participate in WHERE clause. It’s a lightweight table only to hold the fields that are used in search queries. Thus SQL Server will be able to fit several rows in a 8K page and running through this table will be faster than running throw QueueSlow table where each page contains only a handful of rows, sometimes a single row spans across multiple pages due to the large payload.

Secondly, you will be able to rebuild indexes on the QueueMeta table online, while the production traffic is still hitting the table. This way, the performance of the QueueMeta table will not degrade and you won’t need a downtime anymore.

CREATE TABLE [dbo].[QueueMeta](

[QueueID] [int] IDENTITY(1,1) NOT NULL,

[QueueDateTime] [datetime] NOT NULL,

[Title] [nvarchar](255) NOT NULL,

[Status] [int] NOT NULL,

CONSTRAINT [PK_Queue] PRIMARY KEY CLUSTERED

(

[QueueID] ASC

)

GO

ALTER TABLE [dbo].[QueueMeta] ADD CONSTRAINT [PK_Queue] PRIMARY KEY CLUSTERED

(

[QueueID] ASC

)

GO

CREATE NONCLUSTERED INDEX [IX_QueueDateTime] ON [dbo].[QueueMeta]

(

[QueueDateTime] ASC,

[Status] ASC

)

INCLUDE ( [Title])

This table holds all the fields that appear in the search query. All other fields related to the payload are moved to the QueueData table.

CREATE TABLE [dbo].[QueueData](

[QueueID] [int] NOT NULL,

[TextData] [nvarchar](max) NOT NULL

) ON [PRIMARY]

GO

CREATE UNIQUE NONCLUSTERED INDEX [IX_QueueData] ON [dbo].[QueueData]

(

[QueueID] ASC

)

GO

There’s no clustered index on this table.

The Dequeue procedure is slightly modified to perform the query on the QueueMeta table first, and then select the payload from QueueData table.

CREATE procedure [dbo].[Dequeue]

AS

set nocount on

declare @BatchSize int

set @BatchSize = 10

declare @Batch table (QueueID int, QueueDateTime datetime, Title nvarchar(255))

begin tran

insert into @Batch

select Top (@BatchSize) QueueID, QueueDateTime, Title from QueueMeta

WITH (UPDLOCK, HOLDLOCK)

where Status = 0

order by QueueDateTime ASC

declare @ItemsToUpdate int

set @ItemsToUpdate = @@ROWCOUNT

update QueueMeta

SET Status = 1

WHERE QueueID IN (select QueueID from @Batch)

AND Status = 0

if @@ROWCOUNT = @ItemsToUpdate

begin

commit tran

select b.*, q.TextData from @Batch b

inner join QueueData q on q.QueueID = b.QueueID

print 'SUCCESS'

end

else

begin

rollback tran

print 'FAILED'

end

When I populate the QueueMeta and QueueData with exact same data from QueueSlow and rebuild index on both table and do the comparison, I can see some significant improvement:

- Total Logical Read = 1546 (vs 1695)

- Total LOB Read = 380 (vs 675)

- LOB Read Count = 1 (vs 3)

Here you see the number of logical reads is 149 less and LOB read is 295 less. The LOB read count is just 1, exactly what we should expect.

Comparing the Performance Under Load

When I simulate concurrent queue and dequeue and measure the performance counters, the result looks like this:

| Slow Queue | Fast Queue |

|  |

Let’s investigate the important counters and see the improvements:

- Page Splits/sec – The faster solution has lower page split, almost none compared to the slower solution. This is because during insert, sometimes a row does not fit on a page that is partially populated and thus need to be split into new page. You can read more about Page Splits from here.

- Transactions/sec – we get more value for our money. The more transactions per sec, the more queue operation happens while dequeue goes on. It shows the performance of fast queue operation is better compared to the slower option.

- Lock Timeouts/sec – It shows how many queries wait for lock on a certain object and eventually gave up because it does not get the lock on time. The higher the value, the worse performance you get from your database. You have to try to keep this near zero. The above result shows the number of lock timeouts in the fast queue is less than the slower solution.

- Batch Requests/Sec – Shows how many

SELECT queries are performed per second. It shows both solutions are performing same number of SELECTs, although the faster solution is reading from multiple tables. So, the dequeue stored procedure on the slower solution is not significantly optimal.

It’s not just better performance, the greatest benefit is you can run INDEX DEFRAG online on the QueueMeta table and thus prevent the Queue from slowing down gradually.

Fastest Queue in SQL Server 2005, 2008

SQL Server 2005 introduced the OUTPUT clause in UPDATE, INSERT and DELETE statements. It allows you to get the rows that are affected by the insert, update, delete from a single query. You don’t have to first select some rows, then lock them, then update them and then return them. You can do it in one shot – update and return.

Here’s the dequeue procedure after the change:

alter procedure [dbo].[DequeueFast]

AS

set nocount on

declare @BatchSize int

set @BatchSize = 100

update top(@BatchSize) QueueMeta WITH (UPDLOCK, READPAST)

SET Status = 1

OUTPUT inserted.QueueID, inserted.QueueDateTime, inserted.Title, qd.TextData

FROM QueueMeta qm

INNER JOIN QueueData qd

ON qm.QueueID = qd.QueueID

WHERE Status = 0

This single line UPDATE statement does everything that the Dequeue procedures we have seen so far do. The IO stats are impressive as well:

Table 'QueueMeta'. Scan count 1, logical reads 522, physical reads 0,

read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Table 'QueueData'. Scan count 0, logical reads 31, physical reads 0,

read-ahead reads 0, lob logical reads 56, lob physical reads 0, lob read-ahead reads 0.

- Total Logical Read = 553

- LOB Logical Read = 56

It is at least 3 times less IO intensive than the faster solution.

I have used a special locking strategy here – READPAST. This indicates that if it finds some rows locked, it won’t wait for the rows to be unlocked. It will just ignore them. Since we aren’t doing a SELECT and then an UPDATE, there’s no need to use the HOLDLOCK here. This is one of the reasons for better performance.

Archiving Strategy for Queue Tables

As you queue records on the Queue table, it keeps growing. You have to ensure the queue table remains within reasonable size so that backups and index rebuild don’t take forever. There are two ways to archive rows – continuously round the clock in small batches or in large batch during off peak. If you have a 24x7 system and there’s no off peak period for you, then you need to archive in smaller batch, continuously with some delay. However, you should not delete the rows from queue table during dequeue because delete is an expensive operation. It makes dequeue slower. Instead you should delete the processed items in the queue in another background job so that it does not impact the dequeue performance. Moreover, in a reliable queue, you can’t delete the rows during dequeue. If the process that deals with the items fails for some reason and does not queue them again for retry, then the items are lost forever. Sometimes you need to keep an eye on the queue to ensure items that are picked up for processing gets processed within a certain period. If not, then they need to be sent to the front of the queue to be picked up again for processing. For all these reasons, it’s best to keep items in the queue during dequeue and just update their status.

Conclusion

Performance and reliability of your order processing systems, task execution systems or notification systems depend on how well you design your queues. Since these directly impact customer satisfaction and eventually your bottom line, it’s important you spend enough time making the right design decision while building your queues. Otherwise over time it becomes a liability and you end up having to re-engineer the queues at the cost of lost business and high resource engagement.

History

- 18th September, 2010: Initial post