Introduction

Programming has always been the process of converting requirements (something that has meaning to us humans) into instructions that have meaning to the underlying hardware.

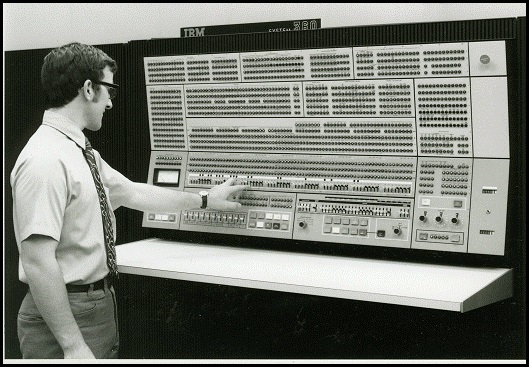

1GL

First generation languages (1GL) were closely tied to the hardware, requiring the human operator to physically manipulate toggle switches to enter machine language instructions directly.


2GL

Second generation languages (2GL) can be loosely categorized as assembly languages. Here we see opcodes representing instructions and the ability to express numbers and text as literals, as well as symbolic resolution of instruction and memory addresses.


3GL

Third generation languages (3GL) abstracted assembly language into a more human readable syntax with statements like if and goto. They were either compiled into assembly language, directly interpreted (Apple II BASIC did this), or translated into p-code by an interpreter (Commodore PET BASIC did this), and the interpreter itself was still often written in assembly language.

At this point, languages started to branch into specific domains:

- engineering languages

- functional programming languages

- object oriented programming languages

- logic programming languages

- statically typed programming languages

- dynamically typed programming languages

4GL

Fourth generation languages (4GL) are distinguished from 3GLs in that they are typically further abstracted from the underlying hardware. The line here gets fuzzy. I might argue that languages like C# that emit IL (intermediate language) are examples of a 4GL, in that the IL is hardware independent and is compiled into processor-specific machine code, usually at runtime. However, Wikipedia has a more distinctive definition: "Languages claimed to be 4GL may include support for database management, report generation, mathematical optimization, GUI development, or web development." (source) By that definition, we could say that languages like SQL and JavaScript, and script syntaxes like CSS and HTML, are (or are supposed to be) completely independent of the hardware they are running on.

5GL

Now we get to an interesting place, the fifth generation language (5GL), which abstracts the language itself such that it is based on "solving problems using constraints given to the program, rather than using an algorithm written by a programmer." (Fundamentals of Computer Programming, D.A.Godse, A.P.Godse) Or as Wikipedia states it: "fifth-generation languages are designed to make the computer solve a given problem without the programmer. This way, the user only needs to worry about what problems need to be solved and what conditions need to be met, without worrying about how to implement a routine or algorithm to solve them." Prolog is supposedly a 5GL. One of the ways to recognize (in my opinion) a 5GL is that 1) it is declarative and 2) the declarations are rules, from which the computer can make inferences (5GLs typically have an inference engine).
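To make "the declarations are rules, from which the computer can make inferences" a little more concrete, here is a toy forward-chaining inference loop in Python. It is only a minimal sketch and not how Prolog works internally (Prolog resolves goals by backward chaining with unification). The facts, the single rule, and the function names are all invented for illustration.

```python
# Hypothetical facts, invented purely for this illustration.
facts = {("parent", "alice", "bob"), ("parent", "bob", "carol")}


def grandparent_rule(known):
    """Rule: parent(X, Y) and parent(Y, Z) implies grandparent(X, Z)."""
    derived = set()
    for rel1, x, y1 in known:
        for rel2, y2, z in known:
            if rel1 == "parent" and rel2 == "parent" and y1 == y2:
                derived.add(("grandparent", x, z))
    return derived


def infer(known, rules):
    """Forward chaining: apply every rule until no new facts appear."""
    known = set(known)
    while True:
        new_facts = set()
        for rule in rules:
            new_facts |= rule(known) - known
        if not new_facts:
            return known
        known |= new_facts


conclusions = infer(facts, [grandparent_rule])
print(("grandparent", "alice", "carol") in conclusions)  # True
```

The caller only states what is known and what follows from what. The loop that decides when to stop applying rules is the (very small) inference engine.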

Unfortunately, if you look at an example of a Quicksort algorithm in Prolog:

    partition([], _, [], []).
    partition([X|Xs], Pivot, Smalls, Bigs) :-
        (   X @< Pivot ->
            Smalls = [X|Rest],
            partition(Xs, Pivot, Rest, Bigs)
        ;   Bigs = [X|Rest],
            partition(Xs, Pivot, Smalls, Rest)
        ).

    quicksort([]) --> [].
    quicksort([X|Xs]) -->
        { partition(Xs, X, Smaller, Bigger) },
        quicksort(Smaller), [X], quicksort(Bigger).

I fail to see how this was written "without the programmer."

6GL

There is no 6GL, but with hubris, I am going to propose that a 6GL is a highly visual (2D or virtual 3D, or even temporal 4D) environment in which you glue together desired behaviors (programs) from smaller behaviors (other programs / functions).

Human Meaning, Machine Meaning

At the end of the day, we still seem to be living in a world where a requirement, expressed in terms that have meaning to a person, needs to be translated into terms that have meaning to the machine. Doesn't it seem strange that something that you could often teach a child with a 3rd grade education, like basic accounting, requires the skills of a programmer to translate the requirement into something a machine can understand?

And more telling is that we usually require an even more skilled programmer to figure out, given a program or even a single function, what the requirements were that the program was intended to solve!

Basically, we have a huge translation process between human and machine meaning, and so far, it requires programmers to fill that void. Part of the problem of course is that the language we humans use to express our thoughts and intentions is itself a complex and inconsistent syntax where meaning is often contextual. If people communicated in a more rigorous (mathematical) language, the translation between person and machine might be more straightforward.

Supposedly AIs can start filling that void. The Forbes article of July 11, 2016, "Robots Replacing Developers? This Startup Uses Automation To Build Smart Software", states that the company Dev9 "assembles teams that use artificial intelligence to develop custom software, eliminating strenuous processes and drastically reducing manual overhead." However: "Implementing techniques such as automated testing, continuous automation and automated deployments allows the Seattle-based company to meet a high standard, easily packaging and deploying into test environments. By automating traditionally manual processes, Dev9 empowers businesses to focus on growth, collaborating to fulfill technical needs in a way that consolidate complex workflows into a simple handoff."

Is this artificial intelligence? Not really: the word "automated / automation" is used three times in that sentence. The interviewer then asks about "creativity", and here is the telling statement (my underline): "I think the answer is that humans are still the providers of meaning."

So there you go - the core issue with AI replacing developers is how well human meaning can be translated to something a machine can comprehend sufficiently to create a program.

FizzBuzz - A Play Example

FizzBuzz - Coding Horror, Why Can't Programmers.. Program?

Write a program that prints the numbers from 1 to 100. But for multiples of three print "Fizz" instead of the number and for the multiples of five print "Buzz". For numbers which are multiples of both three and five print "FizzBuzz".

The majority of comp sci graduates can't. I've also seen self-proclaimed senior programmers take more than 10-15 minutes to write a solution.

So, how does this simple requirement statement get translated into code?

C# and Succinc<T>

FizzBuzz written in C# with Succinc<T> extension methods:

    var fizzes = Cycle("", "", "Fizz");
    var buzzes = Cycle("", "", "", "", "Buzz");
    var words = fizzes.Zip(buzzes, (f, b) => f + b);
    var numbers = Range(1, 100);
    var fizzBuzz = numbers.Zip(words, (n, w) => w == "" ? n.ToString() : w);
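For comparison, the same cycle-and-zip idea can be sketched in Python using itertools.cycle from the standard library. This only illustrates the technique and is not a port of the library used above. The function name fizzbuzz_words is made up for this example.

```python
from itertools import cycle


def fizzbuzz_words(limit=100):
    """Build FizzBuzz output by zipping two endlessly repeating patterns."""
    fizzes = cycle(["", "", "Fizz"])            # every 3rd item is "Fizz"
    buzzes = cycle(["", "", "", "", "Buzz"])    # every 5th item is "Buzz"
    words = (f + b for f, b in zip(fizzes, buzzes))
    # zip stops as soon as the finite range runs out, so the infinite
    # cycles are only consumed as far as needed.
    return [word or str(n) for n, word in zip(range(1, limit + 1), words)]


for line in fizzbuzz_words(15):
    print(line)
```

Note that there is no modulo operator anywhere: the "multiple of three" and "multiple of five" requirements are encoded entirely in the lengths of the two repeating patterns.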

Let's look at some implementations people posted in response to that blog post.

Plain C#

An implementation in C# that's wrong because it doesn't print the number when not a multiple of 3 or 5:

    for (int i = 1; i < 101; i++)
    {
        if ((i % 3) == 0) Console.Write("Fizz");
        if ((i % 5) == 0) Console.Write("Buzz");
        Console.WriteLine();
    }

Ruby

    1.upto(100) do |i|
      out = nil
      out = out.to_s + 'Fizz' if i % 3 == 0
      out = out.to_s + 'Buzz' if i % 5 == 0
      puts out || i
    end

C++

Wrong because it starts at 0:

for(int i=0; i <= 100; printf(i%3==0 ? i%5==0 ? "Fizzbuzz" : "FIZZ" : i%5==0 ? "BUZZ" : "%i" ,i++));

Another C++ implementation that's wrong because it always prints the number when it's a multiple of 3 or 5, but doesn't print the number when it isn't:

    for(int loop = 1; loop <= 100; loop++)
    {
        if ((loop % 3 == 0) && (loop % 5 == 0))
        {
            cout << loop << ":\tFizzBuzz" << endl ;
        }
        else if (loop % 3 == 0)
        {
            cout << loop << ":\tFizz" << endl ;
        }
        else if (loop % 5 == 0)
        {
            cout << loop << ":\tBuzz" << endl ;
        }
    }

F#

    [1..100]
    |> Seq.map (function
        | x when x%5=0 && x%3=0 -> "FizzBuzz"
        | x when x%3=0 -> "Fizz"
        | x when x%5=0 -> "Buzz"
        | x -> string x)
    |> Seq.iter (printfn "%s")

LISP

I think I fixed this correctly, as the website was munching '<' and '>':

    (defun fizzbuzz (n)
      (when (> n 0)
        (fizzbuzz (- n 1))
        (format t "~a~%"
                (if (= (mod n 3) 0)
                    (if (= (mod n 5) 0) "FizzBuzz" "Fizz")
                    (if (= (mod n 5) 0) "Buzz" n)))))

    (progn (fizzbuzz 100) (values))

BrainF*ck

(as posted by Simon Forsberg on SOO):

++++++++++[>++++++++++<-]>>++++++++++>->>>>>>>>>>-->+++++++[->++++++++

++<]>[->+>+>+>+<<<<]+++>>+++>>>++++++++[-<++++<++++<++++>>>]+++++[-<++

++<++++>>]>-->++++++[->+++++++++++<]>[->+>+>+>+<<<<]+++++>>+>++++++>++

++++>++++++++[-<++++<++++<++++>>>]++++++[-<+++<+++<+++>>>]>-->---+[-<+

]-<<[->>>+>++[-->++]-->+++[---<-->+>-[<<++[>]]>++[--+[-<+]->>[-]+++++[

---->++++]-->[->+<]>>[.>]>++]-->+++]---+[-<+]->>-[+>++++++++++<<[->+>-

[>+>>]>[+[-<+>]>+>>]<<<<<<]>>[-]>>>++++++++++<[->->+<<]>[-]>[<++++++[-

>++++++++<]>.[-]]<<++++++[-<++++++++>]<.[-]<<[-<+>]]<<<.<]

Why show all these implementations?

- Amazingly, some of them are simply wrong - the programmer did not correctly read the requirements

- The languages illustrate the symbolic "impedance mismatch" between the clearly readable requirements and the implementation.

- In the last example, the syntax and semantics are so obscure as to be unrecognizable as an implementation of the FizzBuzz problem.

How Could We Get Closer to the Human-Readable Requirements?

Something that surprises me is that no one coded this close to the original requirement statement:

Write a program that prints the numbers from 1 to 100. But for multiples of three print "Fizz" instead of the number and for the multiples of five print "Buzz". For numbers which are multiples of both three and five print "FizzBuzz".

What would this look like if we threw out all requirements for performance and tried to coerce C# into looking more like the original requirement statement? One way to go about this is with lambda expressions to come closer to the syntax (at least in English) of the original requirement:

    public void Go()
    {
        ForEach(_ => NumberFrom(1).To(100),
                _ => IfDivisibleBy(3, () => Write("Fizz"))
                    .OrIfDivisibleBy(5, () => Write("Buzz"))
                    .Also(() => WriteSpace())
                    .Else(() => WriteNumber()));
    }

However, this is a syntactical mess as we humans are forced to use the syntax of the language. The syntax can be somewhat improved using delegates instead of Action and a more fluent style (method chaining):

    public void Go()
    {
        ForEach().NumberFrom(1).To(100).Do(
            () =>
            {
                IfDivisibleBy(3, Write, "Fizz").
                OrIfDivisibleBy(5, Write, "Buzz").
                Also(Write, " ").
                Else(WriteNumber);
            });
    }

In either case, we really haven't achieved much besides creating a bunch of highly customized methods that allow us to do call chaining. Under the covers, the methods are really just wrappers for the code that actually does the work, for example:

    public FizzBuzz2 ForEach()
    {
        return this;
    }

    public FizzBuzz2 NumberFrom(int n)
    {
        start = n;
        return this;
    }

    public FizzBuzz2 To(int n)
    {
        stop = n;
        return this;
    }

    public void Do(Action action)
    {
        for (n = start; n <= stop; n++)
        {
            conditionMet = false;
            action();
        }
    }

We even have a "do nothing" method ForEach whose sole purpose is to create a somewhat readable English sentence. Furthermore, to achieve this, the program state must now be preserved. Any programmer would scream at the fact that we're now maintaining a bunch of state information:

    protected int start;
    protected int stop;
    protected int n;
    protected bool conditionMet;

simply because we're trying (with questionable results) to get the code to be more immediately "human" readable, mostly through leveraging a fluent call chaining approach. Even the IfDivisibleBy and OrIfDivisibleBy methods have questionable meaning.

Furthermore, even the above code doesn't clearly express intent:

- Is 1 to 100 inclusive, or exclusive?

- "IfDivisibleBy" is poorly named. Of course the number is divisible by 3 or 5. The mistake I made is that "multiple of 3" is not the same thing as "divisible by 3". I should have written "DivisibleWithNoRemainder" or, even better, "MultipleOf".

The latter illustrates (which is why I left the mistake in the example) how easy it is to make a "translation" error between the requirement and the method name that implements the requirement. Granted, probably other people would not have made that mistake, but I did, and I'm happy to use myself as an example!
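Both of the points above (the inclusive range and the "multiple of" wording) can be pinned down explicitly in code. The following Python sketch is one hypothetical way to do that: the names is_multiple_of, RULES, describe and fizzbuzz are invented for this example, and the nuts and bolts still have to be written in the programming language.

```python
def is_multiple_of(number, factor):
    """True when number is an exact multiple of factor (no remainder)."""
    return number % factor == 0


# The requirement's phrases kept as data: "multiples of three print Fizz", etc.
RULES = [(3, "Fizz"), (5, "Buzz")]


def describe(number):
    """Return the word(s) for a number, or the number itself if no rule applies."""
    words = "".join(word for factor, word in RULES if is_multiple_of(number, factor))
    return words or str(number)


def fizzbuzz(first=1, last=100):
    """Describe every number from first to last, with last INCLUDED."""
    return [describe(n) for n in range(first, last + 1)]


print("\n".join(fizzbuzz(1, 15)))
```

The "multiples of both three and five" case needs no rule of its own here, because the two words are simply concatenated when both rules match.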

We Have a Long Way to Go

While we can sort of create a human readable program:

- The performance of the algorithm essentially collapses.

- State information must be preserved either globally within the implementing object or passed as a separate object.

- We're still having to implement the nuts and bolts of the real work directly in the programming language.

This really doesn't reduce the disconnect between human and machine meaning, and in fact can have the opposite effect.

Assembly Resolver - A Real World Example

Under certain specific conditions, we can however write better code. I recently had to write an assembly resolver with the following requirements:

- Ignores any assembly requests ending in ".resources"

- Attempts to find the assembly in a specified path

- Attempts to find the assembly in the same folder where the app is running

- Attempts to use the GAC to resolve the missing assembly.

- Assembly resolving can sometimes result in infinite recursion, so second attempts to resolve an assembly are ignored.
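Before looking at the real C# code, here is a language-neutral sketch of those five requirements in Python. It is not the resolver described in this article: it only locates a file rather than loading an assembly, the GAC lookup is replaced by a hypothetical fallback callable, and the class and attribute names are invented for illustration.

```python
from pathlib import Path


class Resolver:
    """Hypothetical resolver that mirrors the five requirements listed above."""

    def __init__(self, search_path, app_folder, fallback=None):
        self.search_path = Path(search_path)
        self.app_folder = Path(app_folder)
        # Stand-in for the GAC lookup: any callable that takes a name.
        self.fallback = fallback or (lambda name: None)
        self.in_progress = set()  # names currently being resolved

    def resolve(self, name):
        if name.endswith(".resources"):
            return None  # requirement 1: ignore resource requests
        if name in self.in_progress:
            return None  # requirement 5: ignore a recursive second attempt
        self.in_progress.add(name)
        try:
            # Requirements 2 and 3: the specified path first, then the app folder.
            for folder in (self.search_path, self.app_folder):
                candidate = folder / (name + ".dll")
                if candidate.exists():
                    return candidate
            return self.fallback(name)  # requirement 4: last resort
        finally:
            self.in_progress.discard(name)
```

The try/finally pair guarantees that a name is removed from the in-progress set even if a lookup raises, so a failed attempt cannot permanently block later requests for the same name.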

Take 1

My original code was written like this:

    private Assembly OnAssemblyResolve(object sender, ResolveEventArgs args)
    {
        Assembly assy = null;
        string assyName = args.Name.LeftOf(",");
        string fn = Path.Combine(dllPath, assyName + ".dll");

        if (assyName.EndsWith(".resources"))
        {
            return null;
        }

        if (File.Exists(fn))
        {
            if (!assemblyTryResolve.Contains(fn))
            {
                assemblyTryResolve.Add(fn);
                assy = Assembly.LoadFrom(fn);
                assemblyTryResolve.Remove(fn);
            }
            else
            {
                AddError("Tried to load " + assyName);
            }
        }
        else
        {
            if (!assemblyTryResolve.Contains(args.Name))
            {
                fn = assyName + ".dll";

                if (File.Exists(fn))
                {
                    assemblyTryResolve.Add(fn);
                    assy = Assembly.LoadFrom(fn);
                    assemblyTryResolve.Remove(fn);
                }
                else
                {
                    assemblyTryResolve.Add(args.Name);
                    assy = Assembly.Load(args.Name);
                    assemblyTryResolve.Remove(args.Name);
                }
            }
            else
            {
                AddError("Tried to load " + assyName);
            }
        }

        return assy;
    }

In my opinion, without comments describing what this code is supposed to do, we have completely broken the ability for a programmer to quickly look at the code and understand its meaning in human terms.

Take 2

My second attempt at writing this code, with little degradation to performance, looked like this:

    public Assembly OnAssemblyResolve(object sender, ResolveEventArgs args)
    {
        Assembly assy = null;
        string fullyQualifiedName = args.Name;
        string assyName;
        string localfn;

        GetAssemblyNameAndFilename(fullyQualifiedName, out assyName, out localfn);
        string fnFromDllPath = Path.Combine(dllPath, localfn);

        if (IsResource(assyName))
        {
            onError("Ignoring resource " + localfn);
            return null;
        }

        if (IsNewResolveRequest(fullyQualifiedName))
        {
            if (!AttemptResolution(fullyQualifiedName, fnFromDllPath, out assy))
            {
                if (!AttemptResolution(fullyQualifiedName, localfn, out assy))
                {
                    if (!TryOnceToResolve(fullyQualifiedName, fullyQualifiedName, out assy))
                    {
                        onError("Failed to load " + localfn);
                    }
                }
            }
        }
        else
        {
            onError("Already tried to load " + localfn);
        }

        return assy;
    }

By creating some variables and restructuring the code into very small methods, one might say (or not) that this code is not only more readable, but that its intent is also more easily reverse engineered into the original requirements.

Issues

At the end of the day:

- The syntax of the language still gets in the way of expressing meaning clearly.

- Besides programming the algorithm, the programmer must now have a certain subjective skill in expressing the original meaning with (questionably) descriptive terms.

- Again the syntax of the specific language can get in the way of even descriptive context.

I can only conclude that programming, as we do it today in the languages available to us, cannot achieve a reduction in translational skills necessary to create the program nor to reverse engineer the program.

FizzBuzz as a Flow Chart

What if we wrote FizzBuzz to look like this:

OK, what's so thrilling about this? It's just a flowchart! While a flowchart can be easily translated into code, traditional flowcharting enforces a too low level approach to programming. Why? Flowcharts have been around for almost 100 years (and if you are reading this article 5 years from now, it will be 100 years!):

"The first structured method for document process flow, the "flow process chart", was introduced by Frank and Lillian Gilbreth to members of the American Society of Mechanical Engineers (ASME) in 1921 in the presentation "Process Charts: First Steps in Finding the One Best Way to do Work". The Gilbreths' tools quickly found their way into industrial engineering curricula." (source)

But then (my underline): "The popularity of flowcharts decreased in the 1970s when interactive computer terminals and third-generation programming languages became common tools for computer programming. Algorithms can be expressed much more concisely as source code in such languages."

Flowcharting pretty much ended up reduced to UML activity diagrams and something called DRAKON, which the Russians originally created in 1986 ("friendly Russian algorithmic language that provides clarity") as a way "for creating visual program that can be converted to source code in other languages." Other applications that use flowcharts to represent and execute programs are generally used as teaching tools for beginner students!

(you can still buy this on Amazon.)

While it's certainly true that algorithms can be expressed much more concisely in source code, the difficulty in translating from the requirement into the specific programming language (and vice versa) increased dramatically. What if flowcharts had evolved to express more than just core flow logic? Given the history of interactive text-based terminals vs. the development of computer graphics (which came much later), it's pretty easy to see how something that started off as a physical stencil fell by the wayside during the "text-based terminal" period of software development.

What if we tried modernizing the concept a bit?

Scratch

- I'm not even sure this is right (particularly the little arrow at the bottom of the "repeat" block)

- Frankly, it looks awful. I can't believe people think this is a good way of teaching children programming.

- The UI is a bit clunky: it took several tries to even figure out how to position things so I could drop them into the correct slots.

Scratch is definitely not an option.

What Can We Do Instead?

What if we tried to take better advantage of the graphic environments that are available nowadays? We could express the FizzBuzz program more succinctly, like this:

We now have a FizzBuzz function, and maybe we want to introduce it as an Easter Egg in our application. We can package up the entire function and drop it in as a shape:

What are we doing here?

- Certain logic statements should be able to be translated into code directly.

- If more complex logic is required, the programmer can still write the function for a box in whatever language they like.

- The programmer can choose (or create) shapes that represent concepts, like loops.

- The logic for a particular function can be collapsed into a single shape, creating a "higher order" shape.

- Applications can be developed by combining logic and higher order shapes, taking us back to item #4.
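The list above can be approximated in code by treating every shape as a small callable. The Python sketch below is only one hypothetical way to model this, not a description of any existing tool: loop_shape, decision_shape and output_shape are invented names, and a real visual editor would generate or interpret something like this behind the drawing.

```python
def loop_shape(first, last, body):
    """A 'loop' shape: runs the body shape for each number, first..last inclusive."""
    def run():
        return [body(n) for n in range(first, last + 1)]
    return run


def decision_shape(condition, if_true, otherwise):
    """A 'decision' shape: routes the number to one of two other shapes."""
    def run(n):
        return if_true(n) if condition(n) else otherwise(n)
    return run


def output_shape(text):
    """An 'output' shape that ignores the number and emits fixed text."""
    return lambda n: text


# Wiring shapes together, much like connecting boxes on a canvas.
fizzbuzz_body = decision_shape(
    lambda n: n % 15 == 0, output_shape("FizzBuzz"),
    decision_shape(
        lambda n: n % 3 == 0, output_shape("Fizz"),
        decision_shape(lambda n: n % 5 == 0, output_shape("Buzz"), str)))

# Collapsing the whole diagram into a single "higher order" shape.
fizzbuzz = loop_shape(1, 100, fizzbuzz_body)

print(fizzbuzz()[:15])
```

Once collapsed, fizzbuzz is itself just another shape, so it can be dropped into a larger diagram without the caller knowing what is inside it.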

The Problem of "Syntax" Remains

The above is a crude example, and I suspect my artist friends would render the FizzBuzz requirements in a much more visually interesting way! That of course reveals that the problem of syntax is not really solved, it merely pushes the problem into the visual space instead of the text-based terminal approach we still use with modern programming languages. For example, here are the DRAKON icons (many of which I can't fathom the meaning of without some "translation"):

(source)

And even more interesting, here are "macro-icons":

(source)

If we allow the free expression of shapes to take on meaning, then the human is still forced to learn the syntax of a visual "language" that others have created. I see no way around this issue.

Is this concept 6GL?

Well, why not? "DRAKON rules for creating diagrams are cognitively optimized for easy comprehension, making it a tool for intelligence augmentation." (source) This tool was ultimately replaced with a visual computer-aided software engineering (CASE) tool that was developed in 1996 and has been used in major space programs! Current DRAKON editors support C#, JavaScript, Python, etc!

Classical flowcharting is definitely obsolete. However, in my opinion, a visual way of programming that supports both low-level and higher-level expression of concepts is a path to reduce the translation effort required to take human meaning and convert it into machine meaning. It also seems to me that such an approach can become a viable mechanism for an AI to actually write original programs, using building blocks that humans (or the AI) have previously written and, most importantly, for us humans to visualize whatever it is that the AI creates in a way that is comprehensible to us. With virtual reality, we should be able to create programs in three dimensions, and even watch another programmer (or AI) create / modify existing programs.