This is the fourth in a series of articles demonstrating how to build a .NET AI library from scratch. In this article, we will continue discussion about Perceptron to create a more complicated layout for more complicated problems.

Series Introduction

This is the 4th article of creating .NET library. Below are the links for the earlier parts in the series:

My objective is to create a simple AI library that covers couple of advanced AI topics such as Genetic algorithms, ANN, Fuzzy logics and other evolutionary algorithms. The only challenge to complete this series would be having enough time to work on the code and articles.

Having the code itself might not be the main target however, understanding these algorithms is. Wish it will be useful to someone someday.

Article Introduction - Part 2 "Beyond Perceptron"

In the last article, we created Perceptron that acts as Binary Linear Classifier. We will continue discussion about Perceptron to create a more complicated layout for more complicated problems.

I strongly advise you to review Build Simple AI .NET Library - Part 2 - Machine Learning Introduction before moving any further in this article.

More Perceptron Examples

As mentioned, Perceptron is the simplest processing element of ANN, yet it is still a powerful algorithm, however it is very limited. Remember, it's mainly used only as a binary linear classifier.

What about other complicated classification problems, what about binary but non-linear classifications. We will see through a couple of examples how to develop further complex layouts of Perceptron.

To be all on the same page; let's verify some definitions:

- Binary Classifier - is a problem where output has only 2 possible answers, classifications or groups. However, classification can be linear or non-linear.

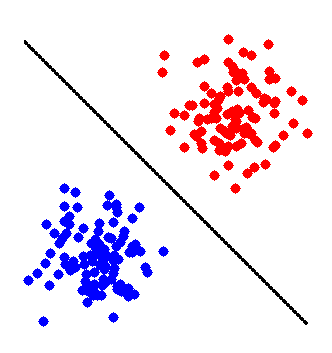

- Linear Classifier - If inputs are linearly separated, you can draw a straight line to separate both groups.

- Non-linear Classifier - In case classification is not possible via a straight line:

- Problem Dimensions - Inputs matrix (Vector) can be considered as features of problem being optimized, for the last example of article 3, we had 2 inputs: one is X and other one is Y, which are coordinates of each point. Another way to view inputs is by considering a number of inputs as dimensions of the problem. So 4D problem means it has 4 different features (AI can resolve problems of higher dimensions that are impossible to visualize by the human brain).

Using Perceptron to Optimize Binary Functions

To better understand Perceptron and its limitation, we will check its use in optimization of binary functions as NOT, OR, AND & XOR.

NOT Function

So this is a 1-Dimensional problem. Let's design Perceptron as follows:

h(x) = W0 + W1 * X1

As output is 0 or 1, Step Activation function is a good choice.

Then Y=StepFunction (h(x))

From NOT truth table above, output Y is 1 when X is 0 so h(x) shall be >= 0 if X=0.

h(x) = W0 + W1 * X1 >= 0 When X=0

W0 >= 0 for X =0 let's select W0 =1

h(x) = 1 + W1 * X1

Now, second possible value of Y is 0 for X = 1

h(x) < 0 for X =1

1 + W1 * X1 < 0 for X =1

1 + W1 < 0 for X=1

W1 < -1 so let's select W1 = -1.5

Finally, h(x) = 1 - 1.5 * X

OR Function

This is a 2-dimenstional problem, let's plot X1 & X2.

These are linearly separated groups as a straight line can be drawn to separate both groups as the following one:

Again, we will use Step activation function for this Perceptron:

h(x) = W0 + W1 * X1 + W2 * X2

Y= Step(h(x)

From truth table, we know that Y=o for X1=X2=0 which means:

h(x) < 0 for X1=X2=0

W0 < 0 for X1=X2=0 - Let's select W0 as -0.5

h(x) = -0.5 + W1 * X1 + W2 * X2

Selecting one line from the graph that intercepts with X1 at 0.5 and X2 at 0.5 (other lines can work as well as separators):

From the Truth table, Y=1 for X1=1 and X2 =0 then h(x) >= 0 for X1=1 and X2 =0

-0.5+ W1 * X1 + W2 * X2 >= 0 for X1=1 and X2 =0

-0.5+ W1 * 1 + W2 * 0 >= 0 for X1=1 and X2 =0

-0.5+ W1 >= 0 for X1=1 and X2 =0

W1 >= 0.5 for X1=1 and X2 =0 let's select W1 = 1

h(x) = -0.5 + 1 * X1 + W2 * X2

Also, Y=1 for X1=0 and X2 =1 then h(x) >= 0 for X1=0 and X2 =1

-0.5 + 1 * X1 + W2 * X2 >= 0 for X1=0 and X2 =1

-0.5 + W2 >= 0 for X1=0 and X2 =1

W2 >= 0.5 for X1=0 and X2 =1 let's select W2 = 1

Finally h(x) = -0.5 + X1 + X2

Let's confirm the truth table:

| X1 | X2 | Desired | h(x) = -0.5 + X1 + X2 | Y |

| 1 | 1 | 1 | 1.5 | 1 |

| 1 | 0 | 1 | 0.5 | 1 |

| 0 | 1 | 1 | 0.5 | 1 |

| 0 | 0 | 0 | -0.5 | 0 |

AND Function

Similarly, it is a 2D problem and Perceptron shall be:

by following the same above OR procedure, we may conclude values for W0, W1 & W2:

One possible combination is h(x) = -1.5 + X1 + X2

To verify the truth table:

| X1 | X2 | Desired | h(x) = -1.5 + X1 + X2 | Y |

| 1 | 1 | 1 | 0.5 | 1 |

| 1 | 0 | 0 | -0.5 | 0 |

| 0 | 1 | 0 | -0.5 | 0 |

| 0 | 0 | 0 | -1.5 | 0 |

So, the final Perceptron shall be:

XOR Function

This is a problem, this function cannot be linearly separated; there is no single line that can separate the 2 groups.

Then Perceptron cannot resolve this problem and this is the main and major limitation of Perceptron (only binary linear classifications).

Yet, Perceptron is a powerful algorithm and can be used maybe in other formations to optimize complicated problems.

Let's come back to the XOR function and try to understand this function more. We will use Venn diagrams to help with that. Venn diagrams are graphical representation of different logical operations (here is more about Venn diagrams).

Venn diagram for OR gate shall be:

and here is the Venn diagram for AND:

Here is XOR:

From Venn diagrams, we may extract the meaning of XOR gate as the result of UNION (OR) excluding INTERSECTION area in other words:

A XOR B = (A + B) - (A.B)

We already used Perceptron to implement AND & OR functions above, so why do we not use more than one Perceptron to implement the above function. One possible implementation could be:

AND Function

Already 2D AND function is implemented and we may use the same:

OR Function

We did not implement 3D OR function. To do so, let's start by simplifying XOR function truth table:

| X1 | X2 | X1 AND X2 | Desired |

| 1 | 1 | 1 | 0 |

| 1 | 0 | 0 | 1 |

| 0 | 1 | 0 | 1 |

| 0 | 0 | 0 | 0 |

So we need to find weights of h(x) of OR function Perceptron that fulfill the above table where:

h(x) = W0 +W1 * X1 + W2 * X2 + W3 * X3 (X3 = X1 AND X2)

Activation function will be Step as well.

Let's start with the last combination of X1=0, X2=0 & X1 AND X2 = 0 then Y = 0

h(x) = W0 +W1 * X1 + W2 * X2 + W3 * X3 <0 for X1=0, X2=0 & X1 AND X2 = 0

W0 <0 for X1=0, X2=0 & X1 AND X2 = 0 Let's select W0 = -1

For combination of X1=1, X2=0 & X1 AND X2 = 0 then Y = 1

h(x) = -1 +W1 * X1 + W2 * X2 + W3 * X3 >= 0 for X1=1, X2=0 & X1 AND X2 = 0

-1 +W1 >= 0 for X1=1, X2=0 & X1 AND X2 = 0

W1 >= 1 Let's select W1 = 2

For combination of X1=0, X2=1 & X1 AND X2 = 0 then Y = 1

h(x) = -1 + 2 * X1 + W2 * X2 + W3 * X3 >= 0 for X1=0, X2=1 & X1 AND X2 = 0

-1 +W2 >= 0 for X1=0, X2=1 & X1 AND X2 = 0

W1 >= 1 Let's select W2 = 2

For combination of X1=1, X2=1 & X1 AND X2 = 1 then Y = 0

h(x) = -1 + 2 * X1 + 2 * X2 + W3 * X3 < 0 for X1=1, X2=1 & X1 AND X2 = 1

-1 + 2 + 2 +W3 < 0 for X1=1, X2=1 & X1 AND X2 = 1

3 +W3 < 0 for X1=1, X2=1 & X1 AND X2 = 1

W3 < -3 for X1=1, X2=1 & X1 AND X2 = 1 Let's select W3 = -4

Final h(x) =-1 +2 * X1 + 2 * X2 - 4 * X3 (X3 = X1 AND X2)

Final Perceptron Network shall be:

Well, let's try to reformulate the graphical representation of the above layout. Each Perceptron shall be denoted by its function.

Instead of having 1 AND Perceptron, let's add 1 Perceptron that generates X1 and other one to generate X2:

Now, let's add dummy Perceptrons to receive inputs and just pass it to the next level of Perceptrons:

Clearly above is a better representation, and it's called MLP (Multi-Layer Perceptron Network). This is exactly the common layout for ANN (Artificial Neural Network).

Inputs are being received by set of Perceptrons equal to number of inputs, this is called Input Layer.

Output is being generated by Perceptron, where 1 Perceptron per each output. This is called Output Layer.

Processing Perceptrons in the middle between input layer to output layer is called Hidden Layer.

Each ANN can have only 1 Input Layer and 1 Output Layer, but could have one or multiple hidden layers. Number of hidden layers is based on complexity of problem being optimized.

We have demonstrated that by adding 1 Perceptron in addition to output Perceptron could add additional power to the network.

A lot of algorithms are there for ANN training and ANN itself has many types as well. We will discuss the most common types and algorithms in future articles.

However, it all starts from the Perceptron concept and builds upon it, hence it is important to get as many details as possible about Perceptron although the examples might not seem to be complicated.

History

- 17th September, 2017: Initial version