The Full Series

- Part 1: We create the whole NeuralNetwork class from scratch.
- Part 2: We create an environment in Unity in order to test the neural network within that environment.
- Part 3: We make a great improvement to the neural network we already built by adding a new type of mutation to the code.

Introduction

Before I start, I have to mention that I'm 16 years old. I've noticed people tend to do a double take when they find that out, so I figured that if people knew up front, I might get something more out of it. Just sayin'.

Welcome back, fellas! About a month ago, I posted Part 2 of this series. After that, I got really caught up in a bunch of other stuff that kept me from continuing what I really want to do (sorry about that). However, a few days ago, Michael Bartlett sent me an email asking about a GA operator called safe mutation (SM for short), referring to this paper.

Background

To follow along with this article, you'll need basic C# and Unity programming knowledge. You'll also need to have read Part 1 and Part 2 of this series.

Understanding the Theory

According to the paper Michael sent, there are two types of SM operators:

- Safe Mutation through Rescaling: In a nutshell, you nudge the weights a bit to measure how much that nudge affects the output, then use that measurement to make an informed mutation.

- Safe Mutation through Gradients: This uses an approach similar to backpropagation to get the gradient of the output with respect to each weight, and likewise uses that to make an informed mutation.

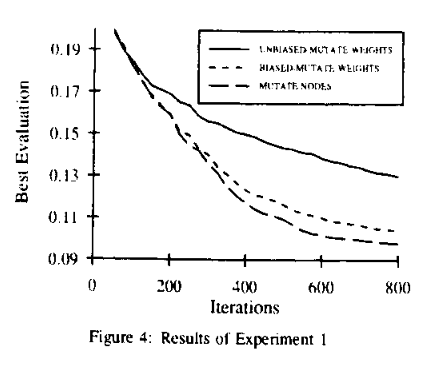
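To make the rescaling idea a little more concrete, here is a minimal sketch of one way to read it. It assumes a toy model (a single linear neuron) instead of the NeuralNetwork class. The sketch draws a random perturbation direction, then binary-searches a scale factor so that the average change in output over a few sample inputs lands on a chosen target. The names (SafeMutationSketch, Divergence, RescaledPerturbation) are hypothetical. This is a simplified illustration of the concept, not the exact procedure from the paper.

```csharp
using System;

public static class SafeMutationSketch
{
    // Toy model (assumption): a single linear neuron, output = w . x
    public static double Output(double[] w, double[] x)
    {
        double sum = 0;
        for (int i = 0; i < w.Length; i++) sum += w[i] * x[i];
        return sum;
    }

    // Mean absolute change in output over the samples when w moves by scale * delta.
    public static double Divergence(double[] w, double[] delta, double scale, double[][] samples)
    {
        double[] moved = new double[w.Length];
        for (int i = 0; i < w.Length; i++) moved[i] = w[i] + scale * delta[i];

        double total = 0;
        foreach (double[] x in samples)
            total += Math.Abs(Output(moved, x) - Output(w, x));
        return total / samples.Length;
    }

    // Picks a random direction, then rescales it so its divergence is close to the target.
    public static double[] RescaledPerturbation(double[] w, double[][] samples, double targetDivergence, Random rng)
    {
        double[] delta = new double[w.Length];
        for (int i = 0; i < delta.Length; i++) delta[i] = rng.NextDouble() * 2 - 1;

        // Grow the upper bound until it overshoots the target (assumes divergence grows with scale).
        double low = 0, high = 1;
        while (Divergence(w, delta, high, samples) < targetDivergence && high < 1e6) high *= 2;

        // Binary search: keep Divergence(high) >= target while shrinking the interval.
        for (int iter = 0; iter < 60; iter++)
        {
            double mid = (low + high) / 2;
            if (Divergence(w, delta, mid, samples) < targetDivergence) low = mid;
            else high = mid;
        }

        double[] step = new double[w.Length];
        for (int i = 0; i < w.Length; i++) step[i] = high * delta[i];
        return step;
    }
}
```

With a real network, Output would be replaced by a full forward pass, and the returned step would be added to the weights. The point is that the mutation size is chosen by how much it changes the behavior, rather than being fixed in advance.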

That still didn't give me enough information to start coding, though, so I kept looking until I found this paper. It shows how big an improvement you can get just by using some of the information that's right in front of you. If you check Figure 4 in that paper, you can see that unbiased mutation gives the worst results, while node mutation gives dramatically better results! Heck yeah, it even matches crossover!

It's even compared with safe mutation (through gradients) and backpropagation in Figures 7 and 8. Node mutation roughly ties with safe mutation, and it crushes backpropagation...

Ok then... So what's that magical "Mutate Nodes" operator? Well... normal mutation just kinda picks some individual weights at random and mutates each one on its own.

Node mutation, on the other hand, picks a few nodes and mutates all of the weights coming into each of them.
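Here's a minimal standalone sketch of that difference. It assumes a plain double[][] laid out like NeuralSection.Weights, with one row per input plus a bias row and one column per node. The names MutationContrast, WeightMutationMask, NodeMutationMask and Print are hypothetical. Each function only returns a mask of which weights would be mutated. This shows that weight mutation scatters its picks, while node mutation always takes whole columns.

```csharp
using System;

public static class MutationContrast
{
    // Weight mutation: every weight is picked independently with probability p.
    public static bool[][] WeightMutationMask(int rows, int cols, double p, Random rng)
    {
        bool[][] mask = new bool[rows][];
        for (int i = 0; i < rows; i++)
        {
            mask[i] = new bool[cols];
            for (int j = 0; j < cols; j++)
                mask[i][j] = rng.NextDouble() < p;
        }
        return mask;
    }

    // Node mutation: each node (column) is picked with probability p,
    // and then every weight coming into it, bias row included, is marked.
    public static bool[][] NodeMutationMask(int rows, int cols, double p, Random rng)
    {
        bool[][] mask = new bool[rows][];
        for (int i = 0; i < rows; i++) mask[i] = new bool[cols];

        for (int j = 0; j < cols; j++)
        {
            if (rng.NextDouble() < p)
                for (int i = 0; i < rows; i++)
                    mask[i][j] = true;
        }
        return mask;
    }

    // Prints 'X' for a mutated weight and '.' for an untouched one.
    public static void Print(bool[][] mask)
    {
        foreach (bool[] row in mask)
        {
            foreach (bool hit in row) Console.Write(hit ? 'X' : '.');
            Console.WriteLine();
        }
    }
}
```

For example, `MutationContrast.Print(MutationContrast.NodeMutationMask(4, 3, 0.3, new Random()))` prints a grid where every column is either all X's or all dots, while the weight version gives isolated X's.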

Using the Code

Well, the code is pretty straightforward. First, add the MutateNodes function to the NeuralSection class:

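```csharp
// Node mutation: each output node j is picked with probability MutationProbablity,
// and then every weight coming into it (column j, bias row included) is replaced.
public void MutateNodes(double MutationProbablity, double MutationAmount)
{
    for (int j = 0; j < Weights[0].Length; j++)
    {
        if (TheRandomizer.NextDouble() < MutationProbablity)
        {
            for (int i = 0; i < Weights.Length; i++)
            {
                // New random value in [-MutationAmount, MutationAmount)
                Weights[i][j] = TheRandomizer.NextDouble() * (MutationAmount * 2) - MutationAmount;
            }
        }
    }
}
```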
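Why does the outer loop run over j? In NeuralSection, Weights[i][j] connects input i to node j, and the last row holds the bias. So column j is everything that feeds node j. The sketch below uses hypothetical names and mirrors the FeedForward loop without the activation. It shows that redrawing one column changes that node's pre-activation and leaves the other nodes untouched.

```csharp
using System;

public static class ColumnLayoutDemo
{
    // Weights[i][j] connects input i to node j; the last row is each node's bias.
    // Returns each node's weighted sum plus bias (the value FeedForward passes to ReLU).
    public static double[] PreActivations(double[][] weights, double[] input)
    {
        int nodes = weights[0].Length;
        double[] result = new double[nodes];
        for (int j = 0; j < nodes; j++)
        {
            for (int i = 0; i < input.Length; i++)
                result[j] += weights[i][j] * input[i];
            result[j] += weights[weights.Length - 1][j]; // bias row
        }
        return result;
    }

    // Redraws every weight in column j, which is what MutateNodes does to a picked node.
    public static void RedrawNode(double[][] weights, int j, double amount, Random rng)
    {
        for (int i = 0; i < weights.Length; i++)
            weights[i][j] = rng.NextDouble() * (amount * 2) - amount;
    }
}
```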

Then, add the caller function to the NeuralNetwork class:

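```csharp
// Applies node mutation to every section (layer-to-layer weight matrix) of the network.
public void MutateNodes(double MutationProbablity = 0.3, double MutationAmount = 2.0)
{
    for (int i = 0; i < Sections.Length; i++)
    {
        Sections[i].MutateNodes(MutationProbablity, MutationAmount);
    }
}
```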
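With the same MutationProbablity, both operators re-randomize the same number of weights on average. Mutate() picks each of the network's weights with probability p. MutateNodes() picks each non-input node with probability p and then rewrites that node's (inputs + 1) incoming weights. The quick sketch below checks this for a hypothetical {3, 4, 2} topology. The helper names are made up for illustration.

```csharp
using System;

public static class MutationBudget
{
    // Total weights, bias weights included, for a topology such as {3, 4, 2}.
    public static int TotalWeights(uint[] topology)
    {
        int total = 0;
        for (int k = 0; k + 1 < topology.Length; k++)
            total += (int)(topology[k] + 1) * (int)topology[k + 1];
        return total;
    }

    // Mutate(): every weight is picked independently with probability p.
    public static double ExpectedWeightsTouchedByMutate(uint[] topology, double p)
        => p * TotalWeights(topology);

    // MutateNodes(): every non-input node is picked with probability p,
    // and each picked node rewrites its (inputs + 1) incoming weights.
    public static double ExpectedWeightsTouchedByMutateNodes(uint[] topology, double p)
    {
        double expected = 0;
        for (int k = 0; k + 1 < topology.Length; k++)
            expected += p * topology[k + 1] * (topology[k] + 1);
        return expected;
    }
}
```

For {3, 4, 2} there are (3 + 1) × 4 + (4 + 1) × 2 = 26 weights, so with p = 0.3 both operators come out to 7.8 weights per call. So at least in expectation, node mutation doesn't change more weights than weight mutation; it just changes them in node-sized groups.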

That's pretty much it! Here's how NeuralNetwork.cs should look now:

```csharp
using System;
using System.Collections.Generic;
using System.Collections.ObjectModel;

public class NeuralNetwork
{
    // Number of neurons in each layer, input layer first (returned as a copy).
    public UInt32[] Topology
    {
        get
        {
            UInt32[] Result = new UInt32[TheTopology.Count];
            TheTopology.CopyTo(Result, 0);
            return Result;
        }
    }

    ReadOnlyCollection<UInt32> TheTopology;
    NeuralSection[] Sections;
    Random TheRandomizer;

    // Weights between two consecutive layers: Weights[i][j] connects input i
    // to output node j, and the extra last row holds each output node's bias.
    private class NeuralSection
    {
        private double[][] Weights;
        private Random TheRandomizer;

        public NeuralSection(UInt32 InputCount, UInt32 OutputCount, Random Randomizer)
        {
            if (InputCount == 0)
                throw new ArgumentException("You cannot create a Neural Layer with no input neurons.", "InputCount");
            else if (OutputCount == 0)
                throw new ArgumentException("You cannot create a Neural Layer with no output neurons.", "OutputCount");
            else if (Randomizer == null)
                throw new ArgumentException("The randomizer cannot be set to null.", "Randomizer");

            TheRandomizer = Randomizer;

            Weights = new double[InputCount + 1][];   // +1 row for the bias
            for (int i = 0; i < Weights.Length; i++)
                Weights[i] = new double[OutputCount];

            // Start every weight in [-0.5, 0.5).
            for (int i = 0; i < Weights.Length; i++)
                for (int j = 0; j < Weights[i].Length; j++)
                    Weights[i][j] = TheRandomizer.NextDouble() - 0.5;
        }

        // Deep-copies the weights; the copy keeps using the source section's randomizer.
        public NeuralSection(NeuralSection Main)
        {
            TheRandomizer = Main.TheRandomizer;

            Weights = new double[Main.Weights.Length][];
            for (int i = 0; i < Weights.Length; i++)
                Weights[i] = new double[Main.Weights[0].Length];

            for (int i = 0; i < Weights.Length; i++)
                for (int j = 0; j < Weights[i].Length; j++)
                    Weights[i][j] = Main.Weights[i][j];
        }

        public double[] FeedForward(double[] Input)
        {
            if (Input == null)
                throw new ArgumentException("The input array cannot be set to null.", "Input");
            else if (Input.Length != Weights.Length - 1)
                throw new ArgumentException("The input array's length does not match the number of neurons in the input layer.", "Input");

            double[] Output = new double[Weights[0].Length];
            for (int i = 0; i < Weights.Length; i++)
            {
                for (int j = 0; j < Weights[i].Length; j++)
                {
                    if (i == Weights.Length - 1)
                        Output[j] += Weights[i][j];              // bias
                    else
                        Output[j] += Weights[i][j] * Input[i];
                }
            }

            for (int i = 0; i < Output.Length; i++)
                Output[i] = ReLU(Output[i]);

            return Output;
        }

        // Weight mutation: each weight is independently replaced, with probability
        // MutationProbablity, by a value in [-MutationAmount, MutationAmount).
        public void Mutate(double MutationProbablity, double MutationAmount)
        {
            for (int i = 0; i < Weights.Length; i++)
                for (int j = 0; j < Weights[i].Length; j++)
                    if (TheRandomizer.NextDouble() < MutationProbablity)
                        Weights[i][j] = TheRandomizer.NextDouble() * (MutationAmount * 2) - MutationAmount;
        }

        // Node mutation: each output node j is picked with probability MutationProbablity,
        // and then all of its incoming weights (column j, bias included) are replaced.
        public void MutateNodes(double MutationProbablity, double MutationAmount)
        {
            for (int j = 0; j < Weights[0].Length; j++)
            {
                if (TheRandomizer.NextDouble() < MutationProbablity)
                {
                    for (int i = 0; i < Weights.Length; i++)
                        Weights[i][j] = TheRandomizer.NextDouble() * (MutationAmount * 2) - MutationAmount;
                }
            }
        }

        // Leaky ReLU: negative values are scaled by 1/20 instead of being clamped to 0.
        private double ReLU(double x)
        {
            if (x >= 0)
                return x;
            else
                return x / 20;
        }
    }

    // Note: because Seed defaults to 0, every network created without an explicit
    // seed starts from the same random sequence; pass null for a time-based seed.
    public NeuralNetwork(UInt32[] Topology, Int32? Seed = 0)
    {
        if (Topology.Length < 2)
            throw new ArgumentException("A Neural Network cannot contain less than 2 Layers.", "Topology");

        for (int i = 0; i < Topology.Length; i++)
        {
            if (Topology[i] < 1)
                throw new ArgumentException("A single layer of neurons must contain, at least, one neuron.", "Topology");
        }

        if (Seed.HasValue)
            TheRandomizer = new Random(Seed.Value);
        else
            TheRandomizer = new Random();

        TheTopology = new List<uint>(Topology).AsReadOnly();

        Sections = new NeuralSection[TheTopology.Count - 1];
        for (int i = 0; i < Sections.Length; i++)
            Sections[i] = new NeuralSection(TheTopology[i], TheTopology[i + 1], TheRandomizer);
    }

    // Copy constructor, used to clone the best network before mutating the clone.
    public NeuralNetwork(NeuralNetwork Main)
    {
        TheRandomizer = new Random(Main.TheRandomizer.Next());
        TheTopology = Main.TheTopology;

        Sections = new NeuralSection[TheTopology.Count - 1];
        for (int i = 0; i < Sections.Length; i++)
            Sections[i] = new NeuralSection(Main.Sections[i]);
    }

    public double[] FeedForward(double[] Input)
    {
        if (Input == null)
            throw new ArgumentException("The input array cannot be set to null.", "Input");
        else if (Input.Length != TheTopology[0])
            throw new ArgumentException("The input array's length does not match the number of neurons in the input layer.", "Input");

        // Each section's output becomes the next section's input.
        double[] Output = Input;
        for (int i = 0; i < Sections.Length; i++)
            Output = Sections[i].FeedForward(Output);

        return Output;
    }

    public void Mutate(double MutationProbablity = 0.3, double MutationAmount = 2.0)
    {
        for (int i = 0; i < Sections.Length; i++)
            Sections[i].Mutate(MutationProbablity, MutationAmount);
    }

    public void MutateNodes(double MutationProbablity = 0.3, double MutationAmount = 2.0)
    {
        for (int i = 0; i < Sections.Length; i++)
            Sections[i].MutateNodes(MutationProbablity, MutationAmount);
    }
}
```

And... no, I'm not gonna leave you like that. Now it's time to make a few tiny changes to the cars demo we built in Unity previously, so that we can see the difference for ourselves. First, go to EvolutionManager.cs in our Unity project and add this variable at the beginning of the class:

```csharp
[SerializeField] bool UseNodeMutation = true;
```

Let's also put this variable to use by replacing the call to Car.NextNetwork.Mutate() inside the StartGeneration() function with this:

```csharp
if (UseNodeMutation)
    Car.NextNetwork.MutateNodes();
else
    Car.NextNetwork.Mutate();
```

This way, EvolutionManager.cs should end up looking like this:

```csharp
using System.Collections;
using System.Collections.Generic;
using UnityEngine;
using UnityEngine.UI;

public class EvolutionManager : MonoBehaviour
{
    public static EvolutionManager Singleton = null;

    // Shown in the Inspector, so you can switch between node and weight mutation.
    [SerializeField] bool UseNodeMutation = true;
    [SerializeField] int CarCount = 100;
    [SerializeField] GameObject CarPrefab;
    [SerializeField] Text GenerationNumberText;

    int GenerationCount = 0;
    List<Car> Cars = new List<Car>();
    NeuralNetwork BestNeuralNetwork = null;
    int BestFitness = -1;

    private void Start()
    {
        if (Singleton == null)
            Singleton = this;
        else
        {
            // Another manager already exists: disable this duplicate and stop here.
            gameObject.SetActive(false);
            return;
        }

        BestNeuralNetwork = new NeuralNetwork(Car.NextNetwork);
        StartGeneration();
    }

    void StartGeneration()
    {
        GenerationCount++;
        GenerationNumberText.text = "Generation: " + GenerationCount;

        for (int i = 0; i < CarCount; i++)
        {
            if (i == 0)
                Car.NextNetwork = BestNeuralNetwork;   // keep the best network unchanged
            else
            {
                // Every other car gets a mutated copy of the best network.
                Car.NextNetwork = new NeuralNetwork(BestNeuralNetwork);
                if (UseNodeMutation)
                    Car.NextNetwork.MutateNodes();
                else
                    Car.NextNetwork.Mutate();
            }

            Cars.Add(Instantiate(CarPrefab, transform.position, Quaternion.identity, transform).GetComponent<Car>());
        }
    }

    public void CarDead(Car DeadCar, int Fitness)
    {
        Cars.Remove(DeadCar);
        Destroy(DeadCar.gameObject);

        // Only a strictly better fitness replaces the current best network.
        if (Fitness > BestFitness)
        {
            BestNeuralNetwork = DeadCar.TheNetwork;
            BestFitness = Fitness;
        }

        // When the last car dies, start the next generation.
        if (Cars.Count <= 0)
            StartGeneration();
    }
}
```
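If you want to poke at the selection logic outside Unity, here is a rough sketch of what StartGeneration() and CarDead() do together. It uses a made-up fitness function in place of the car's fitness and a flat weight vector in place of NeuralNetwork, so it uses weight-style mutation. All names are hypothetical. Child 0 of each generation is the unchanged best, and the rest are mutated copies of it. The best is only replaced by a strictly higher fitness, so the best fitness can never go down from one generation to the next.

```csharp
using System;
using System.Collections.Generic;

public static class GenerationLoopSketch
{
    // Hypothetical stand-in for the car's fitness: closer to Target is better.
    static readonly double[] Target = { 0.5, -1.0, 1.5, 0.25 };

    public static double Fitness(double[] w)
    {
        double error = 0;
        for (int i = 0; i < w.Length; i++)
            error += (w[i] - Target[i]) * (w[i] - Target[i]);
        return -error;
    }

    // Clone, then replace each entry with probability p by a value in [-amount, amount).
    static double[] MutatedCopy(double[] parent, double p, double amount, Random rng)
    {
        double[] child = (double[])parent.Clone();
        for (int i = 0; i < child.Length; i++)
            if (rng.NextDouble() < p)
                child[i] = rng.NextDouble() * (amount * 2) - amount;
        return child;
    }

    // Returns the best fitness seen after each generation.
    public static List<double> Run(int generations, int populationSize, int seed)
    {
        var rng = new Random(seed);
        double[] best = new double[Target.Length];
        double bestFitness = double.NegativeInfinity;
        var history = new List<double>();

        for (int g = 0; g < generations; g++)
        {
            // Like StartGeneration(): every child comes from the best at the start of the generation.
            double[] parent = best;
            for (int i = 0; i < populationSize; i++)
            {
                double[] child = i == 0 ? parent : MutatedCopy(parent, 0.3, 2.0, rng);
                double fitness = Fitness(child);

                // Like CarDead(): only a strictly better fitness replaces the best.
                if (fitness > bestFitness)
                {
                    best = child;
                    bestFitness = fitness;
                }
            }
            history.Add(bestFitness);
        }
        return history;
    }
}
```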

After stirring it all up and hitting Play, you can flip UseNodeMutation in the Inspector to compare node mutation against the original weight mutation.

Points of Interest

It was fantastic to see such a big improvement in training over the last article just by making a really simple addition. Now that we've got some improvement, it's your turn to tell me what you think about all this. What do you think I should do next? And should I make YouTube videos about AI and stuff like that, or should I stick with articles?

History

- Version 1.0: Main implementation