Contents

Linear regression is a useful technique for representing observed data by a mathematical equation. For linear regression, the independent variable (data) is assumed to be a linear function of various independent variables. Normally, the regression problem is formulated as a least squares minimization problem. For the case of linear least squares, the resulting analysis requires the solution of a set of simultaneous equations that can be easily solved using Gaussian Elimination. The applications of linear least squares and Gaussian elimination are well known techniques.

Unfortunately, there are many times when one knows that the dependent variable is not a linear function, but that a transformed variable might be. In addition, in many cases, it is not only necessary to compute the best formula to represent the data, but to also estimate the accuracy of the parameters. This article presents a C# implementation of a weighted linear regression, using an efficient symmetric matrix inversion algorithm to overcome the problem of nonlinearity of the dependent variable and to compute the complete variance-covariance matrix to allow estimation of confidence intervals in the estimated regression coefficients.

The files included with this article contain the source code for the linear regression class, as well as an example program.

The standard linear regression problem can be stated mathematically as follows, where yj represents the jth measured or observed dependent variable value, xi,j represents the jth measured independent variable value for the ith variable, and Ci is the regression coefficient to be determined. M represents the number of data points, and N represents the number of linear terms in the regression equation.

It is important to note that this statement assumes that all errors have the same significance, but this is often not true. If there is a drift in the precision of the measurements, for example, some errors may be more or less important than others. Another important case arises if we need to transform the dependent variable, y, in order to get a linear representation. For example, in many practical cases, the logarithm of y (or some other function) may be much better at representing the data. In the case of a logarithmic relationship, we can fit Log(y) = C1x1 + C2x2 + ... to represent the relationship y = e(C1x1 + C2x2 + ...). In the case where a transformation is needed, however, the errors in the transformed variable are no longer necessarily all of the same significance. As a simple example using the Log transformation, note that Log(1) +/- 0.1 represents a much different range than Log(1000) +/- 0.1. In such cases, it is also possible to approximately represent the variation in error significance by using a weighted regression, as shown in the following modified equation.

In this formulation, the squared difference between each observed and predicted value is multiplied by a weighting factor, wj to account for the variation in significance of the errors. If the difference is due to variations in measurement precision, the weight factors will need to be determined based on the precision drift. If the differences in significance are due to a variable transformation, however, we can often estimate them based on the functional form of the transformation.

In the case of a variable transformation, we can approximate the error in terms of the derivative of the function. Assuming that we are measuring y and transforming to f(y), the following relationship represents a first order approximation:

Essentially, the weight factor is used as a first order correction, and if the regression yields small residual errors, the approximation is very close. As can be seen, for the case where a Log(y) transformation is used, the weights for each data point would be (dLog(y)/dy)-2 = y2.

It might seem more reasonable to define the weights as multiplying the difference in the computed and measured values, rather than the difference squared. The reason that they are defined as multiplying the difference squared is due to the relationship between the statistical variance and the least squares terms. In the case of a changing measurement precision, it makes sense to adjust the variance, which is related to the square of the difference, rather than the difference itself.

For more information on regression analysis, including weighted regressions, please refer to the book by Draper and Smith (1966) listed in the references. This book should be considered one of the classical texts on practical regression analysis.

Solving the linear regression equation is straightforward. Since the terms are linear and the objective is to compute the minimum with respect to all of the coefficients, the standard derivation is to take the derivative of the least squares sum with respect to each coefficient, Ci, and require that the derivatives are all exactly zero. This yields a set of simultaneous equations with the coefficients, Ci, as unknowns which can be solved using standard linear algebra. In the weighted least squares case, the equations are the same as the standard, unweighted case, except the weights are included in each of the sums. For reference, the equations are:

Most simple least squares algorithms use Gaussian Elimination to solve the simultaneous equations, since it is fast and easy to program. In fact, if all you need is the best set of coefficients, it's probably best to use Gaussian elimination. If, however, you want to do some additional analyses, then Gaussian Elimination may not be the best option.

An alternate method for solving the equations is to represent the simultaneous equations as a matrix equation:

Solving the matrix equation can be accomplished by inverting the X matrix, then multiplying by the B vector to determine the values of C. The reason that this is an option worth considering is twofold:

- the inverted X matrix is directly proportional to the variance-covariance matrix that contains almost all of the information about the accuracy of the coefficient estimates, and

- X happens to be a symmetric matrix that can be efficiently inverted rapidly.

The heart of the algorithm for the weighted linear regression is actually the method that inverts the symmetric matrix. The source code for this method is shown below. Note that I can't claim this algorithm. I actually was given this algorithm while in graduate school, back in about 1972, by an office mate by the name of Eric Griggs. I don't know where Eric got it, but it has been floating around in various forms for a long, long time. The original code that I obtained was written in Fortran 1, and has since been translated into many other languages. Since it was published in at least one report done for the State of Texas, it is public domain. If anyone knows who originally came up with the algorithm, I'd be pleased to give them due credit.

The method bool SymmetricMatrixInvert(double[,] V) takes a square, symmetric matrix, V, as its argument, and inverts the matrix in place. In other words, when the method is called, V contains a symmetric matrix. If the inversion fails due to the matrix being singular, the method returns false; if the inversion succeeds, it returns true. After the call, V contains the inverted matrix.

public bool SymmetricMatrixInvert(double[,] V)

{

int N = (int)Math.Sqrt(V.Length);

double[] t = new double[N];

double[] Q = new double[N];

double[] R = new double[N];

double AB;

int K, L, M;

for (M = 0; M < N; M++)

R[M] = 1;

K = 0;

for (M = 0; M < N; M++)

{

double Big = 0;

for (L = 0; L < N; L++)

{

AB = Math.Abs(V[L, L]);

if ((AB > Big) && (R[L] != 0))

{

Big = AB;

K = L;

}

}

if (Big == 0)

{

return false;

}

R[K] = 0;

Q[K] = 1 / V[K, K];

t[K] = 1;

V[K, K] = 0;

if (K != 0)

{

for (L = 0; L < K; L++)

{

t[L] = V[L, K];

if (R[L] == 0)

Q[L] = V[L, K] * Q[K];

else

Q[L] = -V[L, K] * Q[K];

V[L, K] = 0;

}

}

if ((K + 1) < N)

{

for (L = K + 1; L < N; L++)

{

if (R[L] != 0)

t[L] = V[K, L];

else

t[L] = -V[K, L];

Q[L] = -V[K, L] * Q[K];

V[K, L] = 0;

}

}

for (L = 0; L < N; L++)

for (K = L; K < N; K++)

V[L, K] = V[L, K] + t[L] * Q[K];

}

M = N;

L = N - 1;

for (K = 1; K < N; K++)

{

M = M - 1;

L = L - 1;

for (int J = 0; J <= L; J++)

V[M, J] = V[J, M];

}

return true;

}

Besides the matrix inversion, additional code is needed to set up the equations, compute statistics, etc. For that, I defined a class LinearRegression that also saves the results of various statistical measures, such as the multiple correlation coefficient, standard deviation of the residual errors, the Fisher F statistic for the regression, as well as the variance/covariance matrix, computed coefficients and their standard errors, calculated values, and residuals. Although I don't show all the details here, the source code for the class contains properties to retrieve all of these items once the regression has been computed.

public class LinearRegression

{

double[,] V;

public double[] C;

public double[] SEC;

double RYSQ;

double SDV;

double FReg;

double[] Ycalc;

double[] DY;

public bool Regress(double[] Y, double[,] X, double[] W)

{

int M = Y.Length;

int N = X.Length / M;

int NDF = M - N;

Ycalc = new double[M];

DY = new double[M];

if (NDF < 1)

{

return false;

}

V = new double[N, N];

C = new double[N];

SEC = new double[N];

double[] B = new double[N];

for (int i = 0; i < N; i++)

for (int j = 0; j < N; j++)

V[i, j] = 0;

for (int i = 0; i < N; i++)

{

for (int j = 0; j < N; j++)

{

V[i, j] = 0;

for (int k = 0; k < M; k++)

V[i, j] = V[i, j] + W[k] * X[i, k] * X[j, k];

}

B[i] = 0;

for (int k = 0; k < M; k++)

B[i] = B[i] + W[k] * X[i, k] * Y[k];

}

if (!SymmetricMatrixInvert(V))

{

return false;

}

for (int i = 0; i < N; i++)

{

C[i] = 0;

for (int j = 0; j < N; j++)

C[i] = C[i] + V[i, j] * B[j];

}

double TSS = 0;

double RSS = 0;

double YBAR = 0;

double WSUM = 0;

for (int k = 0; k < M; k++)

{

YBAR = YBAR + W[k] * Y[k];

WSUM = WSUM + W[k];

}

YBAR = YBAR / WSUM;

for (int k = 0; k < M; k++)

{

Ycalc[k] = 0;

for (int i = 0; i < N; i++)

Ycalc[k] = Ycalc[k] + C[i] * X[i, k];

DY[k] = Ycalc[k] - Y[k];

TSS = TSS + W[k] * (Y[k] - YBAR) * (Y[k] - YBAR);

RSS = RSS + W[k] * DY[k] * DY[k];

}

double SSQ = RSS / NDF;

RYSQ = 1 - RSS / TSS;

FReg = 9999999;

if (RYSQ < 0.9999999)

FReg = RYSQ / (1 - RYSQ) * NDF / (N - 1);

SDV = Math.Sqrt(SSQ);

for (int i = 0; i < N; i++)

{

for (int j = 0; j < N; j++)

V[i, j] = V[i, j] * SSQ;

SEC[i] = Math.Sqrt(V[i, i]);

}

return true;

}

}

Using the class to perform a weighted linear regression is straightforward. Simply create an instance of the LinearRegression class, put the data and weights into suitable arrays, then call the Regress method, which returns true if the regression is calculated and false if it fails, usually due to not enough points. The coefficients are available from the Coefficients[] array, and the standard error of the coefficients are available in the CoefficientsStandardError[] array. The following code snippet shows how to call the regression once the data arrays have been set up.

LinearRegression linReg = new LinearRegression();

if (linReg.Regress(y, x, w))

{

}

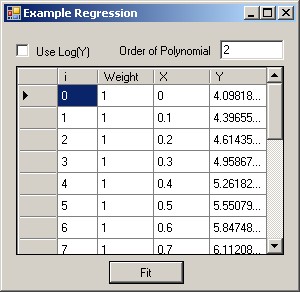

The sample program is a simple illustration of the use of the LinearRegression class. By default, it generates 20 values with random errors based on the equation y = 4 + 3x, and allows fitting the data with a general polynomial equation. If you change the equation type from y to Log(y) using the checkbox, the weights are recomputed accordingly. Pressing the Fit button performs the regression and displays the coefficients, along with their standard errors. Note that if you use more than a 1st order polynomial, the 3rd and higher order coefficients will be meaningless, as indicated by their standard errors. To compute the actual confidence intervals, the standard errors should be multiplied by the appropriate Student's t statistic, which is not included in this article.

A screenshot of the sample program is shown above.

You can change the values in the table and perform your own regressions if you like. With some modifications, the sample program could be converted into a general regression package, although I have not done that here.

Linear regression is a powerful technique that can be used to represent observed data and trends with equations. If you have one variable that is a simple function of another variable, perhaps, graphical techniques are good enough. However, in more complex cases, with many thousands of data points and perhaps dozens of variables, graphical techniques are not practical. The use of a weighted regression, and having access to the variance/covariance matrix, as well as the standard error of the coefficients is often enlightening, in that it may show which coefficients are meaningless and should be eliminated from the equation or replaced by terms of a different nature.

Unfortunately, I have observed many naive applications of regression techniques. In many published papers, the authors have blindly increased the number of terms in a regression to improve their results. Unfortunately, in many cases, the small improvements are statistically meaningless, but if they never look at the statistics, they don't recognize that. In other cases, I have seen function transformations used, such as fitting Log(y) rather than y, without regard to the variations of accuracy. Again, looking at errors in Log(y) can be very misleading, unless the proper methods are used. The direct use of Log(y) is really only valid if the measurements have a precision represented as a percent of their values, while most measurements have an absolute precision.

Part of the problem may be due to a lack of understanding of the underlying mathematical principles, but part of the problem is also due to the lack of general software that implements the correct procedures. It is my hope that perhaps someone will find these algorithms useful. I'd be very interested in any comments concerning statistical procedures or any observations to improve the algorithms even more.

- Draper, N. R. and H. Smith, Applied Regression Analysis, New York: Wiley (1966)