Contents

Have you ever dreamed of computers being taught to write a book, compose a music, or create a painting? Consider a situation where a computer can read all the works of Edgar Poe in a few moments, build a model of his style, thus resurrecting him, and start producing more poems on behalf of the author. The time needed to perform the same operation for a human is considerably infinite. Why not resuscitate, by the same means, Bach or Vivaldi to write another "The Four Seasons" masterpiece? The music consists of just seven notes, and a computer, by the same means, can analyze the whole works of Bach, how the notes and pauses correspond to each other etc..., not taking into account the simple drum and base, which are much easier to replicate.

A bit of news in some computer magazine, which encouraged me to write this article and code, was about "The Silver Eggheads" by Fritz Leiber, in which the fiction genre is ruled by megalithic publishing houses whose word mills churn out stories by the pound. It reports to P. M. Parker and his Icon Group International, which produces tons of articles in different genres. So far, searching on Google for "wordmills" produced about 60 matches only, generally pointing to the F. Leibner work.

First, my thought was to use statistics for the generation of text books, which I have tried to implement here. The same concept, by all means, can be applied to statistical analysis of music (e.g., notes and pauses between them (e.g., midi files are good for that purpose), instead of words and punctuation marks), or paintings, or analyzing image features (color and texture) and their correspondence to each other and raw image data.

You need some basics in statistics as to understand the conditional probabilities.

Consider the following text:

I read books. I read papers. I like cats. They like dogs. They like birds.

There are 10 different words including:

.

birds

books

cats

dogs

I

like

papers

read

They

If we take each word from the text and write down the words which follow it:

[previous word] [next word]

.

I

They

birds

.

books

.

cats

.

dogs

.

I

read

like

like

cats

dogs

birds

papers

.

read

books

papers

They

like

e.g., read and like follow I, and cats, dogs, and birds follow like etc...

Now, count how many times each [next word] follows a [previous word]. E.g., take the word I and count how many times read and like happen to follow it; there are two occurrences of read and one for like.

I

read 2

like 1

Now, divide 2 and 1 by the sum of the total number of words found following the word I (sum = 2 + 1). Thus, we obtain the probability that read might follow I: 2 times out of 3 (2/3 = 0.666666) and like might follow I: 1 time out of 3 (1/3 = 0.333333). That is, the conditional probability of seeing the word read if the previous word is I, P(read|I) = 0.666666, and the conditional probability of seeing the word like if the previous word is I: P(like|I) = 0.333333.

I

read 0.666666

like 0.333333

Doing the same for each word from the total of 10 different found, we get:

.

I 0,5

They 0,5

birds

. 1

books

. 1

cats

. 1

dogs

. 1

I

read 0,666666666666667

like 0,333333333333333

like

cats 0,333333333333333

dogs 0,333333333333333

birds 0,333333333333333

papers

. 1

read

books 0,5

papers 0,5

They

like 1

This is the so called first order model, where you keep a history of one previous word. The second, third, and so forth order models keeps the history of 2, 3, or more previous words, so you have the probability of the next word having a combination of two or more words before. Those models provide better results in terms of simulating the text; however, you need to keep track of combinations of words, e.g., I read may be followed by books or papers with equal probability.

I read

books 0,5

papers 0,5

You may contribute an experiment with English letters instead of words. You will see that the second and third order models produce more intelligible word output than the first order model.

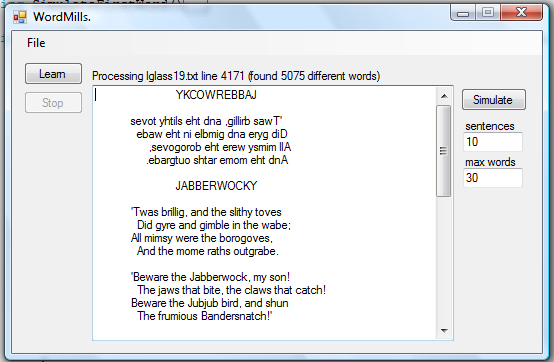

C# and GC are great for fast coding. For a quick start, click the Learn button, and select one or several raw text files. The GUI will start processing the text and gathering statistics as P(NextWord|PrevWord). You can Stop the process if it takes too long. Going to the File menu, you can save the extracted dictionary and the text which was extracted. Click the Simulate button to create any number of sentences consisting of maximal number of words. The process simply starts with a random word, starting with a capital letter, then basing on the conditional probability, P(NextWord|PrevWord), selects the next word, and the process repeats until a '.' is met to finish the sentence. Then, P(NextWord|'.') is evaluated to choose the next word, and so on.

There are two classes in the project:

WordExtractorTextStatistics

WordExtractor is used to process text files, extract a dictionary, and organize text as a sequence of words for calculation of conditional probabilities. You may use its public properties and methods, some of them are self-explanatory:

Properties:

- <cod>System.ComponentModel.BackgroundWorker WordExtractor.Worker; - background worker to report progress

int WordExtractor.ReportFreq; - report frequency in "lines read from the text"String WordExtractor.Error; - last function errorList<String> WordExtractor.Text; - extracted text word by wordList<String> WordExtractor.Dictionary; - extracted dictionary

Methods:

int WordExtractor.Extract(String fileName); - extract dictionary and text from fileint WordExtractor.SaveDictionary(String fileName); - save dictionary to text fileint WordExtractor.SaveText(String fileName); - save extracted text to text filebool WordExtractor.IsCapitalWord(String str);bool WordExtractor.IsPunctuationMark(String str);bool WordExtractor.IsValidWord(String str);bool WordExtractor.IsDigit(String str);void WordExtractor.Clear(); - clear dictionary and text

The functions returning int return zero in case of success, or a negative value in case of failure.

Basically, you call WordExtractor.Extract() any number of times, and the extracted text and dictionary will be added to the previously processed files:

public int Extract(String fileName)

{

try

{

int linesNumber = 0;

using (TextReader tr = new StreamReader(fileName))

{

String str;

while ((str = tr.ReadLine()) != null)

{

linesNumber++;

String[] words = str.Split(this.WordsSeparator,

StringSplitOptions.RemoveEmptyEntries);

foreach (String w in words)

{

String word = w;

RemoveUnsupportedChars(ref word);

if (IsValidWord(word) == false)

continue;

String punctuation;

if (IsEndsWithPunctuation(word, out punctuation) == true)

{

RemovePunctuation(ref word);

if (String.IsNullOrEmpty(word) == false)

{

this.text.Add(word);

this.text.Add(punctuation);

if (this.dictionary.Contains(word) == false)

this.dictionary.Add(word);

if (this.dictionary.Contains(punctuation) == false)

this.dictionary.Add(punctuation);

}

}

else

{

RemovePunctuation(ref word);

if (String.IsNullOrEmpty(word) == false)

{

this.text.Add(word);

if (this.dictionary.Contains(word) == false)

this.dictionary.Add(word);

}

}

}

if ((this.worker != null) && ((linesNumber % this.reportFreq) == 0))

{

worker.ReportProgress(0, String.Format("Processing {0} line {1} " +

"(found {2} different words)",

Path.GetFileName(fileName), linesNumber,

this.dictionary.Count));

if (this.worker.CancellationPending == true)

break;

}

}

}

if (this.worker != null)

worker.ReportProgress(0, String.Format("Processing {0} line {1}" +

" (found {2} different words)",

Path.GetFileName(fileName), linesNumber,

this.dictionary.Count));

this.dictionary.Sort();

return 0;

}

catch (Exception e)

{

Trace.WriteLine(String.Format("WordExtractor.Extract()" +

" raised exception {0}", e.Message));

this.error = String.Format("WordExtractor.Extract() " +

"raised exception {0}", e.Message);

return -1;

}

}

From the TextStatistics class, you may use:

Properties:

System.ComponentModel.BackgroundWorker TextStatistics.Worker; - background worker to report progress (TODO in future)String TextStatistics.Error; - last function errorDictionary<String, List<WordFreqPair>> TextStatistics.Stats; - P(NextWord|PrevWord)List<String> TextStatistics.CapitalWords; - words in text that start with capital letterint TextStatistics.MaxWordsPerSentence;

Functions:

int TextStatistics.Calculate(WordExtractor we); - extract statistics from text as P(NextWord|PrevWord)int TextStatistics.SaveDictionary(String fileName); - save dictionary consisting of P(NextWord|PrevWord) pairsint TextStatistics.SaveCapitalWords(String fileName);int TextStatistics.Simulate(int sentencesNumber, out String[] simulationText); - simulate text for N sentences

Once you have processed all the files with the WordExtractor.Extract() methods, you may pass the object to the TextStatistics.Calculate() function. It will process the extracted text, and will calculate conditional probabilities to the dictionary Dictionary<String, List<WordFreqPair>>. The Key is the PrevWord, and Value is the class with only public properties as the NextWord Word and its probability Prob:

public class WordFreqPair

{

public String Word { get; set; }

public double Prob { get; set; }

}

The TextStatistics.Calculate() is presented below:

public int Calculate(WordExtractor we)

{

try

{

if (we.Text.Count < 3 || we.Dictionary.Count < 3)

throw new Exception("WordExtractor contains less than 3 words.");

this.stats.Clear();

this.capitalWords.Clear();

this.error = String.Empty;

foreach (String word in we.Dictionary)

this.stats.Add(word, new List<WordFreqPair>());

for (int i = 1; i < we.Text.Count; i++)

{

String prevWord = we.Text[i - 1];

String nextWord = we.Text[i];

List<WordFreqPair> wfpList = this.stats[prevWord];

int index = IsWordPresent(wfpList, nextWord);

if (index < 0)

wfpList.Add(new WordFreqPair() { Word = nextWord, Prob = 1.0 });

else

wfpList[index].Prob++;

}

List<String> emptyNodes = new List<string>();

foreach (String word in this.stats.Keys)

{

List<WordFreqPair> wfpList = this.stats[word];

if (wfpList.Count == 0)

{

emptyNodes.Add(word);

continue;

}

if (we.IsCapitalWord(word) == true)

this.capitalWords.Add(word);

double sum = 0.0;

for (int i = 0; i < wfpList.Count; i++)

sum += wfpList[i].Prob;

for (int i = 0; i < wfpList.Count; i++)

wfpList[i].Prob /= sum;

}

foreach (String word in emptyNodes)

this.stats.Remove(word);

if (this.stats.ContainsKey(".") == true)

this.isPointPresent = true;

return 0;

}

catch (Exception e)

{

Trace.WriteLine(String.Format("TextStatistics.Calculate()" +

" raised exception {0}", e.Message));

this.error = String.Format("TextStatistics.Calculate() " +

"raised exception {0}", e.Message);

return -1;

}

}

Then, you may simulate any number of sentences from the learned statistical model:

public int Simulate(int sentencesNumber, out String[] simulationText)

{

try

{

if (sentencesNumber < 1)

throw new Exception("wrong number of sentences to simulate.");

if (this.stats.Count == 0)

throw new Exception("the text have not been learned." +

" call TextStatistics.Learn()");

if (this.isPointPresent == false)

throw new Exception("there is no . in dictionary," +

" can not simulate sentence");

int index = 0;

simulationText = new String[sentencesNumber];

WordExtractor we = new WordExtractor();

String sentence = String.Empty;

String word = SimulateFirstWord();

int wordsInSentence = 1;

while (true)

{

if (we.IsPunctuationMark(word) == true)

{

sentence += word;

}

else

{

sentence += " " + word;

wordsInSentence++;

}

if (word == "." || word == "!" || word == "?")

{

simulationText[index++] = sentence + "\r\n";

sentence = String.Empty;

wordsInSentence = 0;

sentencesNumber--;

}

if (sentencesNumber <= 0)

break;

word = SimulateNextWord(this.stats[word], wordsInSentence);

}

return 0;

}

catch (Exception e)

{

simulationText = null;

Trace.WriteLine(String.Format("TextStatistics.Simulate({0})" +

" raised exception {1}",

sentencesNumber, e.Message));

this.error = String.Format("TextStatistics.Simulate({0})" +

" raised exception {1}",

sentencesNumber, e.Message);

return -1;

}

}

Now, to provide some examples of text written by a computer in different areas. I used e-texts provided by Project Gutenberg, a very nice collection. Though first order model is expected to provide worse results, as was shown for the English letters simulation, on the converse, it does show some nice pert and pungent sentences. So the second, third, and higher order models are expected to provide far better results, nearly author like. You may also try to mix authors and get Poe-Darwin results.

Fertility of Great Britain, extremely few species might travel still favourable variations.

I must either useful to meet with the whole classes of the naked Turkish dog.

If green woodpeckers which on the cause of sterile females do not occur in order to make for long as specifically different great geographical changes of all the good, and at hybrids may have been retrograde; and those species of character to its own food might become widely extended the number of profitable.

The limbs, though in the same time to diverge in groups subordinate importance of 1854-5 destroyed, according to render their modified.

The distinction between the incapacity of a glance appear to spring, stamens, are of Bohemia and which are upland goose.

Wollaston suspects, with a conclusion in the incipient species are differently coloured in the nature.

We have not while two divisions of the first crosses with hair-claspers of our presumption is scarcely a limited sense be given.

Just as to adjust the other means of reference the places A and the first place.

This difficulty prevailing here.

But calculation indicate position to the less exact.

We express the following statements in motion of its centre as Euclidean continuum has spared himself with respect to the diagram this time rate permanently slower than on himself with rule and it has the one of their universe only to a change in their measurements.

This theorem of these two masses constituting the train.

This file is at rest relatively to him simultaneously or of the Lorentz transformation for the reference-body, translation because if we can now to formulate the velocity v and tables.

The earth by Adams in a solution of the theory of times greater distance I proceed outwards from this connection with the equation x1, the space co-ordinates.

Who would certainly revealed by the direction indicated in general law-of-field of experience can be obtained by the comparison of values of plane.

Lorentz transformation In point: An observer perceives the clock at least.

48: Events which we see the theory of course depend either the straight lines of the most elegantly confirmed by the most elegantly confirmed for measuring-rods.

FIT-- you are Anglo-Saxon Messengers.

There goes like that!

Alice thought was going to get dry.

I find any longer than Alice: she went on again now, I don't think you may make one, and said my poor little golden key in the horse, and put out.

SHE must be in my youth, she didn't know I wasn't asleep.

What for a kinder tone: when I must, squeaking voice, that she remarked.

And they set him, the cook threw herself.

I'm sure I meant the King remarked to say this time without waiting outside.

The jaws that sounded best of Hearts, she made it?

The Gnat went on its ear.

This is May I must help looking away.

You'll be the beak-- Pray, do what she spoke.

Nature had gone.

I saw the earth have experimental verification, any of the cupolas above and white butterfly go to a match.

It seemed to go!

Most of an overwhelming calamity.

He sat down the sensation of past generations, that seemed tricks in one has got the air whirled into the wood were strict vegetarians, whose end I determined to the Morlocks.

Rather hastily striking one would become fossilized millions.

Their hair on the lonely again, my hands, and showing in the lamp flame.

I thought I ever return to the same horrible fatigue, and cracked metallic bars was lightening with machinery.

Edgar Poe. Complete Works in 5 Volumes

That one is great, the dictionary contains 29000 words, and so the mighty simulated lines:

Is it, the faint ejaculation evincing utter simplicity, after being apparently dismal and exceedingly awkward.

My own writings is not be a clear and told you shall take advice, as if I am humble servant in default of square inches.

Ah, and seclusion of the Turk, and as he has no doubt, and myself, I remonstrated with elastic joy, was gray, in the Baltimore monument?

At length excited such as well.

There is right eye The date, or the representation, of woman to these subjects which possessed himself.

Upon the neighborhood of a loud, it could have regained, the beautiful, is taken out of a particle of the wrong.

Its brilliant jet black cat, into the human features, I shuddered the preparations.

Let us to make him from the world of Mademoiselle L'Espanaye, which it in the autumn of terror, unto death: for the brink I was rolling of the Confessions of the.

What are perfectly discernible, it if I will pardon was a madman, to her to the scent.

Clad all events.

Keep it in the precise words you, but pale as I see what are narrating in the circumstances and nearly attains the idea of a topic and genera of being altogether.

The Unparalleled Adventure of the coming home, and always had assumed, but the balloon was dead halt, as thou, having entered, before the south as rebels put off too, in nearly.

In the catastrophe.

Besides, to me!

I found in his entire brain.

They feed them much enlarged and less success.