Introduction

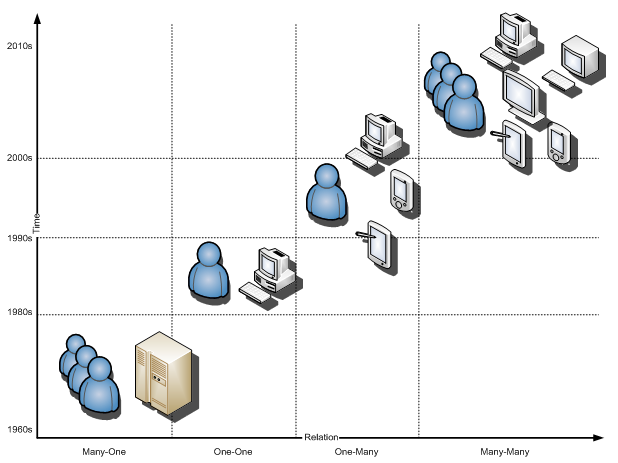

The number of computers is growing rapidly. In fact, most of us now own more than one computing device, or interact with more than one device every day. The relation between humans and computers has also changed over the years. Figure 1 presents this change as a diagram.

Figure 1. The human-computer relation over the past 50 years. (Vertegaal, 2003)

Both the number of computers and the human-computer relation have changed dramatically over the past 50 years. Nowadays, when we withdraw money from an ATM, use our corporate computer for everyday tasks, or use ISU computers around campus for educational purposes, we are using computers other than our own. Working out how we interact with each of the different computing devices around us every day would be extremely difficult and time-consuming. Moreover, many of these computers are not ours and we have limited access to them, so we cannot modify them to suit us. This poses a perplexing dilemma, echoing William Shakespeare's famous line “To be, or not to be, that is the question”: should we act the way computers want us to, or should computers act the way we want them to?

Over the past 20 years, computers have been developed around the concept of isolation. Isolation makes little sense now, because we use many shared computers besides our own. Computers are like babies: they need attention all the time. They continuously produce hundreds of alerts, messages, and confirmations, and they need our attention before they can continue their tasks. Researchers have realized that our attention is a limited resource: the more something grabs our attention, the lower our productivity. Attentive User Interfaces (AUIs) are designed to manage this limited resource. AUI-based designs negotiate with users for their attention rather than forcing them to attend to something. (Vertegaal, 2003)

Some examples of AUI

Microsoft Office 2007

When a user opens any Microsoft Office 2007 product for the first time, the Office logo (the Office Button) in the top-left corner of the window starts to blink, asking for the user's attention. This is an example of an AUI that signals the user for attention; opening the application for the first time is the trigger for this notification.

Figure 2. The blinking logos of Microsoft Office 2007 products try to capture the user's attention.

Windows Live Messenger

Windows Live Messenger is another good example of AUI. Live Messenger uses two different types of signals to negotiate with its users:

- Notification Area: several types of pop-ups appear from the Windows notification area, for example when a new email arrives or when a friend signs in or out. All of these notifications are customizable, so users can change them according to their needs. Users can also set a sound alert for each type of notification.

- Taskbar: if something happens in the program's main window or in any of its open chat windows, the application does not move itself to the foreground; instead, its taskbar button starts to blink, asking for the user's attention. Again, users can modify all of these notifications and assign a sound alert to each.

Figure 3. (A) Pop-up windows in Windows Live Messenger. (B) A blinking taskbar button asking for the user's attention.

What we cannot do

The goal of AUI is for computers to interact with live users the way humans do, not like mindless machines. The first thing that comes to mind is Artificial Intelligence (AI): AUI designs need AI so that they can act as if they think and know what to do. Let's dig deeper into this. What are the chances that someone knows everything? What are the chances that you meet someone who knows everything, and who also knows you? How would you know if someone knows everything? Is it humanly possible for someone to know everything? Is it possible for a machine to contain all the knowledge of the universe, or at least of planet Earth? If such a machine existed, would it be a machine or God? Moreover, the relation between human beings and machines has changed to a many-to-many relationship. Is it possible to predict who will be the next user of the Chase ATM outside the Alamo II? Therefore, we have two very important limitations:

- We cannot store all the knowledge of planet Earth on a single machine.

- We cannot precisely predict who will be the user of a multi-user machine.

Within these limits, we still need AI in computers so that they can interact with users in a user-friendly, meaningful, and practical way.

What we want to do

AUI aims to negotiate with users without distracting them. Several studies have been conducted in the AUI field. Attentive displays, in which a machine tries to track its user(s) visually, are the most popular subject. There are also several studies on voice commands and speech recognition. With Windows Vista, Microsoft introduced Anna, a new natural-sounding voice for PCs. If you have not tried it yet, I suggest you do; Anna's voice is really close to a human voice. All of these efforts show a trend: we want computers to be more like us. We want them to see us, talk to us, and understand what we want. Imagine you are working on your computer and it says, “Hey John, Anna wants to talk to you” (without showing a pop-up or any other visual distraction). You are busy, so you say, “Nah! Tell her I am busy and I will call her later.” Your computer then passes your message on to Anna and hangs up.

A feasible AUI must be based on AI and cannot be separated from it. Every interaction, such as face tracking, talking, and hearing, needs AI, and so does decision making. In a simple figure, it would look something like this:

Figure 4. Communications in an AUI system.

Isn't it similar to the central nervous system? This is how we want computers to act: more like humans than machines.

Looking at Figure 4 with a little help from AI concepts and definitions, we can see that the only part that stays the same across all possible business domains, logic, and conversational components is the Decision Making Unit (DMU). In other words, the DMU is the only part of the diagram that remains unchanged if all the other parts change. This is not hard to accept if we consider that, whatever happens, we use our brains to make decisions: we gain knowledge through experience, we learn business logic from the domain we work in, and we then make decisions about events based on those two. Why can't the same thing happen in the computer world?

Another problem in current AI applications

Knowledge storage is another problem in current AI applications, and it cannot be solved easily. These applications have no automatic or semi-automatic mechanism for sharing knowledge between different instances of the same application. Some organizations that specialize in security use a centralized AI system, usually a face-recognition system. However, these systems are designed for the organizational level, not for individuals, and not everyone is allowed to train them actively. Most AI applications for individuals run on a single machine, with no option to use them on other machines with the same knowledge store.

In summary, current AI applications have two major dependencies:

- Machine dependency

- Knowledge dependency

If a platform could remove these two dependencies, we could expect to see AI applications in many more places.

The idea

A similar story

Recent technological advances on the Internet, and what scientists predict about its future, can be a wake-up call for the computer world. The Internet was not designed with today's needs in mind. Over the years, developers have added new requirements to something that was never designed to provide those functionalities. Today it looks like a shiny tower built on top of an 1800s building: it works, it sparkles, and everyone uses it, but it could collapse at any moment. Many scientists in the US and Europe have started to build Internet II from scratch, having realized that patching the current Internet will soon no longer be viable. The same thing is happening in the computer world now: developers are adding new functionalities on top of something that was not designed to carry out those tasks. The main limitation in the computer world is ISOLATION. Although Windows and Unix-based operating systems have many communication and networking capabilities, their OS cores are all based on isolation.

Microsoft Research and ETH Zurich in Switzerland were perhaps the first two groups to hear this wake-up call. They started a new project called “Barrelfish”, a new OS built from scratch. Its main change is in how it handles many cores: it treats the cores of a machine as a network of independent nodes that communicate by passing messages, which is something current operating systems were not designed for. For more information, visit its official website: http://www.barrelfish.org

Developing the idea

Computers always ask for our attention; in fact, they bombard us with hundreds of requests all the time (Vertegaal, 2003). The basics of computer user interfaces (UIs) have not changed much over the past 20 years, and we are still using some very old technologies. Computer operating systems basically come in two types: Microsoft Windows, which runs on almost 90% of computers, and Unix/Linux-based operating systems, which cover the rest. Unix dates back to 1969 and Windows to 1985.

Many computer scientists have spent years figuring out how we can manage our interaction with computers so that computers adapt themselves to our needs. Several designs can help us manage this relation better, and one of them is Attentive User Interfaces (AUIs).

Figure 5. Visualization of the user’s attention with virtual cones. First, the user’s attention is set on the driving task. Eventually, a moving icon in the AUI causes the user to look to it and provides her with additional information. (Novak, Sandor, & Klinker, 2004)

Figure 6. Mirjam Netten’s Infant as an Attentive Art piece, Human Media Lab, Kingston. Initial image (a) and image after multiple viewings (b). (Holman, Vertegaal, Sohn, & Cheng, 2004)

There is also increasing demand to make computers and computer applications more intelligent. There may seem to be no direct relation between AI and AUI, but AI has changed many things around us, and the trend is reaching the user interface as well. Augmented reality, image readers, voice recognition applications, and other programs are examples of AI-based applications already in use. They are designed to make life easier for us, and the most obvious change they share is the way we interact with computers: speech and gesture recognition, for example, are new ways to interact. Figure 1 tells us that the relation between us and computers has already started to change into a many-to-many relation. On the other hand, we still have old-engine operating systems that are based on isolation. Putting all these pieces together leads to the idea I present below.

ISATIS

What is ISATIS

I named my idea ISATIS[1]. ISATIS is a new AI-based operating system designed to support not only new types of user interfaces, but also those still under development. ISATIS will be bound to identities rather than devices: if you own an ISATIS identity, you will be able to use the same identity on other machines as needed. All the devices running the same identity will act in a way that the user perceives as one functioning unit. A device will also not be limited to a single identity: the ISATIS core will allow multiple identities to run at the same time. This design goes beyond current limitations to create a new way of human-computer interaction.

While designing ISATIS, I tried to make it as human-like as possible. Different ISATIS identities will be able to communicate with each other, just like humans, and share their knowledge. The only downside of ISATIS I can think of is that it may need special hardware to run on.

Why ISATIS?

The computer world needs a major upgrade. None of the current operating systems can support the new needs, especially AUI features, at their core. ISATIS is designed to support these new features, even ones not yet known. It creates a virtual world in which users are recognized by the machines and there are no device limitations. Each user will have an identity rather than a system to work with, and will interact with the same identity on desktops, laptops, mobile phones, and any other device that can run the ISATIS core. Users' identities will gather information (knowledge) about them to adjust everything to their needs. Interaction will not be limited to any single type of input device: any new input device, even one not yet invented, can be integrated into the OS easily, because the OS can adjust itself to new needs. The OS can think. It will detect all the other devices you have and spread the same identity across them. ISATIS is the key to the future of device independence.

Specifications

ISATIS will…

- Support the many-to-many human-computer model.

- Allow users to have their own identities.

- Allow the same identity to run on several machines simultaneously.

- Allow several identities to run on the same machine at the same time.

- Provide a base AI API to all applications.

- Protect its users' data using new security algorithms.

- Support knowledge sharing between different identities.

- Recognize its users.

- Allow several identities to talk to each other if their owners have granted the communication.

ISATIS will NOT…

- Provide the knowledge of the whole universe to its users, although it can provide all the knowledge shared by other identities.

- Allow several instances of the same identity to run on the same machine.

- Allow any out-of-the-box communication between two or more identities, or between several instances of the same identity.

- Give identities any direct access to system resources, without exception.

- Have a system administrator as we know it today.
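The placement rules in the two lists above (one identity may run on many machines, one machine may host many identities, but the same identity may never run twice on one machine) can be expressed as a small core-level registry. The following is a minimal Python sketch under that reading; the names `IdentityRegistry`, `start_instance`, and `DuplicateInstanceError` are hypothetical and not part of any ISATIS specification.

```python
from collections import defaultdict


class DuplicateInstanceError(Exception):
    """Raised when an identity is already running on the target machine."""


class IdentityRegistry:
    """Hypothetical core-level registry of running identity instances."""

    def __init__(self) -> None:
        # machine id -> set of identity ids currently running on it
        self._running: dict[str, set[str]] = defaultdict(set)

    def start_instance(self, identity: str, machine: str) -> None:
        # Several identities may share a machine, but the same identity
        # may not run twice on one machine.
        if identity in self._running[machine]:
            raise DuplicateInstanceError(f"{identity} already runs on {machine}")
        self._running[machine].add(identity)

    def stop_instance(self, identity: str, machine: str) -> None:
        self._running[machine].discard(identity)

    def machines_for(self, identity: str) -> list[str]:
        # The same identity may run on several machines at once.
        return sorted(m for m, ids in self._running.items() if identity in ids)

    def identities_on(self, machine: str) -> list[str]:
        # .get() avoids creating an empty entry for unknown machines.
        return sorted(self._running.get(machine, set()))
```

For example, starting "alice" on a laptop and a phone and "bob" on the same laptop succeeds, while starting "alice" on the laptop a second time raises `DuplicateInstanceError`. Keeping these checks in one registry mirrors the idea that the core, not the identities, enforces the rules.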

The design:

Figure 7. ISATIS design.

ISATIS Core: Provides low-level communication with the hardware. The core controls and manages all running identities, but its control stops at identity boundaries. In other words, the core can control the running identities, but it cannot control what happens inside them. The core also controls the communication channel and prohibits any communication outside it.

Communication Channel: All communication between identities must go through the communication channel. No other mode of communication is allowed.

CORE Services: Provides standard system services.

Identity CORE: Controls and manages all other components running within the identity.

The Knowledge: The main knowledge store, which keeps all the knowledge the identity has gathered about the user. No direct access to the knowledge is allowed; all requests must go through the Identity CORE.

Identity Services: Services that are common to all identities.

Applications: Various kinds of applications can run on a particular identity.

3rd Party Services: Services provided by the outside world that are not part of the system.
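Two of the rules above, that identities communicate only through the channel and that an identity's knowledge is reachable only through its Identity CORE, can be illustrated with a short Python sketch. It also applies the specification that identities talk only when their owners grant it; treating that as "both owners must grant" is my assumption. `IdentityCore`, `CommunicationChannel`, `grant`, and `send` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class IdentityCore:
    """Hypothetical identity core: the only gateway to an identity's knowledge."""

    name: str
    _knowledge: dict[str, str] = field(default_factory=dict, repr=False)
    inbox: list[tuple[str, str]] = field(default_factory=list)

    def learn(self, key: str, value: str) -> None:
        self._knowledge[key] = value

    def query(self, key: str) -> Optional[str]:
        # Every knowledge request goes through the identity core.
        return self._knowledge.get(key)

    def receive(self, sender: str, message: str) -> None:
        self.inbox.append((sender, message))


class CommunicationChannel:
    """Hypothetical core-controlled channel; the only route between identities."""

    def __init__(self) -> None:
        self._identities: dict[str, IdentityCore] = {}
        # (identity whose owner granted, peer it may talk to)
        self._grants: set[tuple[str, str]] = set()

    def register(self, identity: IdentityCore) -> None:
        self._identities[identity.name] = identity

    def grant(self, owner_identity: str, peer: str) -> None:
        # The owner of `owner_identity` allows it to talk with `peer`.
        self._grants.add((owner_identity, peer))

    def send(self, sender: str, recipient: str, message: str) -> bool:
        # Assumed rule: deliver only if both owners have granted the link.
        allowed = (sender, recipient) in self._grants and (
            recipient,
            sender,
        ) in self._grants
        if not allowed or recipient not in self._identities:
            return False
        self._identities[recipient].receive(sender, message)
        return True
```

A message from "alice" to "bob" is refused until both owners call `grant`; after that, `send` delivers it to Bob's inbox. Because the channel holds the only references to registered identities, no other delivery path exists in this sketch.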

Knowledge sharing design:

Figure 8. Knowledge sharing design.

What makes identities different is their knowledge. In fact, in the real world it is people's knowledge that makes them different. The term knowledge covers not only what we know, but also what we are and what we possess. In the computer world, physical ownership does not make sense, so the ownership part of knowledge means data ownership. The ISATIS communication channel can go beyond device boundaries, travel through the cloud, and find its way to other devices. For this purpose, the ISATIS core is able to negotiate with other cores. Identity cores can then communicate with each other through the established communication channel and reach the identity's other instances. This allows several instances of the same identity to talk to each other and synchronize their knowledge so that their data stays up to date. The other option is a knowledge store hosted in the cloud that all instances of the same identity synchronize with.
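The design does not say how conflicting knowledge from different instances is reconciled. As one possible approach, the Python sketch below assumes a last-writer-wins rule: each knowledge entry carries the time it was last updated, and synchronization keeps the newest entry per key. `Entry`, `merge`, and `synchronize` are hypothetical names, and the reliance on comparable timestamps is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entry:
    value: str
    timestamp: float  # assumed: when an instance last updated this entry


Knowledge = dict[str, Entry]


def merge(local: Knowledge, remote: Knowledge) -> Knowledge:
    """Union of two knowledge stores, keeping the newer entry for each key.

    Last-writer-wins is an assumed conflict rule; ties keep the local entry.
    """
    merged = dict(local)
    for key, entry in remote.items():
        if key not in merged or entry.timestamp > merged[key].timestamp:
            merged[key] = entry
    return merged


def synchronize(instances: list[Knowledge]) -> Knowledge:
    """Bring every instance of one identity to the same up-to-date knowledge."""
    combined: Knowledge = {}
    for store in instances:
        combined = merge(combined, store)
    # Overwrite each instance's store in place with the combined result.
    for store in instances:
        store.clear()
        store.update(combined)
    return combined
```

The same `merge` covers the cloud option: a cloud-hosted store can simply be passed to `synchronize` as one more instance. A real system would need a more robust ordering than wall-clock timestamps, which can drift between devices.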

AI API

As Figure 4 shows, the decision-making unit can make different decisions based on knowledge, business logic, and inputs. By feeding it different knowledge, business logic, and inputs, it can therefore handle different decisions. ISATIS services, which reside above the core and below the identities, provide decision-making capabilities to the identities. Any identity can point the service to its knowledge (which also includes its business logic), pass input parameters, and ask the service to make a decision and relay the result back to the identity. This model moves the AI engine to the layer below the identities. As a result, if the AI engine needs an update, only the AI engine has to be refreshed, not the whole system.

Moreover, although ISATIS has no access to the identities' internal systems, it will be able to manage the required transactions and data transfers to and from the AI engine. It is also the ISATIS core that hooks into the device hardware, so any input collected from input devices does not need to make an unnecessary round trip to reach the AI engine. The AI API centralizes the AUI/AI engine in one place: whenever an update is required, it is much easier to update one spot than several unknown ones.
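The AI API can be pictured as a single service that receives an identity's knowledge, its business logic, and the current inputs, and returns a decision. The document does not specify how the decision-making unit works internally, so the Python sketch below assumes a simple rule-based engine in which business logic is an ordered list of (condition, decision) rules. `DecisionService`, `first_match_engine`, `set_engine`, and the rule names are hypothetical. Because identities only call `decide`, the engine can be swapped without touching them, which is the update property described above.

```python
from typing import Any, Callable, Mapping, Sequence, Tuple

Facts = Mapping[str, Any]
Rule = Tuple[Callable[[Facts], bool], str]  # (condition, decision)
Engine = Callable[[Facts, Sequence[Rule]], str]


def first_match_engine(facts: Facts, rules: Sequence[Rule]) -> str:
    """Assumed engine: return the decision of the first rule whose condition holds."""
    for condition, decision in rules:
        if condition(facts):
            return decision
    return "no_decision"


class DecisionService:
    """Hypothetical ISATIS service between the core and the identities."""

    def __init__(self, engine: Engine = first_match_engine) -> None:
        self._engine = engine

    def set_engine(self, engine: Engine) -> None:
        # Updating the AI engine touches only this service, not the identities.
        self._engine = engine

    def decide(self, knowledge: Facts, rules: Sequence[Rule], inputs: Facts) -> str:
        # Inputs override stored knowledge when both define the same key.
        facts = {**knowledge, **inputs}
        return self._engine(facts, rules)


# Hypothetical business logic for the incoming-call scenario from "What we want to do".
call_rules: list[Rule] = [
    (lambda f: f.get("caller") in f.get("blocked", ()), "reject"),
    (lambda f: f.get("user_busy", False), "tell_caller_busy"),
    (lambda f: f.get("event") == "incoming_call", "announce_by_voice"),
]
```

With knowledge `{"blocked": ["spam_bot"]}` and inputs `{"event": "incoming_call", "caller": "Anna", "user_busy": True}`, `DecisionService().decide(...)` returns `"tell_caller_busy"`; if the user is not busy, it returns `"announce_by_voice"`. A learned model could replace `first_match_engine` through `set_engine` without changing any identity.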

Conclusion

The way we expect computers to interact with us has changed: we want our machines to be more human-like rather than thoughtless machines. The human-computer model has also changed dramatically. Current operating systems are based on isolation, while the new human-computer model is a many-to-many relation. Current operating systems cannot handle these new needs, and a major update appears necessary. ISATIS can be the key to the future of the computer world. It is a new identity-based (knowledge-based) operating system that recognizes its users. It is not device-dependent, and users can run as many instances of their own identities as they need on different machines (though not several instances of the same identity on the same machine). ISATIS creates a virtual world in which users can interact with all their devices in the same manner, because the same identity runs on all of them. Users can also store their identities' knowledge in the cloud. ISATIS also provides Artificial Intelligence functionality and APIs to all running instances. Because knowledge is synchronized between the different instances of an identity, there is no need to train the system separately for each device; the same training can be used on all devices. ISATIS therefore satisfies today's computing needs, and perhaps those of the future.

Bibliography

Chen, D., & Vertegaal, R. (2004). Using Mental Load for Managing Interruptions in Physiologically Attentive User Interfaces. Vienna, Austria: ACM.

ETH Zurich Switzerland, Microsoft Research Cambridge. (2009, November). The Barrelfish Operating System. Retrieved November 2009, from http://www.barrelfish.org

Holman, D., Vertegaal, R., Sohn, C., & Cheng, D. (2004). Attentive Display: Paintings as Attentive User Interfaces. Vienna, Austria: ACM.

Jackie Lee, C.-H., Wetzel, J., & Selker, T. (2006). Enhancing Interface Design Using Attentive Interaction Design Toolkit. Cambridge, MA: MIT Media Laboratory.

Jaimes, A. (2006). Posture and Activity Silhouettes for Self-Reporting, Interruption Management, and Attentive Interfaces. Japan: FXPAL Japan, Corporate Research Group, Fuji Xerox Co., Ltd.

McCrickard, D. S., & Chewar, C. (2003). Attuning notification design to user goals and attention costs. Communications of the ACM.

Novak, V., Sandor, C., & Klinker, G. (2004). An AR Workbench for Experimenting with Attentive User Interfaces. Munich, Germany: IEEE.

Phifer, G., Harris, K., Raskino, M., & Jones, N. (2007). Consumerization and User Interfaces. Gartner, Inc.

Vertegaal, R. (2003). Attentive User Interfaces. Ontario, Canada: Communications of the ACM.

[1] ISATIS is the ancient name of Yazd, a city in Iran.