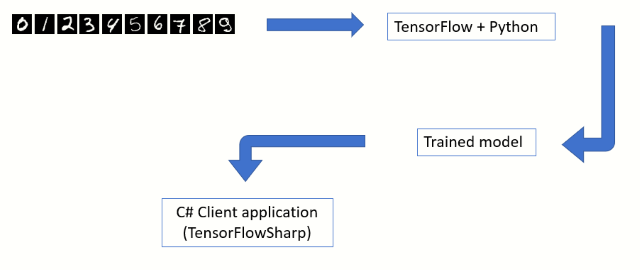

This article aims to demonstrate how to make the best use of Python for training a model and .NET to build a hypothetical end user application which consumes the trained model.

Introduction

In the field of pattern recognition, deep neural networks have gained prominence over the last five years. This can be largely attributed to the availability of cheaper hardware, programming libraries and labelled data. Deep neural networks, and convolutional neural networks (CNNs) in particular, can give spectacular results if trained properly. TensorFlow from Google is one of the most popular libraries that implement these complicated algorithms.

In this article, I am going to demonstrate how to train a CNN model to recognize handwritten digits from the MNIST database. This will be followed by a C# console application which consumes the trained model to classify the test images from the MNIST dataset.

About TensorFlow

TensorFlow Native Library

The native library is described at https://www.tensorflow.org/install/lang_c. The Windows native implementation is downloadable as a single ZIP and is structured as follows:

include
|
|-- c_api.h

lib
|
|-- tensorflow.dll
|-- tensorflow.lib

TensorFlow Bindings for Python and C#

TensorFlow is implemented as a C/C++ dynamic link library. Platform-specific binaries are available in a ZIP file. Bindings in various languages are provided on top of this library. These are language-specific wrappers which invoke the native libraries. Python is perhaps the most versatile programming layer built on top of the native TensorFlow implementation. TensorFlowSharp is the .NET wrapper over TensorFlow.

                 TensorFlow (C/C++)
                         |
          -------------------------------
          |                             |
       Python                 TensorFlowSharp (C#)
    (train model)      (use model in client application)

Background

- Python - I have used Python for training a CNN model using the MNIST dataset of handwritten digits. A basic knowledge of Python would be essential. I have used Visual Studio Code (1.36.1) for the Python scripts. You can use any Python editor that suits you.

- I have used Visual Studio 2017 for the simple Console application which consumes the trained model and classifies the test images.

- In this article, I have used the GPU version of TensorFlow to improve the speed of training. You would need a GPU-enabled desktop. The reader is cautioned that running TensorFlow on the GPU requires additional CUDA libraries and drivers to be installed. This article also assumes that the reader is familiar with the basic principles of deep convolutional neural networks.

What is MNIST? Why MNIST?

Overview

The MNIST database is a collection of handwritten digits (0-9). It comprises 60,000 training and 10,000 testing images. Each image is 28 pixels wide and 28 pixels high, and all the images are in grayscale. In the world of machine learning and computer vision, MNIST has become the de facto standard to test any new paradigm. (Reference: http://yann.lecun.com/exdb/mnist/)

Example Pictures

Distribution of Pictures

| Digit | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|---|
| Training | 5923 | 6742 | 5958 | 6131 | 5842 | 5421 | 5918 | 6265 | 5851 | 5949 |
| Testing | 980 | 1135 | 1032 | 1010 | 982 | 892 | 958 | 1028 | 974 | 1009 |

Deep Learning

Perceptrons

In the 1940s and 1950s, the idea of a very basic mathematical neuron began to take shape. Researchers (McCulloch, Pitts and Rosenblatt) drew inspiration from the working of a biological neuron. Neurons are the building blocks of the nervous system. An average human brain has several billion neurons indirectly connected to each other through synapses. These researchers envisioned an individual neuron behaving like a straight-line classifier: the electric signals flowing in through the dendrites represent a real-life signal (a vector), and the output signal represents a binary (on/off) state of classification. Frank Rosenblatt took the design of the McCulloch and Pitts neuron a step forward by proposing the linear perceptron in his book Principles of Neurodynamics (published 1962, section “Completely linear perceptrons”).

Single Perceptron

The blue circle represents the equation of a straight line in the form of a.x+b.y+c=0.

Given two classes of points, X and O, which are linearly separable, you can find a straight line which divides the two classes. If you feed the coordinates of every point in class X into the expression a.x+b.y+c, and then do the same for every point in class O, you will see that all points in class X produce a positive value, while all points in class O produce a negative value. Depending on the constants a, b and c, the signs could be the other way round. Nevertheless, this is the overarching principle which makes the perceptron behave as a linear classifier.
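
The sign test described above can be sketched in a few lines of Python. The coefficients and the sample points are made up for illustration; they are not taken from the article's figure.

```python
def side_of_line(a, b, c, point):
    """Return +1, -1 or 0 depending on the sign of a*x + b*y + c."""
    x, y = point
    value = a * x + b * y + c
    if value > 0:
        return 1
    if value < 0:
        return -1
    return 0


# Hypothetical line x + y - 3 = 0 and two hypothetical classes of points.
A, B, C = 1.0, 1.0, -3.0
class_x = [(3.0, 2.0), (4.0, 1.5), (2.5, 2.5)]  # all on one side of the line
class_o = [(0.5, 1.0), (1.0, 0.5), (0.0, 2.0)]  # all on the other side

signs_x = [side_of_line(A, B, C, p) for p in class_x]
signs_o = [side_of_line(A, B, C, p) for p in class_o]
print(signs_x, signs_o)
```

Every point of one class yields the same sign, and the two classes yield opposite signs. Multiplying a, b and c by -1 describes the same line but swaps the signs.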

Multi Layer Perceptrons

If we are unable to find a single line that separates the classes X and O, as in the case of the famous XOR problem, then we can cascade multiple linear classifiers.
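
As a minimal sketch of this cascading idea, the snippet below solves XOR with two straight-line classifiers whose outputs are fed into a third one. The weights are hand-picked for illustration (nothing is trained here), and the function names are hypothetical.

```python
def step(value):
    """Binary (on/off) output of a single perceptron."""
    return 1 if value > 0 else 0


def xor_by_cascading(x, y):
    # First layer: two straight-line classifiers.
    above_lower_line = step(x + y - 0.5)   # 1 when the point lies above x + y = 0.5
    below_upper_line = step(-x - y + 1.5)  # 1 when the point lies below x + y = 1.5
    # Second layer: fires only when both first-layer classifiers fire.
    return step(above_lower_line + below_upper_line - 1.5)


xor_table = {(x, y): xor_by_cascading(x, y) for x in (0, 1) for y in (0, 1)}
print(xor_table)
```

The points (0,1) and (1,0) lie in the band between the two lines, so only they are classified as 1. No single line can carve out that band, which is why a second layer is needed.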

Convolutional Neural Networks

Deep learning takes the multi-layer perceptron a step forward by combining feature extraction and hyperplane discovery. The features are extracted by layers of filters. For a thorough treatment of this subject, the reader is requested to follow Andrew Ng's tutorials.

TensorFlowSharp - Using TensorFlow from a C# Application

TensorFlowSharp is a .NET wrapper over the unmanaged native libraries of TensorFlow. It is the outcome of the pioneering work done by Miguel de Icaza. TensorFlowSharp can consume a CNN model that was trained using Python, and this opens up the possibility of creating exciting end user applications.

nuget install TensorFlowSharp

// Load the trained model file and run it through a TensorFlowSharp session
byte[] buffer = System.IO.File.ReadAllBytes(modelfile);
using (var graph = new TensorFlow.TFGraph())
{
    graph.Import(buffer);
    using (var session = new TensorFlow.TFSession(graph))
    {
        var runner = session.GetRunner();
        runner.AddInput(..., tensor);   // input node of the graph and the input tensor
        runner.Fetch(...);              // output node of the graph
        var output = runner.Run();
    }
}

Using TensorFlow with GPU

Overview

Python script
      |
TensorFlow GPU package
      |
cuDNN
      |
CUDA Toolkit
      |
Drivers
      |
GPU

When using TensorFlow for training, you have the choice of using either the CPU package or the GPU package. The GPU is preferred because training is significantly faster. You will need the correct versions of the NVIDIA drivers and CUDA libraries. As a rule of thumb, the versions of the NVIDIA drivers and CUDA libraries should match the ones required by the version of TensorFlow that you install. At the time of writing this article, I have used the Python package TensorFlow-GPU 1.14.0. I would caution the reader that my experience with installing the drivers and getting TensorFlow GPU to work was less than smooth. The combination that worked for me was:

- Update the version of the NVIDIA drivers. I did not install through NVIDIA web site. I updated the display adapter through the Windows Device Manager user interface. The version 24.21.14.1131 worked for me.

- Install CUDA Toolkit 10.0 version

- Install cuDNN SDK version 7.6.2. I chose the Windows 10 edition. I copied over cudnn64_7.dll to %ProgramFiles%\NVIDIA GPU Computing Toolkit\CUDA\v10.0\bin

- Python package Tensorflow 1.14

- Python package Keras 2.2.4

- Python package Numpy 1.16.1

Training a CNN Model using TensorFlow and Python

CNN Architecture

Input layer (28X28, 1 channel)
      |
Convolution layer (5X5, 20 filters, RELU)
      |
Max Pool layer (2X2, stride=2)
      |
Convolution layer (5X5, 50 filters, RELU)
      |
Max Pool layer (2X2, stride=2)
      |
Flatten
      |
Dense layer (500 nodes, RELU)
      |
Dense layer (10 nodes)
      |
Output layer (Softmax)
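
To make the architecture more concrete, the following sketch walks a 28X28 image through the layers and counts the trainable parameters. It assumes stride-1 convolutions with "same" padding, as used in the training script later in this article; the helper functions are hypothetical and are not part of Keras.

```python
def conv_same(shape, filters, kernel):
    """Output shape and parameter count of a 'same'-padded, stride-1 convolution."""
    height, width, channels = shape
    params = kernel * kernel * channels * filters + filters  # weights + biases
    return (height, width, filters), params


def max_pool(shape, size=2):
    """Output shape of a max pool layer whose stride equals its pool size."""
    height, width, channels = shape
    return (height // size, width // size, channels)


def dense(inputs, units):
    """Number of outputs and parameter count of a fully connected layer."""
    return units, inputs * units + units


shape = (28, 28, 1)
shape, p_conv1 = conv_same(shape, filters=20, kernel=5)  # (28, 28, 20)
shape = max_pool(shape)                                  # (14, 14, 20)
shape, p_conv2 = conv_same(shape, filters=50, kernel=5)  # (14, 14, 50)
shape = max_pool(shape)                                  # (7, 7, 50)
flat = shape[0] * shape[1] * shape[2]                    # flattened vector length
units, p_dense1 = dense(flat, 500)
units, p_dense2 = dense(units, 10)
total_params = p_conv1 + p_conv2 + p_dense1 + p_dense2
print(flat, total_params)
```

Under these assumptions, almost all of the parameters sit in the first dense layer, which connects the 2,450 flattened values to 500 nodes.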

Image Files Used for Training

The MNIST dataset can be readily accessed from the scikit-learn package. However, in this tutorial, I demonstrate how to load the images from disk. The individual PNG files are made available in the accompanying project MNISTPng.csproj. The Python script MnistImageLoader.py enumerates the directory structure and builds a list of training/testing images. The parent folder of each PNG file provides the training label (0-9).

MNIST
|
|-- training.zip
|   |-- (folders 0 to 9)
|       |-- 0
|       |-- 1
|       |-- 2
|       |   ...
|       |-- 9
|
|-- testing.zip
    |-- (folders 0 to 9)
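
Once the ZIP files are extracted, the folder layout above can be checked with a short script that counts the PNG files per label. This is only a sketch: the function name is hypothetical, and the demonstration uses a throwaway folder tree with empty files rather than the real images.

```python
import tempfile
from collections import Counter
from pathlib import Path


def count_images_per_label(root):
    """Count PNG files under root, keyed by the integer name of the parent folder."""
    counts = Counter()
    for png in Path(root).glob("*/*.png"):
        counts[int(png.parent.name)] += 1
    return dict(counts)


# Demonstration on a temporary tree with two label folders (0 and 7).
with tempfile.TemporaryDirectory() as root:
    for label, n_files in [(0, 2), (7, 3)]:
        folder = Path(root) / str(label)
        folder.mkdir()
        for i in range(n_files):
            (folder / "img_{}.png".format(i)).touch()
    demo_counts = count_images_per_label(root)

print(demo_counts)
```

Pointing the function at the extracted training or testing folder gives per-digit counts that can be compared with the distribution table shown earlier.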

1-Python Script (MnistImageLoader.py)

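import glob
import os

import numpy as np
from keras.utils import np_utils

def load_images(path_in):
    # path_in is a glob pattern which matches the image files.
    # get_im loads a single image file (helper not shown in this article).
    images = []
    labels = []
    filenames = glob.glob(path_in)
    for filename in filenames:
        # The name of the parent folder (0-9) is the label of the image
        fulldir = os.path.dirname(filename)
        parentfolder = os.path.basename(fulldir)
        imagelabel = int(parentfolder)
        labels.append(imagelabel)
        img = get_im(filename)
        images.append(img)
    return images, labels

def ReShapeData(data, target, numclasses):
    data_out = np.array(data, dtype=np.uint8)
    target_out = np.array(target, dtype=np.uint8)
    # Shape (N, 28, 28) -> (N, 28, 28, 1): add the single gray scale channel
    data_out = data_out.reshape(data_out.shape[0], 28, 28)
    data_out = data_out[:, :, :, np.newaxis]
    # Scale the pixel values from 0-255 to 0-1
    data_out = data_out.astype('float32')
    data_out /= 255
    # One-hot encode the labels
    target_out = np_utils.to_categorical(target_out, numclasses)
    return data_out, target_out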
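
To illustrate what ReShapeData produces, here is a minimal sketch of the same two transformations (pixel scaling with an added channel axis, and one-hot encoding of the labels). It deliberately uses plain Python lists so that it runs without NumPy or Keras; the function names and the tiny 2X2 "image" are made up for illustration.

```python
def scale_pixels(image_rows):
    """Scale 0-255 pixels to 0-1 and wrap each pixel in a 1-element channel list."""
    return [[[pixel / 255.0] for pixel in row] for row in image_rows]


def to_one_hot(labels, num_classes):
    """Turn each integer label into a vector with a single 1.0 at the label index."""
    encoded = []
    for label in labels:
        row = [0.0] * num_classes
        row[label] = 1.0
        encoded.append(row)
    return encoded


tiny_image = [[0, 255], [51, 102]]  # hypothetical 2X2 gray scale image
scaled = scale_pixels(tiny_image)   # nested list of shape 2 x 2 x 1
one_hot = to_one_hot([3, 0], num_classes=4)
print(scaled)
print(one_hot)
```

In the real script, the images end up in an array of shape (N, 28, 28, 1) and the labels in an array of shape (N, 10), which is the layout expected by the first convolution layer and by the categorical cross-entropy loss.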

2-Loading the Training Images (TrainMnistFromFolder.py)

The master python script TrainMnistFromFolder.py will call the functions load_images and ReShapeData.

from MnistImageLoader import load_images,ReShapeData

print("Loading training images")

(train_data, train_target)=load_images(mnist_train_path_full)

(train_data1,train_target1)=ReShapeData(train_data,train_target,nb_classes)

print('Shape:', train_data1.shape)

print(train_data1.shape[0], ' train images were loaded')

3-Create the CNN Model(TrainMnistFromFolder.py)

from keras.models import Sequential
from keras.layers import Convolution2D, Activation, MaxPooling2D, Flatten, Dense
from keras.optimizers import SGD

model = Sequential()

# First block: 20 filters of 5x5, ReLU, 2x2 max pooling
model.add(Convolution2D(
    name="conv1",
    filters=20,
    kernel_size=(5, 5),
    padding="same",
    input_shape=(28, 28, 1)))
model.add(Activation(activation="relu"))
model.add(MaxPooling2D(
    name="maxpool1",
    pool_size=(2, 2),
    strides=(2, 2)))

# Second block: 50 filters of 5x5, ReLU, 2x2 max pooling
model.add(Convolution2D(
    name="conv2",
    filters=50,
    kernel_size=(5, 5),
    padding="same"))
model.add(Activation(activation="relu"))
model.add(MaxPooling2D(
    name="maxpool2",
    pool_size=(2, 2),
    strides=(2, 2)))

# Classifier: flatten, 500 node dense layer, one output node per digit, softmax
model.add(Flatten())
model.add(Dense(500))
model.add(Activation(activation="relu"))
model.add(Dense(nb_classes, name="outputlayer"))
model.add(Activation("softmax"))
model.summary()

model.compile(
    loss="categorical_crossentropy",
    optimizer=SGD(lr=0.01),
    metrics=["accuracy"])
print("Compilation complete")

4-Train Model (TrainMnistFromFolder.py)

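import time

total_epochs = 20
start = time.time()  # start time, for measuring how long the training takes
model.fit(
    train_data1,
    train_target1,
    batch_size=128,
    epochs=total_epochs,
    verbose=1)
print("Train complete")

# test_data1 and test_target1 are assumed to be prepared from the testing
# images in the same way as the training data
print("Testing on test data")
(loss, accuracy) = model.evaluate(
    test_data1,
    test_target1,
    batch_size=128,
    verbose=1)
print("Accuracy=" + str(accuracy))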

5-Save the Model (FreezeKerasToTF.py)

After training is complete, the model has to be saved in the original TensorFlow format (.pb). The function freeze_session in the file FreezeKerasToTF.py does this for us. The saved model contains the network layout and the weights.

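import tensorflow as tf
from keras import backend as K

# freeze_session is the function from FreezeKerasToTF.py
frozen_graph = freeze_session(
    K.get_session(),
    output_names=[out.op.name for out in model.outputs])
tf.train.write_graph(frozen_graph, "Out", "Mnist_model.pb", as_text=False)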

6-Results

C# Console Application

Overview

1) Load trained model file
      |
2) Load test images
      |
3) Evaluate the test image using CNN

1-Create a Console Application

- Create a new Console application using .NET Framework (64 bit, 4.6.1 or above)

- Add a NuGet package reference to TensorFlowSharp

2-Load the Trained Model File

var modelfile = @"c:\MyTensorFlowModel.pb";
byte[] buffer = System.IO.File.ReadAllBytes(modelfile);
using (var graph = new TensorFlow.TFGraph())
{
    graph.Import(buffer);
    using (var session = new TensorFlow.TFSession(graph))
    {
        var file = "test.png";
        var runner = session.GetRunner();
        var tensor = Utils.ImageToTensorGrayScale(file);
        // "conv1_input" and "activation_4/Softmax" are the input and output nodes of the trained graph
        runner.AddInput(graph["conv1_input"][0], tensor);
        runner.Fetch(graph["activation_4/Softmax"][0]);
        var output = runner.Run();
        // One row per input image, one column per digit
        var vecResults = output[0].GetValue();
        float[,] results = (float[,])vecResults;
        int[] quantized = Utils.Quantized(results);
    }
}

3-Utils.ImageToTensorGrayScale

This function loads an MNIST picture file and creates a TFTensor:

public static TensorFlow.TFTensor ImageToTensorGrayScale(string file)
{
    using (System.Drawing.Bitmap image =
        (System.Drawing.Bitmap)System.Drawing.Image.FromFile(file))
    {
        // Tensor layout: [batch, height, width, channels]
        var matrix = new float[1, image.Size.Height, image.Size.Width, 1];
        for (var iy = 0; iy < image.Size.Height; iy++)
        {
            for (var ix = 0; ix < image.Size.Width; ix++)
            {
                System.Drawing.Color pixel = image.GetPixel(ix, iy);
                // Only the blue channel is read; scale it from 0-255 to 0-1
                matrix[0, iy, ix, 0] = pixel.B / 255.0f;
            }
        }
        TensorFlow.TFTensor tensor = matrix;
        return tensor;
    }
}

4-Utils.Quantized

This function converts the TensorFlow result into an array with 10 elements. Element 0 corresponds to digit 0 and element 9 corresponds to digit 9. Each element is 1 if the probability of that digit exceeds 0.5, and 0 otherwise.

internal static int[] Quantized(float[,] results)
{
    int[] q = new int[10];
    for (int digit = 0; digit < q.Length; digit++)
    {
        // 1 if the probability of this digit exceeds 0.5, otherwise 0
        q[digit] = results[0, digit] > 0.5 ? 1 : 0;
    }
    return q;
}
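
The thresholding step can be sketched in Python as follows. Because softmax outputs sum to 1, at most one element can exceed 0.5, so the quantized array is either one-hot or all zeros. The second function, which picks the most probable digit, is an alternative shown only for comparison and is not what the article's C# code does; the probability vectors are made up.

```python
def quantize(probabilities, threshold=0.5):
    """Mirror of the 0.5 threshold: 1 where the probability exceeds it, else 0."""
    return [1 if p > threshold else 0 for p in probabilities]


def most_probable_digit(probabilities):
    """Alternative for comparison: index of the largest probability."""
    return max(range(len(probabilities)), key=lambda i: probabilities[i])


# Hypothetical softmax outputs for two images.
confident = [0.01, 0.01, 0.90, 0.01, 0.01, 0.01, 0.01, 0.02, 0.01, 0.01]
ambiguous = [0.00, 0.45, 0.00, 0.00, 0.00, 0.00, 0.00, 0.40, 0.00, 0.15]

print(quantize(confident), most_probable_digit(confident))
print(quantize(ambiguous), most_probable_digit(ambiguous))
```

For the ambiguous vector, the threshold leaves every element at 0, meaning that no digit is reported, whereas the comparison function still returns digit 1.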

5-Results

After iterating over all the 10,000 test images and classifying each of them with the trained model, we get a prediction success rate of 98.5%; 150 images were misclassified. As per the MNIST home page, the state-of-the-art benchmark is a success rate of over 99.5%.
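
The success rate follows directly from the two counts quoted above, as this small sketch shows (the function name is hypothetical):

```python
def success_rate(total_images, misclassified):
    """Fraction of images that were classified correctly."""
    return (total_images - misclassified) / total_images


rate = success_rate(total_images=10000, misclassified=150)
print("{:.1%}".format(rate))
```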

Using the Code

GitHub Repository

Solution Structure

Solution
|
|-- MNISTPng (ZIP of individual PNG train and test files)
|
|-- PythonTrainer (Python script to train using TensorFlow)
|
|-- ConsoleAppTester (C# console app using TensorFlowSharp)

1-PythonTrainer

Python scripts for training a CNN model:

- TrainMnistFromFolder.py - Outermost Python script which loads and trains the images

- MnistImageLoader.py - Useful for converting a PNG into a Tensor

- FreezeKerasToTF.py - Useful for saving a trained model as .PB file

2-MNISTPng

ZIP of training and testing images:

- testing.zip - 10,000 individual testing files organized into 10 directories

- training.zip - 50,000 individual training files organized into 10 directories

3-ConsoleAppTester

C# EXE which will use TensorFlowSharp to load the trained model.

Points of Interest

History

- 7th August, 2019: Initial version