Here we use MSAL for Java to obtain access tokens for Azure APIs, then add a microservice that interacts with those APIs, returns the result, and stores it in a managed MySQL database on Azure.

In the previous article of this series, we created a microservice to call the Microsoft Graph API on behalf of a user who initiated the request from a web application to retrieve calendar events. That tutorial demonstrated how Azure AD and MSAL allowed a Spring application to participate in a broader services ecosystem.

In this tutorial, we’ll create a second microservice, this time calling the Azure Storage API on behalf of a user. When we call Azure services on behalf of a user, the Azure security layer grants or restricts access for individual users, so our application doesn’t have to manage those rules itself. This approach simplifies our code and lets us manage security policies centrally within Azure.

This second microservice then logs file upload events to an audit table in a MySQL database and exposes those audit records to the frontend web application.

You can find this tutorial’s frontend application’s source code in the mcasperson/SpringMSALDemo GitHub repo and the Azure API microservice in the mcasperson/SpringMSALAzureStorageMicroservice GitHub repo.

Creating an Azure Storage Account

Our microservice uploads files to an Azure storage account, so we first need to create a new storage account.

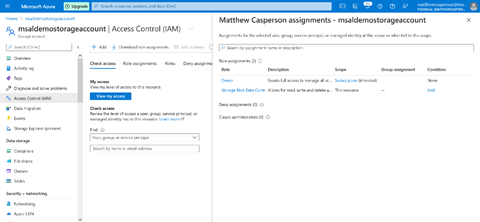

Note that the Owner role alone is not sufficient to upload files through our application. The user performing the upload must also be assigned the Storage Blob Data Contributor role on the storage account.

Because we define these permissions in Azure rather than in our application, interacting with Azure APIs via MSAL moves the burden of maintaining security rules out of our code.

Creating the MySQL Database

Our microservice requires a MySQL database to persist audit entries, so we create a new MySQL instance in Azure.

Registering a New Application

We register our second microservice as a new Azure AD application. We documented how to register a new application in the last article, so we won’t run through the process again step-by-step. Instead, we’ll call out the settings specific to this new microservice.

This new application exposes a scope called SaveFile. Note the Application ID URI, as you’ll need this value later.

The application is granted the delegated user_impersonation permission for the Azure Storage API.

As before, we need to grant admin consent for these permissions.

Finally, we create a new client secret. Copy the secret value now, because Azure won’t show it again.

Building the Spring Boot Microservice

As in the previous tutorials, we bootstrap our Spring microservice with the Spring Initializr tool. After clicking ADD DEPENDENCIES, we add the following dependencies:

- Spring Web, providing the built-in web server

- OAuth2 Resource Server, allowing us to configure our application as a resource server

- OAuth2 Client, providing classes we’ll use to make OAuth2 authenticated HTTP requests

- Azure Active Directory, providing integration with Azure AD

- MySQL Driver, providing a driver for a MySQL database

- Spring Data JPA, providing an object-relational mapping (ORM) interface to the MySQL database

Note that we don’t use the Azure Storage dependency, which connects to a storage account via account keys. Instead, this microservice uses the more general Azure Storage Blob client library, which we add to pom.xml:

```xml
<dependency>
    <groupId>com.azure</groupId>
    <artifactId>azure-storage-blob</artifactId>
    <version>12.13.0</version>
</dependency>
```

Configuring the Spring Application

Below is the microservice’s application.yaml file:

```yaml
server:
  port: 8082

azure:
  activedirectory:
    client-id: ${CLIENT_ID}
    client-secret: ${CLIENT_SECRET}
    app-id-uri: ${API_URL}
    tenant-id: ${TENANT_ID}
    authorization-clients:
      storage:
        scopes:
          - https:

spring:
  jpa:
    database-platform: org.hibernate.dialect.MySQL5InnoDBDialect
  datasource:
    url: jdbc:mysql:
    username: ${DB_USERNAME}
    password: ${DB_PASSWORD}

logging:
  level:
    org:
      springframework:
        security: DEBUG
```

We’ve seen most of this configuration already. However, we’ll call out a few unique settings.

This microservice requires permission to impersonate a user when interacting with Azure storage. The scope assigned to the client reflects this.

```yaml
authorization-clients:
  storage:
    scopes:
      - https:
```

Because this microservice uses Spring Data JPA, we must configure access to the database. Our database runs MySQL, so we define the appropriate Hibernate dialect:

```yaml
spring:
  jpa:
    database-platform: org.hibernate.dialect.MySQL5InnoDBDialect
```

We then define the connection string and database credentials. The database name comes from the DB_NAME environment variable, the username from DB_USERNAME, and the password from DB_PASSWORD:

```yaml
datasource:
  url: jdbc:mysql:
  username: ${DB_USERNAME}
  password: ${DB_PASSWORD}
```

Configuring Spring Security

Our storage API microservice implements the same security rules as the calendar API, requiring every request to be authenticated. We configure these rules in the AuthSecurityConfig class:

```java
package com.matthewcasperson.azureapi.configuration;

import com.azure.spring.aad.webapi.AADResourceServerWebSecurityConfigurerAdapter;
import org.springframework.security.config.annotation.web.builders.HttpSecurity;
import org.springframework.security.config.annotation.web.configuration.EnableWebSecurity;

@EnableWebSecurity
public class AuthSecurityConfig extends AADResourceServerWebSecurityConfigurerAdapter {

    @Override
    protected void configure(HttpSecurity http) throws Exception {
        // Apply the Azure AD resource server defaults (bearer token validation)
        super.configure(http);
        // Require an authenticated caller for every endpoint
        http
            .authorizeRequests()
            .anyRequest()
            .authenticated();
    }
}
```

Creating the JPA Repository

Spring Data JPA provides a convenient way to work with database records. An interface that extends JpaRepository inherits many standard methods for saving, deleting, and retrieving entries, and Spring generates the implementation at runtime. We define such an interface called AuditRepository, and we only need to inject it into a controller to access the database:

```java
package com.matthewcasperson.azureapi.repository;

import com.matthewcasperson.azureapi.model.Audit;
import org.springframework.data.jpa.repository.JpaRepository;

// Spring Data supplies save, findAll, delete, and similar methods for Audit entities keyed by Long
public interface AuditRepository extends JpaRepository<Audit, Long> {
}
```

Creating the Audit Entity

Next, we define the database entity representing our audit records in the Audit class. This class, and the associated database records, have three fields:

- id, an auto-incrementing primary key
- message, the audit message
- date, the audit record’s date

We save these records in a table called audit using this code:

```java
package com.matthewcasperson.azureapi.model;

import javax.persistence.*;
import java.sql.Timestamp;

@Entity
@Table(name = "audit")
public class Audit {

    private Long id;
    private String message;
    private Timestamp date;

    // No-argument constructor required by JPA
    public Audit() {
    }

    // Creates a record stamped with the current time
    public Audit(String message) {
        this.message = message;
        this.date = new Timestamp(System.currentTimeMillis());
    }

    public void setId(Long id) {
        this.id = id;
    }

    // Mapping annotations sit on the getters, so JPA uses property access
    @Id
    @GeneratedValue(strategy = GenerationType.IDENTITY)
    public Long getId() {
        return id;
    }

    public void setMessage(String message) {
        this.message = message;
    }

    @Column(name = "message")
    public String getMessage() {
        return message;
    }

    public void setDate(Timestamp date) {
        this.date = date;
    }

    @Column(name = "date")
    public Timestamp getDate() {
        return date;
    }
}
```

Creating the File Upload REST Controller

We can find the bulk of this microservice’s logic in the UploadFileController class. This controller takes a request from the frontend application, uploads a file to Azure storage, and creates an audit record in the database.

The complete class code is as follows:

```java
package com.matthewcasperson.azureapi.controllers;

import com.azure.core.credential.AccessToken;
import com.azure.core.credential.TokenCredential;
import com.azure.storage.blob.BlobContainerClient;
import com.azure.storage.blob.BlobServiceClient;
import com.azure.storage.blob.BlobServiceClientBuilder;
import com.azure.storage.blob.specialized.BlockBlobClient;
import com.matthewcasperson.azureapi.model.Audit;
import com.matthewcasperson.azureapi.repository.AuditRepository;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.security.oauth2.client.OAuth2AuthorizedClient;
import org.springframework.security.oauth2.client.annotation.RegisteredOAuth2AuthorizedClient;
import org.springframework.security.oauth2.server.resource.authentication.BearerTokenAuthentication;
import org.springframework.web.bind.annotation.PathVariable;
import org.springframework.web.bind.annotation.PutMapping;
import org.springframework.web.bind.annotation.RequestBody;
import org.springframework.web.bind.annotation.RestController;
import reactor.core.publisher.Mono;

import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.time.ZoneOffset;
import java.util.Locale;

@RestController
public class UploadFileController {

    @Autowired
    AuditRepository auditRepository;

    @PutMapping("/upload/{fileName}")
    public void upload(@RequestBody String content,
                       @PathVariable("fileName") String fileName,
                       BearerTokenAuthentication principal,
                       @RegisteredOAuth2AuthorizedClient("storage") OAuth2AuthorizedClient client) {
        try {
            uploadFile(client, generateContainerName(principal), fileName, content);
            auditRepository.saveAndFlush(new Audit("Uploaded file " + fileName + " for user " + getPrincipalEmail(principal)));
        } catch (Exception ex) {
            ex.printStackTrace();
        }
    }

    private void uploadFile(OAuth2AuthorizedClient client, String container, String fileName, String content) {
        // Authenticate to the storage account with the user's delegated access token
        BlobServiceClient blobServiceClient = new BlobServiceClientBuilder()
                .credential(createTokenCredential(client))
                .endpoint("https://" + System.getenv("STORAGE_ACCOUNT_NAME") + ".blob.core.windows.net")
                .buildClient();

        BlobContainerClient containerClient = blobServiceClient.getBlobContainerClient(container);
        if (!containerClient.exists()) containerClient.create();

        BlockBlobClient blockBlobClient = containerClient.getBlobClient(fileName).getBlockBlobClient();
        if (blockBlobClient.exists()) blockBlobClient.delete();

        // The declared length must be the byte count, not the character count
        byte[] bytes = content.getBytes(StandardCharsets.UTF_8);
        try (ByteArrayInputStream dataStream = new ByteArrayInputStream(bytes)) {
            blockBlobClient.upload(dataStream, bytes.length);
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // Wraps the existing access token; the same token is returned every time and never refreshed
    private TokenCredential createTokenCredential(OAuth2AuthorizedClient client) {
        return request -> Mono.just(new AccessToken(
                client.getAccessToken().getTokenValue(),
                client.getAccessToken().getExpiresAt().atOffset(ZoneOffset.UTC)));
    }

    // Container names allow only lowercase letters, numbers, and dashes
    private String generateContainerName(BearerTokenAuthentication principal) {
        return getPrincipalEmail(principal).toLowerCase(Locale.ROOT).replaceAll("[^a-z0-9-]", "-");
    }

    private String getPrincipalEmail(BearerTokenAuthentication principal) {
        return principal.getTokenAttributes().get("upn").toString();
    }
}
```

Let’s examine the most interesting parts of this class.

We inject an instance of the AuditRepository interface, giving us access to the database:

```java
@Autowired
AuditRepository auditRepository;
```

The upload method calls uploadFile to upload the file to Azure storage, then auditRepository.saveAndFlush to create a new database record.

Note that the name of the container we upload the file to is derived from the logged-in user’s email address:

```java
@PutMapping("/upload/{fileName}")
public void upload(@RequestBody String content,
                   @PathVariable("fileName") String fileName,
                   BearerTokenAuthentication principal,
                   @RegisteredOAuth2AuthorizedClient("storage") OAuth2AuthorizedClient client) {
    try {
        uploadFile(client, generateContainerName(principal), fileName, content);
        auditRepository.saveAndFlush(new Audit("Uploaded file " + fileName + " for user " + getPrincipalEmail(principal)));
    } catch (Exception ex) {
        ex.printStackTrace();
    }
}
```

The uploadFile method begins by building a new BlobServiceClient, allowing us to interact with the Azure storage account. Note that we define the name of the storage account in an environment variable called STORAGE_ACCOUNT_NAME:

```java
private void uploadFile(OAuth2AuthorizedClient client, String container, String fileName, String content) {
    BlobServiceClient blobServiceClient = new BlobServiceClientBuilder()
            .credential(createTokenCredential(client))
            .endpoint("https://" + System.getenv("STORAGE_ACCOUNT_NAME") + ".blob.core.windows.net")
            .buildClient();
```

The code creates the container if it doesn’t already exist.

```java
BlobContainerClient containerClient = blobServiceClient.getBlobContainerClient(container);
if (!containerClient.exists()) containerClient.create();
```

If a blob with the same name already exists in the container, the code deletes it before uploading:

```java
BlockBlobClient blockBlobClient = containerClient.getBlobClient(fileName).getBlockBlobClient();
if (blockBlobClient.exists()) blockBlobClient.delete();
```

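Then we upload the supplied string as the new blob’s content. The length passed to upload is the number of encoded bytes in the stream, which can differ from the number of characters in the string:

```java
// The declared length must be the byte count, not the character count
byte[] bytes = content.getBytes(StandardCharsets.UTF_8);
try (ByteArrayInputStream dataStream = new ByteArrayInputStream(bytes)) {
    blockBlobClient.upload(dataStream, bytes.length);
} catch (IOException e) {
    e.printStackTrace();
}
```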
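`String.length()` counts UTF-16 characters, whereas the upload stream carries encoded bytes, and the two counts agree only for ASCII text. The JDK-only sketch below makes the difference concrete; the class `PayloadLength` is hypothetical, and UTF-8 is assumed as the encoding.

```java
import java.nio.charset.StandardCharsets;

// Hypothetical helper illustrating why a declared blob length must be a byte count.
class PayloadLength {
    // Number of UTF-16 code units, which is what String.length() returns
    static int charCount(String content) {
        return content.length();
    }

    // Number of bytes actually written to the stream when the content is encoded as UTF-8
    static int utf8ByteCount(String content) {
        return content.getBytes(StandardCharsets.UTF_8).length;
    }

    public static void main(String[] args) {
        String content = "h\u00e9llo"; // "héllo": the accented e encodes to two bytes
        System.out.println(charCount(content));     // 5
        System.out.println(utf8ByteCount(content)); // 6
    }
}
```

When the declared length and the stream’s real length disagree, the storage SDK is likely to reject the upload, which is why the length should come from the encoded byte array.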

The credentials for accessing the storage account come directly from the access token held by the authorized client. Interestingly, while the com.azure:azure-identity library provides many specialized implementations of the TokenCredential interface, none accept an existing JWT.

However, it is easy enough to build our own implementation that wraps the JWT from our client, which is the purpose of the createTokenCredential function:

```java
// Wraps the existing access token; the same token is returned every time and never refreshed
private TokenCredential createTokenCredential(OAuth2AuthorizedClient client) {
    return request -> Mono.just(new AccessToken(
            client.getAccessToken().getTokenValue(),
            client.getAccessToken().getExpiresAt().atOffset(ZoneOffset.UTC)));
}
```
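Because the lambda in createTokenCredential hands back the same access token for every request, the credential cannot refresh: once the token’s expiry passes, requests to the storage account will fail authentication. That is acceptable for a single short-lived upload like this one. If a credential like this were cached or reused, a check along the lines of the following JDK-only sketch could flag a token that is about to expire. The class and method names and the five-minute skew are illustrative assumptions, not part of the Azure SDK.

```java
import java.time.Duration;
import java.time.Instant;
import java.time.OffsetDateTime;
import java.time.ZoneOffset;

// Hypothetical helper: decides whether a fixed access token is still safe to hand to an SDK client.
class TokenExpiry {
    // The same conversion createTokenCredential performs: an Instant expressed as a UTC OffsetDateTime
    static OffsetDateTime toUtc(Instant expiresAt) {
        return expiresAt.atOffset(ZoneOffset.UTC);
    }

    // True if the token has expired or will expire within the skew window
    static boolean expiresSoon(Instant expiresAt, Instant now, Duration skew) {
        return !now.plus(skew).isBefore(expiresAt);
    }

    public static void main(String[] args) {
        Instant now = Instant.parse("2021-10-01T12:00:00Z");
        Instant expiresAt = now.plus(Duration.ofMinutes(3));
        System.out.println(toUtc(expiresAt));                                   // 2021-10-01T12:03Z
        System.out.println(expiresSoon(expiresAt, now, Duration.ofMinutes(5))); // true
    }
}
```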

The generateContainerName function converts an email address into a string suitable for a container name. Container names may contain only lowercase letters, numbers, and dashes, so this function lowercases the address and replaces every other character with a dash:

```java
private String generateContainerName(BearerTokenAuthentication principal) {
    return getPrincipalEmail(principal).toLowerCase(Locale.ROOT).replaceAll("[^a-z0-9-]", "-");
}
```
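Azure’s container naming rules go further than restricting the character set: names must be lowercase, 3 to 63 characters long, start and end with a letter or number, and contain no consecutive dashes. The following JDK-only sketch enforces all of these rules. `ContainerNames.fromEmail` is a hypothetical name, and throwing on an address that cannot produce a valid name is one design choice among several.

```java
import java.util.Locale;

// Hypothetical, stricter variant of generateContainerName following the Azure Blob Storage
// container naming rules: 3-63 characters, lowercase letters, digits, and dashes only,
// starting and ending with a letter or digit, with no consecutive dashes.
class ContainerNames {
    static final int MIN_LENGTH = 3;
    static final int MAX_LENGTH = 63;

    static String fromEmail(String email) {
        String name = email.toLowerCase(Locale.ROOT)
                .replaceAll("[^a-z0-9-]", "-") // replace every disallowed character with a dash
                .replaceAll("-{2,}", "-")       // collapse runs of dashes into a single dash
                .replaceAll("^-+|-+$", "");     // drop leading and trailing dashes
        if (name.length() > MAX_LENGTH) {
            // Truncate, then strip any dash the cut left at the end
            name = name.substring(0, MAX_LENGTH).replaceAll("-+$", "");
        }
        if (name.length() < MIN_LENGTH) {
            throw new IllegalArgumentException("Cannot derive a valid container name from: " + email);
        }
        return name;
    }

    public static void main(String[] args) {
        System.out.println(fromEmail("John.Doe@Example.com")); // john-doe-example-com
    }
}
```

Any character-replacement scheme can map distinct addresses to the same container: john.doe@example.com and john-doe@example.com both become john-doe-example-com.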

The getPrincipalEmail function returns the email address from the user principal name (upn) attribute:

```java
private String getPrincipalEmail(BearerTokenAuthentication principal) {
    return principal.getTokenAttributes().get("upn").toString();
}
```
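getPrincipalEmail assumes the token always carries a `upn` claim. If it does not (which may happen for some guest or personal accounts), `get("upn")` returns null and the `toString()` call throws a NullPointerException. Below is a null-safe sketch over a plain claims map, such as the one getTokenAttributes() returns. The class name is hypothetical, and falling back to the `preferred_username` and `email` claims is an assumption about what your tokens contain, so inspect a real token before relying on it.

```java
import java.util.List;
import java.util.Map;
import java.util.Optional;

// Hypothetical null-safe alternative to getPrincipalEmail, operating on a token's claims map.
class PrincipalClaims {
    // Claims tried in order; the fallbacks after "upn" are assumptions, not guaranteed to be present
    private static final List<String> CANDIDATE_CLAIMS = List.of("upn", "preferred_username", "email");

    static Optional<String> emailFrom(Map<String, ?> claims) {
        for (String claim : CANDIDATE_CLAIMS) {
            Object value = claims.get(claim);
            if (value != null && !value.toString().isBlank()) {
                return Optional.of(value.toString());
            }
        }
        return Optional.empty();
    }
}
```

In the controller this could be called as `PrincipalClaims.emailFrom(principal.getTokenAttributes()).orElseThrow()`, which fails with a NoSuchElementException that names the real problem instead of a NullPointerException.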

Creating the Audit REST Controller

For the frontend web application to view the audit records, we must create a controller to find the records from the database and return them in an HTTP response. We use the AuditController class:

```java
package com.matthewcasperson.azureapi.controllers;

import com.matthewcasperson.azureapi.model.Audit;
import com.matthewcasperson.azureapi.repository.AuditRepository;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RestController;

import java.util.List;

@RestController
public class AuditController {

    @Autowired
    AuditRepository auditRepository;

    // Returns every audit record as a JSON array
    @GetMapping(value = "/audit", produces = "application/json")
    public List<Audit> getAuditRecords() {
        return auditRepository.findAll();
    }
}
```

Running the Microservice

To build and run the microservice, we run the following PowerShell commands:

```powershell
$env:CLIENT_SECRET="Application client secret"
$env:CLIENT_ID="Application client ID"
$env:TENANT_ID="Azure AD tenant ID"
$env:API_URL="Application API URI"
$env:DB_NAME="The name of the database"
$env:DB_USERNAME="The database username"
$env:DB_PASSWORD="The database password"
$env:STORAGE_ACCOUNT_NAME="The storage account name"
.\mvnw spring-boot:run
```

Or Bash:

```bash
export CLIENT_SECRET="Application client secret"
export CLIENT_ID="Application client ID"
export TENANT_ID="Azure AD tenant ID"
export API_URL="Application API URI"
export DB_NAME="The name of the database"
export DB_USERNAME="The database username"
export DB_PASSWORD="The database password"
export STORAGE_ACCOUNT_NAME="The storage account name"
./mvnw spring-boot:run
```
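The microservice reads its configuration from the environment variables set above, and uploadFile reads STORAGE_ACCOUNT_NAME directly with System.getenv, so a missing variable may only surface later and in a confusing form, such as a storage endpoint of https://null.blob.core.windows.net. A small pre-flight check can report every missing variable at once. The following is a JDK-only sketch; the class and method names are hypothetical, while the variable names are the ones listed above.

```java
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical pre-flight check for the environment variables this microservice expects.
class RequiredEnv {
    static final List<String> NAMES = List.of(
            "CLIENT_ID", "CLIENT_SECRET", "TENANT_ID", "API_URL",
            "DB_NAME", "DB_USERNAME", "DB_PASSWORD", "STORAGE_ACCOUNT_NAME");

    // Returns the names that are unset or blank in the supplied environment, in declaration order
    static List<String> missing(Map<String, String> env) {
        return NAMES.stream()
                .filter(name -> env.get(name) == null || env.get(name).isBlank())
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<String> missing = missing(System.getenv());
        if (!missing.isEmpty()) {
            System.err.println("Missing environment variables: " + String.join(", ", missing));
            System.exit(1);
        }
        System.out.println("All required environment variables are set");
    }
}
```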

Creating the Frontend Audit Controller

Next, we need to update the frontend web application to read the audit logs generated when the backend microservice uploads files. The AuditController class displays these records.

Here we call the backend microservice, convert the result to a list of Audit records, and pass the records to the view via the audit model attribute:

```java
package com.matthewcasperson.demo.controllers;

import com.matthewcasperson.demo.model.Audit;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.core.ParameterizedTypeReference;
import org.springframework.security.oauth2.client.OAuth2AuthorizedClient;
import org.springframework.security.oauth2.client.annotation.RegisteredOAuth2AuthorizedClient;
import org.springframework.stereotype.Controller;
import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.reactive.function.client.WebClient;
import org.springframework.web.servlet.ModelAndView;

import java.util.ArrayList;
import java.util.List;

import static org.springframework.security.oauth2.client.web.reactive.function.client.ServerOAuth2AuthorizedClientExchangeFilterFunction.oauth2AuthorizedClient;

@Controller
public class AuditController {

    @Autowired
    private WebClient webClient;

    @GetMapping("/audit")
    public ModelAndView audit(
            @RegisteredOAuth2AuthorizedClient("azure-api") OAuth2AuthorizedClient client) {
        List<Audit> auditRecords = getAudit(client);
        ModelAndView mav = new ModelAndView("audit");
        // The audit.html template loops over this "audit" attribute
        mav.addObject("audit", auditRecords);
        return mav;
    }

    private List<Audit> getAudit(OAuth2AuthorizedClient client) {
        try {
            if (null != client) {
                // Call the storage microservice, attaching the "azure-api" access token
                // (the token itself is not logged, as it grants access on the user's behalf)
                return webClient
                        .get()
                        .uri("http://localhost:8082/audit")
                        .attributes(oauth2AuthorizedClient(client))
                        .retrieve()
                        .bodyToMono(new ParameterizedTypeReference<List<Audit>>() {})
                        .block();
            }
        } catch (Exception ex) {
            System.out.println(ex);
        }
        // No authorized client, or the call failed: show an empty table
        return new ArrayList<>();
    }
}
```
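bodyToMono needs a ParameterizedTypeReference here because of type erasure: `List<Audit>.class` is not valid Java, and `List.class` alone would leave the JSON mapper without the element type. Creating an anonymous subclass with `{}` records the full generic type in the subclass’s superclass signature, where reflection can read it back. The JDK-only sketch below shows this "super type token" technique in isolation; `TypeToken` is a hypothetical stand-in, not Spring’s class.

```java
import java.lang.reflect.ParameterizedType;
import java.lang.reflect.Type;
import java.util.List;

// Hypothetical minimal super type token, illustrating the idea behind ParameterizedTypeReference.
abstract class TypeToken<T> {
    private final Type type;

    protected TypeToken() {
        // For an anonymous subclass, the generic superclass is TypeToken<ActualType>; read ActualType back
        ParameterizedType superType = (ParameterizedType) getClass().getGenericSuperclass();
        this.type = superType.getActualTypeArguments()[0];
    }

    Type getType() {
        return type;
    }
}

class TypeTokenDemo {
    public static void main(String[] args) {
        Type type = new TypeToken<List<String>>() {}.getType();
        System.out.println(type.getTypeName()); // java.util.List<java.lang.String>
    }
}
```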

Creating the Frontend Audit Record

The frontend represents the JPA entities returned by the backend as more streamlined objects. Since we don’t need any of the JPA annotations, a Java record (available since Java 16) called Audit provides a lightweight way to represent the audit events:

```java
package com.matthewcasperson.demo.model;

import java.sql.Timestamp;

public record Audit(Long id, String message, Timestamp date) {
}
```

Creating the Frontend Audit Template

The Thymeleaf template called audit.html displays the audit records by looping over each item in the audit model attribute and building a new table row:

```html
<!doctype html>
<html lang="en">
<head>
    <meta charset="utf-8">
    <title>MSAL and Spring Demo</title>
    <link href="bootstrap.min.css" rel="stylesheet">
    <link href="cover.css" rel="stylesheet">
</head>
<body class="text-center">
<div class="cover-container d-flex h-100 p-3 mx-auto flex-column">
    <header class="masthead mb-auto">
        <div class="inner">
            <nav class="nav nav-masthead justify-content-center">
                <a class="nav-link" href="/">Home</a>
                <a class="nav-link" href="/profile">Profile</a>
                <a class="nav-link" href="/events">Events</a>
                <a class="nav-link" href="/upload">Upload</a>
                <a class="nav-link active" href="/audit">Audit</a>
            </nav>
        </div>
    </header>
    <main role="main" class="inner cover">
        <h2>Audit Events</h2>
        <table>
            <tr>
                <td>Message</td>
                <td>Time</td>
            </tr>
            <!-- One row per record in the "audit" model attribute -->
            <tr th:each="auditItem: ${audit}">
                <td th:text="${auditItem.message}"></td>
                <td th:text="${auditItem.date}"></td>
            </tr>
        </table>
    </main>
</div>
<script src="jquery-3.6.0.min.js"></script>
<script src="bootstrap.min.js"></script>
</body>
</html>
```

Running the Frontend Application

To build and run the application, we run the following PowerShell:

```powershell
$env:CLIENT_SECRET="Application client secret"
$env:CLIENT_ID="Application client ID"
$env:TENANT_ID="Azure AD tenant ID"
$env:CALENDAR_SCOPE="The Calendar API ReadCalendar scope e.g. api://2e6853d4-90f2-40d9-a97a-3c40d4f7bf58/ReadCalendar"
$env:AZURE_SCOPE="The Azure API SaveFile scope e.g. api://06bab64f-dc26-4156-9412-720e351259ab/SaveFile"
.\mvnw spring-boot:run
```

Or Bash:

```bash
export CLIENT_SECRET="Application client secret"
export CLIENT_ID="Application client ID"
export TENANT_ID="Azure AD tenant ID"
export CALENDAR_SCOPE="The Calendar API ReadCalendar scope e.g. api://2e6853d4-90f2-40d9-a97a-3c40d4f7bf58/ReadCalendar"
export AZURE_SCOPE="The Azure API SaveFile scope e.g. api://06bab64f-dc26-4156-9412-720e351259ab/SaveFile"
./mvnw spring-boot:run
```

We then open http://localhost:8080/upload, enter a filename, supply the file content, and click the Upload button.

The frontend application calls the backend microservice, uploads the file to Azure storage, and saves a record in the MySQL database.

We can view these audit records by opening http://localhost:8080/audit. The frontend contacts the backend microservice for a list of audit events. The backend returns this information, and the Thymeleaf template displays it by looping over the audit records to build a table.

Next Steps

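After following along with these three tutorials, you have three Spring Boot applications:

- the frontend web application
- the calendar microservice, which calls the Microsoft Graph API
- the storage microservice, which calls the Azure Storage API and records audit entries in MySQL

Azure AD protects each application, and thanks to MSAL, users in our directory get a streamlined login experience across our website and backend services.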

MSAL and Azure AD also streamline authenticating each application with external resource servers. With support for the OAuth on-behalf-of flow, our microservices can interact with external platforms, like Azure and Microsoft 365, as the users who logged into the frontend website. This lets us access user-specific information, such as calendar events, and rely on external security layers instead of implementing custom security in our code.

These features help keep your applications secure and easy to manage as your microservice architecture grows more complex. The examples provided here give you a solid foundation for putting these techniques into practice in your own applications. Use the Microsoft identity platform and MSAL in your next corporate application for manageable, enterprise-level security.

Further Reading