This article walks through the steps to deploy Azure Functions to an Arc-enabled Kubernetes cluster hosted in another cloud service.

It demonstrates how to:

- Provision an App Service Kubernetes Environment

- Deploy Python Functions to an Arc-enabled cluster on Google Cloud

It concludes by demonstrating that the Azure Functions are running in the cloud, but not on Azure itself.

The following steps target PowerShell on Windows but are straightforward to recreate in Bash. They are split into separate commands to make the process easier to follow. In production, they can be consolidated into a single concise script.

Prerequisites

The first article in this series reviewed how to connect a Kubernetes cluster on Google Cloud to Azure Arc. Before deploying Azure Functions, let’s run a few checks to ensure everything is still in place. Remember to substitute any names that you chose previously.

First, confirm the existence of a resource group in your Azure subscription called arc-rg.

Note: While in preview, resource groups for Azure Arc must be in either West Europe or East US.

Next, confirm the registration of the Kubernetes providers:

az provider show -n Microsoft.Kubernetes --query registrationState -o tsv

az provider show --namespace Microsoft.KubernetesConfiguration --query registrationState -o tsv

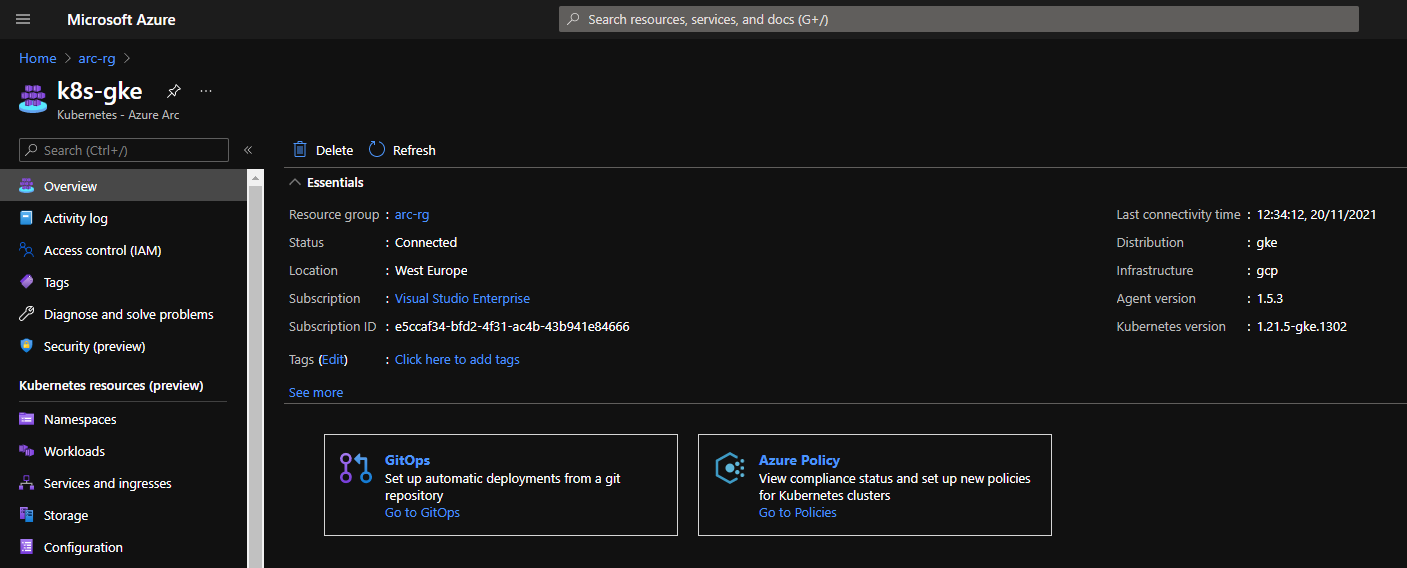

Then confirm that the connected Azure Arc resource named k8s-gke exists in the resource group.

Additionally, ensure that you have a kubeconfig entry to authenticate with the GKE cluster.

Next, check that the azure-arc namespace has a status of Active:

kubectl get namespace

Verify that the Azure Arc Kubernetes agents are deployed. They should all be running:

kubectl get pods -n azure-arc
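
If we want to automate this check rather than read the table by eye, one option is to ask kubectl for JSON (kubectl get pods -n azure-arc -o json) and inspect each pod's phase. The following is a minimal sketch under that assumption; the function name pods_not_running is our own and is not part of any Azure or Kubernetes tooling.

```python
import json
from typing import List


def pods_not_running(pods_json: str) -> List[str]:
    """Return the names of pods whose phase is anything other than Running.

    pods_json is assumed to be the text printed by
    `kubectl get pods -n azure-arc -o json`.
    """
    items = json.loads(pods_json).get("items", [])
    return [
        item["metadata"]["name"]
        for item in items
        if item.get("status", {}).get("phase") != "Running"
    ]
```

An empty list means every pod reports Running. Note that this simple check would also flag pods from completed jobs, whose phase is Succeeded, so treat the result as a prompt to look closer rather than a definitive failure.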

Afterward, validate the connection with the following command:

az connectedk8s show --resource-group arc-rg --name k8s-gke

This should display the provisioningState property as Succeeded.

Finally, check that the connected Kubernetes cluster appears as a resource in the resource group in the Azure portal:

Step 1: Provision an App Service Kubernetes Environment

You are free to use any resource names that suit your preference. (You will also need to set your Azure subscription ID.) However, for the sake of simplicity, this article uses the following names:

- k8s-gke: Azure Arc resource

- arc-rg: Azure resource group for Azure Arc

- arc-app-service: Kubernetes App Service extension

- arc-ns: App namespace

- arc-app-custom-location: custom location

Log in to Azure

For deployment pipelines, it can be helpful to connect Kubernetes clusters to Azure Arc using service principals with limited-privilege role assignments. You can find instructions for this here.

For this demo, we log in to our Azure account:

az login

az account set -s "<SUBSCRIPTION_ID>"

Add or Update the Prerequisites for Custom Location

Add or update the prerequisites for the custom location as follows:

az provider register --namespace Microsoft.ExtendedLocation --wait

az extension add --upgrade -n customlocation

Enable the Feature on the Cluster

Enable the custom location feature as follows:

az connectedk8s enable-features -n k8s-gke -g arc-rg --features custom-locations

This also enables the cluster-connect feature.

Install the app service extension in the Azure Arc-connected cluster as follows:

az k8s-extension create -g "arc-rg" --name "arc-app-service" `

--cluster-type connectedClusters -c "k8s-gke" `

--extension-type 'Microsoft.Web.Appservice' --release-train stable --auto-upgrade-minor-version true `

--scope cluster --release-namespace "arc-ns" `

--configuration-settings "Microsoft.CustomLocation.ServiceAccount=default" `

--configuration-settings "appsNamespace=arc-ns" `

--configuration-settings "clusterName=arc-app-service" `

--configuration-settings "keda.enabled=true" `

--configuration-settings "buildService.storageClassName=standard" `

--configuration-settings "buildService.storageAccessMode=ReadWriteOnce" `

--configuration-settings "customConfigMap=arc-ns/kube-environment-config"

If we want Log Analytics enabled in production, we must add it now following the additional configuration shown here. We will not be able to do this later.

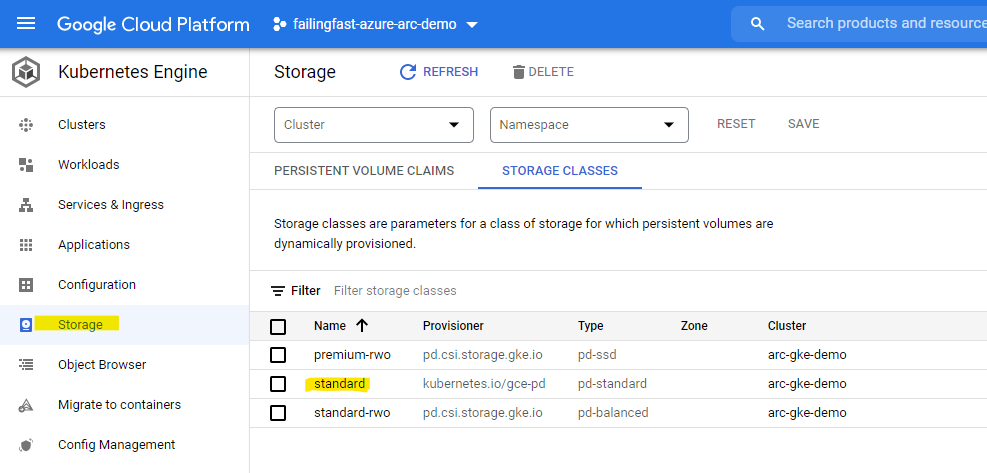

Two of these parameters concern persistent storage and need careful consideration. You can learn more about Kubernetes persistent volumes, including a breakdown of the access modes available on the storage resources of all major cloud service providers, in the official Kubernetes documentation. In this demo, we’re hosting our cluster on Google Compute Engine (GCE), which provides GCEPersistentDisk. This supports the following PersistentVolume (PV) access modes for Kubernetes:

- ReadWriteOnce

- ReadOnlyMany

The AppService extension supports:

- ReadWriteOnce

- ReadWriteMany

If we don’t explicitly set the AppService extension to ReadWriteOnce, it defaults to ReadWriteMany. The PersistentVolumeClaim (PVC), which is the AppService extension's request for storage, would then ask for an access mode that the PVs available in GCE do not support.
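
The reasoning above comes down to a set intersection, which the following minimal sketch spells out. The access mode names are taken from the lists in this article; the constant and function names are our own and purely illustrative.

```python
# Access modes as listed in this article.
GCE_PD_MODES = {"ReadWriteOnce", "ReadOnlyMany"}
APP_SERVICE_MODES = {"ReadWriteOnce", "ReadWriteMany"}
EXTENSION_DEFAULT = "ReadWriteMany"


def usable_access_modes(storage_modes, extension_modes):
    """Modes that both the storage backend and the extension support."""
    return storage_modes & extension_modes


def claim_can_bind(requested_mode, storage_modes):
    """A claim can only be satisfied if the backend offers the requested mode."""
    return requested_mode in storage_modes
```

Here the only mode common to both sides is ReadWriteOnce, and the extension's default of ReadWriteMany is not offered by GCEPersistentDisk, which is why the command sets buildService.storageAccessMode explicitly.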

We must also set buildService.storageClassName to a storage class that exists on the GCP cluster. This demo uses standard.

The command can take a few minutes. If there are any errors during this process, check the troubleshooting documentation.

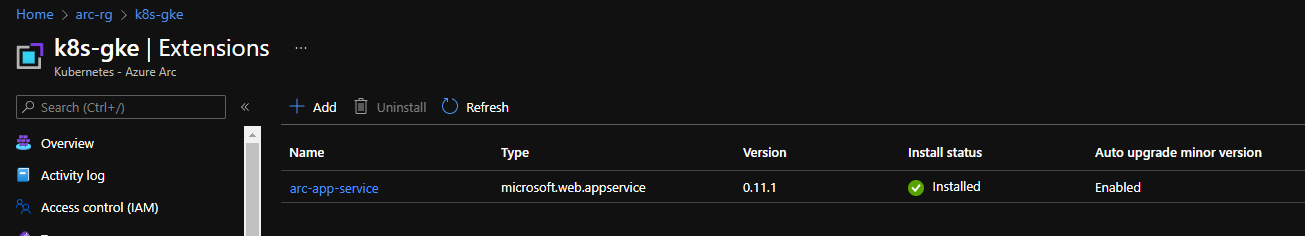

Once the command completes, navigate to the Kubernetes Azure Arc resource in Azure Portal and click Extensions on the sidebar. You should see the arc-app-service extension listed as Installed.

Create the Custom Location

We must set up a custom location to enable the deployment of Azure Functions to an Arc-enabled Kubernetes cluster. We looked at these in the previous article.

First, get the id property of the App Service extension:

$extensionId=(az k8s-extension show --name arc-app-service --cluster-type connectedClusters -c k8s-gke -g arc-rg --query id -o tsv)

Then, get the id property of the Azure Arc-connected cluster:

$connectedClusterId=(az connectedk8s show -n k8s-gke -g arc-rg --query id -o tsv)

Use these values to create the custom location:

az customlocation create `

--resource-group arc-rg `

--name arc-app-custom-location `

--host-resource-id $connectedClusterId `

--namespace arc-ns `

--cluster-extension-ids $extensionId

When this completes, validate it with the following command:

az customlocation show --resource-group arc-rg --name arc-app-custom-location --query provisioningState -o tsv

The output should show as Succeeded. If not, wait a minute and run it again.
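
In a script, the "wait a minute and run it again" step can be expressed as a small polling loop. The sketch below is one way to do it, not an official helper: wait_for_state and its parameters are hypothetical names, and fetch_state stands for any function we supply that returns the current provisioning state as text (for example, by running the az customlocation show command above and returning its output).

```python
import time
from typing import Callable


def wait_for_state(
    fetch_state: Callable[[], str],
    wanted: str = "Succeeded",
    attempts: int = 5,
    delay_seconds: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll fetch_state until it returns the wanted state or attempts run out."""
    for attempt in range(attempts):
        # Strip whitespace because command-line output usually ends with a newline.
        if fetch_state().strip() == wanted:
            return True
        # Only pause when another attempt will follow.
        if attempt < attempts - 1:
            sleep(delay_seconds)
    return False
```

The sleep function is passed in as a parameter so that the loop can be exercised without real delays. The same helper could be reused for the App Service Kubernetes environment check later in this article.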

Create the App Service Kubernetes Environment

First, get the custom location id property:

$customLocationId=$(az customlocation show `

--resource-group arc-rg `

--name arc-app-custom-location `

--query id `

--output tsv)

Use this id to create the App Service Kubernetes environment:

az appservice kube create `

--resource-group arc-rg `

--name k8s-gke `

--custom-location $customLocationId

Then, check that the provisioning state is set to Succeeded:

az appservice kube show --resource-group arc-rg --name k8s-gke

Step 2: Deploy the Python Azure Function App

Now we are going to deploy two Python functions: one with a Blob storage trigger and one with an HTTP trigger.

Blob Storage Trigger

When a new file (blob) is uploaded to a blob container hosted on Azure, our function (hosted on Google Cloud) compares the MD5 hash of the newly uploaded file against the hashes of the files already in the container. That is, it looks for duplicates. It then deletes any copies of the new file that it finds. Later, we’ll prove the function is running on Google Cloud by checking the blob storage audit logs.
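
To make the duplicate check concrete, here is a minimal, storage-free sketch of the same idea. It works on in-memory bytes instead of Azure blobs, and the names content_md5 and find_duplicates are our own. It assumes the hash is handled as a base64-encoded MD5 digest, which is how the Content-MD5 value is conventionally encoded.

```python
import base64
import hashlib
from typing import Dict, List


def content_md5(data: bytes) -> str:
    """Return the base64-encoded MD5 digest of data."""
    return base64.b64encode(hashlib.md5(data).digest()).decode("ascii")


def find_duplicates(new_name: str, new_data: bytes, existing: Dict[str, bytes]) -> List[str]:
    """Return the names of existing files whose content matches the new file.

    The new file itself is skipped, since it is already in the container
    by the time the trigger fires.
    """
    new_hash = content_md5(new_data)
    return sorted(
        name
        for name, data in existing.items()
        if name != new_name and content_md5(data) == new_hash
    )
```

The deployed function does not need to hash anything itself, because it reads the MD5 values that the storage service already holds for each blob.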

HTTP Trigger

This simple function displays the IP address of the host on which it is running so that we can prove it is running on Google Cloud.

If you are not already familiar with creating Azure Functions in Python, read through the guides in the official documentation. These guides contain instructions for installing and updating all the prerequisites for Python Azure Function development, including the Azure Function Core Tools. They also provide guidance for testing a function locally.

Set up an Azure Storage Account

To maintain simplicity, we create the container we will monitor for new files in the same storage account as our function. The first thing to do is make that storage account:

az storage account create --name arcdemofunctionstorage --location westeurope --resource-group arc-rg --sku Standard_LRS

Now, we create a container in the storage account named demo-container.

Create the Function App Python Project

If you have not yet created a new Python project, follow the guidance in the official documentation and copy the source code from this demonstration’s GitHub repository into the project.

Install the modules in requirements.txt.

Then copy the storage account’s connection string into the local.settings.json entry for AzureWebJobsStorage. This allows the function app to run locally.
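
Editing the file by hand is fine for a demo. For a repeatable setup, the same change can be scripted. The sketch below assumes the usual local.settings.json layout, in which settings live under a top-level Values object; the function name set_storage_connection is hypothetical.

```python
import json
from pathlib import Path


def set_storage_connection(settings_path, connection_string: str) -> dict:
    """Write connection_string into Values.AzureWebJobsStorage and return the settings."""
    path = Path(settings_path)
    if path.exists():
        settings = json.loads(path.read_text(encoding="utf-8"))
    else:
        # Start from a minimal file if none exists yet.
        settings = {"IsEncrypted": False}
    # Keep any other values that are already present.
    settings.setdefault("Values", {})["AzureWebJobsStorage"] = connection_string
    path.write_text(json.dumps(settings, indent=2), encoding="utf-8")
    return settings
```

Because local.settings.json then contains a secret, it should stay out of source control.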

Create the Function App in Azure

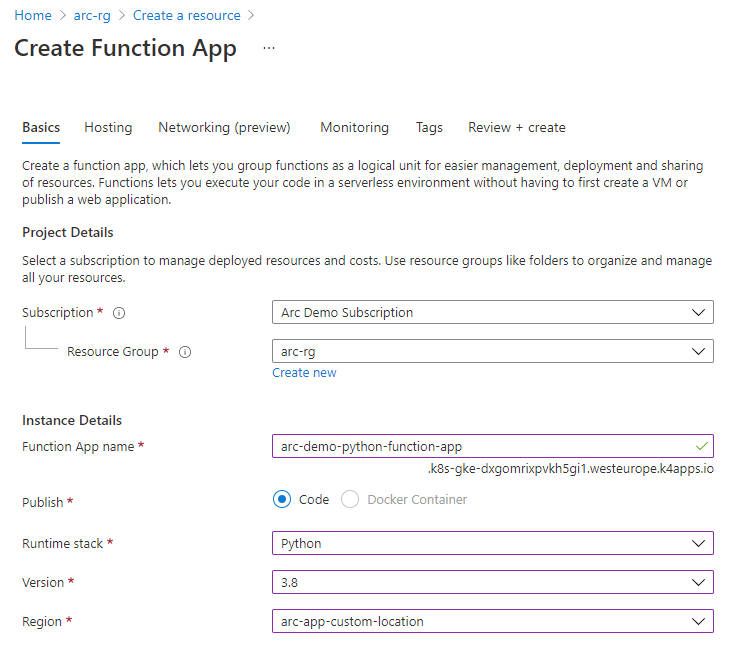

We will now use the Azure Portal to create a new function app. This will enable us to connect our storage account.

The only modification we must make to our usual function-building process applies to the region. We must select the custom location option instead of a regular region name.

Then we switch to the Hosting tab to select the storage account we made earlier. When Azure finishes provisioning, we go to the new function app and add a new application setting under Configuration named CONTAINER_NAME. Any value will do. In this demo, we set it to Arc.

Note: At the time of writing, there is a bug affecting Python libraries. The workaround is to add an application setting called CONTAINER_NAME and assign it any value. This bug also requires us to add the --build-native-deps argument when deploying our function app.

Deploy our Function

Deploying to our Google Cloud Kubernetes cluster is no different from deploying to a function app hosted natively in Azure. Here we use the Azure Function Core Tools:

func azure functionapp publish arc-demo-python-function-app --build-native-deps

The only difference is that for the time being, we must use the --build-native-deps argument.

At the time of writing, there is also a bug that forces us to delete and recreate the Function App in Azure each time we redeploy. It is not yet known whether this issue is limited to Python and GKE.

Project Summary

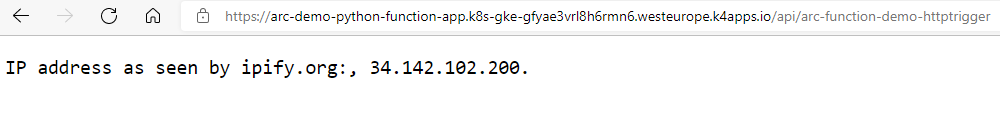

When we visit our Function App URL, https://{BASE_URL}/api/arc-function-demo-httptrigger, our HTTP-triggered function is executed:

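import azure.functions as func
from requests import get

def main(req: func.HttpRequest) -> func.HttpResponse:
    # Ask ipify.org for the public IP address of the host running this function.
    ip = get('https://api.ipify.org').text
    return func.HttpResponse(f"IP address as seen by ipify.org: {ip}.")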

This makes a call to ipify.org to get the function host’s IP address and returns it to our browser:

To trigger our blob function:

- Upload a file to the container named demo-container in our storage account.

- Upload another exact copy of that file to the container.

Our function automatically deletes the first file.

import base64
import logging
import os

import azure.functions as func
from azure.storage.blob import BlobServiceClient

def main(myblob: func.InputStream):
    connection_string = os.getenv("AzureWebJobsStorage")
    service_client = BlobServiceClient.from_connection_string(connection_string)
    client = service_client.get_container_client("demo-container")
    # Decode the new blob's MD5 once so it can be compared with each existing blob.
    newfile_md5 = base64.b64decode(myblob.blob_properties.get('ContentMD5'))
    for blob in client.list_blobs():
        existing_md5 = blob.content_settings.content_md5
        # Skip the new blob itself and delete an older blob with the same content hash.
        if f"{blob.container}/{blob.name}" != myblob.name and existing_md5 == newfile_md5:
            logging.info(f"Blob {myblob.name} already exists as {blob.name}. Deleting {blob.name}.")
            client.delete_blob(blob.name)
            return

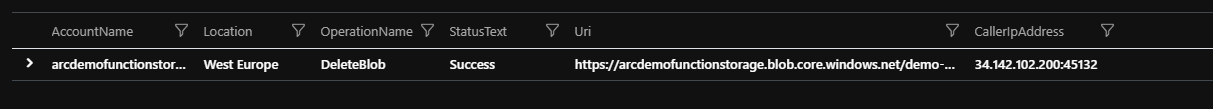

Then, if we check the audit logs in Azure Portal, we can see the delete operation:

The operation uses the same IP address as the HTTP trigger.

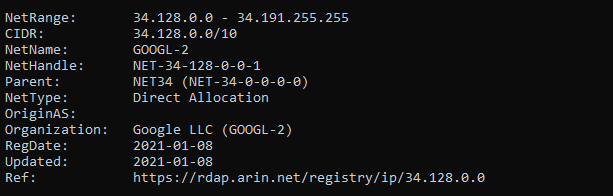

Then, we can look up that IP address:

This is definitive proof that our functions are both running in Google Cloud!
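
The IP lookup can also be done in code. Google publishes the address ranges used by Google Cloud, so one approach is to test whether the address reported by our functions falls inside any of those ranges. The sketch below shows only the membership test, using Python's standard ipaddress module. The function name matching_prefix is our own, and fetching the published ranges is left out.

```python
import ipaddress
from typing import Iterable, Optional


def matching_prefix(ip: str, prefixes: Iterable[str]) -> Optional[str]:
    """Return the first CIDR prefix that contains ip, or None if there is none."""
    address = ipaddress.ip_address(ip)
    for prefix in prefixes:
        network = ipaddress.ip_network(prefix)
        # Only compare addresses and networks of the same IP version.
        if address.version == network.version and address in network:
            return prefix
    return None
```

For example, matching_prefix("203.0.113.7", ["203.0.113.0/24"]) returns the prefix, while an address outside every listed range returns None. The addresses in this example are reserved documentation ranges, not real Google Cloud ranges.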

Next Steps

Continue along to the next article in this series to learn how to deploy an Azure App Service web app written in Java to an Arc-hosted Kubernetes cluster hosted in another cloud service.

To learn how to manage, govern, and secure your Kubernetes clusters across on-premises, edge, and cloud environments with Azure Arc Preview, check out the Azure webinar series Manage Kubernetes Anywhere with Azure Arc Preview.