Brief foreword

This is my second attempt to implement a basic compile time numerical integration methodology. In the first version of this article integrations were done before main(), but not really at compile time. I want to thank all the constructive comments and feedback I have received so far. I hope this time everything will work out...

Introduction

Since the arrival of C++11, it is possible to carry out far from trivial calculations at compile time. As a sample, in this article I will show how to calculate the area under any real function between arbitrary boundaries. I will use standard and widespread algorithms, like trapezoidal rule and Simpson’s rule.

Computing at compile time means that the result of the operation is known by the time the process is loaded in memory, far before entering the main function.

The magic of the main function

Have a look at this code (only valid since C++11):

#include <cmath>

#include <iostream>

#include "simpsons_rule.h"

constexpr double PI=4.0*atan(1.0);

struct my_function

{

constexpr static double f(double x) {return cos(x);}

};

struct left_boundary

{

constexpr static double value=0.0;

};

struct right_boundary

{

constexpr static double value=PI/2.0;

};

int main(int argn, char *argc[])

{

constexpr double partition_size=100;

constexpr double simpsons_rule_result=

simpsons_rule<

my_function,

left_boundary,

right_boundary,

partition_size

>::result;

std::cout << "Simpson's rule: " << simpsons_rule_result << std::endl;

static_assert(fabs(simpsons_rule_result-1.0)<0.001, "Failure");

return 0;

}

The program above simply displays the value stored in a static constant expression (constexpr) result, belonging to the class simpsons_rule<>. This value is evaluated at compile time and stored in memory before main() is accessed. result stores the integral of cos(x) (function my_function::f) between 0.0 (left_boundary::value) and PI/2.0 (right_boundary::value). The exact result is 1. The displayed result is also 1. Thus, the approximation is almost perfect in this case (think about round-off errors).

If you now change my_function::f, left_boundary::value or right_boundary::value and recompile, you will get a different value for result.

So this is the crux and the magic of the matter: at runtime no computations are done; the only performed task is displaying the result. To see that, just change expression [1] like this:

static_assert(fabs(simpsons_rule_result-1.0)>0.001, "Failure");

If you now try to recompile, compilation will fail because the integration error is actually less than 0.001.

Now it is time to see the guts, but instead of diving into trapezoidal or Simpson’s rules implementations, it is much more illustrative to show a simpler (but analogous) case: the factorial.

Implementing the factorial (1)

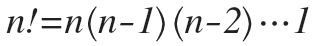

To compute the factorial of an integer n (noted as n!), just apply the following formula:

The following code implements the factorial computation at compile time (only valid since C++11):

#ifndef FACTORIAL_H

#define FACTORIAL_H

#include <cstddef>

template <size_t N>

struct factorial

{

constexpr static size_t result=N*factorial<N-1>::result;;

};

template <>

struct factorial<1>

{

constexpr static size_t result=1;

};

#endif

The code above declares a class template named factorial<> [1] and a specialization factorial<1> [2]. In [1] the static constexpr result is defined for factorial<>. In [2] the static constexpr result is defined for factorial<1>.

Now suppose you write this sentence in the main function:

std::cout << factorial<5>::result << std::endl;

As you can see in [1], the compiler resolves factorial<5>::result as:

factorial<5>::result = 5 * factorial<4>::result

But also in [1] factorial<4>::result is resolved as:

factorial<4>::result = 4 * factorial<3>::result

Following this reasoning in a recursive way we get:

factorial<5>::result = 5 * 4 * 3 * 2 * factorial<1>::result

factorial<1>::result is resolved in [2] as 1. We get then:

factorial<5>::result = 5 * 4 * 3 * 2 * 1

The compiler finally carries out the multiplication above, and factorial<5>::result is resolved at compile time as 120.

Implementing the factorial (2)

The following code is a not so known alternative (only valid since C++11):

#ifndef FACTORIAL_H

#define FACTORIAL_H

#include <cstddef>

template <size_t N, size_t M=N+1, size_t... Indices>

struct factorial : public factorial<N, M-1, M-1, Indices...>

{};

template <size_t N, size_t First, size_t... Rest>

struct factorial<N, 1, First, Rest...>

{

constexpr static size_t result=

First*factorial<N, 1, Rest...>::result;

};

template <size_t N>

struct factorial<N, 1>

{

constexpr static size_t result=1;

};

#endif

How does the compiler resolve factorial<5>::result in this case? If you have a look at [1], you will see that factorial<5> can be resolved into factorial<5, 5, 5>, being Indices… an empty pack. Without taking your eyes off [1], you can follow the chain:

factorial<5> -> factorial<5, 5, 5>, with Indices... empty.

factorial<5, 5, 5> -> factorial<5, 4, 4, 5>, with Indices... = {5}.

factorial<5, 4, 4, 5> -> factorial<5, 3, 3, 4, 5>, with Indices... = {4, 5}.

factorial<5, 3, 3, 4, 5> -> factorial<5, 2, 2, 3, 4, 5>, with Indices... = {3, 4, 5}.

factorial<5, 2, 2, 3, 4, 5> -> factorial<5, 1, 1, 2, 3, 4, 5>, with Indices... = {2, 3, 4, 5}.

Once the compiler gets factorial<5, 1, 1, 2, 3, 4, 5>, it can be resolved by the specialization at [2]. It becomes then factorial<5, 1, First, Rest…>, being First=1 and Rest… the pack: { 2, 3, 4, 5}.

The technique above is very interesting because it allows us to get a pack of indices for the operands involved in our computation. In the case of the factorial, indices and operands (numbers to be multiplied) are the same, but this is just a particular case.

Observe that the expresion factorial<N, 1, Rest…>::result, inside [2], is evaluated at [2] itself.

The termination condition is reached when Rest… is empty. Such case is implemented in [3].

After following the previous steps, the compiler finally resolves factorial<5>::result as 120. As you can imagine, both methodologies come together to the same binary output.

An esoteric Fibonacci sequence

As another illustrative example, you can use the technique above to compute an element in a Fibonacci sequence. Be indulgent with me if it looks weird to your eyes:

#ifndef FIBONACCI_H

#define FIBONACCI_H

#include <cstddef>

template <

size_t SequenceSize,

size_t CurrentSequenceSize = 0,

size_t M = 1,

size_t N = 0,

size_t... Series

>

struct fibonacci :

public fibonacci<SequenceSize, CurrentSequenceSize + 1, M + N, M, N, Series...>

{};

template <size_t SequenceSize, size_t M, size_t N, size_t First, size_t... Rest>

struct fibonacci<SequenceSize, SequenceSize, M, N, First, Rest...>

{

constexpr static size_t value=First;

};

#endif

If you type (for instance) fibonacci<5>::value in the main function, it will be resolved as 3 (the fifth element in the series). I agree this is not the most intuitive way to compute a Fibonacci sequence, of course.

The trapezoidal rule

Observe that factorial of n can be written schematically as:

where f(x)=x and {i1, i2,…, in} is a collection of indices with values ranging from 1 to n.

The trapezoidal rule can be arranged into a similar pattern:

where:

In the code below, summation<> class template carries out the summation of the g()’s:

#ifndef TRAPEZOIDAL_RULE_H

#define TRAPEZOIDAL_RULE_H

#include <cstddef>

template <typename F, typename A, typename B, size_t N, size_t I=N-1>

struct summation

{

constexpr static double result=

F::f(A::value+I*(B::value-A::value)/N)+summation<F, A, B, N, I-1>::result;

};

template <typename F, typename A, typename B, size_t N>

struct summation<F, A, B, N, 0>

{

constexpr static double result=0.0;

};

template <typename F, typename A, typename B, size_t N>

struct trapezoidal_rule

{

constexpr static double result=

(B::value-A::value)/N*(0.5*(F::f(A::value)+F::f(B::value))+summation<F, A, B, N>::result);

};

#endif

The code above bears resemblance to the first implementation of factorial. If you look for resemblance to the second implementation, here you are the code:

#ifndef TRAPEZOIDAL_RULE_2_H

#define TRAPEZOIDAL_RULE_2_H

#include <cstddef>

template <typename F, typename A, typename B, size_t N, size_t M=N, size_t... Indices>

struct summation_ : public summation_<F, A, B, N, M-1, M-1, Indices...>

{};

template <typename F, typename A, typename B, size_t N, size_t First, size_t... Rest>

struct summation_<F, A, B, N, 1, First, Rest...>

{

constexpr static double result=

F::f(A::value+First*(B::value-A::value)/N)+summation_<F, A, B, N, 1, Rest...>::result;

};

template <typename F, typename A, typename B, size_t N>

struct summation_<F, A, B, N, 1>

{

constexpr static double result=0.0;

};

template <typename F, typename A, typename B, size_t N>

struct trapezoidal_rule_2

{

constexpr static double result=

(B::value-A::value)/N*(0.5*(F::f(A::value)+F::f(B::value))+summation_<F, A, B, N>::result);

};

#endif

Once we have understood both factorial implementations, I think these two last pieces of code are clear, well commented and self-explanatory.

The implementation for Simpson’s rule (a more precise integration method) follows the same ideas but it is a little more verbose, because it arises from a bit more complicated formula. You can find it in the enclosed zip.

Source code

The trapezoidal and Simpson’s rules implementation code has been attached, along with a main sample code. The code has been written with Code::Blocks and compiled with MinGw gcc (v.4.8.1-4).

References

- Trapezoidal rule. (http://en.wikipedia.org/wiki/Trapezoidal_rule).

- Simpson’s rule. (http://en.wikipedia.org/wiki/Simpson's_rule).

- Compile time function execution. (http://en.wikipedia.org/wiki/Compile_time_function_execution).

- Constexpr - Generalized Constant Expressions in C++11. (http://www.cprogramming.com/c++11/c++11-compile-time-processing-with-constexpr.html).

- “Unpacking” a tuple to call a matching function pointer. (http://stackoverflow.com/questions/7858817/unpacking-a-tuple-to-call-a-matching-function-pointer).