Thank you for nominating me for article of the month.

Introduction

This is the first in a series of articles I hope to write about parallelism and distribution of computationally intensive applications.

In this article, I present a number of techniques available to .NET developers for parallelising Monte Carlo routines on a single machine. We learn that whilst TPL has its benefits, it is also difficult to write efficient routines that outperform Threadpooling and mixed C#/C++ implementations.

Background

Briefly, a Monte Carlo routine is any iterative routine that uses random number generation in order to calculate a result whose estimated accuracy increases per iteration. On top of that, because each iterative result is independent, we can disperse the computation to any number of locations and then aggregate our results later on.

Confused? Then go here for a decent description.

For the purposes of this article, I've used the version of this algorithm presented in Numerical Recipes in C by Press, Flannery, Teukolsky and Vetterling pages 239-240. Where the routine is designed to find the weight and centre of mass of a section of torus with varying density. In plain English: we're chopping up a lumpy doughnut and finding the centre of mass of single chunk.

Using the Code

The attached solution should compile and run within Visual Studio Express for Windows Desktop 2012 which you should build in Release mode. It includes 4 projects:

Montecarlo - containing just .NET codeComponentWin32 - a native C++ DLL with static exportsComponentCLR - a C++/CLR assembly that can be referenced by any other .NET assemblyCppConsole - a simple runner for the native Monte Carlo implementation

Points of Interest

There are several techniques demonstrated, which I've developed and tested on my 4 core desktop machine:

- Pure C# on a single thread which we shall use as our benchmark for accuracy

- Native C++ on a single thread as our benchmark for speed

- A mixed C#/C++ version to demonstrate one method of integration

- Single threaded PInvoke version where we create an unmanaged object and call methods on it

- A

Threadpool version where the work is split up, executed by pooled threads and aggregated afterwards - TPL

Parallel.For methods where we improve the performance and accuracy of the result with each evolution - Threadpooled and Tasked PInvoke where we attempt to use the fastest execution method with .NET parallelisation.

Single Threaded C#

Starting off with a single threaded approach in C#, we can rapidly implement our basic routine and use it to validate the results of any consequent evolution.

public void Calculate(ref ResultsdotNet results)

{

double w,x,y,s,z,dw,dx,dy,dz,sw,swx,swy,swz, varw,varx,vary,varz,ss,vol;

w=x=y=s=z=dw=dx=dy=dz=sw=swx=swy=swz=varw=varx=vary=varz= 0.0;

ss=0.2*(Math.Exp(5.0)-Math.Exp(-5.0));

vol=3.0*7.0*ss;

long count = 0;

long n = results.Iterations;

for(int j=1; j<= n; j++)

{

x=1.0+3.0* r.NextDouble();

y=(-3.0)+7.0* r.NextDouble();

s = 0.00135 + ss * r.NextDouble();

z=0.2*Math.Log(5.0*s);

double b = Math.Sqrt((x * x ) + (y * y) ) - 3.0;

double a = (z * z) + ( b * b);

if (a < 1.0)

{

sw += 1.0;

swx += x;

swy += y;

swz += z;

varw = 1.0;

varx += x * x;

vary += y * y;

varz += z * z;

}

count++;

}

results.W = vol * sw / n;

results.X = vol * swx/n;

results.Y = vol * swy/n;

results.Z = vol * swz/n;

results.dW = vol * Math.Sqrt((varw / n - Math.Pow((sw /n), 2.0)) / n);

results.dX = vol * Math.Sqrt((varx / n - Math.Pow((swx / n), 2.0)) / n);

results.dY = vol * Math.Sqrt((vary / n - Math.Pow((swy / n), 2.0)) / n);

results.dZ = vol * Math.Sqrt((varz / n - Math.Pow((swz / n), 2.0)) / n);

}

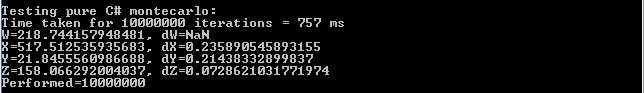

Running this in Release mode for 10 million iterations gives:

We know we can make this faster, but so long as any other approach gives us similar numbers, then we know we are on the right track.

Single Threaded Native C++

Let's try the same thing in C++ and see how that compares.

vector<double> TorusMontecarlo::Calculate(int n)

{

double w,x,y,s,z,dw,dx,dy,dz,sw,swx,swy,swz, varw,varx,vary,varz,ss,vol;

w=x=y=s=z=dw=dx=dy=dz=sw=swx=swy=swz=varw=varx=vary=varz= 0.0;

ss=0.2*(exp(5.0)-exp(-5.0));

vol=3.0*7.0*ss;

for(int j=1; j<= n; j++)

{

x=1.0+3.0* RandomNumbers::ran3(&idum);

y=(-3.0)+7.0* RandomNumbers::ran3(&idum);

s=0.00135+ss* RandomNumbers::ran3(&idum);

z=0.2*log(5.0*s);

if (SQR(z) + SQR( sqrt(SQR(x)+SQR(y)) -3.0) < 1.0)

{

sw += 1.0;

swx+= x;

swy+= y;

swz += z;

varw = 1.0;

varx += SQR(x);

vary += SQR(y);

varz += SQR(z);

}

}

w = vol * sw/n;

x = vol * swx/n;

y = vol * swy/n;

z = vol * swz/n;

dw=vol*sqrt((varw/n-SQR(sw/n))/n);

dx=vol*sqrt((varx/n-SQR(swx/n))/n);

dy=vol*sqrt((vary/n-SQR(swy/n))/n);

dz=vol*sqrt((varz/n-SQR(swz/n))/n);

double res [] = {w,dw,x,dx,y,dy,z,dz};

vector<double> result (res, res + sizeof(res)/ sizeof(double) );

return result;

}

Without much knowledge of how to optimise for MS C++ compiler and using a rather primitive stopwatch implementation, we get similar accuracy for about 2/3 the time:

Obviously, C++ is not everyone's cup of tea and developing full applications rather than just libraries is probably ill advised for business applications. So let's see how we do when mixing C++ with the managed world.

Mixed C# and C++

Let's flip the /clr switch on the compiler, add some supporting classes and call this routine (implemented in the ComponentCLR.dll), from C#.

Not so impressive, but still beats our pure C# implementation.

What if we expose our native Win32 C++ implementation and invoke it statically from C# via PInvoke?

Our unmanaged object performs as well as our native implementation, so why bother with managed C++?

C# Threadpool

From .NET 1.1 onwards, C# developers have been able to take advantage of the Threadpool to parallelise work without having to manually instantiate threads. But to do so optimally, we need to figure out the number of cores on a particular machine and split the work to be done equally among them. Once work has been sent to the threadpool and kicked off, the parent thread needs to wait until each batch signals that it has completed.

Something like the below should do the trick:

public ResultsdotNet CalculateThreadpool(ResultsdotNet total)

{

ManualResetEvent[] events = new ManualResetEvent[Environment.ProcessorCount];

List<ResultsdotNet> results = new List<ResultsdotNet>();

for (int i = 1; i <= Environment.ProcessorCount; i++)

{

ResultsdotNet batchResult = new ResultsdotNet()

{ Iterations = total.Iterations / Environment.ProcessorCount,

ManualResetEvent = new ManualResetEvent(false) };

results.Add(batchResult);

events[i - 1] = batchResult.ManualResetEvent;

ThreadPool.QueueUserWorkItem(new WaitCallback(CalculateCallback), batchResult);

}

WaitHandle.WaitAll(events);

foreach (ResultsdotNet batchResult in results)

{

total.dW += batchResult.dW;

total.dX += batchResult.dX;

total.dY += batchResult.dY;

total.dZ += batchResult.dZ;

total.W += batchResult.W;

total.X += batchResult.X;

total.Y += batchResult.Y;

total.Z += batchResult.Z;

total.Iterations += batchResult.Iterations;

}

total.dW = total.dW / results.Count;

total.dX = total.dX / results.Count;

total.dY = total.dY / results.Count;

total.dZ = total.dZ / results.Count;

total.W = total.W / results.Count;

total.X = total.X / results.Count;

total.Y = total.Y / results.Count;

total.Z = total.Z / results.Count;

return total;

}

Not a vast amount of sophisticated code, but could certainly be more elegant using TPL. When we run it purely in C# for a total of 10 million iterations spread equally among the CPU cores, we get:

This is almost 4 times faster than single threaded. We can assume that minus some minor overhead from task switching monte carlo routines will scale very well indeed!

C# Task Parallel Library

Let's try a TPL and specifically the Parallel.For methods to see if we can get good performance and more elegant code. For example, you might expect the following to be as good if not better than using the Threadpool, it is newer after all:

public void CalculateParallel(ref ResultsdotNet results)

{

double w, x, y, s, z, dw, dx, dy, dz, swx, swy, swz,

varw, varx, vary, varz, ss, vol, sw;

w = x = y = s = z = dw = dx = dy = sw = dz = swx =

swy = swz = varw = varx = vary = varz = 0.0;

ss = 0.2 * (Math.Exp(5.0) - Math.Exp(-5.0));

vol = 3.0 * 7.0 * ss;

long count = 0;

long n = results.Iterations;

Parallel.For( 0, n, f =>

{

Random r = new Random(Environment.TickCount);

x = 1.0 + 3.0 * r.NextDouble();

y = (-3.0) + 7.0 * r.NextDouble();

s = 0.00135 + ss * r.NextDouble();

z = 0.2 * Math.Log(5.0 * s);

double b = Math.Sqrt((x * x) + (y * y)) - 3.0;

double a = Math.Pow(z, 2.0) + (b * b);

if (a < 1.0)

{

sw += 1;

swx += x;

swy += y;

swz += z;

varw = 1.0;

varx += Math.Pow(x, 2.0);

vary += Math.Pow(y, 2.0);

varz += Math.Pow(z, 2.0);

}

Interlocked.Increment(ref count);

});

results.W = vol * sw / n;

results.X = vol * swx / n;

results.Y = vol * swy / n;

results.Z = vol * swz / n;

results.dW = vol * Math.Sqrt((varw / n - Math.Pow((sw / n), 2.0)) / n);

results.dX = vol * Math.Sqrt((varx / n - Math.Pow((swx / n), 2.0)) / n);

results.dY = vol * Math.Sqrt((vary / n - Math.Pow((swy / n), 2.0)) / n);

results.dZ = vol * Math.Sqrt((varz / n - Math.Pow((swz / n), 2.0)) / n);

}

The result:

Not so impressive as it performs worse than the threadpool. It looks like we're not splitting work up correctly between the threads that perform the work.

To take care of the division of labour issue, let's use Parallel.ForEach but also take advantage of partitioning in order to batch the work more efficiently:

long n = results.Iterations;

OrderablePartitioner<Tuple<long, long>> chunkPart =

Partitioner.Create(0, n, n/100);

long count = 0;

Parallel.ForEach(chunkPart, chunkRange =>

{});

Note the 3rd argument, where I partition the total iterations by 100 times. Naturally, you might assume that sizing the partitions according to the number of cores would work similarly to the Threadpool example above. Unfortunately, that doesn't seem to be the case. Feel free to tweak the code and prove it to yourself.

Next, let's use interlocking in order to make operations on state variables are atomic. As there is no Interlocked equivalent for adding doubles in .NET, we'll have to implement our own:

public static double Add(ref double location1, double value)

{

double newCurrentValue = 0;

while (true)

{

double currentValue = newCurrentValue;

double newValue = currentValue + value;

newCurrentValue = Interlocked.CompareExchange(ref location1, newValue, currentValue);

if (newCurrentValue == currentValue)

return newValue;

}

}

With partitioning and interlocking added in, what do we get?

This time, the results look correct and we've achieved a significant improvement in speed, but not enough to beat the Threadpool or our mixed C#/C++ solutions.

Clearly, how we divide the work to be done and then put the results back together is a big factor, so let's compare the traditional Threadpool with a Task based approach using similar logic to partition the work:

public void Run(ResultsdotNet results, int partitions)

{

long iterationsPerTask = results.Iterations / partitions;

Task<ResultsdotNetValue>[] tasks = new Task<ResultsdotNetValue>[partitions];

for (int n = 1; n <= partitions; n++)

tasks[n - 1] = Task.Factory.StartNew < ResultsdotNetValue >(

() => Calculate(iterationsPerTask, new Random(Environment.TickCount))

);

Task.WaitAll(tasks);

GetResults(tasks, ref results, partitions);

}

Note here that (for lack of a better technique), we are again using the number of processor cores to partition our work, plus we are able to use a value type for each batch.

This is much better with results comparable if not slightly better than the Threadpool example. When I ran this again on my laptop (another 4 core machine) however, the results were reversed. Threadpool ran slightly faster than Tasks so I expect your results will also vary.

Mixing Multi-Threading and Interop

Based on our single threaded testing, we might assume that a mix of threading and C#/PInvoke might give us the most speedy performance. So let's put that to the test.

Unfortunately, the original C++ implementation of ran3() from Numerical Receipes is not thread safe. We'll have to refactor it slightly:

#define MBIG 1000000000

#define MSEED 161803398

#define MZ 0

#define FAC (1.0/MBIG)

RandomNumbers::RandomNumbers()

{

iff=0;

}

double RandomNumbers::ran3(long *idum)

{

long mj,mk;

int i,ii,k;

if (*idum < 0 || iff == 0) {

iff=1;

mj=labs(MSEED-labs(*idum));

mj %= MBIG;

ma[55]=mj;

mk=1;

for (i=1;i<=54;i++) {

ii=(21*i) % 55;

ma[ii]=mk;

mk=mj-mk;

if (mk < MZ) mk += MBIG;

mj=ma[ii];

}

for (k=1;k<=4;k++)

for (i=1;i<=55;i++) {

ma[i] -= ma[1+(i+30) % 55];

if (ma[i] < MZ) ma[i] += MBIG;

}

inext=0;

inextp=31;

*idum=1;

}

if (++inext == 56) inext=1;

if (++inextp == 56) inextp=1;

mj=ma[inext]-ma[inextp];

if (mj < MZ) mj += MBIG;

ma[inext]=mj;

return mj*FAC;

}

#undef MBIG

#undef MSEED

#undef MZ

#undef FAC

When we run this via the Threadpool, we get:

Likewise via Tasks:

Suprisingly, we get WORSE results than we might otherwise expect. Why? The most obvious candidate is that we create a new instance of the C++ calculator with every thread. We can probably do better by creating a calculation pool of objects but we'll leave that to the next update.

Conclusions

Clearly, it's not easy to use multi-threaded techniques for a 'simple' iterative routine like monte carlo integration and outperform single threaded methods. As we saw with the Parallel.For methods, the key is to efficiently partition the work between each unit of execution and then aggregate correctly at the end.

So far, we have found that Tasks and Threadpooling are our best bet for comparison as we scale out, but which of these you use will be very much up to personal circumstances. It looks almost certain that by combining this with a PInvoke based calculation pool and techniques explained here then we would achieve the most efficient use of our resources.

So far we have only really looked at utilising the CPU on a single machine. But what about the graphics card or all those wasted highly powered desktops sitting around your organization? In the next article, we will look at building a calculation pool and compare and combine it to other techniques such as CUDA and distributed grid processing to see how we can scale it further.

All suggestions for improvements are happily accepted.

History

- Creation

- Added some performance improvements suggested by Andy Harman and Alexey.

- Tagged with C++ to expose to that audience in case there are some helpful suggestions

- Fixed the zip file, updated images and commentary on single threaded and

Parallel.For - Added

Task example, updated code - Added

Task and Threadpool with PInvoke example, updated code - Edited article