Introduction

About a year ago, I wrote a short article about using the Media Foundation API to capture live video from a web camera - the old article. Many developers paid attention to that article and gave me advice about it. I was surprised to learn that the code had started to be used in the OpenCV project. This inspired me to review the code, and I found that its quality was poor. As a result, I decided to rewrite the project. After some time I decided to write a new article as well, because the new code differs from the old one. I think it is better to have two separate articles with two different solutions to the problem.

Background

The code in the previous article about capturing live video from a web camera with Media Foundation was designed to keep the interface of another library, videoInput. However, I now think that a different interface gives a more flexible solution. I have decided to include the following in the solution: options for setting the image format of the uncompressed image data, an object-based interface for handling removal of the video device, a setting for synchronous or asynchronous reading mode, and encapsulation of requests to the library in object instances and result codes.

To develop the most stable code, I decided to use a Test-Driven Development strategy. As a result, I wrote 45 tests for the classes and code. Many Media Foundation objects are tested many times in different ways. This makes it possible to develop clearer and more stable code that can be easily verified. The project also includes a unified result-code enumeration with 51 items for checking the result of executing the code. In addition, I removed the thread that was used in the previous article and simplified the write-read pipeline, which made the code more stable.

The new project is very different from the previous one, and I think it will help other developers to solve their problems.

Using the Code

The videoInput library is written in Visual Studio 2012 - videoInputVS2012-static.zip. It can be used as a static library: it is enough to include only videoInput.lib and videoInput.h in a new project. The source code of this project can also be downloaded - videoInputVS2012-Source - and included in any project.

The project includes 15 classes and interfaces:

- videoInput - a singleton class. Making it a singleton makes managing resources easy.
- MediaFoundation - a singleton class which manages the allocation and release of Media Foundation resources. Almost all calls to Media Foundation functions are made from this class, and the results of these calls are checked in this class.
- VideoCaptureDeviceManager - a singleton class which manages the allocation of, access to and release of the video devices.
- VideoCaptureDevice - a class which inherits from the IUnknown interface. This makes it possible to use the smart pointer CComPtr to control the lifetime of its instances. This class controls the selected video capture device.
- VideoCaptureSession - a class which inherits from IMFAsyncCallback. It is used for processing events generated by the media event generator, IMFMediaSession. This class processes events of the video capture device and starts video capture.
- VideoCaptureSink - a class which inherits from IMFSampleGrabberSinkCallback. It is used for getting raw data and writing it into the buffer.
- IWrite - an interface class that lets VideoCaptureSink write data into different types of buffers in a single uniform way.
- IRead - an interface class that lets VideoCaptureDevice read raw data from different types of buffers in a single uniform way.
- IReadWriteBuffer - an interface class which inherits from IRead, IWrite and IUnknown. It is the base class for the different types of buffers and can be used with the smart pointer CComPtr.
- ReadWriteBufferRegularAsync - a class which inherits from IReadWriteBuffer. It uses a critical section to guard writing and reading of data by the user thread and the Media Foundation inner thread. However, the user thread checks the flag readyToRead before entering the critical section; if the data is not ready, the user thread leaves the buffer without reading. As a result, the user thread is not blocked while the Media Foundation inner thread is writing.
- ReadWriteBufferRegularSync - a class which inherits from IReadWriteBuffer. It uses a critical section to guard writing and reading of data by the user thread and the Media Foundation inner thread. However, the user thread is blocked by WaitForSingleObject before entering the critical section. As a result, the user thread waits up to about 1 second for the event from the Media Foundation inner thread. When the event arrives, the user thread starts reading from the buffer; if the event does not arrive, the user thread leaves the buffer with the state code READINGPIXELS_REJECTED_TIMEOUT.
- ReadWriteBufferFactory - a singleton class which produces ReadWriteBufferRegularAsync or ReadWriteBufferRegularSync buffers.
- FormatReader - a class for reading the data of a MediaType format.
- DebugPrintOut - a class for printing text to the console.
- CComMassivPtr - a template class for working with an array of objects with the IUnknown interface. It encapsulates the calls to SafeRelease() and controls the release of these objects.
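The difference between the two buffer classes can be illustrated with a small portable sketch. It is not the library's code: the class name FrameBufferSketch and the enum ReadResult are hypothetical, and std::mutex and std::condition_variable stand in for the critical section and the WaitForSingleObject event that the library uses. A single reading thread is assumed.

```cpp
#include <atomic>
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <cstddef>
#include <cstring>
#include <mutex>
#include <vector>

// Hypothetical codes that mirror the READINGPIXELS_* items of ResultCode::Result.
enum class ReadResult { Done, Rejected, RejectedTimeout };

// Portable stand-in for the asynchronous and synchronous buffers.
class FrameBufferSketch
{
public:
    explicit FrameBufferSketch(std::size_t size) : data_(size), ready_(false) {}

    // Producer side (the Media Foundation inner thread in the library).
    void write(const unsigned char* src)
    {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            std::memcpy(data_.data(), src, data_.size());
            ready_ = true;
        }
        frameArrived_.notify_one();
    }

    // Asynchronous read: test the flag before taking the lock and
    // leave at once when no new frame is ready.
    ReadResult readAsync(unsigned char* dst)
    {
        if (!ready_.load())
            return ReadResult::Rejected;
        std::lock_guard<std::mutex> lock(mutex_);
        std::memcpy(dst, data_.data(), data_.size());
        ready_ = false;
        return ReadResult::Done;
    }

    // Synchronous read: wait for the producer's signal, but no longer than `timeout`.
    ReadResult readSync(unsigned char* dst,
                        std::chrono::milliseconds timeout = std::chrono::seconds(1))
    {
        std::unique_lock<std::mutex> lock(mutex_);
        if (!frameArrived_.wait_for(lock, timeout, [this] { return ready_.load(); }))
            return ReadResult::RejectedTimeout;
        std::memcpy(dst, data_.data(), data_.size());
        ready_ = false;
        return ReadResult::Done;
    }

private:
    std::vector<unsigned char> data_;
    std::atomic<bool> ready_;
    std::mutex mutex_;
    std::condition_variable frameArrived_;
};
```

In this sketch the asynchronous reader never waits for a frame, while the synchronous reader gives up only after the timeout has passed, which corresponds to the two behaviours described in the list above.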

It is enough to use the file videoInput.h as the interface of the library. Its listing is presented below:

#include <string>

#include <vector>

#include <guiddef.h>

using namespace std;

struct MediaType

{

unsigned int MF_MT_FRAME_SIZE;

unsigned int height;

unsigned int width;

unsigned int MF_MT_YUV_MATRIX;

unsigned int MF_MT_VIDEO_LIGHTING;

unsigned int MF_MT_DEFAULT_STRIDE;

unsigned int MF_MT_VIDEO_CHROMA_SITING;

GUID MF_MT_AM_FORMAT_TYPE;

wstring MF_MT_AM_FORMAT_TYPEName;

unsigned int MF_MT_FIXED_SIZE_SAMPLES;

unsigned int MF_MT_VIDEO_NOMINAL_RANGE;

float MF_MT_FRAME_RATE_RANGE_MAX;

float MF_MT_FRAME_RATE;

float MF_MT_FRAME_RATE_RANGE_MIN;

float MF_MT_PIXEL_ASPECT_RATIO;

unsigned int MF_MT_ALL_SAMPLES_INDEPENDENT;

unsigned int MF_MT_SAMPLE_SIZE;

unsigned int MF_MT_VIDEO_PRIMARIES;

unsigned int MF_MT_INTERLACE_MODE;

GUID MF_MT_MAJOR_TYPE;

wstring MF_MT_MAJOR_TYPEName;

GUID MF_MT_SUBTYPE;

wstring MF_MT_SUBTYPEName;

};

struct Stream

{

std::vector<MediaType> listMediaType;

};

struct Device

{

wstring friendlyName;

wstring symbolicName;

std::vector<Stream> listStream;

};

struct Parametr

{

long CurrentValue;

long Min;

long Max;

long Step;

long Default;

long Flag;

Parametr();

};

struct CamParametrs

{

Parametr Brightness;

Parametr Contrast;

Parametr Hue;

Parametr Saturation;

Parametr Sharpness;

Parametr Gamma;

Parametr ColorEnable;

Parametr WhiteBalance;

Parametr BacklightCompensation;

Parametr Gain;

Parametr Pan;

Parametr Tilt;

Parametr Roll;

Parametr Zoom;

Parametr Exposure;

Parametr Iris;

Parametr Focus;

};

struct CaptureVideoFormat

{

enum VideoFormat

{

RGB24 = 0,

RGB32 = 1,

AYUV = 2

};

};

struct StopCallbackEvent

{

enum CallbackEvent

{

STOP = 0,

CAPTUREDEVICEREMOVED = 1

};

};

class IStopCallback

{

public:

virtual void Invoke(StopCallbackEvent::CallbackEvent callbackEvent) = 0;

};

struct ReadMode

{

enum Read

{

ASYNC = 0,

SYNC = 1

};

};

struct DeviceSettings

{

wstring symbolicLink;

unsigned int indexStream;

unsigned int indexMediaType;

};

struct CaptureSettings

{

CaptureVideoFormat::VideoFormat videoFormat;

IStopCallback *pIStopCallback;

ReadMode::Read readMode;

};

struct ReadSetting

{

std::wstring symbolicLink;

unsigned char *pPixels;

};

struct CamParametrsSetting

{

std::wstring symbolicLink;

CamParametrs settings;

};

struct ResultCode

{

enum Result

{

OK = 0,

UNKNOWN_ERROR = 1,

MEDIA_FOUNDATION_INITIALIZECOM_ERROR = 2,

MEDIA_FOUNDATION_INITIALIZEMF_ERROR = 3,

MEDIA_FOUNDATION_SHUTDOWN_ERROR = 4,

MEDIA_FOUNDATION_ENUMDEVICES_ERROR = 5,

MEDIA_FOUNDATION_CREATEATTRIBUTE_ERROR = 6,

MEDIA_FOUNDATION_READFRIENDLYNAME_ERROR = 7,

MEDIA_FOUNDATION_READSYMBOLICLINK_ERROR = 8,

MEDIA_FOUNDATION_GETDEVICE_ERROR = 9,

MEDIA_FOUNDATION_createPresentationDescriptor_ERROR = 10,

MEDIA_FOUNDATION_GETTHEAMOUNTOFSTREAMS_ERROR = 11,

MEDIA_FOUNDATION_GETSTREAMDESCRIPTORBYINDEX_ERROR = 12,

MEDIA_FOUNDATION_ENUMMEDIATYPE_ERROR = 13,

VIDEOCAPTUREDEVICEMANAGER_GETLISTOFDEVICES_ERROR = 14,

MEDIA_FOUNDATION_SETSYMBOLICLINK_ERROR = 15,

MEDIA_FOUNDATION_SETCURRENTMEDIATYPE_ERROR = 16,

MEDIA_FOUNDATION_GETCURRENTMEDIATYPE_ERROR = 17,

MEDIA_FOUNDATION_SELECTSTREAM_ERROR = 18,

MEDIA_FOUNDATION_CREATESESSION_ERROR = 19,

MEDIA_FOUNDATION_CREATEMEDIATYPE_ERROR = 20,

MEDIA_FOUNDATION_SETGUID_ERROR = 21,

MEDIA_FOUNDATION_SETUINT32_ERROR = 22,

MEDIA_FOUNDATION_CREATESAMPLERGRABBERSINKACTIVE_ERROR = 23,

MEDIA_FOUNDATION_CREATETOPOLOGY_ERROR = 24,

MEDIA_FOUNDATION_CREATETOPOLOGYNODE_ERROR = 25,

MEDIA_FOUNDATION_SETUNKNOWN_ERROR = 26,

MEDIA_FOUNDATION_SETOBJECT_ERROR = 27,

MEDIA_FOUNDATION_ADDNODE_ERROR = 28,

MEDIA_FOUNDATION_CONNECTOUTPUTNODE_ERROR = 29,

MEDIA_FOUNDATION_SETTOPOLOGY_ERROR = 30,

MEDIA_FOUNDATION_BEGINGETEVENT_ERROR = 31,

VIDEOCAPTUREDEVICEMANAGER_DEVICEISSETUPED = 32,

VIDEOCAPTUREDEVICEMANAGER_DEVICEISNOTSETUPED = 33,

VIDEOCAPTUREDEVICEMANAGER_DEVICESTART_ERROR = 34,

VIDEOCAPTUREDEVICE_DEVICESTART_ERROR = 35,

VIDEOCAPTUREDEVICEMANAGER_DEVICEISNOTSTARTED = 36,

VIDEOCAPTUREDEVICE_DEVICESTOP_ERROR = 37,

VIDEOCAPTURESESSION_INIT_ERROR = 38,

VIDEOCAPTUREDEVICE_DEVICESTOP_WAIT_TIMEOUT = 39,

VIDEOCAPTUREDEVICE_DEVICESTART_WAIT_TIMEOUT = 40,

READINGPIXELS_DONE = 41,

READINGPIXELS_REJECTED = 42,

READINGPIXELS_MEMORY_ISNOT_ALLOCATED = 43,

READINGPIXELS_REJECTED_TIMEOUT = 44,

VIDEOCAPTUREDEVICE_GETPARAMETRS_ERROR = 45,

VIDEOCAPTUREDEVICE_SETPARAMETRS_ERROR = 46,

VIDEOCAPTUREDEVICE_GETPARAMETRS_GETVIDEOPROCESSOR_ERROR = 47,

VIDEOCAPTUREDEVICE_GETPARAMETRS_GETVIDEOCONTROL_ERROR = 48,

VIDEOCAPTUREDEVICE_SETPARAMETRS_SETVIDEOCONTROL_ERROR = 49,

VIDEOCAPTUREDEVICE_SETPARAMETRS_SETVIDEOPROCESSOR_ERROR = 50

};

};

class videoInput

{

public:

static videoInput& getInstance();

ResultCode::Result getListOfDevices(vector<Device> &listOfDevices);

ResultCode::Result setupDevice(DeviceSettings deviceSettings,

CaptureSettings captureSettings);

ResultCode::Result closeDevice(DeviceSettings deviceSettings);

ResultCode::Result closeAllDevices();

ResultCode::Result readPixels(ReadSetting readSetting);

ResultCode::Result getParametrs(CamParametrsSetting &parametrs);

ResultCode::Result setParametrs(CamParametrsSetting parametrs);

ResultCode::Result setVerbose(bool state);

private:

videoInput(void);

~videoInput(void);

videoInput(const videoInput&);

videoInput& operator=(const videoInput&);

};

The interface of the library has become simpler, and some of the work with raw data is now the responsibility of the developer. The methods of videoInput have the following purposes:

- getListOfDevices - fills the list of the active video devices. In the old project, this list was filled at initialisation time and did not change. Now, each call of this method generates a new list of the active video devices, so the list can change. Each device includes the strings friendlyName and symbolicName and a list of streams. The string friendlyName is a "readable" name of the device. The string symbolicName is the unique name used for managing the device; it is passed to the other methods in the symbolicLink field of their settings.
- setupDevice - sets up the device and starts capture from it. It has two arguments: deviceSettings and captureSettings. The first puts the device into the chosen mode by symbolicLink, indexStream and indexMediaType. The second sets the capture mode. It includes videoFormat for choosing the format of the raw image data (RGB24, RGB32 or AYUV); pIStopCallback, a pointer to the interface of a callback class that is executed when the video capture device is removed; and readMode, an enumeration that selects a buffer with synchronous or asynchronous reading of raw data.
- closeDevice - stops and closes the selected device. The device is identified by the symbolicLink of deviceSettings.
- closeAllDevices - closes all devices that have been set up.
- readPixels - reads data from the buffer into a pointer. The argument readSetting has symbolicLink for access to the device and pPixels, a pointer to the memory that receives the raw image data.
- getParametrs - gets the parameters of the video capture device.
- setParametrs - sets the parameters of the video capture device.
- setVerbose - sets the mode of printing information to the console.

All these methods return an item of the ResultCode::Result enumeration. This makes it possible to check the result of each method and to get information about almost all processes in the code.
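The pIStopCallback field of CaptureSettings expects an object that implements IStopCallback. A minimal sketch of such an object is shown below. The two declarations at the top are copied from videoInput.h so that the fragment compiles on its own; the class DeviceRemovedFlag is hypothetical, and the assumption that Invoke may be called from a thread other than the user thread is mine, not a documented guarantee of the library.

```cpp
#include <atomic>
#include <cassert>

// Copied from videoInput.h so that the sketch is self-contained.
struct StopCallbackEvent
{
    enum CallbackEvent
    {
        STOP = 0,
        CAPTUREDEVICEREMOVED = 1
    };
};

class IStopCallback
{
public:
    virtual void Invoke(StopCallbackEvent::CallbackEvent callbackEvent) = 0;
};

// Hypothetical handler: remembers that the device was removed,
// so that the capture loop can check the flag and stop reading.
class DeviceRemovedFlag : public IStopCallback
{
public:
    DeviceRemovedFlag() : removed_(false) {}

    void Invoke(StopCallbackEvent::CallbackEvent callbackEvent) override
    {
        // Assumed to run on a library thread, so only an atomic flag is touched here.
        if (callbackEvent == StopCallbackEvent::CAPTUREDEVICEREMOVED)
            removed_ = true;
    }

    bool deviceRemoved() const { return removed_.load(); }

private:
    std::atomic<bool> removed_;
};
```

With the real header, an instance of such a class would be assigned to captureSettings.pIStopCallback before calling setupDevice, and it must stay alive until the device is closed.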

I would like to point out three special features of this library:

- The resolution of the captured image is defined by selecting the appropriate MediaType of the device. In the old article, the resolution was set manually. However, that gave the mistaken impression that any resolution could be set. I decided that the new solution should present the limits of the video capture device more clearly.
- It is possible to choose one of three colour formats for the captured image: RGB24, RGB32 and AYUV. Colour formats RGB24 and RGB32 look the same, but the second is more suitable for processing data on modern processors with 16-byte alignment.
- Reading from the buffer can be done in one of two ways, synchronous or asynchronous. The choice is made by setting readMode. In ReadMode::ASYNC mode, reading from the buffer does not block: if the data is not ready, readPixels() returns the result code ResultCode::READINGPIXELS_REJECTED. In ReadMode::SYNC mode, reading from the buffer blocks: if the data is not ready, readPixels() blocks the user thread for up to 1 second and then returns the result code ResultCode::READINGPIXELS_REJECTED_TIMEOUT.

The following listing shows how to use this library with OpenCV to capture live video from a web camera.
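The first two features mean that the caller picks an index into the device's media type list and allocates the pixel buffer to match the chosen colour format. The sketch below shows one way to do both. MediaTypeSketch and VideoFormatSketch are reduced stand-ins for MediaType and CaptureVideoFormat::VideoFormat, the helper names are hypothetical, and the size calculation assumes tightly packed rows, as the samples in this article do.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Reduced stand-in for MediaType from videoInput.h (only the fields used here).
struct MediaTypeSketch
{
    unsigned int width;
    unsigned int height;
};

// Stand-in with the same values as CaptureVideoFormat::VideoFormat.
enum VideoFormatSketch
{
    RGB24 = 0,
    RGB32 = 1,
    AYUV = 2
};

// Returns the index of the first media type with the requested size,
// or -1 if the device does not offer that resolution.
int findMediaTypeIndex(const std::vector<MediaTypeSketch>& types,
                       unsigned int width, unsigned int height)
{
    for (std::size_t i = 0; i < types.size(); ++i)
    {
        if (types[i].width == width && types[i].height == height)
            return static_cast<int>(i);
    }
    return -1;
}

// Bytes needed for one frame: RGB24 takes 3 bytes per pixel,
// RGB32 and AYUV take 4 bytes per pixel.
std::size_t frameBufferSize(const MediaTypeSketch& type, VideoFormatSketch format)
{
    const std::size_t bytesPerPixel = (format == RGB24) ? 3 : 4;
    return bytesPerPixel * type.width * type.height;
}
```

With the real header, the returned index would go into deviceSettings.indexMediaType, and the computed size would be used to allocate the memory that readSetting.pPixels points to.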

#include "stdafx.h"

#include "../videoInput/videoInput.h"

#include "include\opencv2\highgui\highgui_c.h"

#include "include\opencv2\imgproc\imgproc_c.h"

#pragma comment(lib, "../Debug/videoInput.lib")

#pragma comment(lib, "lib/opencv_highgui248d.lib")

#pragma comment(lib, "lib/opencv_core248d.lib")

int _tmain(int argc, _TCHAR* argv[])

{

using namespace std;

vector<Device> listOfDevices;

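ResultCode::Result result = videoInput::getInstance().getListOfDevices(listOfDevices);

// Stop if no capture device was found; listOfDevices[0] below would be invalid.

if (listOfDevices.empty())

return -1;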

DeviceSettings deviceSettings;

deviceSettings.symbolicLink = listOfDevices[0].symbolicName;

deviceSettings.indexStream = 0;

deviceSettings.indexMediaType = 0;

CaptureSettings captureSettings;

captureSettings.pIStopCallback = 0;

captureSettings.readMode = ReadMode::SYNC;

captureSettings.videoFormat = CaptureVideoFormat::RGB32;

MediaType MT = listOfDevices[0].listStream[0].listMediaType[0];

cvNamedWindow ("VideoTest", CV_WINDOW_AUTOSIZE);

CvSize size = cvSize(MT.width, MT.height);

IplImage* frame;

frame = cvCreateImage(size, 8,4);

ReadSetting readSetting;

readSetting.symbolicLink = deviceSettings.symbolicLink;

readSetting.pPixels = (unsigned char *)frame->imageData;

result = videoInput::getInstance().setupDevice(deviceSettings, captureSettings);

while(1)

{

ResultCode::Result readState = videoInput::getInstance().readPixels(readSetting);

if(readState == ResultCode::READINGPIXELS_DONE)

{

cvShowImage("VideoTest", frame);

}

else

break;

char c = cvWaitKey(33);

if(c == 27)

break;

}

result = videoInput::getInstance().closeDevice(deviceSettings);

return 0;

}

Finally, I would like to say that in this project I removed the code for reordering colours from BGR to RGB and for vertical flipping. These specifics of the Windows platform can be compensated for in many different ways: for example, the OpenCV library processes images in BGR colour order by default, and DirectX and OpenGL support both BGR and RGB textures as well as vertical flipping. I decided that developers can write the code for colour reordering and vertical flipping for their own purposes.

I published the code of the project in the Git repository of this site, and anyone can clone it using the link videoInput.
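For developers who do need these two operations, a minimal in-place sketch follows. The function names are hypothetical and are not part of the library, and the code assumes tightly packed rows with 3 bytes per pixel for RGB24 or 4 bytes per pixel for RGB32.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>

// Swaps the first and third channel of every pixel in place (BGR <-> RGB).
// Any fourth byte of a 4-byte pixel is left untouched.
void swapRedAndBlue(unsigned char* pixels, std::size_t width,
                    std::size_t height, std::size_t bytesPerPixel)
{
    assert(bytesPerPixel >= 3);
    const std::size_t total = width * height * bytesPerPixel;
    for (std::size_t i = 0; i < total; i += bytesPerPixel)
        std::swap(pixels[i], pixels[i + 2]);
}

// Reverses the order of the rows in place (bottom-up <-> top-down).
void flipVertically(unsigned char* pixels, std::size_t width,
                    std::size_t height, std::size_t bytesPerPixel)
{
    if (height < 2)
        return;
    const std::size_t stride = width * bytesPerPixel;
    for (std::size_t top = 0, bottom = height - 1; top < bottom; ++top, --bottom)
    {
        // Exchange one whole row at the top with its mirror row at the bottom.
        std::swap_ranges(pixels + top * stride,
                         pixels + (top + 1) * stride,
                         pixels + bottom * stride);
    }
}
```

Both functions would be called on the memory behind readSetting.pPixels after readPixels() reports READINGPIXELS_DONE.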

Update on VS2013Express for Desktop

I have received many questions about using this library, and some of them express doubt that the library is independent of the OpenCV libraries, which I used in the test example. I understand such doubt, because OpenCV includes support for working with web cams. To prove that my code is independent of the OpenCV libraries (the fact is that the OpenCV code is partly based on MY OLD CODE), I decided to write independent code that can display video from a web cam. Of course, such code needs some Windows framework that supports drawing images, and so it needs some dependencies that could lead to new doubts. So, I decided to use OpenGL for visualisation. I have rich experience with OpenGL, and I wrote a ONE FILE example which contains ALL the needed code (except videoInput.lib).

#define WIN32_LEAN_AND_MEAN

#include <windows.h>

#include <stdlib.h>

#include <malloc.h>

#include <memory.h>

#include <tchar.h>

#include <gl/gl.h>

#include <memory>

#include <vector>

#include "../videoInput/videoInput.h"

#pragma comment(lib, "opengl32.lib")

#pragma comment(lib, "../videoInput/Debug/videoInput.lib")

#define GL_BGR 0x80E0

LRESULT CALLBACK WndProc(HWND hWnd, UINT message,

WPARAM wParam, LPARAM lParam);

void EnableOpenGL(HWND hWnd, HDC *hDC, HGLRC *hRC);

void DisableOpenGL(HWND hWnd, HDC hDC, HGLRC hRC);

int APIENTRY _tWinMain(_In_ HINSTANCE hInstance,

_In_opt_ HINSTANCE hPrevInstance,

_In_ LPTSTR lpCmdLine,

_In_ int nCmdShow)

{

UNREFERENCED_PARAMETER(hPrevInstance);

UNREFERENCED_PARAMETER(lpCmdLine);

using namespace std;

vector<Device> listOfDevices;

ResultCode::Result result = videoInput::getInstance().getListOfDevices(listOfDevices);

if (listOfDevices.size() == 0)

return -1;

DeviceSettings deviceSettings;

deviceSettings.symbolicLink = listOfDevices[0].symbolicName;

deviceSettings.indexStream = 0;

deviceSettings.indexMediaType = 0;

CaptureSettings captureSettings;

captureSettings.pIStopCallback = 0;

captureSettings.readMode = ReadMode::SYNC;

captureSettings.videoFormat = CaptureVideoFormat::RGB24;

MediaType MT = listOfDevices[0].listStream[0].listMediaType[0];

// The array form of unique_ptr releases the buffer with delete[], matching new[].

unique_ptr<unsigned char[]> frame(new unsigned char[3 * MT.width * MT.height]);

ReadSetting readSetting;

readSetting.symbolicLink = deviceSettings.symbolicLink;

readSetting.pPixels = frame.get();

result = videoInput::getInstance().setupDevice(deviceSettings, captureSettings);

ResultCode::Result readState = videoInput::getInstance().readPixels(readSetting);

if (readState != ResultCode::READINGPIXELS_DONE)

return -1;

float halfQuadWidth = 0.75;

float halfQuadHeight = 0.75;

WNDCLASS wc;

HWND hWnd;

HDC hDC;

HGLRC hRC;

MSG msg;

BOOL bQuit = FALSE;

float theta = 0.0f;

wc.style = CS_OWNDC;

wc.lpfnWndProc = WndProc;

wc.cbClsExtra = 0;

wc.cbWndExtra = 0;

wc.hInstance = hInstance;

wc.hIcon = LoadIcon(NULL, IDI_APPLICATION);

wc.hCursor = LoadCursor(NULL, IDC_ARROW);

wc.hbrBackground = (HBRUSH)GetStockObject(BLACK_BRUSH);

wc.lpszMenuName = NULL;

wc.lpszClassName = L"OpenGLWebCamCapture";

RegisterClass(&wc);

hWnd = CreateWindow(

L"OpenGLWebCamCapture", L"OpenGLWebCamCapture Sample",

WS_CAPTION | WS_POPUPWINDOW | WS_VISIBLE,

0, 0, MT.width, MT.height,

NULL, NULL, hInstance, NULL);

EnableOpenGL(hWnd, &hDC, &hRC);

GLuint textureID;

glGenTextures(1, &textureID);

glBindTexture(GL_TEXTURE_2D, textureID);

glTexImage2D(GL_TEXTURE_2D, 0, GL_RGB, MT.width, MT.height, 0, GL_BGR, GL_UNSIGNED_BYTE, frame.get());

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_NEAREST);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_NEAREST);

while (!bQuit)

{

if (PeekMessage(&msg, NULL, 0, 0, PM_REMOVE))

{

if (msg.message == WM_QUIT)

{

bQuit = TRUE;

}

else

{

TranslateMessage(&msg);

DispatchMessage(&msg);

}

}

else

{

ResultCode::Result readState = videoInput::getInstance().readPixels(readSetting);

if (readState != ResultCode::READINGPIXELS_DONE)

break;

glClear(GL_COLOR_BUFFER_BIT);

glLoadIdentity();

glBindTexture(GL_TEXTURE_2D, textureID);

glTexSubImage2D(GL_TEXTURE_2D, 0, 0, 0, MT.width,

MT.height, GL_BGR, GL_UNSIGNED_BYTE, frame.get());

glBegin(GL_QUADS);

glTexCoord2f(0, 1); glVertex2f(-halfQuadWidth, -halfQuadHeight); glTexCoord2f(1, 1); glVertex2f(halfQuadWidth, -halfQuadHeight); glTexCoord2f(1, 0); glVertex2f(halfQuadWidth, halfQuadHeight); glTexCoord2f(0, 0); glVertex2f(-halfQuadWidth, halfQuadHeight); glEnd();

SwapBuffers(hDC);

Sleep(1);

}

}

DisableOpenGL(hWnd, hDC, hRC);

DestroyWindow(hWnd);

videoInput::getInstance().closeDevice(deviceSettings);

return msg.wParam;

}

LRESULT CALLBACK WndProc(HWND hWnd, UINT message,

WPARAM wParam, LPARAM lParam)

{

switch (message)

{

case WM_CREATE:

return 0;

case WM_CLOSE:

PostQuitMessage(0);

return 0;

case WM_DESTROY:

return 0;

case WM_KEYDOWN:

switch (wParam)

{

case VK_ESCAPE:

PostQuitMessage(0);

return 0;

}

return 0;

default:

return DefWindowProc(hWnd, message, wParam, lParam);

}

}

void EnableOpenGL(HWND hWnd, HDC *hDC, HGLRC *hRC)

{

PIXELFORMATDESCRIPTOR pfd;

int iFormat;

*hDC = GetDC(hWnd);

ZeroMemory(&pfd, sizeof (pfd));

pfd.nSize = sizeof (pfd);

pfd.nVersion = 1;

pfd.dwFlags = PFD_DRAW_TO_WINDOW |

PFD_SUPPORT_OPENGL | PFD_DOUBLEBUFFER;

pfd.iPixelType = PFD_TYPE_RGBA;

pfd.cColorBits = 24;

pfd.cDepthBits = 16;

pfd.iLayerType = PFD_MAIN_PLANE;

iFormat = ChoosePixelFormat(*hDC, &pfd);

SetPixelFormat(*hDC, iFormat, &pfd);

*hRC = wglCreateContext(*hDC);

wglMakeCurrent(*hDC, *hRC);

glEnable(GL_TEXTURE_2D);

}

void DisableOpenGL(HWND hWnd, HDC hDC, HGLRC hRC)

{

wglMakeCurrent(NULL, NULL);

wglDeleteContext(hRC);

ReleaseDC(hWnd, hDC);

}

While developing the new test example, I decided to move the code to Visual Studio 2013 Express for Desktop. So, I rewrote the old code and uploaded it at the following links:

Update on C++/CLI, C# and WPF

The latest questions about this article and code drew my attention to other solutions to similar tasks. I found some articles whose authors suggested using the EmguCV libraries to capture live video from a web cam on platforms with the C# language. I know those libraries. They provide powerful algorithms for building Computer Vision projects. I decided that using them is not a good solution if the project only needs to connect to the web cam. Moreover, some programmers prefer simpler solutions that can be included in their projects at the code level.

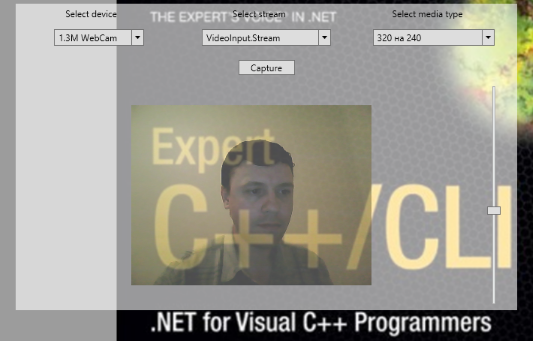

So, I have decided to write simple wrapper on C++/CLI for my original videoInput library. I selected to write the wrapper because C++/CLI and C++ are not full compatible. The fact is that for full implementation of the C++/CLI I need to rewrite about 90% of code and it lose the compatible with the other code on C++ - it leads to the writing of the new code and new article. On the other hand, the code of the wrapper uses code on videoInputVS2013Express_for_Desktop, and any improvements in the original C++ code can be easy replaced into the C++/CLI wrapper. For proving that the wrapper works on C# I wrote simple example on WPF - result is presented on the next image:

The source code and example can be found by link: Download videoInputVS2013Express_for_DesktopC++CLI.zip

Update on C# marshaling of C wrapper

The C++/CLI version of videoInput makes it possible to integrate image capture from a web cam into C# projects seamlessly. However, after some time I found one sad fact: it is impossible to write C++/CLI solutions in Visual Studio 2013 for old versions of dotNet. The Platform Toolset Visual Studio v120 for C++/CLI supports only dotNet 4.5, and it IS IMPOSSIBLE to integrate a C++/CLI solution developed in Visual Studio 2013 into projects on dotNet 3.5 and earlier. I faced this problem when I started to develop a plugin supporting MediaFoundation for web cams in an old project on dotNet 3.5. I decided that it is not feasible to recompile the whole project for dotNet 4.5 just to support one plugin. I decided that there is only one way to resolve this problem: write a C wrapper for the C++ videoInput library and write marshaling code for the dotNet 3.5 plugin. I wrote the following code for the C library USBCameraProxy:

USBCameraProxy.h

#include <Windows.h>

#include <stdio.h>

#include "videoInput.h"

#define EXPORT extern "C" __declspec(dllexport)

typedef struct _Resolution

{

int width;

int height;

} Resolution, *PtrResolution;

EXPORT int __cdecl getDeviceCount();

EXPORT int __cdecl getDeviceInfo(int number, wchar_t *aPtrFriendlyName, wchar_t *aPtrSymbolicName);

EXPORT int __cdecl getDeviceResolution(int number, wchar_t *aPtrSymbolicName, PtrResolution aPtrResolution);

EXPORT int __cdecl setupDevice(wchar_t *aPtrSymbolicName, int aResolutionIndex, int aCaptureVideoFormat, int ReadMode);

EXPORT int __cdecl closeAllDevices();

EXPORT int __cdecl closeDevice(wchar_t *aPtrSymbolicName);

EXPORT int __cdecl readPixels(wchar_t *aPtrSymbolicName, unsigned char *aPtrPixels);

EXPORT int __cdecl setCamParametrs(wchar_t *aPtrSymbolicName, CamParametrs *aPtrCamParametrs);

EXPORT int __cdecl getCamParametrs(wchar_t *aPtrSymbolicName, CamParametrs *aPtrCamParametrs);

USBCameraProxy.cpp

#include "USBCameraProxy.h"

#include <algorithm>

EXPORT int __cdecl getDeviceCount()

{

vector<Device> listOfDevices;

videoInput::getInstance().getListOfDevices(listOfDevices);

return listOfDevices.size();

}

EXPORT int __cdecl getDeviceInfo(int number, wchar_t *aPtrFriendlyName, wchar_t *aPtrSymbolicName)

{

int lresult = -1;

do

{

if (number < 0)

break;

vector<Device> listOfDevices;

videoInput::getInstance().getListOfDevices(listOfDevices);

// Reject an index past the end of the list before it is used below.

if (static_cast<size_t>(number) >= listOfDevices.size())

break;

wcscpy(aPtrFriendlyName, listOfDevices[number].friendlyName.c_str());

wcscpy(aPtrSymbolicName, listOfDevices[number].symbolicName.c_str());

lresult = listOfDevices[number].listStream[0].listMediaType.size();

} while(false);

return lresult;

}

EXPORT int __cdecl getDeviceResolution(int number, wchar_t *aPtrSymbolicName, PtrResolution aPtrResolution)

{

int lresult = -1;

do

{

if (number < 0)

break;

std::wstring lSymbolicName(aPtrSymbolicName);

vector<Device> listOfDevices;

videoInput::getInstance().getListOfDevices(listOfDevices);

if (listOfDevices.size() <= 0)

break;

auto lfindIter = std::find_if(

listOfDevices.begin(),

listOfDevices.end(),

[lSymbolicName](Device lDevice)

{

return lDevice.symbolicName.compare(lSymbolicName) == 0;

});

if (lfindIter != listOfDevices.end())

{

aPtrResolution->height = (*lfindIter).listStream[0].listMediaType[number].height;

aPtrResolution->width = (*lfindIter).listStream[0].listMediaType[number].width;

lresult = 0;

}

} while (false);

return lresult;

}

EXPORT int __cdecl setupDevice(wchar_t *aPtrSymbolicName, int aResolutionIndex, int aCaptureVideoFormat, int ReadMode)

{

int lresult = -1;

do

{

if (aResolutionIndex < 0)

break;

DeviceSettings deviceSettings;

deviceSettings.symbolicLink = std::wstring(aPtrSymbolicName);

deviceSettings.indexStream = 0;

deviceSettings.indexMediaType = aResolutionIndex;

CaptureSettings captureSettings;

captureSettings.pIStopCallback = nullptr;

captureSettings.videoFormat = CaptureVideoFormat::VideoFormat(aCaptureVideoFormat);

captureSettings.readMode = ReadMode::Read(ReadMode);

lresult = videoInput::getInstance().setupDevice(deviceSettings, captureSettings);

} while (false);

return lresult;

}

EXPORT int __cdecl closeAllDevices()

{

return videoInput::getInstance().closeAllDevices();

}

EXPORT int __cdecl closeDevice(wchar_t *aPtrSymbolicName)

{

DeviceSettings deviceSettings;

deviceSettings.symbolicLink = std::wstring(aPtrSymbolicName);

deviceSettings.indexStream = 0;

deviceSettings.indexMediaType = 0;

return videoInput::getInstance().closeDevice(deviceSettings);

}

EXPORT int __cdecl readPixels(wchar_t *aPtrSymbolicName, unsigned char *aPtrPixels)

{

ReadSetting readSetting;

readSetting.symbolicLink = std::wstring(aPtrSymbolicName);

readSetting.pPixels = aPtrPixels;

int lresult = videoInput::getInstance().readPixels(readSetting);

return lresult;

}

EXPORT int __cdecl setCamParametrs(wchar_t *aPtrSymbolicName, CamParametrs *aPtrCamParametrs)

{

CamParametrsSetting lCamParametrsSetting;

lCamParametrsSetting.symbolicLink = std::wstring(aPtrSymbolicName);

lCamParametrsSetting.settings = *aPtrCamParametrs;

videoInput::getInstance().setParametrs(lCamParametrsSetting);

return 0;

}

EXPORT int __cdecl getCamParametrs(wchar_t *aPtrSymbolicName, CamParametrs *aPtrCamParametrs)

{

CamParametrsSetting lCamParametrsSetting;

lCamParametrsSetting.symbolicLink = std::wstring(aPtrSymbolicName);

videoInput::getInstance().getParametrs(lCamParametrsSetting);

*aPtrCamParametrs = lCamParametrsSetting.settings;

return 0;

}
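One point to keep in mind with getDeviceInfo above is that wcscpy copies into caller-supplied buffers whose size the C function does not know. The sketch below shows a bounded copy that could be used instead. The helper copyToCallerBuffer is hypothetical, and using it would require adding a capacity parameter to the exported function, which is a change to the interface shown above and not something the original wrapper does.

```cpp
#include <cassert>
#include <cstddef>
#include <cwchar>
#include <string>

// Hypothetical helper: copies `source` into a caller-supplied buffer that holds
// `capacity` wide characters, truncating if needed and always null-terminating.
// Returns false when the destination is unusable or the text had to be truncated.
bool copyToCallerBuffer(const std::wstring& source, wchar_t* destination,
                        std::size_t capacity)
{
    if (destination == nullptr || capacity == 0)
        return false;

    // Leave room for the terminating null character.
    const std::size_t count =
        (source.size() < capacity - 1) ? source.size() : capacity - 1;

    std::wmemcpy(destination, source.c_str(), count);
    destination[count] = L'\0';
    return count == source.size();
}
```

On the C# side, the capacity passed to such a function would be the capacity the StringBuilder was created with.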

The structure VideoInputWrap in VideoInputWrap.cs contains inner classes and methods that "reflect" the C library in C#:

public struct VideoInputWrap

{

[StructLayout( LayoutKind.Sequential, Pack = 1 )]

public class Resolution

{

public int width;

public int height;

public override string ToString()

{

return width.ToString() + " x " + height.ToString();

}

}

[StructLayout(LayoutKind.Sequential)]

public class Parametr

{

public int CurrentValue;

public int Min;

public int Max;

public int Step;

public int Default;

public int Flag;

};

[StructLayout(LayoutKind.Sequential)]

public class CamParametrs

{

public Parametr Brightness;

public Parametr Contrast;

public Parametr Hue;

public Parametr Saturation;

public Parametr Sharpness;

public Parametr Gamma;

public Parametr ColorEnable;

public Parametr WhiteBalance;

public Parametr BacklightCompensation;

public Parametr Gain;

public Parametr Pan;

public Parametr Tilt;

public Parametr Roll;

public Parametr Zoom;

public Parametr Exposure;

public Parametr Iris;

public Parametr Focus;

};

const string DLLNAME = "USBCameraProxy.dll";

[DllImport(DLLNAME)]

public static extern int getDeviceCount();

[DllImport(DLLNAME, CharSet = CharSet.Unicode)]

public static extern int getDeviceInfo(

int number,

StringBuilder aPtrFriendlyName,

StringBuilder aPtrSymbolicName

);

[DllImport(DLLNAME, CharSet = CharSet.Unicode)]

public static extern int getDeviceResolution(

int number,

StringBuilder aPtrSymbolicName,

[ Out] Resolution aPtrResolution

);

[DllImport(DLLNAME, CharSet = CharSet.Unicode)]

public static extern int setupDevice(

StringBuilder aPtrSymbolicName,

int aResolutionIndex,

int aCaptureVideoFormat,

int ReadMode

);

[DllImport(DLLNAME)]

public static extern int closeAllDevices();

[DllImport(DLLNAME, CharSet = CharSet.Unicode)]

public static extern int closeDevice(

StringBuilder aPtrSymbolicName

);

[DllImport(DLLNAME, CharSet = CharSet.Unicode)]

public static extern int readPixels(

StringBuilder aPtrSymbolicName,

IntPtr aPtrPixels

);

[DllImport(DLLNAME, CharSet = CharSet.Unicode)]

public static extern int getCamParametrs(

StringBuilder aPtrSymbolicName,

[Out] CamParametrs lpCamParametrs

);

[DllImport(DLLNAME, CharSet = CharSet.Unicode)]

public static extern int setCamParametrs(

StringBuilder aPtrSymbolicName,

[In] CamParametrs lpCamParametrs

);

}

public class Device

{

public IList<VideoInputWrap.Resolution> mResolutionList = new List<VideoInputWrap.Resolution>();

public StringBuilder mFriendlyName = new StringBuilder(256);

public StringBuilder mSymbolicName = new StringBuilder(256);

public override string ToString()

{

return mFriendlyName.ToString();

}

}

The name of the C wrapper library is defined by the constant const string DLLNAME = "USBCameraProxy.dll".

The VideoInputWrap marshaling wrapper can be used as follows.

The following code fills the list of webcam devices:

List<Device> mDeviceList = new List<Device>();

StringBuilder aFriendlyName = new StringBuilder(256);

StringBuilder aSymbolicName = new StringBuilder(256);

var lcount = VideoInputWrap.getDeviceCount();

if(lcount > 0)

{

for (int lindex = 0; lindex < lcount; ++lindex )

{

var lresult = VideoInputWrap.getDeviceInfo(lindex, aFriendlyName, aSymbolicName);

if (lresult <= 0)

continue;

Device lDevice = new Device();

lDevice.mFriendlyName.Append(aFriendlyName.ToString());

lDevice.mSymbolicName.Append(aSymbolicName.ToString());

for (var lResolutionIndex = 0; lResolutionIndex < lresult; ++lResolutionIndex)

{

VideoInputWrap.Resolution k = new VideoInputWrap.Resolution();

var lResolutionResult = VideoInputWrap.getDeviceResolution(lResolutionIndex, aSymbolicName, k);

if (lResolutionResult < 0)

continue;

lDevice.mResolutionList.Add(k);

}

mDeviceList.Add(lDevice);

}

}

The following code starts capturing from the device aDevice, using the resolution with the index aResolutionIndex:

private IntPtr ptrImageBuffer = IntPtr.Zero;

private DispatcherTimer m_timer = new DispatcherTimer();

private StringBuilder mSymbolicName;

private BitmapSource m_BitmapSource = null;

public VideoInputWrap.CamParametrs m_camParametrs;

private Device m_Device;

public void startCapture(Device aDevice, int aResolutionIndex)

{

mSymbolicName = new StringBuilder(aDevice.mSymbolicName.ToString());

int lbufferSize = (3 * aDevice.mResolutionList[aResolutionIndex].width * aDevice.mResolutionList[aResolutionIndex].height);

ptrImageBuffer = Marshal.AllocHGlobal(lbufferSize);

var lr = VideoInputWrap.setupDevice(aDevice.mSymbolicName, aResolutionIndex, 0, 1);

m_camParametrs = new VideoInputWrap.CamParametrs();

m_Device = aDevice;

var lres = VideoInputWrap.getCamParametrs(aDevice.mSymbolicName, m_camParametrs);

m_timer.Tick += delegate

{

var result = VideoInputWrap.readPixels(mSymbolicName, ptrImageBuffer);

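// Any result other than 41 is treated as a failed read: stop polling and keep the last image.

if (result != 41)

{

m_timer.Stop();

return;

}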

m_BitmapSource = FromNativePointer(ptrImageBuffer, aDevice.mResolutionList[aResolutionIndex].width,

aDevice.mResolutionList[aResolutionIndex].height, 3);

displayImage.Source = m_BitmapSource;

};

m_timer.Interval = new TimeSpan(0, 0, 0, 0, 1);

m_timer.Start();

}

[DllImport("kernel32.dll", EntryPoint = "RtlMoveMemory")]

public static extern void CopyMemory(IntPtr Destination, IntPtr Source, uint Length);

public static BitmapSource FromNativePointer(IntPtr pData, int w, int h, int ch)

{

PixelFormat format = PixelFormats.Default;

if (ch == 1) format = PixelFormats.Gray8;

if (ch == 3) format = PixelFormats.Bgr24;

if (ch == 4) format = PixelFormats.Bgr32;

WriteableBitmap wbm = new WriteableBitmap(w, h, 96, 96, format, null);

// The back buffer may only be written between Lock() and Unlock().

wbm.Lock();

CopyMemory(wbm.BackBuffer, pData, (uint)(w * h * ch));

wbm.AddDirtyRect(new Int32Rect(0, 0, wbm.PixelWidth, wbm.PixelHeight));

wbm.Unlock();

return wbm;

}

This solution works well and allows the library to be used from old projects on .NET 3.5. The C wrapper project for Visual Studio 2013 can be downloaded from the link USBCameraProxy.zip

Update for supporting the x64 Windows platform and improving multithread synchronisation.

Several comments pointed out a problem with compiling for the x64 platform. The cause is that the Windows SDK provides different InterlockedIncrement functions for x86 and x64: on x64, InterlockedIncrement has no overload for an argument of type long, which is the type I used in my x86 version. It was suggested that I replace the long type with unsigned long.

However, I decided to do more than simply add the unsigned keyword: I replaced the AddRef/Release implementation with a C++11 standard library solution:

class VideoCaptureSink

{

...

std::atomic<unsigned long> refCount;

};

STDMETHODIMP_(ULONG) VideoCaptureSink::AddRef()

{

return ++refCount;

}

STDMETHODIMP_(ULONG) VideoCaptureSink::Release()

{

ULONG cRef = --refCount;

if (cRef == 0)

{

delete this;

}

return cRef;

}

As you can see, the result is the same, but it no longer depends on the semantics of the Windows SDK. I think this is a more correct solution to the Windows SDK problem.
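The same counting pattern can be tried outside of Media Foundation. The listing below is a minimal sketch, not the library's code: RefCounted and demoRefCount are hypothetical names used only for illustration, and the object starts with a count of 1, which is an assumption about how the owner creates it.

```cpp
#include <atomic>
#include <cassert>

// Hypothetical stand-in for a COM-style object; it is not part of videoInput.
class RefCounted
{
public:
    explicit RefCounted(bool* destroyedFlag)
        : refCount(1), destroyed(destroyedFlag) {}

    unsigned long AddRef()
    {
        // Pre-increment on std::atomic is an atomic read-modify-write
        // and returns the new value.
        return ++refCount;
    }

    unsigned long Release()
    {
        // Keep the decremented value in a local variable: after
        // "delete this" no member may be touched any more.
        unsigned long count = --refCount;
        if (count == 0)
        {
            delete this;
        }
        return count;
    }

private:
    // Private destructor: only Release() can destroy the object.
    ~RefCounted() { *destroyed = true; }

    std::atomic<unsigned long> refCount;
    bool* destroyed;
};

// Small walk-through; returns true when every observed count matches.
bool demoRefCount()
{
    bool destroyed = false;
    RefCounted* object = new RefCounted(&destroyed);      // count is 1
    bool ok = (object->AddRef() == 2);                    // count is 2
    ok = ok && (object->Release() == 1) && !destroyed;    // still alive
    ok = ok && (object->Release() == 0) && destroyed;     // destroyed here
    return ok;
}
```

Because the counter is a std::atomic<unsigned long>, the same source compiles for x86 and x64 without relying on any particular InterlockedIncrement overload.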

While resolving the problem with porting to the x64 platform, I also decided to improve multithread synchronisation by replacing the Windows SDK code with a C++11 standard library solution.

With the Windows SDK it has this general form:

HANDLE syncEvent;

syncEvent = CreateEvent(NULL,FALSE,FALSE,NULL);

{

dwWaitResult = WaitForSingleObject(syncEvent, 1000);

if (dwWaitResult == WAIT_TIMEOUT)

{

}

}

{

if(state)

{

SetEvent(syncEvent);

}

else

{

ResetEvent(syncEvent);

}

}

With the C++11 standard library it has this general form:

std::condition_variable mConditionVariable;

std::mutex mMutex;

{

std::unique_lock<std::mutex> lock(mMutex);

auto lconditionResult = mConditionVariable.wait_for(lock, std::chrono::seconds(1));

if (lconditionResult == std::cv_status::timeout)

{

}

}

{

if(state)

{

mConditionVariable.notify_all();

}

}

As you can see, the new solution is more object-oriented and looks nicer.
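One difference is worth keeping in mind when moving from events to condition variables: a Win32 event stores its signaled state, while std::condition_variable does not, so a notification sent while nobody is waiting is lost, and wait_for may also wake up spuriously. A common way to handle both cases is to pair the condition variable with a flag and use the predicate overload of wait_for. The listing below is a minimal sketch under that assumption; AutoResetSignal and demoSignal are hypothetical names and are not part of videoInput.

```cpp
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <mutex>

// Hypothetical helper that mimics an auto-reset Win32 event.
class AutoResetSignal
{
public:
    // Counterpart of SetEvent: remember the signal and wake one waiter.
    void set()
    {
        {
            std::lock_guard<std::mutex> lock(mMutex);
            mSignaled = true;
        }
        mConditionVariable.notify_one();
    }

    // Counterpart of ResetEvent: forget a pending signal.
    void reset()
    {
        std::lock_guard<std::mutex> lock(mMutex);
        mSignaled = false;
    }

    // Counterpart of WaitForSingleObject:
    // returns true if signaled, false on timeout.
    bool waitFor(std::chrono::milliseconds timeout)
    {
        std::unique_lock<std::mutex> lock(mMutex);
        // The predicate is re-checked after every wake-up, so spurious
        // wake-ups and signals sent before the wait are both handled.
        bool signaled = mConditionVariable.wait_for(
            lock, timeout, [this] { return mSignaled; });
        if (signaled)
        {
            mSignaled = false; // auto-reset, like CreateEvent(..., FALSE, ...)
        }
        return signaled;
    }

private:
    std::condition_variable mConditionVariable;
    std::mutex mMutex;
    bool mSignaled = false;
};

// Single-threaded walk-through; returns true when all checks pass.
bool demoSignal()
{
    AutoResetSignal signal;
    // Nothing was signaled yet, so the wait times out.
    bool timedOut = !signal.waitFor(std::chrono::milliseconds(20));
    // A signal set before the wait is not lost.
    signal.set();
    bool received = signal.waitFor(std::chrono::milliseconds(20));
    // The signal was consumed, so the next wait times out again.
    bool consumed = !signal.waitFor(std::chrono::milliseconds(20));
    return timedOut && received && consumed;
}
```

In a capture pipeline, set() would typically be called from the thread that delivers a frame and waitFor() from the thread that reads it.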

I have tested the new solution on the x86 and x64 platforms and it works well. The new version can be downloaded from the link: videoInputVS2013Express_for_Desktop_x64.zip

Update for multisink output.

The main goal of the code presented in this article is a simple and flexible solution for grabbing raw image data from a webcam. In many projects the image is then processed for specific purposes. However, Media Foundation, on which the code is built, is more powerful than that. To extend the capabilities of the library, I decided to extend its interface. I replaced the old method setupDevice:

ResultCode::Result setupDevice(

DeviceSettings deviceSettings,

CaptureSettings captureSettings);

with the new version:

ResultCode::Result setupDevice(

DeviceSettings deviceSettings,

CaptureSettings captureSettings,

std::vector<TopologyNode> aNodesVector = std::vector<TopologyNode>(),

bool aIsSampleGrabberEnable = true);

The new version of the method setupDevice reflects the main change: the addition of a vector of TopologyNode structures. The structure looks like this:

struct IMFTopologyNode;

struct TopologyNode

{

IMFTopologyNode* mPtrIMFTopologyNode;

bool mConnectedTopologyNode;

TopologyNode():

mPtrIMFTopologyNode(nullptr),

mConnectedTopologyNode(false)

{}

};

This structure is a container for a Media Foundation object with the interface IMFTopologyNode, which can be "injected" into the pipeline that captures video from the webcam.

In the old version of videoInput, the connection, or topology, between the source and the sample grabber can be shown by the following schema:

The topology has two nodes, source and sink, and Media Foundation resolves it by adding the required transforms. This schema is simple, but the stream is directed to only one consuming node. A developer may need a more flexible solution with several consumers, for example: grabbing the raw image, showing live video in a window, injecting a watermark into the video, saving live video to a video file, or broadcasting live video over the network. Such processing needs a video capture library that can copy the source stream into many sinks: multisink output.

In the new version of videoInput, the topology between the source and many sinks can be shown by the following schema:

The Media Foundation framework has a built-in topology node, the TeeNode, which allows many output nodes to be connected to one output pin of the source node. The new version of videoInput has one built-in and already connected output node, the Sample Grabber Sink, which captures individual images, but many additional nodes can be connected to receive the video stream from the webcam. Note that injected nodes need not be output nodes such as a video renderer or a video file writer sink; they can also be transform nodes that process the image, for example to draw a watermark, smooth the image, or rescale it.

An example of using the new version of videoInput can be downloaded from the link: videoInputVS2013Express_for_Desktop_x64_multisink_version.zip

The following listings show how to work with the new method:

std::vector<TopologyNode> aNodesVector;

auto lhresult = createOutputNode(lhwnd, aNodesVector);

if (FAILED(lhresult))

break;

resultCode = videoInput::getInstance().setupDevice(deviceSettings, captureSettings, aNodesVector);

In this code, the vector aNodesVector is created and filled in the function createOutputNode, and then passed to the videoInput library for injection into the Media Foundation topology. The code that fills the vector of nodes is presented in the next listing:

HRESULT createOutputNode(

HWND aHWNDVideo,

std::vector<TopologyNode> &aNodesVector)

{

HRESULT lhresult = S_OK;

CComPtrCustom<IMFMediaTypeHandler> lHandler;

CComPtrCustom<IMFActivate> lRendererActivate;

CComPtrCustom<IMFTopologyNode> aNodeMFT;

CComPtrCustom<IMFTopologyNode> aNodeRender;

do

{

WaterMarkInjectorMFT *lMFT = new WaterMarkInjectorMFT();

lhresult = MFCreateTopologyNode(MF_TOPOLOGY_TRANSFORM_NODE, &aNodeMFT);

if (FAILED(lhresult))

break;

lhresult = aNodeMFT->SetObject(lMFT);

if (FAILED(lhresult))

break;

lhresult = MFCreateVideoRendererActivate(aHWNDVideo, &lRendererActivate);

if (FAILED(lhresult))

break;

lhresult = MFCreateTopologyNode(MF_TOPOLOGY_OUTPUT_NODE, &aNodeRender);

if (FAILED(lhresult))

break;

lhresult = aNodeRender->SetObject(lRendererActivate);

if (FAILED(lhresult))

break;

lhresult = MediaFoundation::getInstance().connectOutputNode(0, aNodeMFT, aNodeRender);

if (FAILED(lhresult))

break;

TopologyNode lMFTTopologyNode;

lMFTTopologyNode.mPtrIMFTopologyNode = aNodeMFT.Detach();

lMFTTopologyNode.mConnectedTopologyNode = true;

aNodesVector.push_back(lMFTTopologyNode);

TopologyNode lRenderTopologyNode;

lRenderTopologyNode.mPtrIMFTopologyNode = aNodeRender.Detach();

lRenderTopologyNode.mConnectedTopologyNode = false;

aNodesVector.push_back(lRenderTopologyNode);

} while (false);

return lhresult;

}

In this function, the argument HWND aHWNDVideo is used to obtain the renderer activation object via the function MFCreateVideoRendererActivate. The activation object is set on the render node aNodeRender. In addition, the function creates the Media Foundation Transform class WaterMarkInjectorMFT, which injects a watermark into the live video; the transform is set on the transform node aNodeMFT. The two nodes are connected by the method connectOutputNode and pushed into the node vector aNodesVector. Note that the structure TopologyNode has the variable mConnectedTopologyNode, a flag that tells videoInput whether the node must be connected to its TeeNode. In this code, aNodeRender is not connected to the TeeNode because it is already connected to aNodeMFT, so its mConnectedTopologyNode is set to false. aNodeMFT, however, is not yet connected and must be connected to the TeeNode of videoInput, so its mConnectedTopologyNode is set to true. As a result, you can write your own Media Foundation code and easily inject it into the videoInput library.
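Because the name mConnectedTopologyNode is easy to misread, the meaning of the flag can be illustrated without Media Foundation at all. The listing below is a platform-independent sketch that only mirrors the rule described above; selectTeeOutputs is a hypothetical helper, and how videoInput actually walks the vector internally is an assumption.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct IMFTopologyNode; // opaque here; the real type comes from Media Foundation

// Same fields as the structure from the article.
struct TopologyNode
{
    IMFTopologyNode* mPtrIMFTopologyNode = nullptr;
    bool mConnectedTopologyNode = false;
};

// Hypothetical helper: returns the indices of the nodes that are marked
// for connection to the TeeNode (flag set to true). Nodes with the flag
// set to false are assumed to be already linked to another injected node.
std::vector<std::size_t> selectTeeOutputs(const std::vector<TopologyNode>& nodes)
{
    std::vector<std::size_t> result;
    for (std::size_t index = 0; index < nodes.size(); ++index)
    {
        if (nodes[index].mConnectedTopologyNode)
        {
            result.push_back(index);
        }
    }
    return result;
}
```

For the vector built in createOutputNode, only the first element (the watermark transform node) would be selected; the render node is reached through the transform node.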

Finally, note the last argument of the method setupDevice, bool aIsSampleGrabberEnable = true. This flag enables the connection of the internal sample grabber sink to the TeeNode of the topology. It is enabled by default, but some projects do not need to process the raw image data, and connecting the sink would then be a waste of resources, so it can be disabled. In fact, many operations on the raw image can be moved into a custom Media Foundation Transform, and such solutions can be more flexible and efficient.

Including the HorizontMirroring transformation in the sample grabber topology

This is a small update that resolves the problem of bottom-up capture on some webcams. Some webcams write the image into the output stream in a row order different from the Windows default, which flips the image about its horizontal axis, so it appears upside down. The problem can be detected by checking the MF_MT_DEFAULT_STRIDE attribute manually and corrected by the developer. However, I decided to write a dedicated transform and include it in the video capture topology. The new code can be downloaded from the link: videoInputVS2013Express_for_Desktop_bottom_up_detection_version.zip.
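For readers who prefer the manual correction, the idea can be sketched as follows. A negative stride, such as the value a media type may report in MF_MT_DEFAULT_STRIDE, means that the rows are stored bottom-up, so the rows have to be copied in reverse order. The listing is an illustration only and is not the code of the transform in the download: toTopDown is a hypothetical helper, and it assumes a tightly packed buffer in which every row occupies exactly the absolute value of the stride.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical helper: returns a copy of the frame in top-down row order.
// stride is the signed distance in bytes between rows; a negative value
// marks a bottom-up image. An empty vector is returned for invalid input.
std::vector<std::uint8_t> toTopDown(const std::vector<std::uint8_t>& frame,
                                    std::size_t height,
                                    long stride)
{
    const std::size_t rowBytes =
        static_cast<std::size_t>(stride < 0 ? -stride : stride);
    if (rowBytes == 0 || frame.size() < rowBytes * height)
    {
        return {};
    }

    std::vector<std::uint8_t> result(rowBytes * height);
    for (std::size_t y = 0; y < height; ++y)
    {
        // For a bottom-up image the first stored row is the bottom row.
        const std::size_t sourceRow = (stride < 0) ? (height - 1 - y) : y;
        std::memcpy(result.data() + y * rowBytes,
                    frame.data() + sourceRow * rowBytes,
                    rowBytes);
    }
    return result;
}
```

With a positive stride the function returns an unchanged copy, so it can be applied to every frame regardless of the orientation that the camera reports.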

Points of Interest

I developed this project on the basis of the TDD strategy. I think this will help other developers to resolve problems they meet with this code.

History

This project is hosted in the CodeProject Git repository: videoInput.

This article is based on the old article.

06.12.2014 - Update project on Visual Studio 2013 Express for Desktop. Add new test example on OpenGL visualisation.

11.12.2014 - Update project on C++/CLI, C#, WPF

10.04.2015 - Update project on C# marshaling of C wrapper

13.04.2015 - Update version for supporting of x64 Windows platform and improving of multithread synchronisation.

11.05.2015 - Update version for supporting of multisink output.

25.05.2015 - Update version for fixing of bottom-up distortion.