Introduction

Everyone agrees that automated testing is a good thing and that we should all write unit tests. We read articles and blogs to keep up-to-date on what’s going on in the unit test world, so that we can sound cool talking to peers at lunch. But when we actually sit down and try to write automated tests or unit tests ourselves: “Naaah, this is a waste of time, let’s ask QA to test it; that’s a much more reliable and guaranteed way to test this. What’s the point of testing these functions when there are so many other functions that we should unit test first?” Had such a moment yourself or with someone else? Read on.

How It Started

I had a conversation with our development lead Rob (using a highly generic name since my last post caused some trouble), who runs “the show” in our engineering team. As usual, there was reluctance to introduce automated tests (AT) and unit tests (UT) into the regular development schedule. Rob also had valid points about the lack of powerful tools for doing AT on AJAX websites. He was also unsure about ‘what’ and ‘how’ to AT in our code. The goal was to test real user actions that execute both business and rendering logic, not just database failures. The discussion has a lot of useful information that will help you make the right decision when you want to sell AT to your ASP.NET and/or AJAX development team, and finally to higher management, so that you can buy enough time for the noble effort.

Friday, Jan 2007 – Hallway

Omar: Hey Rob, we need to start doing AT, at least on our web services. We are wasting way too much time on manual QA. The QA guys just told me they were up last night for the regression test. They are seeing obvious bugs coming from Dev that should have been caught on the dev PC. Devs are complaining that QA reports bugs too late and makes them work extra hours. The PM guys say they have been coming to the office at 9 AM and going home at 11 PM for the last 3 months. We gotta stop this. It's been the same story for the last 5 sprints. These guys are burning themselves out, looking for other jobs, demanding raises and so on. We gotta get tests done a lot faster and stop obvious bugs from reaching QA. We need to automate the tests so that devs can run an automated regression test suite from their PC before handing over to QA. That saves QA from repeating the same manual test effort every month. QA needs to run more tests the automated way rather than manually, so they can report bugs quickly enough for devs to fix them within regular working hours. Do you agree?

Rob: Sure, that sounds fun. I will do a feasibility check and see how we can chip this into our next sprint.

One Month Later... In Washroom (Facing the Loo)

Omar: Hey Rob, let’s start doing AT. I didn’t see any tests last month. Can we start from this sprint?

Rob: Sure, we can start from this sprint. Let me find out which tool is the right one for us.

Another Month Later... In Meeting Room

Omar: Hey Rob, haven’t seen any ATs in the solution so far. Let’s seriously start doing AT. Did you make a plan for how you want to start AT'ing the webservices?

Rob: Yeah, I did some digging around and found some tools. But most of them are for non-AJAX sites, where you can programmatically hit a URL or do an HTTP POST to a URL. You can also record button clicks and form posts from the browser. There’s Visual Studio’s Web Test, which does a pretty good job recording regular ASP.NET sites but a poor one on AJAX sites. Moreover, you need to buy the Team Suite edition to get that Web Test feature. Besides, recording tests and playing them back really does not help us, because all those tests contain hard-coded data. We can’t repeat a particular step many times with random data, at least not using any off-the-shelf tools. We need to test things carefully and systematically using random data sets, and sometimes real data from the database. A common scenario is loading 100 random user accounts from the database and programmatically logging those users into their portal. Then we test whether the portal shows each user’s personalized data. All of this needs to be done via AJAX, without any browser redirect or form post. There’s one page that lets the user log in using an AJAX call and then dynamically renders the portal on the same page after a successful login. The UI is rendered by JavaScript, so only a real browser can render it, and we have to test the output by looking at the browser.
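The random-data scenario Rob describes is a data-driven test: sample many accounts, run the same check for each, and collect the failures. Below is a minimal sketch of that shape. It is written in Python for brevity rather than the team's C#. `Account`, `ACCOUNTS` and `login_and_render_portal` are hypothetical stand-ins. A real suite would load the rows from the database and drive a browser through the AJAX login.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class Account:
    user_id: int
    name: str
    email: str
    balance: float


# Hypothetical stand-in for the accounts table; a real suite would query the database.
ACCOUNTS = [
    Account(i, f"User {i}", f"user{i}@example.com", i * 10.5)
    for i in range(1, 501)
]


def login_and_render_portal(account: Account) -> str:
    """Hypothetical stand-in for 'log in through the AJAX call and return the
    portal HTML the browser rendered'. Here it simply fakes that HTML."""
    return (f"<div id='portal'><span class='name'>{account.name}</span>"
            f"<span class='email'>{account.email}</span>"
            f"<span class='balance'>{account.balance:.2f}</span></div>")


def run_random_login_test(sample_size: int, seed: int) -> List[int]:
    """Log in a random sample of accounts; return the ids whose portal did not
    show their own name, email and balance."""
    rng = random.Random(seed)  # fixed seed so a failing run can be replayed exactly
    failed = []
    for account in rng.sample(ACCOUNTS, sample_size):
        html = login_and_render_portal(account)
        expected = (account.name, account.email, f"{account.balance:.2f}")
        if not all(value in html for value in expected):
            failed.append(account.user_id)
    return failed


print(run_random_login_test(sample_size=100, seed=2007))  # [] with the fake portal
```

Seeding the generator keeps each run's data random while letting a failing run be reproduced exactly, as long as the seed is logged with the results.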

Omar: I see, so you can’t use Visual Studio Web Test to run ATs on a browser, because it does not let you access the HTML that the browser renders. You can only test the HTML returned by the webserver. As we are an AJAX website, most of our stuff is done by JavaScript: it calls the webservices and renders the UI. Hmm, let me think about how we can do this using VS. We can at least hit the webservices and see if they are returning the right JSON. This way we can test pretty much the entire webservice, business and data access layers. But it does not really replace the need for manual QA, since there’s a lot of rendering logic in JavaScript.
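Omar's fallback is to hit the webservices and check the JSON they return. That can be sketched as a small contract check, again in Python for brevity. The field names and types in `EXPECTED_FIELDS` are hypothetical. The HTTP call that produces `body` is left out; in a real suite it would call the service endpoint and pass the response text in.

```python
import json
from typing import List

# Hypothetical contract for a "get customer" webservice: field name -> expected type(s).
EXPECTED_FIELDS = {"id": int, "name": str, "email": str, "balance": (int, float)}


def check_customer_json(body: str, customer_id: int) -> List[str]:
    """Return the problems found in the JSON a webservice returned (empty list = pass)."""
    try:
        data = json.loads(body)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level JSON value is not an object"]
    problems = []
    for field, expected_type in EXPECTED_FIELDS.items():
        if field not in data:
            problems.append(f"missing field '{field}'")
        elif not isinstance(data[field], expected_type):
            problems.append(f"field '{field}' has unexpected type {type(data[field]).__name__}")
    if data.get("id") != customer_id:
        problems.append("response is for the wrong customer")
    return problems


good = '{"id": 42, "name": "Alice", "email": "alice@example.com", "balance": 10.5}'
bad = '{"id": 7, "name": "Bob"}'
print(check_customer_json(good, 42))  # []
print(check_customer_json(bad, 42))
```

Returning a list of problems instead of failing on the first one means a single run reports everything wrong with a response.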

Rob: Now there’s a new project called Watin that seems promising. You can write C# code that tells a browser to click a button or run some JavaScript. Then you check what the browser rendered in its DOM and run your tests. But it’s still in its infancy. So, there’s really no good tool for AT'ing AJAX sites. Let’s stick to manual QA, which is proven to be more accurate than anything developers can come up with. We can hand over a set of data to QA and ask them to enter it and check the result.

Omar: We definitely need to figure out ways to reduce our dependency on manual QA. It simply does not scale. Every sprint, we have to freeze code and then hand over to QA. They run their gigantic test scripts for a whole day. The next day, we get bug reports to fix. If there’s a severe regression bug, we have to either cancel the sprint or work the whole night to fix it and run overnight QA to meet the deployment date. For the last year, every sprint has ended with some bug that made dev and QA work overnight. We have to empower our developers with an AT tool so that they can run the whole regression test script automatically.

Rob: You are talking about a very long project then. Writing enough ATs for a complete regression test is going to take more than a month. We have to find the right set of tools, plan what areas to AT and how, then get dev and QA to work together and prepare the right tests. And then we have to keep the test suite up-to-date after every sprint to cover new features and catch new bugs.

Omar: Yes, this is certainly a complex project. We have to get to a stage where a developer can run ATs instead of asking QA to test every task for regression bugs. In fact, we should have an automated build that runs all ATs and does the regression test for us automatically after every checkin.

Rob: We already have automated build and deploy, so that’s done. We need to add automated AT to it. Seriously, given our product size, it is absolutely impossible to write enough ATs to run the entire regression test automatically. It’s not worth the time and money. Our QA team is doing fine. They can take a day off after deployment when they work overnight.

Omar: Actually, the QA team is on the verge of quitting. They seem to have an endless workload. After deployment, they have to do a manual regression test on the production site to ensure nothing broke on production. While they are at it, they have to participate in sprint initiation meetings and write test plans. When they are about to complete that, devs check in stuff and ask for regression tests of different modules. Before they can finish that, we reach code freeze and they have to finish all those task-level tests as well as the entire regression test. So they end up working round-the-clock for several days every sprint. They simply can’t take it anymore.

Rob: How is that different from our life? After a sleepless night on deployment day, the next day we have to attend an 8-hour sprint planning meeting. Then we have to start working on the tasks the very next day and reach code freeze within a week. Then QA comes up with so many bugs at the last moment. We have to work round-the-clock for the last 3 days of the sprint to get those bugs fixed. Then, after a nerve-wracking deployment day, we have to stay up at night waiting for QA to report any critical bug so we can fix it immediately on production. We are on the brink of destruction as well.

Omar: That’s understood. The whole team is surely being pushed to its limit. That’s why we urgently need automated tests: they address the problems of both the dev and QA teams. Devs will get tests done faster, so they don’t get bug reports at the very end and then work overnight to fix them. Similarly, we relieve the QA team’s continuous overwork by letting the system do the bulk of their testing.

Rob: This is going to kill the team for sure. We have so many product features and bug fixes to do every sprint. Now, if we ask everyone to start writing ATs for every task they do, it’s a big burden. We can’t do both at the same time.

Omar: Agreed. We have to cut down on product features or bug fixes. We have to make room in every sprint to write ATs.

Rob: Good luck with that. Let’s see how you convince product team.

Omar: First let me convince you. Are you convinced that we should do it?

Rob: Not yet. I don’t really see it bearing fruit in the near future, even after two months. There are so many features we have to build and so many customers to ship to; we just can’t write enough ATs to really reduce the QA load. It’ll just be a distraction and a delay in every sprint, heck, in every task.

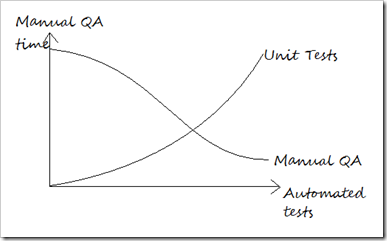

Omar: Let me show you a graph which I believe is going to make an impact:

Figure: Manual QA effort goes down after a while, once automated tests kick in

So you see, the more automated tests we write, the less time we spend on manual QA. That time can be spent on new tests or task-level tests. That raises the quality of every new feature shipped and drastically reduces the number of new bugs shipped to production. Thus we get fewer and fewer bugs after every successful sprint.

Rob: Ya, I get it, you don’t need to convince me of this. But I don’t see the benefit from an overall gain perspective. Are we shipping a better product faster over the next two months? We aren’t. We are shipping fewer features and bug fixes because we spend a lot of time writing ATs that have no impact on the end user.

Omar: Let me see if your assumption is correct:

Figure: QA can spend more time on new features, when automated regression test can catch bugs

You see here, the more automated tests kick in, the more time QA can spend on new features or new bugs. I agree that the speed of testing new features/bugs decreases in the first one or two sprints, but then it gradually picks up and gets even better. In the beginning, there’s a big overhead of getting started with automated tests. But as sprints go by, the number of ATs to write gradually stabilizes and soon becomes proportional to the new features/bugs. No more time is spent writing tests for old stuff. So, after four sprints, the number of ATs you write is exactly what is needed for the new tasks done in that sprint.

Rob: What if we just don’t do any automated tests and keep things manual? What does the graph look like?

Figure: Without automated regression tests, the time spent on regression testing increases every sprint

Omar: The future looks quite gloomy. As we keep adding stuff to the product, we will spend more and more time on regression testing, until at some point QA ends up doing regression testing full time. They will not have time for new features, and a lot more new bugs will slip past QA to production due to lack of attention.
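The growth Omar describes is simple arithmetic. The toy model below (Python) uses made-up numbers, none of which come from real data:

- 200 existing regression cases
- 40 new cases per sprint
- 6 minutes per manual case
- 80 QA hours per sprint available for regression

Manual regression hours in sprint n are then (200 + 40n) × 6 / 60 = 20 + 4n. Because that grows linearly, it eventually exceeds any fixed QA capacity.

```python
from typing import Optional


def manual_regression_hours(sprint: int, base_cases: int, new_cases_per_sprint: int,
                            minutes_per_case: float) -> float:
    """Hours needed to run the whole regression suite by hand in a given sprint."""
    cases = base_cases + new_cases_per_sprint * sprint
    return cases * minutes_per_case / 60


def first_sprint_over_capacity(capacity_hours: float, max_sprints: int = 1000,
                               **model) -> Optional[int]:
    """First sprint in which manual regression alone exceeds QA capacity, or None."""
    for sprint in range(max_sprints + 1):
        if manual_regression_hours(sprint, **model) > capacity_hours:
            return sprint
    return None


# Hypothetical parameters, chosen only to illustrate the trend.
model = dict(base_cases=200, new_cases_per_sprint=40, minutes_per_case=6)
print(first_sprint_over_capacity(80, **model))  # 16
# If the existing cases are automated, QA only runs the 40 new cases by hand:
print(40 * 6 / 60)  # 4.0 hours per sprint
```

With these assumed numbers, manual regression fills QA's whole capacity at sprint 15 and exceeds it from sprint 16 on. Keeping only the new cases manual holds the hand-run load flat at 4 hours per sprint.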

Rob: OK, how do we start?

Omar: The first step is to automate the regression tests so that we can get rid of that 24-hour marathon QA period at the end of every sprint. Moreover, I see too many devs asking QA to do regression tests here and there after they commit some tasks. So QA is always doing regression tests from the beginning to the end of each sprint. They should only test new things for which no automated test has been written yet, and let the automated tests cover the existing stuff.

Rob: This will be hard to sell to management. We are going to say, “Look, for the next month we will be half as productive because we want to spend time automating our QA process. From the second month on, we can run tests automatically and QA can have more free time.”

Omar: No, we say it like this: “We are going to spend 50% of our time automating QA for the next month so that QA can spend 50% more time testing new features. This will prevent 50% of new bugs from occurring every sprint. This will give developers 50% more time to build new features after one month.” We show them this graph:

Figure: Initially productivity drops, but then it increases a lot

Rob: Seems like this will sell. But for the first couple of sprints, we will be so dead slow that some of us might get fired. Think about it: from management’s point of view, the development team has suddenly become half as productive. They are building only a few new features, and bugs are not getting fixed as fast. Customers are screaming, investors are asking for their money back. It’s going to get really dirty. Do you want to take this risk?

Omar: I can see that this is a very hard decision to make. I know what the CEO will say: “We need to be twice as productive from tomorrow, otherwise we might as well pack our bags and go home. Tell me something that will make us twice as productive from tomorrow, not half as productive.” But you can see what will happen after a couple of months. The situation will be so bad that doing this then will be out of the question. We won’t be in a position to even propose it. Right now, at least, we can argue, and they are still willing to listen to long-term ideas. But picture the future:

- our QA team doing regression testing full time
- new buggy features going to production
- the ratio of new bugs increasing after every release
- more customers screaming
- half-baked features running on production

At that point we might have to shut down the company to save our lives.

Rob: We should have started doing automated tests from day one.

Omar: Yes, unfortunately we didn’t, and the more we delay, the harder it is going to get. I am sure we will write automated tests from day one in our next project, but we have to rescue this project.

Rob: OK, I am sold. How do we start? We surely need to AT the business and data access layers. Do we start writing ATs for every function in the DAL and business layer?

Omar: Writing ATs for the DAL seems pointless to me. Remember, we have very little time. We will get two sprints at most to write ATs. After that, we won’t have the luxury of spending half of our time writing ATs. We will have to go back to feature and bug fix mode. So, let’s spend the time wisely. How about we only test the business layer functions?

Rob: So, we test functions like CreateCustomer, EditCustomer, DeleteCustomer, AddNewOrder in the business layer?

Omar: Is that the top level of the business layer? Is there a higher-level layer that aggregates such CRUD-like functions?

Rob: In many areas, it’s CRUD-like: a dumb wrapper over the DAL with some minor validation and exception handling. But in some places there are complex functions that make a lot of different DAL calls. For example, UpdateCustomerBalance calls a lot of DAL classes to figure out the customer’s current balance.

Omar: Do webservices call multiple business classes? Do they act like another level that aggregates the business layer?

Rob: Yes, webservices are called mostly from user actions and they generally call multiple business layer classes to get the job done.

Omar: Where’s the caching done?

Rob: Webservice layer.

Omar: That sounds like a good place to start AT'ing. We can write a small number of ATs and still test the majority of the business layer and data access classes. We can also make sure the validation, caching and exception handling code is working fine.

Rob: But there are other tools and services that call the business layer. For example, we have a Windows service running that directly calls the business layer.

Omar: Can we refactor it to call webservices instead?

Rob: No, that’ll be like creating 10 more webservices. A lot more development effort.

Omar: OK, let’s write ATs for those business layer classes separately then. I suppose there will be some overlap: some webservice calls will test those business classes as well. But that’s fine. We *should be* AT'ing from the business layer. But we don’t have time, so we are starting one level up. Webservices aren’t really a “unit”, but you have to do what you have to do. At least testing the webservices will guarantee that all user actions are covered by ATs.

Rob: Yes, testing webservices will at least ensure user actions are tested. The background Windows service is not much of a headache for us. Now how do we test presentation logic? We have ASP.NET pages, and there’s all that JavaScript rendering code.

Omar: Let’s use Watin for that.

Rob: How do we make that part of an AT suite?

Omar: Watin integrates nicely with NUnit, mbUnit and xUnit. I like xUnit; it's the most pragmatic one out there. The SubSpec plugin for doing BDD with xUnit is especially awesome!

Rob: OK, so how do we AT the UI? A test function clicks the Login link, fills in the email and password boxes and clicks “OK”. Then it waits for a second and checks whether JavaScript has rendered the UI correctly?

Omar: Something like that. We can discuss later exactly how we test it. But how do you test whether the UI is rendered correctly?

Rob: We check the browser’s DOM to see whether the user’s data, like name, email, balance etc., is present in the HTML.
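The test Rob outlines has three steps: log in, wait, then look for the user's name, email and balance in the page. It is more reliable if it polls for the rendered data instead of sleeping for a fixed second, since an AJAX call can take longer than that. Below is a generic, hypothetical Python sketch. `FakePage` stands in for the browser, and the automation tool actually in use may already provide its own wait helpers.

```python
import time
from typing import Callable


def wait_until(condition: Callable[[], bool], timeout: float = 5.0,
               interval: float = 0.1) -> bool:
    """Poll `condition` until it returns True or `timeout` seconds elapse.

    Returns True if the condition was met in time, False on timeout."""
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


class FakePage:
    """Hypothetical stand-in for a browser page whose AJAX login finishes late."""

    def __init__(self, polls_before_render: int, user: dict):
        self.polls_left = polls_before_render
        self.user = user

    def html(self) -> str:
        self.polls_left -= 1
        if self.polls_left > 0:
            return "<div id='portal'>Loading...</div>"
        return "<div id='portal'>{name} {email} {balance}</div>".format(**self.user)


user = {"name": "Alice", "email": "alice@example.com", "balance": "10.50"}
page = FakePage(polls_before_render=3, user=user)


def portal_shows_user() -> bool:
    html = page.html()  # read the rendered DOM once per poll
    return all(value in html for value in user.values())


print(wait_until(portal_shows_user, timeout=2.0, interval=0.01))  # True
```

Polling returns as soon as the data shows up and only waits the full timeout when something is actually wrong. A fixed sleep either wastes time or fails on a slow response.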

Omar: Does that really test presentation logic? What if the data is misplaced? What if, due to a CSS error, it does not render correctly?

Rob: Well, there’s really no way to figure out whether things are rendered correctly. We can ask the QA guys to keep watching the UI while Watin runs the tests on the browser. You can see in the browser what Watin is doing.

Omar: OK, that’s one way, and certainly faster than QA doing every step by hand. But can it be done automatically, like matching the browser’s screen against a screenshot?

Rob: Yeah, we need AI for that.

Omar: Seriously, can we write a simple UI capture and comparison tool? Say we take a screenshot of the correct output and then clear out the areas that can vary. Then, when Watin runs the test, it takes a screenshot of the browser’s current view and matches it against that template. Here’s the idea:

Figure: Capturing screenshot of page and comparing with expected screenshot

Say this is a template screenshot that we want to match against the browser. We are testing Google’s search result page to ensure it always returns a particular result for a predefined query. When Watin runs the test and takes the browser to Google’s search result page, it takes a screenshot and ignores whatever is in the gray areas. Then it does a pixel-by-pixel match on the rest of the template. So no matter what the search query is and no matter what ads Google serves on top of the results, the test passes as long as the first result is the one we are looking for.
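At its core, Omar's masked comparison is a simple loop. The Python sketch below assumes both screenshots are already loaded as same-sized grids of RGB tuples; capturing and decoding them, for example with an imaging library, is not shown. It also assumes the grayed-out regions are given as rectangles. The `max_mismatches` parameter is an addition of this sketch, not something from the discussion.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]            # (r, g, b)
Image = List[List[Pixel]]               # image[y][x]
Rect = Tuple[int, int, int, int]        # (left, top, width, height) of a grayed-out area


def in_ignored_area(x: int, y: int, ignore: Sequence[Rect]) -> bool:
    """True if pixel (x, y) falls inside any grayed-out rectangle."""
    return any(left <= x < left + width and top <= y < top + height
               for left, top, width, height in ignore)


def mismatched_pixels(template: Image, actual: Image, ignore: Sequence[Rect]) -> int:
    """Count pixels that differ between the two screenshots outside the ignored areas."""
    if len(template) != len(actual) or any(
            len(t_row) != len(a_row) for t_row, a_row in zip(template, actual)):
        raise ValueError("screenshots must have the same dimensions")
    count = 0
    for y, (t_row, a_row) in enumerate(zip(template, actual)):
        for x, (t_px, a_px) in enumerate(zip(t_row, a_row)):
            if t_px != a_px and not in_ignored_area(x, y, ignore):
                count += 1
    return count


def screenshots_match(template: Image, actual: Image, ignore: Sequence[Rect],
                      max_mismatches: int = 0) -> bool:
    """Exact match by default; max_mismatches allows a few stray pixels if needed."""
    return mismatched_pixels(template, actual, ignore) <= max_mismatches


WHITE, BLACK = (255, 255, 255), (0, 0, 0)
template = [[WHITE] * 4 for _ in range(3)]   # a tiny 4x3 "screenshot"
actual = [row[:] for row in template]
ignore = [(0, 0, 2, 1)]                      # top-left 2x1 strip varies (think: ad banner)
actual[0][1] = BLACK                         # differs inside the ignored strip
print(screenshots_match(template, actual, ignore))   # True
actual[2][3] = BLACK                         # differs outside it
print(mismatched_pixels(template, actual, ignore))   # 1
```

This is the "pretty dumb" version: an exact per-pixel comparison. Anti-aliasing or font rendering differences between machines may call for a small mismatch allowance or a per-channel tolerance.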

Rob: As I said, this is AI stuff. Some highly sophisticated being will be matching two screenshots to say, “Yah, they more or less match, test passes.”

Omar: I think pretty dumb bitmap matching will work in many cases. Just an idea, think about it. This way we can test whether the CSS is giving us a pixel-perfect result. QA takes a screenshot of the expected output and then lets the automated test match it against the browser’s actual output.

Rob: OK, all good ideas. Let’s see how much we can do. We will start with webservice AT'ing. Then we will gradually move to Watin-based testing. Now it’s time to sell this proposal to the product team and then to the management team.

Omar: Yep, at least get the webservices tested; that will catch a lot of bugs before QA spends time on testing. The goal is to get as much testing as possible done by developers, really fast and automatically, rather than letting QA spend time on it. Also, we can run those webservice ATs in a load test suite and load test the entire webservice layer. That’ll give us a guarantee that our code is production quality and can survive high traffic.
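Reusing the functional webservice ATs as a load test can be as simple as running the same test many times concurrently and recording failures and timings. The Python sketch below does that with a thread pool. `get_customer_test` is a hypothetical stand-in for one real webservice AT.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import median
from typing import Callable, Tuple


def timed_run(test: Callable[[], None]) -> Tuple[bool, float]:
    """Run one test; return (passed, elapsed seconds). Any exception counts as a failure."""
    start = time.perf_counter()
    try:
        test()
        passed = True
    except Exception:
        passed = False
    return passed, time.perf_counter() - start


def load_test(test: Callable[[], None], concurrent_users: int, iterations: int):
    """Run the same functional test `iterations` times on `concurrent_users` threads."""
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        results = list(pool.map(lambda _: timed_run(test), range(iterations)))
    failures = sum(1 for passed, _ in results if not passed)
    durations = [elapsed for _, elapsed in results]
    return failures, median(durations), max(durations)


def get_customer_test() -> None:
    """Hypothetical stand-in for one webservice AT; a real one would call the service."""
    time.sleep(0.01)  # pretend network latency
    assert {"id": 42}["id"] == 42


failures, median_s, worst_s = load_test(get_customer_test, concurrent_users=10, iterations=50)
print(f"{failures} failures, median {median_s:.3f}s, worst {worst_s:.3f}s")
```

Threads suit this because each webservice test spends most of its time waiting on the network. A dedicated load-testing tool gives more control over ramp-up and reporting. The appeal of this approach is that the functional ATs get reused unchanged.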

Rob: Understood, see ya.

. . .

One Year Later – On a Code Freeze Day

Omar: Hey Rob, how are we doing this sprint?

Rob: Pretty good. 3672 ATs out of 3842 passed. We know why some of them failed. We can get them fixed pretty soon and run the complete regression tests once during lunch and once before we leave. QA finished testing the new features pretty well yesterday, and they can check again today. We got some of the new features covered by ATs as well. The rest we can finish next sprint, no worries.

Omar: Excellent. Enjoy your weekend. See you on Monday.