Introduction

With the new release of the .NET Framework, creating a sophisticated TCP server is as easy as eating a snack!

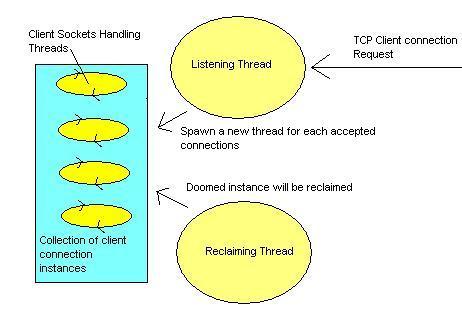

Basic features, including multi-threaded handling of client connections, collective management of client connections in a thread-safe collection object, and background reclaiming of all doomed connections, are all easy to implement.

Tools

This example was built on the Microsoft .NET Framework SDK version 1.0.3705 (with SP2); I suggest you use the latest version you can acquire. A simple editor is enough to begin coding. I wrote all the programs in C# and compiled them with the C# compiler that comes with the SDK. There are several source files, and the commands to produce the executables are as follows:

    CSC TcpServer.cs
    CSC TcpServer2.cs
    CSC TcpServer2b.cs
    CSC TcpServer3.cs
    CSC TcpClientTest.cs

TCP Classes

The framework comes with the very useful classes TcpListener and TcpClient in the namespace System.Net.Sockets, which provide most of what is needed to build TCP network applications. Just a few simple statements give the TCP server a basic listening service:

    TcpListener listener = new TcpListener(IPAddress.Any, portNum);
    listener.Start();
    TcpClient handler = listener.AcceptTcpClient();   // blocks until a client connects
    int i = ClientSockets.Add(new ClientHandler(handler));
    ((ClientHandler) ClientSockets[i]).Start();

The third line of the above statements lets the TCP server accept an incoming client connection; each connection yields a separate TcpClient instance representing that individual client. Since each client connection is handled in a separate thread, the class ClientHandler encapsulates a client connection, and a new thread is created and started for it (that is what the last two lines of the above statements do).
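
The article does not list ClientHandler itself, so the following is only a minimal sketch of what a thread-per-connection handler might look like. It assumes an echo protocol and reuses the member names Start, Stop and Alive from the snippets in this article; everything else (field names, the 1024-byte buffer, the echo behaviour) is hypothetical.

```csharp
using System;
using System.IO;
using System.Net;
using System.Net.Sockets;
using System.Threading;

// Hypothetical ClientHandler: one dedicated thread per connection.
// Start/Stop/Alive follow the article's usage; the echo logic is an assumption.
class ClientHandler
{
    private readonly TcpClient client;
    private readonly NetworkStream stream;
    private Thread thread;
    private volatile bool continueProcess;

    public ClientHandler(TcpClient client)
    {
        this.client = client;
        stream = client.GetStream();
    }

    public void Start()
    {
        continueProcess = true;
        thread = new Thread(Process);
        thread.IsBackground = true;
        thread.Start();
    }

    private void Process()
    {
        var buffer = new byte[1024];
        try
        {
            while (continueProcess)
            {
                int n = stream.Read(buffer, 0, buffer.Length); // blocks until data arrives
                if (n == 0) break;                             // peer closed the connection
                stream.Write(buffer, 0, n);                    // echo the bytes back
            }
        }
        catch (IOException) { }             // connection reset, or socket closed by Stop()
        catch (ObjectDisposedException) { } // stream already disposed by Stop()
        finally
        {
            client.Close();
        }
    }

    // True while the handler thread is still running.
    public bool Alive
    {
        get { return thread != null && thread.IsAlive; }
    }

    // Closing the socket unblocks a pending Read; then wait for the thread to finish.
    public void Stop()
    {
        continueProcess = false;
        client.Close();
        if (thread != null) thread.Join();
    }
}
```

A handler's thread stays alive until the peer disconnects or Stop() is called, which is exactly what a reclaiming thread can test through Alive.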

Thread Safe Collection

For managing client connections, especially when reclaiming doomed connections and shutting all of them down before the main TCP server thread ends, an ArrayList object is handy: guarded by a lock, it serves as a thread-safe collection bag for all client connections. The following lines show how thread-safe access to the ArrayList can be achieved:

    private static ArrayList ClientSockets = new ArrayList();

    lock (ClientSockets.SyncRoot) {
        int i = ClientSockets.Add(new ClientHandler(handler));
        ((ClientHandler) ClientSockets[i]).Start();
    }

The lock keyword acquires an exclusive lock on the object returned by the SyncRoot property of the ArrayList instance ClientSockets, so only one thread at a time can run the enclosed statements. ClientSockets is a collection of ClientHandler instances, each representing a TCP client connection.
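
To see why the lock matters, the sketch below (a hypothetical SyncRootDemo class, not part of the article's sources) has several threads add items to one ArrayList, each taking the lock on SyncRoot around its Add call, and then checks that no item was lost.

```csharp
using System;
using System.Collections;
using System.Threading;

// Hypothetical demo: concurrent Add calls on one ArrayList, serialized by
// locking SyncRoot. Without the lock, concurrent Adds can lose items or corrupt the list.
static class SyncRootDemo
{
    public static int AddConcurrently(int threads, int itemsPerThread)
    {
        var list = new ArrayList();
        var workers = new Thread[threads];
        for (int t = 0; t < threads; t++)
        {
            workers[t] = new Thread(() =>
            {
                for (int k = 0; k < itemsPerThread; k++)
                {
                    lock (list.SyncRoot)
                    {
                        list.Add(k);
                    }
                }
            });
            workers[t].Start();
        }
        foreach (Thread w in workers) w.Join();
        lock (list.SyncRoot) return list.Count;
    }
}
```

ArrayList.Synchronized(...) returns a wrapper that locks around each individual call, but a compound step such as Add followed by indexing with the returned position, as in the snippet above, still needs an explicit lock: another thread could remove an item in between and shift the index.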

Background TCP Client Reclaiming Thread

In a typical TCP service environment, clients making new connections mix with clients dropping their connections at the same time, and dropped connections should properly release the resources they hold. Without reclaiming, the server would soon be overloaded with doomed client connections that hold precious resources without releasing them back to the system. The following code shows the reclaiming thread in my application:

    ThreadReclaim = new Thread(new ThreadStart(Reclaim));
    ThreadReclaim.Start();

    private static void Reclaim() {
        while (ContinueReclaim) {
            lock (ClientSockets.SyncRoot) {
                // Walk backwards so a removal does not shift entries not yet visited.
                for (int x = ClientSockets.Count - 1; x >= 0; x--) {
                    Object Client = ClientSockets[x];
                    if (!((ClientHandler) Client).Alive) {
                        ClientSockets.Remove(Client);
                        Console.WriteLine("A client left");
                    }
                }
            }
            Thread.Sleep(200);   // sweep about five times per second
        }
    }

Because the reclaiming thread competes with the main thread for access to the client connection collection, synchronized access is needed while checking for dead connections and removing them.

Some readers may ask why I did not use features like callbacks or delegates to let client connection instances unregister themselves from the collection; I will explain this later.
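
The reclaim loop can be exercised on its own. In this sketch, FakeConnection is a hypothetical stand-in for ClientHandler that exposes only an Alive flag, and the hypothetical Reclaimer class wraps the same backwards-walking loop shown above; it uses RemoveAt(x) instead of Remove(Client), which avoids a second search of the list.

```csharp
using System;
using System.Collections;
using System.Threading;

// Hypothetical stand-in for ClientHandler: only the Alive flag matters here.
class FakeConnection
{
    public volatile bool Alive = true;
}

// Sketch of a background reclaim loop: every 200 ms it locks the collection
// and removes connections that are no longer alive.
class Reclaimer
{
    public readonly ArrayList Connections = new ArrayList();
    private volatile bool continueReclaim = true;
    private readonly Thread thread;

    public Reclaimer()
    {
        thread = new Thread(Reclaim);
        thread.IsBackground = true;
        thread.Start();
    }

    private void Reclaim()
    {
        while (continueReclaim)
        {
            lock (Connections.SyncRoot)
            {
                for (int x = Connections.Count - 1; x >= 0; x--)
                {
                    if (!((FakeConnection)Connections[x]).Alive)
                        Connections.RemoveAt(x);
                }
            }
            Thread.Sleep(200);
        }
    }

    public int Count
    {
        get { lock (Connections.SyncRoot) return Connections.Count; }
    }

    // Signal the loop to finish, then wait for it to exit.
    public void StopReclaiming()
    {
        continueReclaim = false;
        thread.Join();
    }
}
```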

Clean Shutdown

Before stopping the main server, it is also very important to close all client connections properly:

    ContinueReclaim = false;
    ThreadReclaim.Join();

    foreach (Object Client in ClientSockets) {
        ((ClientHandler) Client).Stop();
    }

First, the resource-reclaiming thread is ended; the global variable ContinueReclaim controls it (it should be declared volatile so that the reclaiming thread reliably sees the change). The main thread then waits with Join() until the reclaiming thread has really stopped before going on to the next step. Finally, a loop stops each client connection listed in ClientSockets; since at this point only the main thread is accessing the collection, no thread-synchronization code is needed.
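
A minimal sketch of the same shutdown order, with hypothetical Worker and Server classes in place of ClientHandler and the main server. It assumes, as the article does, that the accept loop has already stopped, so once Join() returns no other thread touches the list.

```csharp
using System;
using System.Collections;
using System.Threading;

// Hypothetical worker standing in for ClientHandler: a thread that runs until Stop().
class Worker
{
    private readonly ManualResetEvent stopRequested = new ManualResetEvent(false);
    private readonly Thread thread;

    public Worker()
    {
        // Simulates a busy connection: the thread blocks until Stop() is called.
        thread = new Thread(() => { stopRequested.WaitOne(); });
        thread.IsBackground = true;
        thread.Start();
    }

    public bool Alive { get { return thread.IsAlive; } }

    public void Stop()
    {
        stopRequested.Set();
        thread.Join();
    }
}

// Hypothetical server: a reclaim thread plus a list of workers.
class Server
{
    public readonly ArrayList Workers = new ArrayList();
    private volatile bool continueReclaim = true;
    private readonly Thread reclaimThread;

    public Server()
    {
        reclaimThread = new Thread(() =>
        {
            while (continueReclaim)
            {
                lock (Workers.SyncRoot)
                {
                    for (int x = Workers.Count - 1; x >= 0; x--)
                        if (!((Worker)Workers[x]).Alive) Workers.RemoveAt(x);
                }
                Thread.Sleep(200);
            }
        });
        reclaimThread.Start();
    }

    public void Shutdown()
    {
        continueReclaim = false;
        reclaimThread.Join();          // the reclaim loop has really ended
        foreach (Worker w in Workers)  // only this thread touches the list now
            w.Stop();
    }
}
```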

Here I would like to explain why I do not use a callback or delegate for the reclaiming task. If client connections removed themselves from ClientSockets, the main thread would have to hold the collection exclusively while it iterates over it to drop the client connections. That produces a deadlock: a client connection trying to unregister itself through a callback or delegate waits for the ClientSockets lock, while the main thread, holding that lock, waits for the same client connection to stop! Of course, some may say that using a timeout when the client connection class tries to access the ClientSockets collection is an option. I agree it could solve the problem, but as I always prefer a cleaner shutdown, using a dedicated thread for the resource-reclaiming task is a better idea.
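
The deadlock described above can be reproduced in a few lines. In this hypothetical sketch, the main thread holds the collection's lock while it joins a handler thread, and the handler's "unregister" step tries to take the same lock. With a plain lock the two threads would wait for each other forever; the sketch instead uses Monitor.TryEnter with a 500 ms timeout, the workaround mentioned above, so the handler gives up and the demo terminates.

```csharp
using System;
using System.Collections;
using System.Threading;

// Hypothetical demo of self-unregistration colliding with shutdown.
static class DeadlockDemo
{
    public static bool UnregisterSucceeded()
    {
        var clients = new ArrayList();
        object me = new object();
        clients.Add(me);
        bool removed = false;

        var handler = new Thread(() =>
        {
            // The "callback" run as the handler finishes: try to remove itself.
            if (Monitor.TryEnter(clients.SyncRoot, TimeSpan.FromMilliseconds(500)))
            {
                try { clients.Remove(me); removed = true; }
                finally { Monitor.Exit(clients.SyncRoot); }
            }
        });

        lock (clients.SyncRoot)   // main thread holds the collection exclusively...
        {
            handler.Start();
            handler.Join();       // ...while waiting for the handler to stop
        }
        return removed;
    }
}
```

UnregisterSucceeded() returns false: the timeout avoids the hang, but the connection is left behind in the collection, which is the less clean shutdown the dedicated reclaiming thread avoids.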

Thread Pooling

Things seem fine when there are not too many clients connecting at the same time. But the number of connections can grow to a level where too many threads are created, which severely impacts system performance! In such a case, thread pooling can give us a helping hand by keeping the number of threads running at the same time at a reasonable level for our system resources. The .NET class ThreadPool regulates when threads are scheduled to serve tasks on its work-item queue and limits the maximum number of threads created. These features come at a cost: you lose some control over the threads. For example, you cannot suspend or stop a thread preemptively, because no thread handle is available when work is queued by calling the static method:

    public static bool QueueUserWorkItem(WaitCallback callBack);

of class ThreadPool. I have created another example, TcpServer2.cs, in which all client connections are scheduled to run in the thread pool, with several amendments. First, I do not use a collection object to hold the client connections. Second, there is no background thread to reclaim doomed client connections. Finally, a special class is used to synchronize the client connections when the main thread reaches the point where it instructs all of them to end.
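
The sketch below illustrates the TcpServer2.cs idea without sockets, under stated assumptions: each "client" is a work item queued with ThreadPool.QueueUserWorkItem that keeps its pool thread until told to stop, and a shared state object lets the main thread end them all and wait for the last one. The field names ContinueProcess, NumberOfClients and Ev are borrowed from the TcpServer2b.cs snippet in the next section; the classes themselves are hypothetical.

```csharp
using System;
using System.Threading;

// Hypothetical shared state, modeled on the article's SharedStateObj fields.
class SharedState
{
    public volatile bool ContinueProcess = true;
    public int NumberOfClients;
    public readonly ManualResetEvent Ev = new ManualResetEvent(false);
}

static class PoolDemo
{
    public static bool RunAndStop(int clients)
    {
        var state = new SharedState();
        for (int i = 0; i < clients; i++)
        {
            Interlocked.Increment(ref state.NumberOfClients);
            ThreadPool.QueueUserWorkItem(obj =>
            {
                var s = (SharedState)obj;
                while (s.ContinueProcess)
                    Thread.Sleep(10);   // stand-in for reading from a socket
                // The last client to leave signals the main thread.
                if (Interlocked.Decrement(ref s.NumberOfClients) == 0)
                    s.Ev.Set();
            }, state);
        }
        Thread.Sleep(100);
        state.ContinueProcess = false;  // instruct all clients to end
        return state.Ev.WaitOne(TimeSpan.FromSeconds(10));
    }
}
```

Because every item holds its pool thread in a loop, items queued beyond the pool's current thread count have to wait for a free thread, which is the scalability problem addressed next.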

Reschedule Task Item in Thread Pool

Because the client-handling task scheduled in the thread pool in the previous example tends to hold its precious pool thread forever, it restricts the scalability of the system: a large number of task items cannot get a chance to run, because the thread pool has a maximum limit on the number of threads it creates. Even if the pool imposed no limit, too many running threads would still cause excessive CPU context switching and could easily bring the system down! To increase scalability, instead of holding the thread in a loop, we can schedule the task again whenever one processing step has finished. That is what I do in example TcpServer2b.cs: the client-handling thread function returns after each logical processing step and reschedules itself in the thread pool, as the following code block shows:

    if (SharedStateObj.ContinueProcess && !bQuit)
        ThreadPool.QueueUserWorkItem(new WaitCallback(this.Process), SharedStateObj);
    else {
        networkStream.Close();
        ClientSocket.Close();
        Interlocked.Decrement(ref SharedStateObj.NumberOfClients);
        Console.WriteLine("A client left, number of connections is {0}",
            SharedStateObj.NumberOfClients);
    }

    if (!SharedStateObj.ContinueProcess && SharedStateObj.NumberOfClients == 0)
        SharedStateObj.Ev.Set();

No loop is involved: as the task reschedules itself and relinquishes the thread it held, other tasks get a chance to run in the thread pool! This is actually similar to what the asynchronous versions of the socket functions provide: a task waiting and processing on a thread-pool thread has to reschedule itself at the end of each callback function if it wants to continue processing.
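
The rescheduling pattern can be sketched without sockets as well. Here a hypothetical SteppingClient performs one step per call to Process and then queues itself again with ThreadPool.QueueUserWorkItem, so no pool thread is held between steps; the last client to finish signals a ManualResetEvent. The class names and step counts are illustrative assumptions.

```csharp
using System;
using System.Threading;

// Hypothetical shared counters for the demo.
class Counter
{
    public int TotalSteps;
    public int ActiveClients;
    public readonly ManualResetEvent AllDone = new ManualResetEvent(false);
}

class SteppingClient
{
    private readonly int stepsToRun;
    private readonly Counter counter;
    private int stepsDone;

    public SteppingClient(int stepsToRun, Counter counter)
    {
        this.stepsToRun = stepsToRun;
        this.counter = counter;
    }

    // WaitCallback signature: one logical processing step per call.
    public void Process(object state)
    {
        stepsDone++;
        Interlocked.Increment(ref counter.TotalSteps);
        if (stepsDone < stepsToRun)
            ThreadPool.QueueUserWorkItem(new WaitCallback(Process)); // give the thread back, run again later
        else if (Interlocked.Decrement(ref counter.ActiveClients) == 0)
            counter.AllDone.Set();
    }
}

static class RescheduleDemo
{
    public static int Run(int clients, int steps)
    {
        var counter = new Counter { ActiveClients = clients };
        for (int i = 0; i < clients; i++)
        {
            var client = new SteppingClient(steps, counter);
            ThreadPool.QueueUserWorkItem(new WaitCallback(client.Process));
        }
        counter.AllDone.WaitOne(TimeSpan.FromSeconds(10));
        return Volatile.Read(ref counter.TotalSteps);
    }
}
```

Since a client requeues itself only after finishing its current step, two steps of the same client never run at the same time, so stepsDone needs no lock.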

Use Queue with Multiple Threads

Using a queue with multiple threads to handle large numbers of client requests is similar to the asynchronous versions of the socket functions, which use a thread pool with a work-item queue to handle each task. In example TcpServer3.cs, I have created a queue class, ClientConnectionPool, that wraps a Queue object:

    class ClientConnectionPool {
        private Queue SyncdQ = Queue.Synchronized(new Queue());
        // ... (members used by ClientService are not shown)
    }

Its main purpose is to provide a thread-safe queue class for the multi-threaded task handler ClientService, part of whose source is shown below:

    class ClientService {
        const int NUM_OF_THREAD = 10;
        private ClientConnectionPool ConnectionPool;
        private volatile bool ContinueProcess = false;   // read by every worker thread
        private Thread[] ThreadTask = new Thread[NUM_OF_THREAD];

        public ClientService(ClientConnectionPool ConnectionPool) {
            this.ConnectionPool = ConnectionPool;
        }

        public void Start() {
            ContinueProcess = true;
            for (int i = 0; i < ThreadTask.Length; i++) {
                ThreadTask[i] = new Thread(new ThreadStart(this.Process));
                ThreadTask[i].Start();
            }
        }

        private void Process() {
            while (ContinueProcess) {
                ClientHandler client = null;
                lock (ConnectionPool.SyncRoot) {
                    if (ConnectionPool.Count > 0)
                        client = ConnectionPool.Dequeue();   // take the oldest client (FIFO)
                }
                if (client != null) {
                    client.Process();                        // run one processing step
                    if (client.Alive)
                        ConnectionPool.Enqueue(client);      // back to the end of the queue
                }
                Thread.Sleep(100);
            }
        }
    }

Using the Dequeue and Enqueue functions, it is easy to hand out tasks in first-in, first-out (FIFO) order. Done this way, we get the benefit of good scalability as well as control over the client connections.
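
The article shows only the wrapped Queue inside ClientConnectionPool, so the Enqueue, Dequeue, Count and SyncRoot members below are an assumption, chosen to match how ClientService uses them. FakeClient is a hypothetical stand-in for ClientHandler whose Process() performs one step. Unlike ClientService, whose workers loop until ContinueProcess is cleared, the worker threads in this demo exit once the queue is empty so that the run terminates.

```csharp
using System;
using System.Collections;
using System.Threading;

// Hypothetical client: each Process() call is one step; Alive turns false when done.
class FakeClient
{
    private int remaining;
    public FakeClient(int steps) { remaining = steps; }
    public void Process() { remaining--; }
    public bool Alive { get { return remaining > 0; } }
}

// Assumed members of ClientConnectionPool, wrapping a synchronized Queue.
class ClientConnectionPool
{
    private Queue SyncdQ = Queue.Synchronized(new Queue());
    public void Enqueue(FakeClient client) { SyncdQ.Enqueue(client); }
    public FakeClient Dequeue() { return (FakeClient)SyncdQ.Dequeue(); }
    public int Count { get { return SyncdQ.Count; } }
    public object SyncRoot { get { return SyncdQ.SyncRoot; } }
}

static class QueueDemo
{
    public static int Run(int clients, int steps, int workers)
    {
        var pool = new ClientConnectionPool();
        for (int i = 0; i < clients; i++) pool.Enqueue(new FakeClient(steps));
        int processed = 0;
        var threads = new Thread[workers];
        for (int t = 0; t < workers; t++)
        {
            threads[t] = new Thread(() =>
            {
                while (true)
                {
                    FakeClient client = null;
                    lock (pool.SyncRoot)
                    {
                        if (pool.Count > 0) client = pool.Dequeue();
                    }
                    if (client == null) break;             // queue drained: demo worker exits
                    client.Process();
                    Interlocked.Increment(ref processed);
                    if (client.Alive) pool.Enqueue(client); // back of the line (FIFO)
                }
            });
            threads[t].Start();
        }
        foreach (var th in threads) th.Join();
        return processed;
    }
}
```

A worker that re-enqueues a client always loops back to the queue, so a client is never stranded even if other workers have already exited.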

I will not provide another asynchronous server socket example, because the .NET SDK already has a very nice one; anyone interested should see the Asynchronous Server Socket Example.

Asynchronous server sockets are certainly excellent for most requirements: they are easy to implement and perform well, and I suggest readers have a look at that example.

Conclusion

The .NET Framework provides nice features that simplify creating a multi-threaded TCP server process, once a very difficult task for many programmers. I have introduced three methods of creating a multi-threaded TCP server process. The first gives greater control over each thread, but it may hurt system performance once a large number of threads have been created. The second performs better, but you have less control over each thread created. The last example gives you the benefit of both scalability and control, and is the recommended solution. Of course, you can use the asynchronous socket functions to get similar capabilities, while my example TcpServer2b.cs, a synchronous version with thread pooling, is an alternative solution to the same task. There are many valuable alternatives, and much depends on your requirements. The options are there, so choosing the one best suited to your application is the most important thing to consider! The features can be explored even more widely, and I suggest that readers look at the .NET documentation to find out more.