Table of Content

- Introduction

- Background

- TSql New Features And Enhancements

- Math Function (Enhanced Feature)

- Log Function

- Logical Functions(New Feature)

- Choose Function

- IIF Function

- String Functions(New Feature)

- Concat Function

- Format Function

- Conversion Functions(New Feature)

- Try_Convert Function

- Try_Parse Function

- Parse Function

- Date and Time Functions(New Feature)

- EOMonth Function

- DateFromParts Function

- TimeFromParts Function

- DateTimeFromParts Function

- DateTime2FromParts Function

- SmallDateTimeFromParts Function

- DateTimeOffSetFromParts Function

- Over clause enhancement (Enhanced Feature)

- Rows and Range

- Between..And clause

- Unbounded Preceding

- Unbounded Following

- Current Row

- Analytic Functions(New Feature)

- Lead

- Lag

- First_Value

- Last_Value

- Percent_Rank

- Cume_Dist

- Percentile_Disc

- Percentile_Cont

- Conclusion

One of the hottest and awesome developments by Microsoft in the technology field was come into picture on 8th November, 2010 when they released the Community Technology Preview 1 (CTP 1) version of Sql Server 2011(Code name Denali). The CTP1 is available both in 32-bit and 64-bit versions. As expected, Denali has brought some new features for Sql lovers may that be developers, Administrators or Business Intelligence (BI) professionals. Part I describes about the features and enhancements in SSMS. Part II focuses on the new development and enhanced features from T-Sql perspective. Part III looks into the enhancements made from SSIS angle while Part 4 talks about Contained Database.

But that's not the end of Denali.It is an ongoing development and after the big success of CTP1 , it is now CTP 3 which was launched on 12th July 2011.As expected, Denali is spreading itself in various wings may it be enhancements from TSql perspective, a new look and feel-Juneau, a new report tool using the Project Cresent or enhancing the retrival of datawarehousing process by ColumnStore indexes etc. Denali has given a new dimension to the SQL Server database and this series will explore the new and enhanced functionalities that CTP 3 has offered us from TSql perspective.

In the last few years, Microsoft has brought many technologies under the developers’ hood. A drastic change has been made in the Sql Server jargon with the advent of Sql Server 2005(code name Yukon) and in the subsequent releases like Sql Server 2008(code name Katmai) and Sql 11(code name Denali), the same pace has been kept with introduction to new features and enhancements as well as improvements. In this article we will explore some of the new features that Denali CTP 3 has already offer us from TSql perspective.Earlier we have seen the enhancements made in Denali CTP1 on the same topic. The subsequent articles will focus on the enhancements made in the other areas.

You can read my other articles on Denali as given under

- An Introduction to Sql 11 (Code Name Denali) –Part I (SSMS Features in CTP 1)

- An Introduction to Sql 11 (Code Name Denali) –Part II (T-Sql Features in CTP 1)

- An Introduction to Sql 11 (Code Name Denali) –Part III (SSIS Features in CTP 1)

- An Introduction to Sql 11 (Code Name Denali) -Part IV (Contained Database in CTP 1)

- An Introduction to Sql 11 (Code Name Denali) –Part V (SSIS Features in CTP 3)

I.Math Function

A little enhancement has been done in the Mathematical Log function.

a.Enhanced Log Function

Well we have this function for a long time. But the log function has been overloaded this time with an additional base argument.

Syntax : Log (Float Expression [, base])

Example

;With CTE AS

(

Select Base = 2

Union All

Select Base+1 from CTE

Where Base < 10

)

Select Base, Result = LOG (10, Base) from CTE

II.Logical Function

Logical functions are those functions that determines the result based on some boolean condition.Denali CTP 3 has offered us two such functions which we will discuss now

a.Choose Function

Purpose: Given a list of values and a position, this function will return the value at the indicated position.

Syntax : Choose ([Position], [Value1],[Value2],…,[ValueN])

Where,

Position => The position number of the value to return. Position number starts from 1

Value1..ValueN => List of values.

Example 1: Correct Position Number

Select Choose (1,'Simple Choose Demo', 'This is an example') As [Choose Demo]

Explanation

In this example, we have specified the position as 1 and hence out of the two values, the first appears as the result.

Example 2: Position Number < 1

Select Choose (0,'Simple Choose Demo', 'This is an example') As [Choose Demo]

--OR

Select Choose (-1,'Simple Choose Demo', 'This is an example') As [Choose Demo]

Explanation

If the position is less than 1 then the result will be null.

Example 3: Position Number > Number of values

Select Choose (3,'Simple Choose Demo', 'This is an example') As [Choose Demo]

Explanation

If the position is more than the number of values then the result will be null.

Example 4: Fractional Position Number

It even accepts fractional numbers. E.g.

Select Choose (2.1,'Simple Choose Demo', 'This is an example') As [Choose Demo]

--OR

Select Choose (2.99,'Simple Choose Demo', 'This is an example') As [Choose Demo]

--OR

Select Choose (.5/.25,'Simple Choose Demo', 'This is an example') As [Choose Demo]

Explanation

In this case, it gets rounded off to the whole number.

Example 5: Choose with Expressions

Suppose we have a table as

Declare @t table(Position int)

Insert into @t values(1),(2),(3),(4)

Now if we write the below query

Select Choose ((Select Top 1 Position from @t),'Apple','Banana') As [Choose Demo]

We will get an error. However, we can use it in the following way

Declare @FirstPosition int = (Select Top 1 Position from @t)

Select Choose (@FirstPosition,'Apple','Banana') As [Choose Demo]

Example 6: A more feasible example

Suppose we are preparing a quiz game where every question has four options(Kindly ignore the designing of the table.Focus on the usage of Choose function). Now if we need to find out which one the user has answered, we can use this function to get the answer like the below

Declare @tblQuiz table

(

[QuestionID] int identity

,[UserName] Varchar(100)

,[Question] Varchar(100)

,[AnswerID] int

,[Option1] varchar(50)

,[Option2] varchar(50)

,[Option3] varchar(50)

,[Option4] varchar(50)

)

Insert into @tblQuiz Values

('Deepak','Who was the Indian Skipper when India bagged the Second World Cup Cricket Tournament?',2,'K.Dev','M.S.Dhoni','M.Singh','S.Gavaskar')

,('Deepak','Which nation got the Cricket World Cup 2011?',4,'Srilanka','England','Bangladesh','India')

,('Deepak','Who was the Man of the Tournament in Cricket World Cup 2011?',4,'R.Singh','S.Tendulkar','Y.Singh','Z.Khan')

,('Deepak','Between which two nation the Cricket World Cup 2011 final match was played?',1,'India and Australia','India and Srilanka','WestIndies and Pakistan','Srilanka and South Africa')

Select * from @tblQuiz

Select

[UserName]

,[Question]

,[Answer Given] = Choose([AnswerID],[Option1],[Option2],[Option3],[Option4])

from @tblQuiz

So depending on the [AnswerID] column, we can easily find out the answer given by the user.

b.IIF Function

We are already familiar with IF..Else statement which executes after evaluating a Boolean value. Now from Sql 11 Denali CTP 3, we have the function in place- IIF ().

Purpose:Returns a value as per the specified Boolean condition.

Syntax : IIF([Condition],[True Value],[ False Value])

Where,

Condition => Is any valid Boolean expression.

True Value => The value returned if the Condition evaluates to True.

False Value => The value returned if the Condition evaluates to False.

N.B.~ It can be treated as a shorthand version of Case statement.

Let us see this into action

Example 1: Simple IIF()

Select Result = IIF(1=1,'OK','Not OK')

The same can be done by using case statement as

Select Result = Case When 1 = 1 Then 'OK' Else 'Not OK' End

OR using the IF..Else block as

If(1=1) Print 'OK'

Else Print 'Not OK'

Example 2: Nested IIF() . Find the bigger of two numbers

Declare @Num1 As Int = 1

Declare @Num2 As Int = 2

Select Result = IIF(@Num1 > @Num2, 'First Number is bigger',

IIF(@Num2 > @Num1,'Second number is bigger','Numbers are equal'))

However, an equivalent case statement will be

Declare @Num1 As Int = 1

Declare @Num2 As Int = 2

Select Result = Case When @Num1 > @Num2 Then 'First Number is bigger'

When @Num2 > @Num1 Then 'Second number is bigger'

Else 'Numbers are equal' End

Example 3: Specifying more than one condition in IIF() . Find the biggest of three numbers

We can always specify any conditional or logical operator for specifying multiple conditions inside the boolean expression as illustrated below

Declare @Num1 As Int = 10

Declare @Num2 As Int = 50

Declare @Num3 As Int = 30

Select Result = IIF(@Num1 > @Num2 And @Num1 > @Num3, 'First Number is biggest',

IIF(@Num2 > @Num1 And @Num2 > @Num3,'Second number is biggest',

'Third Number is biggest'))

The equivalent case statement will be

Declare @Num1 As Int = 10

Declare @Num2 As Int = 50

Declare @Num3 As Int = 30

Select Result = Case When @Num1 > @Num2 And @Num1 > @Num3 Then 'First Number is bigger'

When @Num2 > @Num1 And @Num2 > @Num3 Then 'Second number is bigger'

Else 'Third Number is biggest' End

Example 4: An erroneous IIF()

Select IIF('a' > 'b', Null,Null)

Msg 8133, Level16, State 1, Line 2

At least one of the result expressions in a case specification must be an expression other than the NULL constant.

However, the below works

Declare @null sql_variant

Select IIF('a' = 'b', @null, @null)

Will result to Null

It is because of the fact that both the options of the Boolean expression results into the @null variable which has null value.

Case 5: IIF() And Choose() function

Let us once again go back to the Quiz table which we created in the last example of Choose function.

Along with that table let us add an Answer table as under

Declare @tblAnswer table([QuestionID] int identity, [Correct Answer] varchar(50))

Insert into @tblAnswer Values('M.S.Dhoni'),('India'),('Y.Singh'),('India and Australia')

Now fire the below query

;With Cte As(

Select

[UserName]

,[Question]

,[QuestionID]

,[Answer Given] = Choose([AnswerID],[Option1],[Option2],[Option3],[Option4])

from @tblQuiz

)

Select c.*,Result = IIF(c.[Answer Given] = a.[Correct Answer]

,'Correct Answer','Wrong Answer')

from Cte c Join @tblAnswer a on a.QuestionID = c.QuestionID

N.B.~So far I hope that we have seen some examples on the newly introduce logical (IIF and Choose) functions.Now let us see how they are being treated by the Sql Engine

For this, we will create a test environment as under

IF EXISTS (SELECT * FROM sys.objects WHERE name = N'tbl_Test' AND type = 'U')

DROP TABLE tbl_Test

GO

SET ANSI_NULLS ON

GO

--Create the table

CREATE TABLE [dbo].[tbl_Test](

[PlayerId] [INT] NOT NULL,

[PlayerName] [VARCHAR](50) NOT NULL,

[BelongsTo] [SMALLINT] NOT NULL,

[PhoneNumber] [INT] NULL,

CONSTRAINT [PK_tbl_Test] PRIMARY KEY CLUSTERED

(

[PlayerId] ASC

)WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY]

) ON [PRIMARY]

GO

--Populate the Cte with some records

;With Cte(Id,Name,BelongsTo,PhoneNo) As (

Select

Id = 1

,Name='Name' + CAST( 1 As Varchar(50))

,BelongsTo = 1

, PhoneNo=12345678

Union All

Select

Id+1

,Name= 'Name' + CAST( Id+1 As Varchar(50))

,BelongsTo = Case When ID <= 250 Then 1

When ID > 250 And ID <= 500 Then 2

When ID > 500 And ID <= 750 Then 3

Else 4

End

, PhoneNo = Case When ID % 2 = 0 Then Null

Else 12345678

End

From Cte

Where Id <100000

)

--Insert the records into the table

Insert Into dbo.tbl_test

Select * From Cte

Option( Maxrecursion 0)

--Display the records

Select *

From tbl_Test

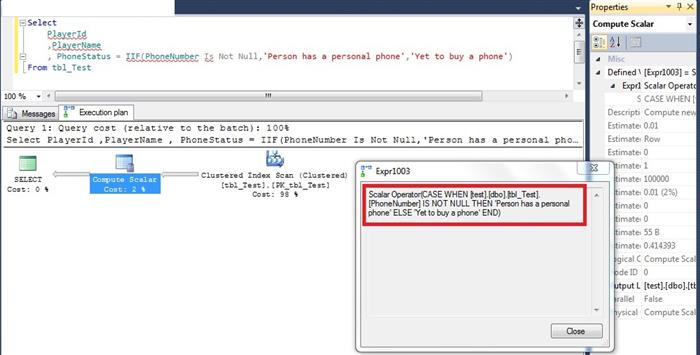

IIF being viewed by SQL Engine

Consider the statement

Select

PlayerId

,PlayerName

, PhoneStatus = IIF(PhoneNumber Is Not Null,'Person has a personal phone','Yet to buy a phone')

From tbl_Test

The execution plan being generated is as under

As can be figured out that, the IIF gets expanded into the corresponding Case statement and that's how the operation happens

An equivalent case statement will give the same execution plan

Select

PlayerId

,PlayerName

, PhoneStatus = Case When PhoneNumber Is Not Null Then 'Person has a personal phone'

Else 'Yet to buy a phone'

End

From tbl_Test

We can even do a simple preformance testing of these two functions which yields

| Function | CPU Time | Elapsed Time |

| IIF | 31 ms | 994 ms |

| Case | 46 ms | 986 ms |

As can be figure out that there is not much performance gain using the new IIF function.Please note that the values may differ in other system but the overall gain will not be significant.

Choose being viewed by SQL Engine

Consider the below statement

Select

PlayerId

,PlayerName

,BelongsTo

,PlayerType = CHOOSE(BelongsTo,'Indian Player','USA Player','Japanese Player','Australian Player')

From tbl_Test

The execution plan being generated is as under

As can be figured out that like IIF, Choose gets expanded into the corresponding Case statement and that's how the operation happens

An equivalent case statement will give the same execution plan

Select

PlayerId

,PlayerName

,BelongsTo

,PlayerType = Case When BelongsTo = 1 Then 'Indian Player'

When BelongsTo = 2 Then 'USA Player'

When BelongsTo = 3 Then 'Japanese Player'

When BelongsTo = 4 Then 'Australian Player'

End

From tbl_Test

We can even do a simple preformance testing of these two functions which yields

| Function | CPU Time | Elapsed Time |

| Choose | 141 ms | 1011 ms |

| Case | 78 ms | 1048 ms |

As can be figure out that atleast for this experiment, Case yields better CPU time (time use to execute the query) but the Elapsed time (time taken by the query to run) is almost identical.Please note that the values may differ in other system but the overall gain will not be significant.

III.String Function

Two new string functions has been introduce in this release. They are Concat and Format about which we will look under

a.Concat Function

As the name suggests, it concatenates strings. In earlier versions of Sql Server we have the option of performing concatenation using the '+' symbol. But the overhead was that if the types that are participating in the concatenation are not of varchar type, then we had to do explicit conversion else it was resulting in error. However, the new Concat() function takes care of this explicit conversion.

Purpose: Concatenates variable number of string arguments and returns a single string.

Syntax: Concat( Value1,Value2,...,ValueN)

Example1: Simple Concat

Select Concat('Hello',' ' ,'Concat') As [Concat]

Example 2: Concat string with integer

Select Concat('String',10) As [Concat]

Example 3: Concat with multiple datatypes

Select Concat('Sql',11, Null, 'Code Name', 'Denali', 'CTP' ,3) As [MultipleField Concat]

The null is converted to empty string. Where as the traditional '+' symbol would have return null

Select 'Sql' + CAST(11 as varchar(10)) + Null + 'Code Name Denali CTP 3' As [MultipleField Concat]

We would have to use either ISNull Or Coalesce to get the desired result as

Select 'Sql' + CAST(11 as varchar(10)) + Coalesce(Null,'') + 'Code Name Denali CTP 3' As [MultipleField Concat]

--OR

Select 'Sql' + CAST(11 as varchar(10)) + IsNull(Null,'') + 'Code Name Denali CTP 3' As [MultipleField Concat]

Example 4: Concat with table columns

Declare @t table(FirstName varchar(10),LastNAme varchar(10))

Insert into @t select 'Niladri','Biswas' Union All Select 'Deepak','Goyal'

Select FullName = Concat(Concat(FirstName,' ' ),LastName) from @t

In this case we have seen how Concat can be use in conjunction with table columns as well as it's nesting.

Example 5: Concat with one argument

Select Concat('Single argument') As [Concat]

Error

Msg 189, Level 15,State 1,Line 1 The concat function requires 2 to 254 arguments

b.Format Function

This function is there in dot net for a long time and now has been added in Sql Server. It formats the value as indicated.

Syntax: Format (expression, format [, culture])

Where,

Expression = > the expression to format

Format => A valid dot net framework format pattern.

Culture => It is optional and is use for specifying the culture

Example 1: Date Formatting

Declare @t table(Culture varchar(10))

Insert into @t values('en-US'),('fr')

Declare @dt Date = '06/15/2011'

Select

Culture

,Res1 = FORMAT(@dt,'d',Culture)

,Res2 = FORMAT(@dt,'yyyy/mm/dd',Culture)

From @t

Example 2: Currency Formatting

Declare @t table(Culture varchar(10))

Insert into @t values('en-US'),('ru'),('no')

Declare @currency int = 200

Select

Culture

,FormattedCurrency = FORMAT(@currency,'c',Culture)

From @t

Even we can specify the number of characters that will appear after the decimal point. Let's have a look

Declare @t table(Culture varchar(10))

Insert into @t values('en-US'),('ru'),('no')

Declare @currency money = 10.25

Select

Culture

,Res1 = FORMAT(@currency,'C1',Culture)

,Res2 = FORMAT(@currency,'C2',Culture)

,Res3 = FORMAT(@currency,'C3',Culture)

,Res4 = FORMAT(@currency,'C4',Culture)

From @t

As we have noticed that, this function happens to be a good addition into the box and it supports advance formatting with specified culture which was a difficult choice with Cast and Convert.

IV.Conversion Function

Denali CTP 3's conversion functions helps us to perform check on the datatype and if the conversion can be done then it helps to do so.Basically these functions are CLR functions and are already present in dotnet framework for a long time.However, we can get the same flavour now in TSql environemnt too.

a.Try_Convert Function

In earlier version of Sql Server till Sql 11 (code name: Denali) CTP 2, there was no option to check if a conversion from one data type to another was possible or not.Consider the below example where we are trying to convert a varchar to int

Select CONVERT(int,'Just a string')

OR

Select CAST('Just a string' as int)

Upon executing the statement, we will get the error

Msg 245, Level 16, State 1, Line 1

Conversion failed when converting the varchar value 'Just a string' to data type int.

Would not it be nice if we can know beforehand whether the conversion is possible and if so then we should go ahead for that? If this is the need, then Try_Convert is the solution.

Purpose:It basically checks whether conversion from one type to other is possible or not. Transforms the source data into the target type if the conversion is possible else null.

Syntax: Try_Convert (data type, expression [ , style ])

Where,

Datatype=> The target datatype

Expression => The value to be cast

Style => It is optional integer expression. It indicates how the function will translate the expression.

Example 1: Simple Try_Convert

Select

Try_Convert(int, 'Just a string') As Result1

,Try_Convert(int, '100') As Result2

,Try_Convert(int,null) As Result3

,Try_Convert(Date,'18500412') As Result4

,Try_Convert(DateTime,'18500412') As Result5

,Try_Convert(Numeric(10,4),'18500412') As Result6

Example 2: Try_Convert with style

Consider the below

Select CONVERT(varchar(10),getdate(),101) as Result

Can also be achieved as

Select Try_Convert(varchar(10),getdate(),101) as Result

As can be observed that it is an enhanced version of the Convert as well as Cast function. This is a CLR function that has been added into the Sql Server environment.

b.Try_Parse Function

We have encountered this in dot net from framework 2.0. Now we have this in Denali from CTP 3.

Purpose: It basically identifies if the type specified is applicable for parsing or not and returns the appropriate status.

Syntax: TRY_PARSE ( string_value AS data_type [ USING culture ] )

Where,

String_value => the value specified

Data_type = > the target type into which the string_value will be transformed

Culture => If any culture needs to be provided for the string_value to be formatted. This is optional and if not provided then the current session language will be use.

Example 1: Simple Try_Parse

Declare @t table(Data varchar(10))

Insert into @t values('12'),('a'),('.5'),('2011-22-07')

--Select * from @t

Select

Data

,TryParseDateTime = Try_Parse(Data AS Date)

,TryParseDecimal = Try_Parse(Data AS Decimal)

,TryParseNumeric = Try_Parse(Data AS Numeric(20,10))

,TryParseWithConcat = Try_Parse(Concat(Data,'7') AS int using 'en-US')

from @t

Example 2: Try Parse inside Nested IIF

Declare @str as Varchar(2) = '1'

Select

IIF(

Try_Parse(@str as int) Is Not Null

,IIF(

Try_Parse(@str as decimal) Is Not Null

,'OK'

,'Conversion failed in second level'

)

,'Conversion failed in first level'

) Result

N.B.~ This function is not fully stable

Select Try_Parse('0' AS Numeric(10,10)) As [Try_Parse_Example]

Will throw error

Msg 6521, Level 16, State 1, Line 1

Msg 6521, Level 16, State 1, Line 1

A .NET Framework error occurred during statement execution:

System.Data.SqlTypes.SqlTypeException: Invalid numeric precision/scale.

System.Data.SqlTypes.SqlTypeException:

at System.Data.SqlTypes.SqlDecimal.CheckValidPrecScale(Byte bPrec, Byte bScale)

at System.Data.SqlTypes.SqlDecimal..ctor(Byte bPrecision, Byte bScale, Boolean fPositive, Int32 data1, Int32 data2, Int32 data3, Int32 data4)

at System.Data.SqlServer.Internal.Number.NumberBufferToSqlDecimal(NumberBuffer number, SqlDecimal& value)

at System.Data.SqlServer.Internal.Number.ParseSqlDecimal(String value, NumberStyles options, NumberFormatInfo numfmt)

at System.Data.SqlServer.Internal.SqlParseIntrinsicImpl.<>c__DisplayClass20.<parsedecimal>b__1f(CXVariantBase* pxvar, String strParse, CultureInfo ci).

But we can always create our own CLR Function in such situations. Here is an attempt to make a basic TRY_PARSE.

Step 1:

Let us create a new project inside Visual Studio. File->New -> Project -> Database -> SQL Server Project.

Next, right click on the project and select Add -> User-Defined Function.

From the Add New Item template that appears, let us choose User Define Function

Let us click on Add button and write the below code

using System;

using System.Collections.Generic;

using System.Data.SqlTypes;

using Microsoft.SqlServer.Server;

public partial class UserDefinedFunctions

{

[Microsoft.SqlServer.Server.SqlFunction(DataAccess = DataAccessKind.Read)]

public static SqlBoolean TryParseFn(string value, string dataType)

{

bool status = TryParse(value, dataType);

return new SqlBoolean(status);

}

private static bool TryParse(string val, string key)

{

bool flag = true;

Dictionary<string, System.Type> dict = new Dictionary<string, System.Type>();

dict.Add("int", typeof(Int32));

dict.Add("bigint", typeof(Int64));

dict.Add("datetime", typeof(DateTime));

dict.Add("numeric", typeof(Decimal));

try

{

switch (dict[key].FullName)

{

case "System.Int32":Int32.Parse(val);break;

case "System.Int64":Int64.Parse(val); break;

case "System.DateTime":DateTime.Parse(val); break;

case "System.Decimal":DateTime.Parse(val);break;

}

}

catch

{

flag = !flag;

}

return flag;

}

}

And build the solution. We will find a dll by the name SqlClassLibrary.dll has been created.

Now open the SSMS and choose the database say ExperimentDB

First thing is that, we need to Enable CLR. Henceforth, let us execute the below query

EXEC sp_configure 'show advanced options' , '1'; -- Enable Advanced option

go

reconfigure;

EXEC sp_configure 'clr enabled' , '1' -- Enables CLR option in SQL SERVER

go

reconfigure;

go

EXEC sp_configure 'show advanced options' , '0'; -- Disable Advanced option

go

The next step is to register the assembly in our database. This can be done

a) By issuing a tsql script

b) Without issuing tsql script

(A)Create Assembly by issuing tsql script

--Drop the function if it exists

IF EXISTS(SELECT * FROM sys.objects WHERE type = 'FS' AND schema_id = SCHEMA_ID('dbo')AND name = 'Try_Parse')

DROP FUNCTION dbo.Try_Parse

GO

--Drop the assembly if it exists

IF EXISTS (SELECT * FROM sys.assemblies a WHERE a.name = N'SqlClassLibrary' and is_user_defined = 1)

DROP ASSEMBLY [SqlClassLibrary]

GO

--Create the assembly

CREATE ASSEMBLY SqlClassLibrary

FROM 'D:\CustomTryParse\CLRTryParse\CLRTryParse\bin\Debug\SqlClassLibrary.dll'

WITH PERMISSION_SET = SAFE

GO

CREATE FUNCTION Try_Parse(@value AS NVARCHAR(255), @dataType AS NVARCHAR(255)) RETURNS Bit

AS EXTERNAL NAME SqlClassLibrary.UserDefinedFunctions.TryParseFn;

GO

(B) Create Assembly Without issuing tsql script

Expand the Programmability node->Assemblies->Right Click->New Assemblies.

From the New Assembly window, set the Permission to Safe and specify the assembly path and then click OK.

The assembly has been added to the Assembly folder. This can also be verified by issuing the tsql command

SELECT * FROM sys.assemblies where is_user_defined = 1

Then issue the below command

USE [ExperimentDB]

GO

IF EXISTS (SELECT * FROM sys.objects WHERE object_id = OBJECT_ID(N'[dbo].[Try_Parse]') AND type in (N'FN', N'IF', N'TF', N'FS', N'FT'))

DROP FUNCTION [dbo].[Try_Parse]

GO

USE [ExperimentDB]

GO

CREATE FUNCTION [dbo].[Try_Parse](@value [nvarchar](255), @dataType [nvarchar](255))

RETURNS [bit] WITH EXECUTE AS CALLER

AS

EXTERNAL NAME [SqlClassLibrary].[UserDefinedFunctions].[TryParseFn]

GO

So, our work is done. Now we can invoke our Try_Parse as

Select Result = Case When dbo.Try_Parse('12/12/2011','datetime') = 1

Then 'True'

Else Null

End

It was just a small attempt to demonstrate our own Try_Parse.It can be enhanced as per the developer's wish if they need to do so.

N.B.~There is some problem with Try_Parse when dealing with DateTime field

Look at the below example

Declare @presentDate datetime = GetDate()

Select IIF(Try_Parse(

@presentDate As Datetime) IS not null

,CONVERT(varchar(10),EOMonth(@presentDate),110)

,'Sorry conversion not possible'

) Result

Msg 8116, Level 16, State 1, Line 3

Argument data type datetime is invalid for argument 1 of parse function.

If we change

Declare @presentDate datetime = GetDate()

to

Declare @presentDate varchar(10) = GetDate()

and run the same query, then we will receive the below error

Msg 6521, Level 16, State 1, Line 3

A .NET Framework error occurred during statement execution:

System.Data.SqlTypes.SqlTypeException: SqlDateTime overflow. Must be between 1/1/1753 12:00:00 AM and 12/31/9999 11:59:59 PM.

System.Data.SqlTypes.SqlTypeException:

at System.Data.SqlTypes.SqlDateTime.FromTimeSpan(TimeSpan value)

at System.Data.SqlTypes.SqlDateTime.FromDateTime(DateTime value)

at System.Data.SqlServer.Internal.CXVariantBase.DateTimeToSSDate(DateTime dt)

at System.Data.SqlServer.Internal.SqlParseIntrinsicImpl.<parsessdate>b__12(CXVariantBase* pxvar, String strParse, CultureInfo ci) .

However, the below is the workaround for it

Declare @presentDate DateTime = GetDate()

Select IIF(Try_Parse(Convert(varchar(10),@presentDate,101) As Datetime) Is Not null

,CONVERT(varchar(10),EOMonth(@presentDate),110)

,'Sorry conversion not possible'

) Result

c.Parse Function

Well dot net developers don't need any new introduction to it but for those who are encountering this function for the first time the answer is that it accepts a string datatype and converts to the requested datatype.

Purpose: It accepts a string datatype and converts to the requested datatype

Syntax: PARSE ( string_value AS data_type [ USING culture ] )

Where,

String_value => the value specified

Data_type = > the target type into which the string_value will be transformed

Culture => If any culture needs to be provided for the string_value to be formatted. This is optional and if not provided then the current session language will be use.

Example 1: Parse from Varchar to DateTime

Select ParseExample1 =Parse(CONVERT(varchar(10),getdate(),22) as datetime using 'en-US')

Example 2: Parse from Varchar to Interger

Select ParseExample2 =Parse('1234' as int)

Example 3: Parse and Try_Parse

Declare @value as varchar(10) = '$100.00'

Declare @culture as varchar(10) = 'en-US'

Select Result = IIF(

Try_Parse(@value AS money using @culture) Is Not Null

,Parse(@value AS money using @culture)

,'Conversion not possible'

)

As we can make out that, we can use Try_Parse function to check whether the conversion is possible or not and if so then we can go ahead.

V.Date and Time Function

Denali CTP 3 has brought some new DateTime functions which are as under

a.EOMonth Function

It expands to End Of Month. That means it helps to find out the last day of the month. Prior to the introduction of this function, we used to do the same by using something similar to the following

IF EXISTS (SELECT * FROM sys.objects WHERE object_id = OBJECT_ID(N'[dbo].[EOMonth]') AND type in (N'FN', N'IF', N'TF', N'FS', N'FT'))

DROP FUNCTION [dbo].[EOMonth]

GO

SET ANSI_NULLS ON

GO

SET QUOTED_IDENTIFIER ON

GO

CREATE FUNCTION [dbo].[EOMonth](@startDate Datetime,@offset int)

RETURNS DATETIME

AS

BEGIN

Declare @EOM datetime

Select @EOM = DATEADD(mm,@offset,@startDate - DAY(@startDate)+1)-1

Return @EOM

END

GO

Declare @presentDate DateTime = GetDate()

Select

[PresentDate] = CONVERT(varchar(10),GetDate(),110)

,[EOPrevMonth] = CONVERT(varchar(10),dbo.EOMonth(@presentDate,0),110)

,[EOCurrMonth] = CONVERT(varchar(10),dbo.EOMonth(@presentDate,1),110)

,[EONextMonth] = CONVERT(varchar(10),dbo.EOMonth(@presentDate,2),110)

But with the advent of this function, it will be helpful for tsql developers to do the same operation easily.

Purpose:This function helps to get the last day of any month given a date. It also has an optional offset that helps to calculate the end of month which is N months later or before to the specified input date.

Syntax: EOMonth (StartDate [, Offset ] )

Where,

StartDate => Input date

Offset => An integer which indicates the end of month which is N months later or before to the specified StartDate.

Example1: Simple example

Declare @presentDate DateTime = GetDate()

Select

[PresentDate] = CONVERT(varchar(10),GetDate(),110)

,[EOPrevMonth] = CONVERT(varchar(10),EOMonth(@presentDate,-1),110)

,[EOCurrMonth] = CONVERT(varchar(10),EOMonth(@presentDate),110)

,[EONextMonth] = CONVERT(varchar(10),EOMonth(@presentDate,1),110)

Example 2: EOMonth with Try_Parse

We may need to check before using the EOMonth function if the conversion is at all possible or not. For that we can use Try_Parse in conjunction with EOMonth.

Declare @presentDate DateTime = GetDate()

Select IIF(Try_Parse(Convert(varchar(10),@presentDate,101) As Datetime) Is Not null

,CONVERT(varchar(10),EOMonth(@presentDate),110)

,'Sorry conversion not possible'

) Result

b.DateFromParts Function

Well,the function states that build a date given the various parts like year, month and day. We have already seen this function in dotnet. Out of the various overloaded constructor of DateTime struct, we have

public DateTime(int year, int month, int day);

So if we write

Console.WriteLine(new DateTime(2011, 7, 23));

The output will be: 7/23/2011 12:00:00 AM

Now we have the same opportunity to do from Sql Server with the help of DateFromParts.

Purpose:Returns a date given its parts like year, month, day.

Syntax: DateFromParts(year,month,day)

Where,

Year => the year value

Month => the month value

Day => Day value.

Example 1: Simple example

Select DateFromParts(2011,7,23) [Result]

Example 2: Specifying all nulls

Select DateFromParts(null,null,null) [Result]

Example 3: Omitting mandatory fields will raise exception

Select DateFromParts(2011,23) [Result]

Msg 174, Level 15, State1, Line1

The datefromparts function requires 3 argument(s)

Example 4: Inserting value 2 for all the fields

Select DateFromParts(2,2,2) [Result]

c.TimeFromParts Function

As DateFromParts function returns Date so like TimeFromParts function returns time.

Syntax: TimeFromParts(hour,minute,seconds,fractions,precision)

Example 1: Simple example

Select TimeFromParts(23,22,15,147,3) [Result]

Example 2: Specifying all nulls

Select TimeFromParts (null,null,null,null,null) [Result]

Msg 10760, Level 16, State 1, Line 1

Scale argument is not valid. Valid expressions for data type time scale argument are integer constants and integer constant expressions.

Example 3: Omitting mandatory fields will raise exception

Select TimeFromParts (23,22,15) [Result]

Msg 174, Level 15, State1, Line1

The timefromparts function requires 5 argument(s)

d. DateTimeFromParts Function

It is almost similar to the DateFromParts function with the change that it returns a Date time component. We have already seen this function in dotnet. Out of the various overloaded constructor of DateTime struct, we have

public DateTime(int year, int month, int day, int hour, int minute, int second, int millisecond);

So if we write

Console.WriteLine(new DateTime(2011, 7, 23, 22, 15, 49, 147).ToString("M/dd/yyyy h:mm:ss.fff tt"));

The output will be: 7/23/2011 10:15:49.147 PM

Syntax: DateTimeFromParts(year,month,day,hour,minute,seconds,milliseconds)

Example 1: Simple example

Select [DateTimeFromPartsExample] = DateTimeFromParts(2011, 7, 23, 22, 15, 49, 147)

Example 2: Specifying all nulls

Select DateTimeFromParts (null,null,null,null,null,null,null) [Result]

Example 3: Omitting mandatory fields will raise exception

Select DateTimeFromParts(2011, 7, 23) [Result]

Msg 174, Level 15, State1, Line1

The datetimefromparts function requires 7 argument(s)

N.B. ~ The valid date range should be between 1/1/1753 to 12/31/9999.

e. DateTime2FromParts Function

This function is almost identical to DateTimeFromParts function except that it returns a datetime2 value. In addition to this, it also needs a precision value.

Syntax: DateTime2FromParts (year,month,day,hour,minute,seconds,fractions,precision)

Example 1: Simple example

Select DateTime2FromParts(2011, 7, 23,22,15,49,147,3) [Result]

Example 2: Specifying all nulls

Select DateTime2FromParts (null,null,null,null,null,null,null,null) [Result]

Msg 10760, Level 16, State 1, Line 1

Scale argument is not valid. Valid expressions for data type datetime2 scale argument are integer constants and integer constant expressions.

Example 3: Omitting mandatory fields will raise exception

Select DateTime2FromParts(2011, 7, 23,22,15,49,147) [Result]

Msg 174, Level 15, State1, Line1

The datetime2fromparts function requires 8 argument(s)

f.SmallDateTimeFromParts Function

Similar to DateTimeFromParts, we have SmallDateTimeFromParts which returns a smalldatetime value for the given date and time.

Syntax: SmallDateTimeFromParts(year,month,day,hour,minute)

Example 1: Simple example

Select SmallDateTimeFromParts(2011, 7, 23,22,15) [Result]

Example 2: Specifying all nulls

Select SmallDateTimeFromParts (null,null,null,null,null) [Result]

Example 3: Omitting mandatory fields will raise exception

Select SmallDateTimeFromParts(2011, 7, 23) [Result]

Msg 174, Level 15, State1, Line1

The smalldatetimefromparts function requires 5 argument(s)

g. DateTimeOffSetFromParts Function

This function returns a datetimeoffset value.

Syntax: DateTimeOffsetFromParts (year, month, day, hour, minute, seconds, fractions, houroffset, minuteoffset, precision)

Example 1: Simple example

Select DateTimeOffsetFromParts (2011,7,24,0,15,44,120,0,0,3) [Result]

Example 2: Specifying all nulls

Select

DateTimeOffsetFromParts (null,null,null,null,null,null,null,null,null,null) [Result]

Msg 10760, Level 16, State 1, Line 1

Scale argument is not valid. Valid expressions for data type datetime2 scale argument are integer constants and integer constant expressions.

Example 3: Omitting mandatory fields will raise exception

Select DateTimeOffsetFromParts (2011,7,24,0,15,44,120) [Result]

Msg 174, Level 15, State1, Line1

The datetimeoffsetfromparts function requires 10 argument(s)

VI.Over clause enhancement (Enhanced Feature)

In Denali CTP 3, the Over clause has been enhanced a lot. Let us look into them as below

a.Rows and Range

These keywords specifies that the function will be applied for every row a window use for calculating the function result. The Rows specifies the window in physical units while Range does it in logical offset. These keywords must be use in conjunction with order by clause.

b.Between..And clause

Defines the start (expression before And clause) and end point (expression after And clause) of the window.

c.Unbounded Preceding

It indicates that the window starts at the first row of the partition.

d.Unbounded Following

It indicates that the window ends at the last row of the partition.

e.Current Row

Depending on the Row or Range specified, it indicates that the window initiates at the start row or value.

N.B.~If we ignore the windowing clause completely, then the default Range Between Unbounded Preceding and Current Row is taken into consideration.

VII.Analytic Functions

We will use the below script for the demonstration of the Analytic Functions unless otherwise anything else is specified.We will have two tables a)MatchTable and b)PlayerTable.

For the demonstration of Lead,Lag,First_Value,Last Value,Percentile_Cont and Percentile_Disc we will use the MatchTable while for the rest the PlayerTable will come into picture

Script for MatchTable generation

IF EXISTS (SELECT * FROM sys.objects WHERE name = N'MatchTable' AND type = 'U')

DROP TABLE MatchTable

GO

SET ANSI_NULLS ON

GO

--Create the table

CREATE TABLE [dbo].[MatchTable](

[MatchID] [int] IDENTITY(1,1) NOT NULL,

[MatchGroup] [varchar](8) NULL,

[MatchBetween] [varchar](50) NULL,

[ScheduleDate] [date] NULL

) ON [PRIMARY]

GO

--Insert records

Insert Into MatchTable Values

('Group-A','India VS Australia','08/14/2011')

,('Group-A','India VS Pakistan','08/15/2011')

,('Group-A','India VS Newzealand','08/16/2011')

,('Group-A','Australia VS Pakistan','08/17/2011')

,('Group-A','Australia VS Newzealand','08/18/2011')

,('Group-A','Newzealand VS Pakistan','08/19/2011')

,('Group-B','USA VS WestIndies','08/20/2011')

,('Group-B','USA VS Ireland','08/21/2011')

,('Group-B','USA VS Bangaladesh','08/22/2011')

,('Group-B','WestIndies VS Ireland','08/23/2011')

,('Group-B','WestIndies VS Bangaladesh','08/24/2011')

,('Group-B','Ireland VS Bangaladesh','08/25/2011')

-- Project the records

Select * From MatchTable

Script for PlayerTable generation

IF EXISTS (SELECT * FROM sys.objects WHERE name = N'PlayerTable' AND type = 'U')

DROP TABLE PlayerTable

GO

SET ANSI_NULLS ON

GO

--Create the table

CREATE TABLE [dbo].[PlayerTable](

PlayerID INT IDENTITY(1001,1),

PlayerName VARCHAR(15),

BelongsTo VARCHAR(15),

MatchPlayed INT,

RunsMade INT,

WicketsTaken INT,

FeePerMatch NUMERIC(16,2)

) ON [PRIMARY]

GO

--Insert records

INSERT INTO PlayerTable(PlayerName, BelongsTo, MatchPlayed,RunsMade,WicketsTaken,FeePerMatch) VALUES('A. Won','India',10,440,10, 1000)

INSERT INTO PlayerTable(PlayerName, BelongsTo, MatchPlayed,RunsMade,WicketsTaken,FeePerMatch) VALUES('A. Cricket','India',10,50,17, 4000)

INSERT INTO PlayerTable(PlayerName, BelongsTo, MatchPlayed,RunsMade,WicketsTaken,FeePerMatch) VALUES('B. Dhanman','India',10,650,0,3600)

INSERT INTO PlayerTable(PlayerName, BelongsTo, MatchPlayed,RunsMade,WicketsTaken,FeePerMatch) VALUES('C. Barsat','India',10,950,0,5000)

INSERT INTO PlayerTable(PlayerName, BelongsTo, MatchPlayed,RunsMade,WicketsTaken,FeePerMatch) VALUES('A. Mirza','India',2,3,38, 3600)

INSERT INTO PlayerTable(PlayerName, BelongsTo, MatchPlayed,RunsMade,WicketsTaken,FeePerMatch) VALUES('M. Karol','US',15,44,4, 2000)

INSERT INTO PlayerTable(PlayerName, BelongsTo, MatchPlayed,RunsMade,WicketsTaken,FeePerMatch) VALUES('Z. Hamsa','US',3,580,0, 400)

INSERT INTO PlayerTable(PlayerName, BelongsTo, MatchPlayed,RunsMade,WicketsTaken,FeePerMatch) VALUES('K. Loly','US',6,500,12,8000)

INSERT INTO PlayerTable(PlayerName, BelongsTo, MatchPlayed,RunsMade,WicketsTaken,FeePerMatch) VALUES('S. Summer','US',87,50,8,1230)

INSERT INTO PlayerTable(PlayerName, BelongsTo, MatchPlayed,RunsMade,WicketsTaken,FeePerMatch) VALUES('J.June','US',12,510,9, 4988)

INSERT INTO PlayerTable(PlayerName, BelongsTo, MatchPlayed,RunsMade,WicketsTaken,FeePerMatch) VALUES('A.Namaki','Australia',1,4,180, 9999)

INSERT INTO PlayerTable(PlayerName, BelongsTo, MatchPlayed,RunsMade,WicketsTaken,FeePerMatch) VALUES('Z. Samaki','Australia',2,6,147, 8888)

INSERT INTO PlayerTable(PlayerName, BelongsTo, MatchPlayed,RunsMade,WicketsTaken,FeePerMatch) VALUES('MS. Kaki','Australia',40,66,0,1234)

INSERT INTO PlayerTable(PlayerName, BelongsTo, MatchPlayed,RunsMade,WicketsTaken,FeePerMatch) VALUES('S. Boon','Australia',170,888,10,890)

INSERT INTO PlayerTable(PlayerName, BelongsTo, MatchPlayed,RunsMade,WicketsTaken,FeePerMatch) VALUES('DC. Shane','Australia',28,39,338, 4444)

INSERT INTO PlayerTable(PlayerName, BelongsTo, MatchPlayed,RunsMade,WicketsTaken,FeePerMatch) VALUES('S. Noami','Singapore',165,484,45, 5678)

INSERT INTO PlayerTable(PlayerName, BelongsTo, MatchPlayed,RunsMade,WicketsTaken,FeePerMatch) VALUES('Z. Biswas','Singapore',73,51,50, 2222)

INSERT INTO PlayerTable(PlayerName, BelongsTo, MatchPlayed,RunsMade,WicketsTaken,FeePerMatch) VALUES('K. Dolly','Singapore',65,59,1,9999)

INSERT INTO PlayerTable(PlayerName, BelongsTo, MatchPlayed,RunsMade,WicketsTaken,FeePerMatch) VALUES('S. Winter','Singapore',7,50,8,128)

INSERT INTO PlayerTable(PlayerName, BelongsTo, MatchPlayed,RunsMade,WicketsTaken,FeePerMatch) VALUES('J.August','Singapore',9,99,98, 890)

-- Project the records

Select * from PlayerTable

a.Lead Function

Purpose:This function returns result set starting from the next row in the table.

Syntax:Lead(expression [,offset [,default] ] ) over( [ Partition_By_clause] order by clause)

Where,

Expression => A table column or built-in function but not analytical functions

Offset => It is optional and represents the physical offset from the current row in the table. If not specified, the default value is 1 and cannot accept negative numbers.

Default = > It is again optional. If not specified, then whenever the offset value goes out of the table bounds, then default null is returned.

Partition_By_clause = > Partition the query result set. It is again optional

Order By Clause = > Indicates how the data is ordered within the partition.

Example 1: Find next row result/Skip first row

Suppose we want to see the next match date and the next match between the teams. We can do this easily using the Lead function as under

Select

MatchID

,MatchGroup

,MatchBetween

,ScheduleDate

,NextMatchBetween = Lead (MatchBetween) Over(Order by ScheduleDate)

,NextMatchDate = Lead (ScheduleDate) Over(Order by ScheduleDate)

From MatchTable

--OR

Select

MatchID

,MatchGroup

,MatchBetween

,ScheduleDate

,NextMatchBetween = Lead (MatchBetween,1) Over(Order by ScheduleDate)

,NextMatchDate = Lead (ScheduleDate,1) Over(Order by ScheduleDate)

From MatchTable

Now let us try to understand the behavior of the Lead function. We have not specified any offset in this query and hence the default value of 1 has been taken into granted. Henceforth, it started from the second row . However, if we explicitly specify the offset value as 1, it will return the same result. This function takes into account TopRowNumber and BottomRowNumber and skips the number of rows from the top specified as bolstered in the below figure

Example 2:Find next to next row result/Skip first 2 rows

If we need to skip 2 rows, we need to specify 2 in the offset as under

Select

MatchID

,MatchGroup

,MatchBetween

,ScheduleDate

,NextMatchBetween = Lead (MatchBetween,2) Over(Order by ScheduleDate)

,NextMatchDate = Lead (ScheduleDate,2) Over(Order by ScheduleDate)

From MatchTable

We have skipped two rows as revealed from the above figure

Example 3:Specifying 0 or space in offset

If we give 0 in offset, indicates that we are not skipping any row

Select

MatchID

,MatchGroup

,MatchBetween

,ScheduleDate

,NextMatchBetween = Lead (MatchBetween,0) Over(Order by ScheduleDate)

,NextMatchDate = Lead (ScheduleDate,0) Over(Order by ScheduleDate)

From MatchTable

Same thing can be achieved if we specify space as under

Select

MatchID

,MatchGroup

,MatchBetween

,ScheduleDate

,NextMatchBetween = Lead (MatchBetween,'') Over(Order by ScheduleDate)

,NextMatchDate = Lead (ScheduleDate,'') Over(Order by ScheduleDate)

From MatchTable

Example 4:Specifying negative offset

Lead function cannot accept negative offset

Select

MatchID

,MatchGroup

,MatchBetween

,ScheduleDate

,NextMatchBetween = Lead (MatchBetween,-1) Over(Order by ScheduleDate)

,NextMatchDate = Lead (ScheduleDate,-1) Over(Order by ScheduleDate)

From MatchTable

Example 5:Specifying null value in offset yields null

Giving null value in offset yields null

Select

MatchID

,MatchGroup

,MatchBetween

,ScheduleDate

,NextMatchBetween = Lead (MatchBetween,null) Over(Order by ScheduleDate)

,NextMatchDate = Lead (ScheduleDate,null) Over(Order by ScheduleDate)

From MatchTable

Example 6:Working Lead function with fractional parts

We can even use fractions in the offset clause of Lead function.Let's see the below

Select

MatchID

,MatchGroup

,MatchBetween

,ScheduleDate

,NextMatchBetween = Lead (MatchBetween,12/10) Over(Order by ScheduleDate)

,NextMatchDate = Lead (ScheduleDate,12/10) Over(Order by ScheduleDate)

From MatchTable

Example 7: Lead demonstration with Calendar table

It can be use in conjunction with Common Table Expression as

;With DtCalender As(

Select Dt = Convert(Date,'8/1/2011')

Union All

Select DATEADD(dd,1,Dt)

From DtCalender

Where Dt < Convert(Date,'8/31/2011')

)

Select

MatchID

,MatchBetween

,Lead(c.Dt,1) Over(Order by c.Dt) As [Lead Example]

From MatchTable m

join DtCalender c on c.Dt = m.ScheduleDate

option (maxrecursion 0)

The query is simple to understand. We have created a calendar table on the fly for one month (August, 2011) and that we are using inside the lead function only for those dates that matches with the match date column.

Example 8:Working with Scalar Expression or Function

We can even use a saclar expression or function in the expression field as

Select

MatchID

,MatchGroup

,MatchBetween

,ScheduleDate

,NextMatchBetween = Lead ((Select Top 1 MatchGroup from MatchTable),1) Over(Order by ScheduleDate)

,NextMatchDate = Lead (ScheduleDate,1) Over(Order by ScheduleDate)

From MatchTable

Example 9:Using the Default Value of Lead function

This is also an optional argument.If not specified, then the implicit NULL value will be taken into account as the default which we have already seen in the previous examples. However, we can specify our own default value.

Select

MatchID

,MatchGroup

,MatchBetween

,ScheduleDate

,NextMatchBetween = Lead (MatchBetween,1,'No more Match') Over(Order by ScheduleDate)

,NextMatchDate = Lead (ScheduleDate,1) Over(Order by ScheduleDate)

From MatchTable

The default date has been marked for easy understanding. If we read the graphical execution plan we can make out that,it is calling the Convert_Implicit method for the conversion of the default value specified

However, the default value’s datatype should be such that it can be converted by the engine as per the datatype of the scalar expression.Henceforth, if any value specified that the Convert_Implicit method fail to convert, then it will result into exception

Select

MatchID

,MatchGroup

,MatchBetween

,ScheduleDate

,NextMatchBetween = Lead (MatchBetween,1,'No more Match') Over(Order by ScheduleDate)

,NextMatchDate = Lead (ScheduleDate,1,'No more Match') Over(Order by ScheduleDate)

From MatchTable

This statement failed because the default value should of date data type but we are passing varchar type.In order to avoid this,we can use try parse in conjunction with IIF as under

Declare @defaultValue VARCHAR(20) = 'No more Match'

Select

MatchID

,MatchGroup

,MatchBetween

,ScheduleDate

,NextMatchBetween = Lead (MatchBetween,1,@defaultValue) Over(Order by ScheduleDate)

,NextMatchDate = Lead ( ScheduleDate

,1

,IIF(

Try_Parse(@defaultValue as Date) Is Not Null

,@defaultValue

,Null

)

) Over(Order by ScheduleDate)

From MatchTable

Example 10 : Working with Partition by clause

It can work smoothly in conjunction with partition by clause as under

Select

MatchID

,MatchGroup

,MatchBetween

,ScheduleDate

,NextMatchBetween = Lead (MatchBetween,1,'No more Match in this group') Over(Partition By MatchGroup Order by ScheduleDate)

,NextMatchDate = Lead (ScheduleDate,1) Over(Partition By MatchGroup Order by ScheduleDate)

From MatchTable

Example 11 : Lead demonstration with partition by clause to restricted rows

We can even restrict rows as under

Select

MatchID

,MatchGroup

,MatchBetween

,ScheduleDate

,NextMatchBetween = Lead (MatchBetween,1,'No more Match in this group')

Over(Partition By MatchGroup Order by ScheduleDate)

,NextMatchDate = Lead (ScheduleDate,1)

Over(Partition By MatchGroup Order by ScheduleDate)

From MatchTable

Where MatchGroup = 'Group-A'

I believe that we have seen many examples of Lead function and has understand the behaviour of it. So we can proceed to the next function Lag which is the counterpart of it

b.Lag Function

Purpose :This function returns result set starting from the previous row in the table.Syntax :Lag(expression [,offset [,default] ] ) over( [ Partition_By_clause] order by clause)

Where,

Expression => A table column or built-in function but not analytical functions

Offset => It is optional and represents the physical offset from the current row in the table. If not specified, the default value is 1 and cannot accept negative numbers.

Default = > It is again optional. If not specified, then whenever the offset value goes out of the table bounds, then default null is returned.

Partition_By_clause = > Partition the query result set. It is again optional

Order By Clause = > Indicates how the data is ordered within the partition.

N.B.~Lag and Lead are the opposite side of a coin and henceforth whatever example we have seen for Lead will be applicable for Lag. Henceforth, it will not be wise to repeat the same scenarios here.We will see some of the example of Lag though

Example 1:Find the previous match date and the previous match between the teams

Select

MatchID

,MatchGroup

,MatchBetween

,ScheduleDate

,PrevMatchBetween = Lag (MatchBetween) Over(Order by ScheduleDate)

,PrevMatchDate = Lag (ScheduleDate) Over(Order by ScheduleDate)

From MatchTable

--OR

Select

MatchID

,MatchGroup

,MatchBetween

,ScheduleDate

,PrevMatchBetween = Lag (MatchBetween,1) Over(Order by ScheduleDate)

,PrevMatchDate = Lag (ScheduleDate,1) Over(Order by ScheduleDate)

From MatchTable

Now let us try to understand the behavior of the Lag function. We have not specified any offset in this query and hence the default value of 1 has been taken into granted. Henceforth, it ended at N-1 th row from the bottom. However, if we explicitly specify the offset value as 1, it will return the same result.This function takes into account TopRowNumber and BottomRowNumber(like Lead) and skips the number of rows from the bottom as bolstered in the below figure

As specified earlier, whatever example follows for Lead will be applicable for Lag. Henceforth, the scenarios are not repeated here and we are proceeding to the next function.

c.First_Value Function

Purpose:It returns the first value from the order set of values.

Syntax:First_Value(expression) over( [ Partition_By_clause] order by clause [rows_range_clause])

Where,

Expression => A table column or built-in function but not analytical functions.

Rows_range_clause => It helps to further limit the effect of analytical function.

As said, the First_Value function returns the first value from the order set of values. If the first value is null, then the function returns null.Let us see this function into action.

Example 1: Simple First_Value demo for getting the first and the last match played

Suppose we need to find out the first and the last match played. We can achieve it as under

Select

MatchBetween

,ScheduleDate

,First_Value(MatchBetween) Over(Order By ScheduleDate Desc) As [Last Match]

,First_Value(MatchBetween) Over(Order By ScheduleDate ) As [First Match]

From MatchTable

Well, by looking into the result set for this anyone can argue that, it can be achieved by

a)Row_Number approach as

;With Cte AS(

Select

RowID = ROW_NUMBER() Over(Order by (select 1))

,MatchBetween

,ScheduleDate

From MatchTable )

Select

MatchBetween

,ScheduleDate

, [Last Match] = (Select MatchBetween from Cte where RowID = 12)

, [First Match] = (Select MatchBetween from Cte where RowID = 1)

from Cte

N.B.~ I have deliberately used Row_Number() function instead of the [MatchID] column just to get the work done using Row_Number() way.

b)Max/Min approach as

Select

MatchBetween

,ScheduleDate

, [Last Match] = (Select MatchBetween from MatchTable where ScheduleDate = (Select MAX(ScheduleDate) from MatchTable))

, [First Match] = (Select MatchBetween from MatchTable where ScheduleDate = (Select MIN(ScheduleDate) from MatchTable))

from MatchTable

And this argument will be valid. But wait, is it the only purpose of First_Value() function? Let’s see some more example.

Example 2: Simple First_Value demo with Partition By clause

Select

MatchBetween

,ScheduleDate

,MatchGroup

,First_Value(MatchBetween) Over(Partition By MatchGroup Order By ScheduleDate Desc) As LastMatch

,First_Value(MatchBetween) Over(Partition By MatchGroup Order By ScheduleDate) As FirstMatch

From MatchTable

As can be make out that, in this case the function is working based on the partitions made.

Example 3: First_Value with new windowing clause

Select

MatchBetween

,ScheduleDate

,First_Value(MatchBetween) Over(Order By ScheduleDate Range Unbounded Preceding) As Rng_UPrec

,First_Value(MatchBetween) Over(Order By ScheduleDate Rows Unbounded Preceding) As Row_UPrec

,First_Value(MatchBetween) Over(Order By ScheduleDate Range Current Row) As Rng_Curr

,First_Value(MatchBetween) Over(Order By ScheduleDate Rows Current Row) As Row_Curr

,First_Value(MatchBetween) Over(Order By ScheduleDate Range Between Unbounded Preceding And Current Row) As Rng_UPrec_Curr

,First_Value(MatchBetween) Over(Order By ScheduleDate Rows Between Unbounded Preceding And Current Row) As Row_UPrec_Curr

,First_Value(MatchBetween) Over(Order By ScheduleDate Range Between Unbounded Preceding And Unbounded Following) As Rng_UPrec_UFoll

,First_Value(MatchBetween) Over(Order By ScheduleDate Rows Between Unbounded Preceding And Unbounded Following) As Row_UPrec_UFoll

,First_Value(MatchBetween) Over(Order By ScheduleDate Range Between Current Row And Unbounded Following) As Rng_Curr_UFoll

,First_Value(MatchBetween) Over(Order By ScheduleDate Rows Between Current Row And Unbounded Following) As Row_Curr_UFoll

From MatchTable

d.Last_Value Function

Purpose:Returns the last value from the order set of values.

Syntax :Last_Value(expression) over( [ Partition_By_clause] order by clause [rows_range_clause])

Where,

Expression => A table column or built-in function but not analytical functions

Rows_range_clause => It helps to further limit the effect of analytical function.

As said, the Last _Value function returns the last value from the order set of values. If the last value is null, then the function returns null.

Let us see this function into action.

Example 1: Simple Last_Value demo

Suppose we need to find out the last match played in every group. We can achieve it as under

Select

MatchBetween

,MatchGroup

,Last_Value(MatchBetween) Over(Partition By MatchGroup Order By MatchGroup) As [Last Match In Group]

From MatchTable

Example 2: Last_Value with new windowing clause

Select

MatchBetween

,ScheduleDate

,Last_Value(MatchBetween) Over(Order By ScheduleDate Range Unbounded Preceding) As Rng_UPrec

,Last_Value(MatchBetween) Over(Order By ScheduleDate Rows Unbounded Preceding) As Row_UPrec

,Last_Value(MatchBetween) Over(Order By ScheduleDate Range Current Row) As Rng_Curr

,Last_Value(MatchBetween) Over(Order By ScheduleDate Rows Current Row) As Row_Curr

,Last_Value(MatchBetween) Over(Order By ScheduleDate Range Between Unbounded Preceding And Current Row) As Rng_UPrec_Curr

,Last_Value(MatchBetween) Over(Order By ScheduleDate Rows Between Unbounded Preceding And Current Row) As Row_UPrec_Curr

,Last_Value(MatchBetween) Over(Order By ScheduleDate Range Between Unbounded Preceding And Unbounded Following) As Rng_UPrec_UFoll

,Last_Value(MatchBetween) Over(Order By ScheduleDate Rows Between Unbounded Preceding And Unbounded Following) As Row_UPrec_UFoll

,Last_Value(MatchBetween) Over(Order By ScheduleDate Range Between Current Row And Unbounded Following) As Rng_Curr_UFoll

,Last_Value(MatchBetween) Over(Order By ScheduleDate Rows Between Current Row And Unbounded Following) As Row_Curr_UFoll

From MatchTable

e.Percent_Rank Function

Purpose:Within a group of rows, it calculates the relative rank of a row.

Syntax :Percent_Rank () Over ( [partition_by_clause] order_by_clause)

Formula:

Percent_Rank = (R -1)/(N-1)

Where ,

R => The row whose rank needs to be found

N => Total number of rows or records.

e.g. We have a set with 10 elements. We need to find the relative rank of the 7th row. Henceforth, applying the values to the above formula, we get

R = 7 , N = 10

So, Percent_Rank = (7-1)/(10-1) = 0.6666666666666667

The range of values return by this function is between 0 to 1 inclusive. The return data type is always a positive number.

Example

Select

PlayerID

,PlayerName

,BelongsTo

,FeePerMatch

,Percent_Rank() Over(Partition By BelongsTo Order By BelongsTo,FeePerMatch) As PercentRank

From PlayerTable

Now let us understand how the relative rank for the [Fee Per Match] column has been generated.

Take the case of the row where the Player ID is 1015 i.e. 3rd from the row.

There are altogether 5 rows for the [Belongs to] field “Australia”.

So , we have R = 3 and N = 5.

Placing the values in our formula yields

Percent_Rank = (3 -1)/(5-1) = 2/4 = 0.5

f.Cume_Dist Function

Purpose:Within a group of rows, it calculates the cumulative distribution of a value in a group of values.

Syntax :Cume_Dist() Over ([partition_by_clause] order_by_clause)

Formula:

Cumulative_Distribution = (R)/(N)

Where,

R => The row whose rank needs to be found

N => Total number of rows or records.

e.g. We have a set with 10 elements. We need to find the cumulative distribution of the 7th row. Henceforth, applying the values to the above formula, we get

R = 7 , N = 10

So, Cumulative_Distribution = (7)/(10) = 0.7

The range of values return by this function is between 0 to 1 inclusive. The return data type is always a positive number.

Example

Select

PlayerID

,PlayerName

,BelongsTo

,FeePerMatch

,Cume_Dist() Over(Partition By BelongsTo Order By BelongsTo,FeePerMatch) As CumilativeDistribution

From PlayerTable

Now let us understand how the relative rank for the [Fee Per Match] column has been generated.

Take the case of the row where the Player ID is 1015 i.e. 3rd from the row.

There are altogether 5 rows for the [Belongs to] field “Australia”.

So , we have R = 3 and N = 5.

Placing the values in our formula yields

Cumulative_Distribution = (3)/(5) = 0.6

g.Percentile_Cont Function

Purpose:An inverse distribution function which takes a percentile value and a sort specification and returns an interpolated value that would fall into that percentile value with respect to the sort specification.It accepts any numeric datatype or any nonnumeric datatype that can be implicitly converted to a numeric datatype as it's argument and returns the same datatype as the numeric datatype of the argument.

Syntax:Percentile_Cont ( numeric_literal ) within group(Order by sort_expression [asc|desc]) OVER ([partition_by_clause])

Example

SELECT [MatchID]

,[MatchGroup]

,[MatchBetween]

,[ScheduleDate]

,Percentile_Cont =PERCENTILE_Cont(.6) WITHIN GROUP (ORDER BY MatchID)OVER(PARTITION BY [MatchGroup])

FROM MatchTable

h.Percentile_Disc Function

Purpose:An inverse distribution function which takes a percentile value and a sort specification and returns an element from the set.It accepts any numeric datatype or any nonnumeric datatype that can be implicitly converted to a numeric datatype as it's argument and returns the same datatype as the numeric datatype of the argument.

Syntax:Percentile_Disc ( numeric_literal ) within group(Order by sort_expression [asc|desc]) OVER ([partition_by_clause])

Formula:

Percentile_Disc = Cumulative_Distribution * N

Where,

N => Total number of rows or records.

e.g. We have a set with 10 elements. We need to find the Percentile_Disc of the 7th row.Let us first find the Cumulative_distribution as

So, Cumulative_Distribution = (7)/(10) = 0.7

So, Percentile_Disc = Cumulative_Distribution * N = 0.7 * 10 = 7

The range of values return by this function is between 0 to 1 inclusive. The return data type is always a positive number.

Example

SELECT [MatchID]

,[MatchGroup]

,[MatchBetween]

,[ScheduleDate]

,PercentileDisc =PERCENTILE_DISC(.6) WITHIN GROUP (ORDER BY MatchID)OVER(PARTITION BY [MatchGroup])

,Cume_Dist() Over(Partition By [MatchGroup] Order By MatchID) As CumilativeDistribution

,PercentileDiscByFormula = Cume_Dist() Over(Partition By [MatchGroup] Order By MatchID) * (6)

FROM MatchTable

In this article we have seen the new and the enhanced functions from TSql perspective that Denali CTP 3 has offered us.It is really a good list of functions which will help the developeres to write tsql programs easily.In the next article we will explore Column Store Index which is again a new concept.

I hope that this article will help us to know and apply the new TSql featues in a variety of ways.Share your valueable feedback about the same and stay tune for the next one.