Introduction

Ever had problems with slow execution when looping through tables in a dataset?

If so, this little tip might help.

Background

In a project I had to save the data in a DataSet to a tab-separated text file. The dataset consists of several nested tables, where the the two largest is a Group that contains a SubGroup. Both these tables has a Description which is stored in another table for multi-language support.

Not exactly rocket science, I thought, and started to implement. After a few hours of coding and testing with a small number of data to get the text format right, I started to test the function with a real life scenario. This consists of about 3000 groups (MPG) with about 400 sub-groups (MP) each.

I ran the test again and got an unpleasant surprise. The test software totally froze. (no threading in my small test bench). Time to get a coffee and then start to figure out what went wrong. When I came back I saw that the execution had finsished. Hmm, so it was not frozen, it just took a long time.

After I added a timer I realized it took 4 minutes! to save a text file with about 16000 lines. As this functionality is part of a GUI, you can imagine how happy any user would be to have to wait 4 minutes for every time the file is saved.

Analyzing my code

After some analysis I found some stupid coding of my part, e.g. I had put one operation inside a loop that only needed to be done once. I also changed from String to StringBuilder where I had a lot of concatenations. This helped some, I cut the time by 50 seconds.

The real culprit, however, was the LINQ extensions I used to get data from indirectly referenced tables. These extensions are pretty neat as they save some lines of code used for type casting, but appearantly they are not very efficient when used inside a loop.

In my code I called the same function around 15000 times in my code (3000 groups + 3000 groups * 400 sub-groups). The only difference is if the type should be MPG or MP.

string description = "Description is missing.";

DescrRow rowDescription = rowDescriptions.GetDescrRows().

Where(x => x.Type == "MPG" && x.Name == rowMPG.Name).SingleOrDefault();

if (rowDescription != null)

description = rowDescription.Text;

The little snippet above took a very long time to execute, so I had to do something about it. I tested quite a few different variants, but the final verdict was that the old Select method was the fastest in my case.

First I created two temporary tables, one for MPG and one for MP descriptions. This is done outside the loop.

DataTable tableMPGDescriptions = null;

DataTable tableMPDescriptions = null;

if (rowDescriptions != null)

{

IEnumerable<DescrRow> descriptions = null;

descriptions = rowDescriptions.GetDescrRows().Where(x => x.Type == "MPG");

tableMPGDescriptions = descriptions.CopyToDataTable<DescrRow>();

descriptions = rowDescriptions.GetDescrRows().Where(x => x.Type == "MP");

tableMPDescriptions = descriptions.CopyToDataTable<DescrRow>();

}

Inside the loop the code below is used.

description = "Description is missing.";

if (tableMPGDescriptions != null)

{

DataRow[] descriptionRows = null;

descriptionRows = tableMPGDescriptions.Select(String.Format("Name='{0}'", rowMPG.Name));

if (descriptionRows.Length == 1)

description = descriptionRows[0]["Text"].ToString();

}

Final Result

After changing the code at all places where I used LINQ methods inside a loop the time to save the file became less than 4 seconds, which for this application is acceptable.

So from 4 minutes to 4 seconds in around 4 hours of work.

Using the code

As I can't post the code from my project here, I created a small demo project that shows the difference in time between the default method Select() and the extension method Where().

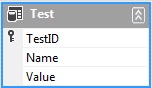

I created a small dataset to simulate my real code.

I filled the table with dummy values

I updated the number of rows in the data table to get more data.

DataSetTest dsTestData = new DataSetTest();

Random randy = new Random();

for (int i = 0; i < 45000; i++)

{

dsTestData.Test.AddTestRow(String.Format("Hello_{0}", i),

(int)(1000 *randy.NextDouble()));

}

and then called this code different number of times.

for (int n = 1; n <= 10000; n *= 10)

{

Console.WriteLine("Function call made {0} times.", n);

Console.WriteLine("");

timee.Restart();

for (int i = 0; i < n; i++)

{

name = String.Format("Hello_{0}", i);

selectRows = (DataSetTest.TestRow[])dsTestData.Test.

Select(String.Format("Name = '{0}'", name));

}

Console.WriteLine("Using DataTable.Select()

: {0,6} ms", timee.ElapsedMilliseconds);

timee.Restart();

for (int i = 0; i < n; i++)

{

name = String.Format("Hello_{0}", i);

linqRows = dsTestData.Test.Where(x => x.Name == name);

linqRow = linqRows.ElementAt(0);

}

Console.WriteLine("Using Where().ElementAt(0) : {0,6} ms", timee.ElapsedMilliseconds);

timee.Restart();

for (int i = 0; i < n; i++)

{

name = String.Format("Hello_{0}", i);

linqRows = dsTestData.Test.Where(x => x.Name == name);

linqRow = linqRows.FirstOrDefault();

}

Console.WriteLine("Using Where().FirstOrDefault() : {0,6} ms", timee.ElapsedMilliseconds);

timee.Restart();

for (int i = 0; i < n; i++)

{

name = String.Format("Hello_{0}", i);

linqRows = dsTestData.Test.Where(x => x.Name == name);

linqRow = linqRows.SingleOrDefault();

}

Console.WriteLine("Using Where().SingleOrDefault()

: {0,6} ms", timee.ElapsedMilliseconds);

}

The table below shows the time elapsed for different values of n.

Time Elapsed (ms) | Method | n = 1 | 100 | 1000 | 10000 | Comment |

| DataTable.Select() | 586 | 8 | 82 | 257 | First call to takes a long time. |

| Where() | 3 | 0 | 2 | 13 | I kept this result for reference. |

| ElementAt(0) | 3 | 0 | 2 | 4913 | Exponentially longer time. |

| FirstOrDefault() | 0 | 1 | 205 | 4887 | Exponentially longer time. |

| SingleOrDefault() | 15 | 1280 | 6944 | 72583 | Slow as a turtle. |

The times differ a bit from execution to execution, but the ratios are usually the same.

Conclusions

My little investigation shows that using the LINQ extension methods ElementAt(), FirstOrDefault() and SingleOrDefault()

really can slow things down if used inside loops and the number of iterations > 1000.

For single calls and a low number of iterations the LINQ methods are fast.

That the Where() by itself is fast is because the execution is delayed until you try to access any element in the list. (Thanks Mike and SA for explaining that to me.)

That SingleOrDefault() takes a long time is because the whole list has to be searched. (Thanks Nicolas for that pointer.)

One thing I still don't get is why the execution time per iteration for ElementAt() and FirstOrDefault() increases with the number of iterations.

Time Elapsed / number of iterations (ms) | Method | 100 | 1000 | 10000 | Comment |

| DataTable.Select() | 0.080 | 0.082 | 0.025 | Getting faster and faster. |

| ElementAt(0) | 0 | 0.163 | 0.491 | Exponentially longer time. |

| FirstOrDefault() | 0.01 | 0.205 | 0.489 | Exponentially longer time. |

| SingleOrDefault() | 12.800 | 6.944 | 7.253 | Execution time decreases. |

Points of Interest

I learned a thing or two about optimization and that new is not always better.

- Use StringBuilder instead of String inside loops if a lot of concatenations are performed.

- Avoid using the LINQ extension methods inside loops.

History

- First release of the tip.

- Source code and Conclusions section updated after comments.