Introduction

Vince Lombardi once said "Practice does not make perfect. Only perfect practice makes perfect." I have worked in few places where the Data Analysts team was newly created, and practices and habits were not perfect for sure. One of the many challenges coming from such newly created teams is that everybody in the team has their own coding philosophy. I put together this best practices article in order to help putting some structure on a newly created data analysts team as well as an established team which might need less anarchy in their processes.

Background

The core function of an SSIS package is to automate data Extract, Transform, and Load. The data extract sources can be diverse when the Data Services Organization is working with multiple clients, therefore proper naming convention as well as package annotations would come in handy in case tasks are transferred from one analyst or BI developer to another one.

In this tip, below are the main topics we would discuss about:

- General Best Practices Topics

- Best practices ideas for new data load implementation

- Best practices ideas for bookings Reconciliation

- Other best practices ideas

- Example of acronyms for package annotations

1. General Best Practices Topics

- The Use of workflows in the ETL process

- Dynamic Package Configuration design helps when migrating an ETL package from development to staging to production:

- Configuration files

- Environment Variables

- SQL Configuration tables

- Parent package variables

- Establish naming convention for Store Procedures, Functions, tables, etc.

- The use of Pipeline performance statistics to track the volume and speed of data as it flows through the data flow

- Establish QA checklist for peer reviews

- ETL audit reporting to troubleshoot and optimize the SSIS Package

- Use of change data capture(CDC)

- Always backup data before updating data in the production server

- Have a historical table for the raw data and data loaded by the ETL package

2. Best Practices Ideas for New ETL Implementation

- Analyze the data: “Investing time in analyzing the requirements and having a mature data model for the target enterprise data warehouse can reduce the ETL challenges to a large extent.” http://searchbusinessintelligence.techtarget.in/tip/Six-ETL-best-practices-followed-by-Shoppers-Stop

- Look for a unique identifier- if not provided by the client, create one for duplicate check

- Each table should have a unique identifier

- Make a clear distinction between exclusion and exception records

- Create a

[dataloadresults] table to check for load data outcomes: customized it as needed - For first few data loads, validate total loaded volume, suggest ideas to the client on how to fix the data at the source if there are issues

- Use stored procedures, and functions in the SSIS package in order to simplify the package portability and business rules editing or adjustments: “If you perform an operation more than two or three times, consider writing a function”1

- Use Sequence Containers in the SSIS Package to group the tasks: “Place logical components together and order them in the way they will need to execute according to database constraints”2

- Use excel plus task so that password protection can be added, and ultimately load results report can be broken down in tabs

- Try to put no more than 6 tasks in a Sequence Container

3. Best Practices Ideas for Bookings Reconciliation

- Mass Update the Bookings to close, create quote records

- Before mass updating the bookings- validate the data with clients team

- While mass updating the bookings: keep historical table of updated records

- Have an exception report and summary report and each step

- New load for the unmatched Bookings: close, create quote records?

While loading the unmatched new bookings to close: double check that in the meantime those opportunities have not been created- check for duplicates.

Validate with sales team to make sure the numbers are unusually inconsitent–Usually the numbers should be consistent or realistic.

4. Other Best Practices Ideas

- Create a SSIS package to check for bad data in the database

- Create a template SSIS package for junior analysts to train on

- Use annotations wherever possible. At the very least, each data-flow should contain an annotation.

- Always use template packages to standardize on logging, event handling and configuration.

- Keep number of connections string to a minimum

- Use views for stage data to load in production so that the load of new accounts be reflected

- Depending on client data: may have one single table for all entities load

Or separate stage tables for each entities for reusability:

- If possible, use SFTP for sharing data with client

- Only select columns that you need in the pipeline to reduce buffer size and reduce On Warning events at execution time

- Don't pull all configurations into a single XML configuration file. Instead, put each configuration into a separate XML configuration file. This is a more modular approach and means that configuration files can be reused by different packages more easily.

- If you need a dynamic SQL statement in an OLE DB Source component, set AccessMode="SQL Command from variable" and build the SQL statement in a variable that has

EvaluateAsExpression=TRUE. (http://blogs.conchango.com/jamiethomson/archive/2005/12/09/2480.aspx)

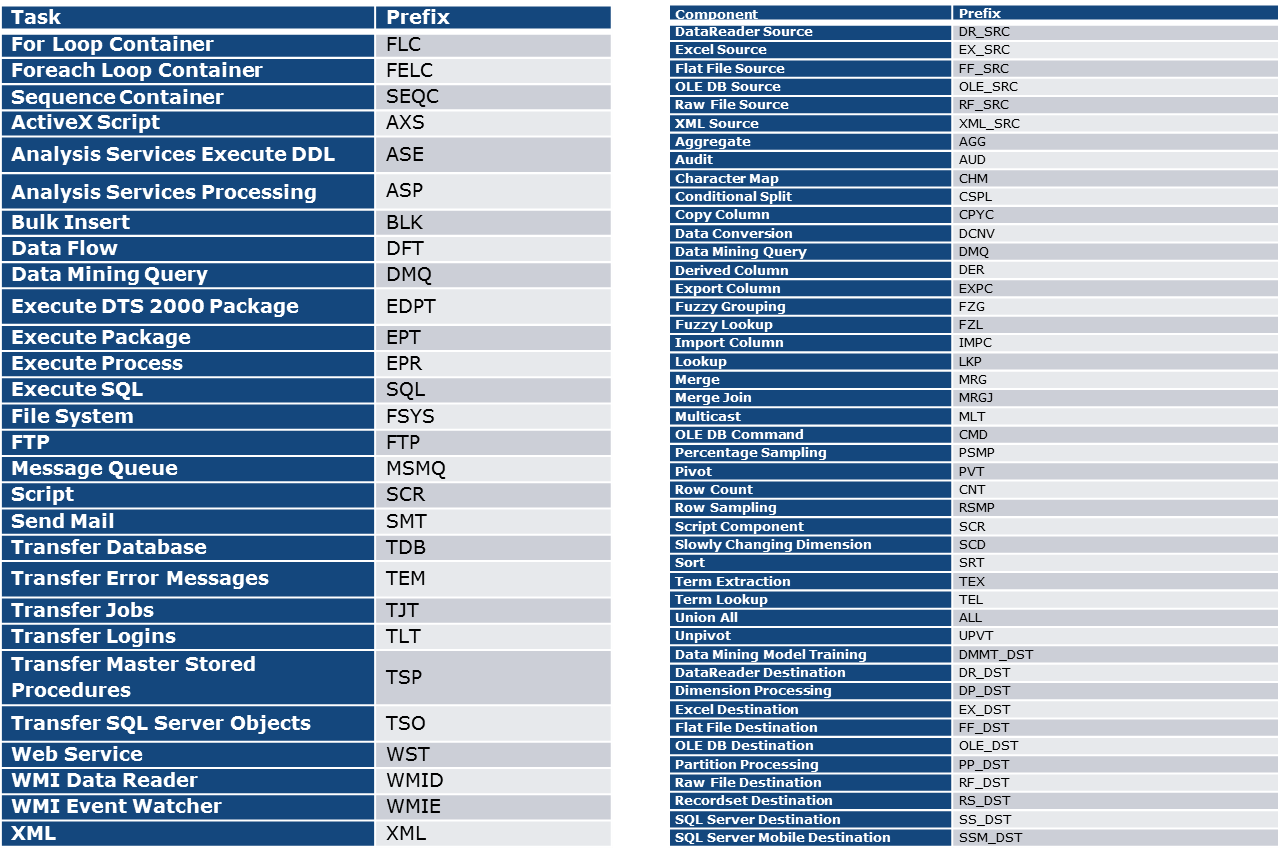

4. Example of Acronyms for Package Annotations

References

- Using Business Objects Data Services to combat ETL problems arising from bad source Data. Don Loden, BI Consultant at Decision First Technologies. October 5 – 7, 2010, Orlando, Florida.

- Using Business Objects Data Services to combat ETL problems arising from bad source Data. Don Loden, BI Consultant at Decision First Technologies. October 5 – 7, 2010, Orlando, Florida.

Note: Please contact Don Loden if you want the full presentation, the link has been taken down.