Introduction

Floating point arithmetic is fast, however, the precision is limited. This is a problem for many algorithms, especially for vector arithmetics. The result of intersection calculations of lines and planes are usually not accurate.

The intersection point of two lines is not exactly on these lines. Therefore it is necessary to compare the results with epsilon tolerances. In result, for all of such algorithms we have only a robustness, there is always input with wrong output.

To solve the problem, it is a known approach to calculate with rational numbers instead with floating point or decimal numbers. This is not as fast but the results are always correct and the precision is unlimited. Internal such number works with numerator and denominator and like school mathematics, we can multiply add, subtract and divide without restrictions.

We can exactly calculate with fractions like 1/3 what would be 0.3333... as floating point number. And fortunately, these operations are enough for the critical vector calculations like dot- and cross product.

Background

Unfortunately, we have no rational number class as part of the system but there are several very similar implementations. For C#, I found the class BigRational in several versions and from several locations, numerator and denominator mostly as System.Numeric.BigInteger.

It works to use such solution but I found out that it is not very efficient.

There is the problem with size. BigInteger has internal int sign and uint[] bits field. The structure size is already 64 bit (32 bit system) and they need two for the Rational class. Therefore Rational is something like a 128 bit data type.

For vector algorithms with thousands of vertices, it needs a lot of memory.

There is the problem with speed. I saw that the Microsoft implementation of BigInteger is not very efficient, probably based on old C++ code without special CLI optimizations.

Therefore, I wrote my own Rational class with some new approaches and I can show that this solution is at least 5 times faster than a solution based on Microsoft BigInteger or similar BigInteger classes.

To solve the size problem, I decided not to use BigInteger. Therefore, I keep numerator and denominator in one array if necessary. In result, I need only half of memory. Additionally, for small numbers, I keep numerator and denominator in the sign field, no array memory is necessary. This works well for a great range of typical numbers: +/- (0..32768) / (1..65536)

Numbers like 0,1,-1, 0.1, 100.5, etc.

To solve the speed problem, I have a set of approaches. I found out that it is definitely faster to use unsafe code for this. Unsafe code can be faster and must not be necessarily unsafe so long as the code is correct.

The typical case is that we need the most memory for interim results. To allocate this memory always in C# arrays is possible but long term we get a lot of GC loops which costs time and is not necessary.

Therefore, I use stackalloc where possible to keep interim results short term. There is additionally a small ring buffer on unmanaged memory in use to keep interim results between operator calls. This is a little bit dangerous, a long sequence of operator calls could end in overflow but only at development time.

To make speed, I reduced function calls where possible. We have no real inline function steering in C# and therefore I wrote self inline code where possible.

Now the code is not very good readable but this is the compromise for 5% more speed.

The bottleneck function for rational arithmetics is always to calculate the greatest common divisor (GCD algorithm). I took the Microsoft BigInteger GCD algorithm and found some nice improvements. The GCD for small numbers was not optimal, many checks and code for cases that are not possible is gone. So we have all together 10% more speed and less code.

Another new concept to make speed is to reduce the GCD calls itself. I found out that especially for interim results, it is many times faster to calculate internal with larger fractions and to apply GCD only at the end before it is necessary to allocate managed memory to keep the final result.

For other important functions like Sign, I never call GCD. The Sign test is important, many calls if we test points against planes for example. For such calls, we need no memory allocation, no GCD and it can be 50 times faster if we process a big array of vertices.

Using the Code

The demo project is a small speed test application.

As reference, I added the class BigRational based on Microsoft BigInteger.

The class NewRational is the new implementation and as one file solution, it is easy to import in other applications.

The speed test is a meaningless calculation on lots of random numbers just to make a lot of calculations similar to sequences of typical vector calculations.

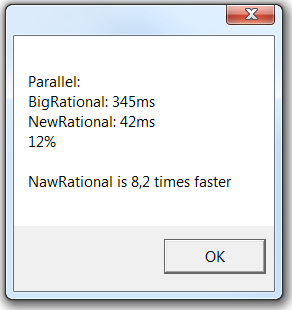

In result, I show and compare the times using BigRational and NewRational. It shows on my i7 machine that NewRational is more than 5 times faster. In 64Bit mode, I get additional 15% more.

Finally, the same calculation in parallel what is more than 3 times faster. Interesting that in parallel mode NewRational is more than 8 times faster. I don't know why.

Don't forget to test in Release mode. In Debug, it's only two times faster because BigInteger is always in release.

Points of Interest

I publish this and hope that others find improvements, extensions or bugs. Some internal details like the ring buffer approach is probably not the best solution. Maybe someone has a general faster implementation. This would be interesting.