Trying to Avoid It… Among other things…

Since the advent of “Extreme Programming (XP)” in the late 1990s, software professionals have been trying to find new ways to develop quality applications under ever-tighter deadlines and smaller budgets, with ever fewer resources. Much of the impetus behind these efforts came from pressures forced upon IT organizations by the then-new business fad of outsourcing in-house talent to less expensive foreign firms. In a very real sense, the traumas visited upon the professional software development community, especially in the United States, encouraged the growing avoidance of proven strategies. Developers did this to satisfy technical managers who were under the same pressure to produce but lacked the courage to manage the deteriorating circumstances properly.

The “XP” development paradigm was the first formalized attempt to scale back the foundations of project development: initial planning analysis, requirements analysis, design analysis, scheduling analysis, and the project adjustments that come out of planned risk analysis. The idea was that projects could continue to be built on a “code first” basis, as had increasingly been done with the rise of microcomputer development, an approach that still plagues many IT organizations today. To be fair, “XP” did not intend to eliminate these foundations entirely. It only meant to reduce their importance, but in doing so it ignored how critical they are to quality software development.

Before “XP”, most software development had been done with a standard “waterfall” process. A master project design was devised, and each of its steps was planned, analyzed, designed, and then implemented. Needed adjustments and defects found in each phase were handled by looping back to the phase’s starting point, correcting the issue, and then moving forward through that phase again. It was a time-consuming but ultimately successful process in the mainframe era, and it carried over successfully into the early phases of the microcomputer evolution.

Unfortunately, the initial endeavor that gave rise to the “XP” paradigm had a mixed record of successes and failures, and the project was eventually cancelled, leaving “XP” as a failed construct. Nor was it especially well received in the nascent microcomputer arena. Many saw it simply as a means to ignore time-tested development processes, and its inclusion of “pair programming” made little sense to many veterans of the Information Technology field.

Nonetheless, professionals at the time still sought ways to run new software endeavors on the basic concepts that “XP” had promoted. Management, both technical and non-technical, aided this process indirectly by eventually eliminating the business and systems analyst tiers in many organizations. This was also a consequence of massive outsourcing and of claims that cost reduction required it. The move rested on the unfounded assumption that developers could absorb that expertise on top of their technical skills.

This process eventually produced the “Agile” development paradigm, which in some cases incorporates “XP” concepts. In recent years, Agile has begun giving way in some organizations to a more enterprise-oriented version of its concepts, now called “DevOps”. This new construct attempts to integrate development and operations more tightly. However, older and more time-tested frameworks such as ITIL (Information Technology Infrastructure Library) already accomplish this. Unfortunately, so many senior professionals are now gone from the profession that few remain who can help younger personnel adopt such mature processes. As a result, younger personnel believe they are creating something new with their own interpretations.

Both the “Agile” and “DevOps” followings have developed their own “manifestos”, which in essence means that these paradigms are as much ideological as they are technical. The vendor hype surrounding them supports the claim that they are more ideology than credible software engineering practice. That hype includes new tools built to accommodate their use, along with the growing and often very vocal “movements” behind them. Though they may not have been conceived as ideologies, many of the posts promoting these paradigms provide ample evidence that ideology is involved.

Software engineering, by contrast, is institutionalized and has no loud or vociferous groups campaigning for its use within IT organizations. The principles, practices, and processes of the discipline are quite varied. They encompass many capabilities that can be applied to any type of software development effort, large or small. These capabilities also include processes very similar to the ones that “Agile” now touts.

The practice of software engineering is predicated on many years of research into development paradigms and project analytics. The latter covers both successful and failed projects, along with the causal factors behind each. This research enables software development professionals to apply metric analysis to software development endeavors. One example is the analysis promoted by the successful process improvement model CMMI, or “Capability Maturity Model Integration” (https://en.wikipedia.org/wiki/Capability_Maturity_Model_Integration). With metric analysis, a mathematical foundation can be built to analyze and forecast the expected outcome of any project endeavor.

CMMI is not tied directly to software engineering, since it must also deliver efficiencies in general business and other technical processes (e.g., inventory management). It does, however, provide processes for properly integrating software engineering analytics into development projects. Over time, these analytics have proven to deliver the shortest development schedules within budget. They have also reduced defects to the point where some projects can claim zero-defect deliverables running in production.

Unfortunately, both of these highly successful process environments seem so counter-intuitive to most business and technical managers that implementing either one is often a severe struggle. This applies both to practitioners and to anyone who wants to learn and integrate them. This rejection of practical software engineering paradigms is embedded in the psyche of software development managers and their supervisors. Such managers are usually more interested in meeting elusive deadlines than in producing actual quality.

Both the “Agile” and “DevOps” paradigms appear to be more cultural theory than anything else. Where either has been successfully implemented and metrics prove that success, the professionals involved most likely implemented sound software engineering methodologies and/or process management techniques. That success likely owes little to the “buzz” and “hype” surrounding these new technical incarnations. Not surprisingly, the Software Engineering Institute (http://www.sei.cmu.edu/index.cfm) does mention “Agile” in its research, but not in the context one might expect. There, “Agile” is subordinated to actual software engineering practices.

To sum this all up simply: quality software can only be developed the way quality software has to be developed. You can change the paradigm you use to go about it, but if it does not include the constructs needed to yield quality deliverables, the paradigm itself won’t mean much. Against this, proponents often point out that successful corporations such as Google have invested in “Agile” and its more recent enterprise incarnation, “DevOps”, and used them successfully. However, high-tech companies such as Google and Microsoft simply could not produce their quality products without the sound concepts found in standard software engineering practices. Even so, these organizations do not have perfect software production records and have in fact experienced massive failures, which suggests that something is amiss even among these highly successful entities. Where they do produce quality products, their development paradigms may be called something other than software engineering, but that is still what they are. Anyone who believes otherwise is simply fooling themselves.

“Agile” has sought to rectify the original shortcomings of “XP”. However, as many IT organizations appear to practice it, Agile has not done much to remedy them. In such organizations, it is still “forget the requirements and design and just get to the coding…”

Initial Planning Analysis: Two Basic Concepts

Whether you call it software engineering or something else, “Initial Planning Analysis” has to be the first phase of any new project or task if it is to succeed. Ignoring its vital importance guarantees some level of project failure in the end. This helps explain why so many software endeavors continue to fail at the often-cited rate of around 70%, which is still quoted as the overall project failure rate across the IT profession.

Many have noted that ours is still the only technical profession that has never matured to the point of having definitive standards and processes for producing quality deliverables. This is directly attributable to younger, succeeding generations of professionals who are still searching for a “magic elixir”. They hope it will let them create quality products under the increasingly impossible circumstances imposed by technical and business management. Given present trends, one should expect a complete systemic failure of the profession at some point as this futile search continues. There is a limit to how quickly any quality software product can be created.

It should be noted that, from a software engineering perspective, project failure is not an all-or-nothing construct. In these terms, a project fails when any aspect of its plan is not met. Thus, a project is considered a statistical failure if it goes over budget, fails to meet stated requirements, misses scheduled deadlines, or simply experiences defects in processing. One consequence of this definition is that a project need not be declared unusable to count as a failure, though many in fact turn out to be.
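
To make this definition concrete, here is a minimal sketch. It assumes a project's plan and outcome can be reduced to the four aspects named above: budget, requirements, deadline, and production defects. The `ProjectPlan` and `ProjectOutcome` types, their fields, and the dates and figures in the usage lines are hypothetical illustrations, not part of any standard model.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class ProjectPlan:
    # Hypothetical planned targets for a project.
    budget: float
    required_features: frozenset[str]
    deadline: date


@dataclass
class ProjectOutcome:
    # Hypothetical measured results once the project is delivered.
    cost: float
    delivered_features: frozenset[str]
    delivery_date: date
    production_defects: int


def failed_aspects(plan: ProjectPlan, outcome: ProjectOutcome) -> list[str]:
    """Return every planned aspect that was not met; an empty list means success."""
    failures = []
    if outcome.cost > plan.budget:
        failures.append("over budget")
    if not plan.required_features <= outcome.delivered_features:
        failures.append("requirements not met")
    if outcome.delivery_date > plan.deadline:
        failures.append("deadline missed")
    if outcome.production_defects > 0:
        failures.append("defects in processing")
    return failures


def is_statistical_failure(plan: ProjectPlan, outcome: ProjectOutcome) -> bool:
    # Missing any single aspect is enough to count as a failure.
    return len(failed_aspects(plan, outcome)) > 0


# A usable project, under budget and feature-complete, but delivered late.
plan = ProjectPlan(100_000.0, frozenset({"import", "report"}), date(2024, 6, 30))
outcome = ProjectOutcome(95_000.0, frozenset({"import", "report"}), date(2024, 7, 15), 0)
print(failed_aspects(plan, outcome))  # ['deadline missed']
```

The late but otherwise complete project in the usage lines still counts as a statistical failure, which is the point the paragraph makes.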

Sound “Initial Planning Analysis” must begin with the intended users and developers reaching two primary understandings…

- The intended goal of the project

- The aspects of the overall project that the user would like to control

Believe it or not, understanding the intended goal of a new project or task is critical to its success. Understanding the goal also defines the project’s intended scope, so everything eventually planned and designed for the endeavor is limited by that constraint. Without such a limitation, a development team cannot move forward with accurate planning and implementation phases, because the project is simply too open-ended to accommodate.

Unfortunately, most projects have been, and still are, presented as open-ended endeavors, with the expectation that the codebase be made as flexible as possible so new requirements can be added as needed. Such a setup invites the technical manager’s, and in some cases the lead developer’s, preference for “What if?” thinking. This kind of thinking is often promoted by ambitious technical managers or technicians who want their projects to be easily extensible so that anything can be added to them. Such personnel believe all code should be extensible, and in many cases generic, so that it can be molded like Silly Putty. This is an ingrained part of the “magical thinking” that many software projects suffer from.

Realistically, one can only plan for the requirements that are known, while keeping an eye on possible variations of specific processes such as data imports. Certain processes can also be considered as possible additions in later phases of a project. Even here, however, such thinking must come with some level of definition. Saying that a project may require the ability to process data imports via MSMQ in reality says nothing at all, since even this requires precise planning.

In practice, such open-ended projects are prime candidates for eventual failure. Development teams cannot concentrate on the known requirements but must split their efforts between the known and the unknown, a nice trick if you can actually hire someone who can predict the future. As a result of such open-ended thinking, a well-defined project cannot be planned and carried through to quality completion. Its scope changes so regularly that it quickly gives rise to “feature creep”, which has been the bane of many projects that have suffered its consequences.

There are, however, many successful projects that do plan for extensibility. In those projects, the extensible capabilities are known or expected to be added in later phases of the project’s lifetime, often with major completion milestones along the way. For example, an application may be expected to receive imported input from many sources (e.g., CSV file uploads). In that case, the code responsible for imports can be designed so that adding a module for a new import process follows an easily discernible pattern. Yet you would be surprised how many technical managers do not plan for such eventualities. Instead, they treat “whimsical thinking” as a legitimate demand on project development. Such thinking often falls into the category of “maybes”…
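
The planned extensibility described above can be sketched as a small registry of import handlers, assuming each import source is identified by a string key. The names `register_importer`, `import_data`, `import_csv`, and `import_tsv` are hypothetical. The point is that adding a new, known source means writing one function and registering it, without touching the existing handlers.

```python
import csv
import io
from typing import Callable

# A record is one row of named fields; an importer turns raw text into records.
Record = dict[str, str]
Importer = Callable[[str], list[Record]]

# Registry of known import formats, keyed by a hypothetical format name.
_IMPORTERS: dict[str, Importer] = {}


def register_importer(fmt: str) -> Callable[[Importer], Importer]:
    """Decorator that adds an import handler for one source format."""
    def decorator(func: Importer) -> Importer:
        if fmt in _IMPORTERS:
            raise ValueError(f"importer for {fmt!r} already registered")
        _IMPORTERS[fmt] = func
        return func
    return decorator


@register_importer("csv")
def import_csv(raw: str) -> list[Record]:
    # csv.DictReader uses the first row as field names.
    return list(csv.DictReader(io.StringIO(raw)))


# A later phase adds a new format by following the same pattern.
@register_importer("tsv")
def import_tsv(raw: str) -> list[Record]:
    return list(csv.DictReader(io.StringIO(raw), delimiter="\t"))


def import_data(fmt: str, raw: str) -> list[Record]:
    """Dispatch to the registered importer; unknown formats are rejected explicitly."""
    try:
        importer = _IMPORTERS[fmt]
    except KeyError:
        raise ValueError(f"no importer registered for {fmt!r}") from None
    return importer(raw)


print(import_data("csv", "id,name\n1,Widget\n"))
```

The design choice matters for the argument in the text. The registry only extends along a dimension that was planned for (import formats), rather than trying to make the whole codebase generic.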

The second item noted above is actually the most important basis for any project’s success. This is the part of initial project planning where users and developers decide which areas of a project the user needs and/or prefers to control in order to meet their own requirements for a successful implementation. However, the user cannot be given complete control, or the project will fail. In that case, the development team would never be able to place legitimate constraints on the project’s scope, and its own design process depends on those constraints. Again, allowing a user to control the entirety of a project only subjects it to an ongoing, deleterious process of “feature creep”.

When developers meet with a user or a user team, the best way to present this aspect of project planning is to show them a picture of the “Software Trade-Off Triangle”…

For the project to succeed, the triangle’s three constituent parts must be kept in balance throughout the lifetime of the project. If it falls out of balance, developers and users must agree on how to re-balance it. The more complex the project, the more critical it becomes to keep the triangle balanced as the endeavor proceeds.

Again, a user can only be allowed to control two of the three aspects of the triangle. Therefore, if a user wants to control the “Product” and “Cost” aspects, the development team will define what the project “Schedule” is to be. If the user requires control only over the “Product” aspect, the development team can offer “Schedule” and “Cost” combinations and let the user select the one that best suits their needs.

To clarify, the three components of the triangle are defined as follows…

- Schedule – the “estimated” time it will take to complete the project successfully

- Product – the features desired in the product, with quality assumed

- Cost – the budget and resources allocated to the project

If a user insists on controlling all three aspects of a project, the development team must inform the user that such a project cannot be completed successfully.
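
The control rules above can be captured in a few lines. This minimal sketch models the three aspects as an enum and treats an agreement as the set of aspects the user controls. `Aspect` and `team_defined_aspects` are hypothetical names used only for illustration.

```python
from enum import Enum


class Aspect(Enum):
    SCHEDULE = "Schedule"  # estimated time to complete the project
    PRODUCT = "Product"    # desired features, quality assumed
    COST = "Cost"          # budget and allocated resources


def team_defined_aspects(user_controls: set[Aspect]) -> set[Aspect]:
    """Return the aspects the development team must define.

    The user may control at most two of the three aspects; a request to
    control all three leaves no room to balance the triangle and is rejected.
    """
    if len(user_controls) >= len(Aspect):
        raise ValueError("a user cannot control Schedule, Product, and Cost together")
    return set(Aspect) - user_controls


# User controls Product and Cost, so the team defines the Schedule.
print(team_defined_aspects({Aspect.PRODUCT, Aspect.COST}))
```

When the user controls only `Aspect.PRODUCT`, the function returns both `SCHEDULE` and `COST`. Those are the aspects for which the team would offer the user a choice of combinations.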

Once the project’s primary goal is understood and it is settled who controls which of its aspects, a general project scope has been established and actual requirements analysis can begin.

A Word on “Estimates”

During initial planning meetings, the development staff are quite commonly asked for an “estimate” of when a production-level product will be delivered to the user. Users and technical managers alike assume that once the project’s goal has been defined, experienced development professionals can also provide an “estimated” target date for delivery. That date is then accepted as the “actual” delivery date. Nothing could be further from the truth.

The problem with “estimates” is that they are simply that. When provided at such meetings, they are at best educated guesses based on generalities, and even calling them educated guesses stretches the concept a little too far. Many technical managers with no real experience managing quality software development revere the idea that anyone can estimate with any accuracy this early in planning. It makes them look good to their business users and supervisors, but that perception is often fleeting. When the project begins in earnest, any such “estimate” is quickly found to be completely untenable. The technical manager is then in the all-too-common position of demanding unjustified overtime to push development staff toward a deadline that no planning supports.

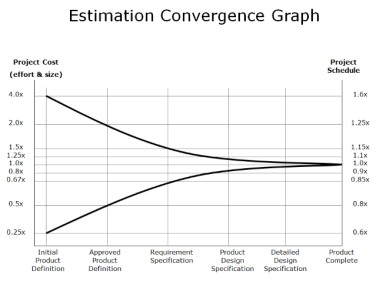

Statistically, estimates made at such an early stage have consistently proven to be either too long or too short relative to the time actually needed to complete the project. As McConnell’s graph demonstrates, the spread between the lowest and highest of such estimates can be as large as a factor of 16. Though this information was published more than 20 years ago, its statistical basis is still quite relevant to today’s projects, since nothing has really changed in how unsupported project estimates are provided.

“Rapid Development”, McConnell 1996


All project timelines, no matter how they are planned and defined, progress with increasing granularity, which allows developers to provide increasingly accurate target dates. A phased approach to projecting completion dates has another advantage: the resulting date often falls at the shortest time in which the specified project could have been delivered with quality results. Using the phases marked in the graph above, developers can move step by step from an estimate to an actual completion date. This gives them far more flexibility in meeting that date than trying to adhere to an initial estimate. As the graph shows, the accuracy of projected completion dates increases as more design data is incorporated into the planning process.
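
The phased refinement described above can be illustrated by applying range multipliers to a single point estimate at each phase. The multipliers below are the effort ranges commonly quoted for the “cone of uncertainty”. They start at 0.25x to 4x at initial product definition, which is where the factor of 16 comes from, and narrow as design detail accumulates. Treat them as illustrative assumptions rather than figures read off McConnell’s graph. The phase list and `estimate_ranges` are hypothetical.

```python
# Assumed (phase, low, high) multipliers; illustrative values, not measured data.
CONE_OF_UNCERTAINTY: list[tuple[str, float, float]] = [
    ("Initial product definition", 0.25, 4.0),
    ("Approved product definition", 0.5, 2.0),
    ("Requirements specification", 0.67, 1.5),
    ("Product design specification", 0.8, 1.25),
    ("Detailed design specification", 0.9, 1.1),
]


def estimate_ranges(point_estimate_weeks: float) -> list[tuple[str, float, float]]:
    """Return the (phase, low, high) range in which the actual duration might fall."""
    return [
        (phase, point_estimate_weeks * low, point_estimate_weeks * high)
        for phase, low, high in CONE_OF_UNCERTAINTY
    ]


for phase, low, high in estimate_ranges(20.0):
    # The spread (high / low) narrows from 16x toward roughly 1.2x.
    print(f"{phase:<30} {low:6.1f} to {high:6.1f} weeks ({high / low:.1f}x spread)")
```

A “20-week” guess given at the first meeting really stands for anything from 5 to 80 weeks. The range only becomes tight enough to commit to once detailed design is done.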

To avoid this situation in initial planning meetings, the development staff should take the initiative and decline to provide any such unsupported estimates. This applies no matter which party controls which parts of the “Software Trade-Off Triangle”. Instead, the best course of action is to tell the user that an “estimate package” will be provided after proper analysis of the initial requirements, once those requirements have been completed. The package comes with two caveats. First, it is only an initial “estimate” based on what is known about the project so far. Second, as planning proceeds, the estimate should be expected to shrink rather than expand.

Such an “estimate package” will be based on the actual project requirements and data known at the time it is provided. However, completion date planning only comes into its own once all of the project’s requirements have been provided. At that point, the development staff can break down the individual timeline each component of the project will require.
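
Once each component can be estimated on its own, the per-component timelines can be rolled up into a project figure. This sketch gives each component optimistic, most-likely, and pessimistic durations and combines them with the three-point (PERT) weighted average. That technique is a substitution chosen for illustration, not the estimation method this series will describe later. The component names and durations are hypothetical, and the combined deviation assumes the components vary independently.

```python
import math
from dataclasses import dataclass


@dataclass
class ComponentEstimate:
    # Hypothetical three-point duration estimate for one component, in days.
    name: str
    optimistic: float
    most_likely: float
    pessimistic: float

    def expected(self) -> float:
        # PERT weighted mean: (O + 4M + P) / 6
        return (self.optimistic + 4 * self.most_likely + self.pessimistic) / 6

    def variance(self) -> float:
        # PERT standard deviation is (P - O) / 6; variance is its square.
        return ((self.pessimistic - self.optimistic) / 6) ** 2


def roll_up(components: list[ComponentEstimate]) -> tuple[float, float]:
    """Return (expected total days, standard deviation), assuming independent components."""
    total = sum(c.expected() for c in components)
    std_dev = math.sqrt(sum(c.variance() for c in components))
    return total, std_dev


components = [
    ComponentEstimate("data import", 4, 6, 11),
    ComponentEstimate("reporting", 3, 5, 10),
    ComponentEstimate("user administration", 2, 3, 4),
]
total, std_dev = roll_up(components)
print(f"expected {total:.1f} days, +/- {std_dev:.1f} (one standard deviation)")
```

Because the figure is built from the components themselves, it can be revised one component at a time as requirements and design firm up.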

Until that level of analysis has been done, all such “estimates” are nothing more than general expectations of when a project might be completed. Again, such preliminary estimates can be nothing more than an experienced developer’s best educated guess.

The specifics of preparing an estimate package that reflects actual component timeline analysis will be fleshed out in a later part of this series. To get to that point, however, we first have to investigate the first critical aspect of project design, “Requirements Analysis”. This is the phase that supplies much of the project’s basic design data.

“Requirements Analysis” will be part II of this series…