Introduction

This second part deals with contexts. A context is a policy field to be applied during parsing of a text. A text is not always formed by the same rules. For example, literal character strings are supervised by both a quotation ends of the literal. Between these two quotes, all characters and all the words are not involved not at the same parsing than the entire text.

Similarly, all programming languages offer add comments will never be taken into account in the compilation. However, these comments serve for "comment" or even document the program procedures (typing, defining what the procedure, etc.) and why not, manage the traceability of changes during maintenance of computer programs in the comments.

Another case more difficult, are dynamic web pages and scripting languages such as JavaScript or VB (for Windows) used directly into the Web page to display. The HTML tag <script> language attribute must contain; generally, this attribute is JavaScript but it is possible to choose as VB language. Under Windows, the script is then recognized as a Visual Basic script. Similarly, labels containing the prefix "asp" (for ASP.NET) or the prefix <?php for PHP scripts have an impact for a common parsing system while each technology tends to be compartmentalized in a system that does not support all formats.

Why is it useful ?

From these diverse views, it seems that define a parsing context is paramount in the reverse-but engineering not in the case of the operating programs by each system. Also, parsing the text parts in different syntax demonstrates that it is not necessary to analyze the entire text but only the fragments. However, a hybrid parsing system capable of handling the full text of an end to end free partitioning of fragments of the text by simple delimiters and dealing with a delay seems to have an interest in reverse-engineering and the evolution of programming languages in general. It is therefore important to know define contexts to analyze fragments of programming languages without losing the dynamic potential impact of these fragments.

The problems are as follows

- "In general, how differently analyze the fragments identified by a delimiter at both ends?"

- "What should we develop algorithm to switch context to another without treating the fragments separately?"

- "How to ensure that the algorithm used is not closed in some languages 'original'?"

<o:p>

Background

In the previous article, I talked about the VB language and the wildcard character used to treat an instruction on several lines as one. Using a context and associate grammatical rules for a text fragment must be able to properly handle the VB syntax.

Function Call (param1, param2, _

param3, param4, _

param5)

You can download VB .NET specification to the web address https://www.microsoft.com/en-us/download/confirmation.aspx?id=22306. The document states that Visual Basic supports the continuation of a line starting with a space followed by the character _ and 0 or more spaces followed by a carriage return. If the VB specification BNF used in this document, this does not mean, provided that the language was developed with a program like LEX or FLEX. We note that Microsoft never provided a lexical and syntactic analysis program and that there was before .NET, no library of its kind for Windows. While In Linux, FLEX and BISON are usually installed by default.

Generally, program a "compiler" (or just parsing) requires 5 steps:

- It seems that there is no difficulty in creating a FLEX file and (after) BISON for any programming language whatsoever, even those that do not exist yet.

- When you begin to create the FLEX file, there are already some small difficulties to get to correctly parse the text with all tests. There is always one or more tests do not work immediately.

- Once exceeded this stage, we must continue to complete the entire source programming language without causing regression on previous tests. The big problems are beginning and we realize that FLEX is not that well, actually, and it makes the task very difficult or even understand that it is impossible to complete parsing facto with FLEX.

- Then you have to focus on because BISON and FLEX is never enough. We must then create the BISON file and finally generate the syntax tree necessary to read the compiler.

- Finally, we must:

- Manage all the possible errors: granularly treat each mistake and have a correct description of the indication of the error, the nearest possible to the "real" reason.

- Do not stop "compile" at the first error and propose possible options.

It will therefore be to complete the program that compiles a programming language whatsoever. This is where it will take several months to ensure stability and high reliability by using it regularly. Learning and a huge job wait over several months finally arrive at a milling about right and head full of developments, even a complete overhaul of your developments own analysis tool without FLEX nor BISON!

These programs are fine for small scanners but they are not at all suitable for a professional project or a "real" compiler. Also, grammatical and syntactic analyzers are legion, not because it's exciting and difficult but only because of the inflexibility of the proposed tools yet.

Using the code

User manual

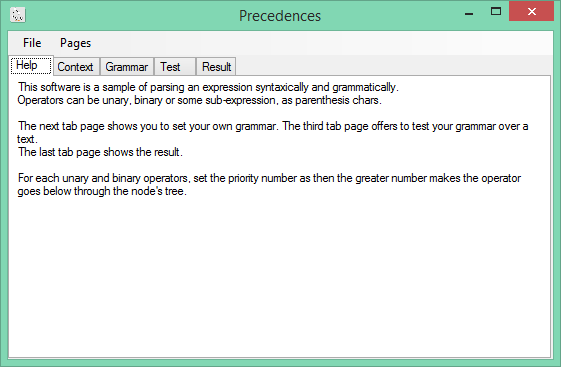

I first present a description of the use of the software. The software is called Precedence because I use a concept original definition of precedence with a single parameter: a number of a priority. The number of the lowest priority is 1. It is the priority for all terms (numbers and names). The higher numbers define the priority for operators knowing that the following operators are sorted in ascending order of priority: + - * /. Parentheses may be attached and in this case, the precedence is changed.<o:p>

The application displays a single window.<o:p>

There are 5 tabs and a menu: Help, Usage, Grammar, Test and Result. File and Pages menu: File menu to load / save a file extension * .f containing examples of rules grammars, the second menu shows each tab.<o:p>

The Context tab is a list where each line contains a check box, a name and a title. The stored information in the form of data corresponds to all the contexts of any of the list in this tab. Each context defining a finite set of grammar rules to be specific to this context; the context has a name to identify it uniquely and title; it is the title that is displayed in the Grammar tab.

The Grammar tab matches the configuration of a set of grammar rules for a context selected following the list dropdown placed just above the list of rules. The example below shows the rules to identify affectations with the = operator and could express an XML tree. Parentheses are used to change the precedence of operations.

<o:p>

The fourth tab is used to select a sample test with a drop-down list. The text to be analyzed is in the entry area; the area is editable; a button to add a new test or keep the changes to the text. Click on the button "Parse" performs parsing of the text in accordance with the rules of grammar defined in the tab "Grammar".<o:p>

The last tab shows the results of the analysis. The result is the XML representation of the tree hierarchy parsing.<o:p>

Defining the rules of grammar<o:p>

- A rule is first identified by a target i.e. its type:

- No action: it nothing happens: this rule is useful for "eat" certain words.

- Expression list: This action creates a new expression and stores it in a list; it creates a series of successive expressions.

- Open brackets: this action opens a parenthesis in an expression.

- Close brackets: this action ends a parenthesis; a closing parenthesis followed an opening parenthesis, otherwise a parser error occurs.

- Exchange Context: This action performs a change of immediate context; when the context is changed, the rules of grammar are applied that of the context and selected. You can create a new instance of the context or resume the previous instance. Context precedent is cached.

- Close Context: this action ends a context; the previous cached context is taken and its grammar rules apply again; grammar of the closed environment is no longer applied.

- Open sub-expression: this action starts a sub-branch of the tree.

- Close sub-expression: this action goes in the sub-branch of the tree.

- Unary operator: indicates a unary operator; you must specify a priority number.

- Binary operator: Binary; you must specify a priority number.

- Statement: indicates that this is an instruction.

- Term: indicates that it is a term.

- A unary or binary operator must have a higher priority number or equal to 1. An instruction or term still has the lowest priority, that is to say 1. The higher the priority number is high more the operator is secondary to the other: so, whenever a priority operator larger is encountered, it is below the smallest priority number of operators. For example, the addition operator + has a lower priority than the multiplication operator * is a priority because the multiplication with respect to addition; also, the multiplication is automatically placed below a + operator if there is one. Similarly, if a multiplication followed by an addition, then the addition takes place automatically in the shaft above multiplication. The priority number is therefore the sole indicator of how operators and terms are stored in the tree. The terms and instructions have the level of the lowest priority because it will always be the leaves of the syntax tree.

- The objects to build in the tree are uniquely identified by a regular expression.

- In the case of a change of context, context field is used to indicate the name (not the title) the exact context. The value in the next field is selected if the previous instance of the context should be used. The unchecked box indicates the need to continue the analysis of the text with a new instance of the context.

- Attributes can be specified: Attributes are named by name and contain a value. The suite name = value [, ...] form the set of attributes. The attributes are added to the XML representation of the resulting syntax tree.

Application

The most common tests are the recognition of literals: numbers and strings. Other tests are possible, such as XML and / or HTML or dynamic web pages. Here is the BNF example :

start

ident := [a-zA-Z][a-zA-Z0-9_]*

literal := entier | decimal | scientific | any-string | quoted-char

entier := non-zero-chiffre chiffres

non-zero-chiffre := [1-9]

chiffre := [0-9]

chiffres := chiffre*

decimal := entier "," chiffre+

scientific := entier "." chiffre+ "E" ("+"|"-") entier

dchar := "\\\\" | "\\\"" | "\\" | "\"" | .*

dstring := dchar*

dquoted-string := "\"" dstring

char := "\\\\" | "\\'" | "\\" | "'" | .*

string := char*

quoted-string := "'" string

quoted-char := "'" char

any-string := quoted-string | dquoted-string

name := [a-zA-Z][a-zA-Z0-9_-]*

attribute := name "=" quoted-string

attributes := attribute* | ">"

xmlOpenNode := "<" name attributes

xmlCloseNode := "</" name attributes

xml := (xmlOpenNode | xmlCloseNode | ident)*

start := ident "=" literal | "xml" xml | "html" xml

Note the two special cases in which the context is cached and changed for another context and returns the context cached: a string characters and xml between the <and>. We can be sure that the transition from one context to another corresponds to the term under FLEX reentrant. However, the fact to exchange two existing contexts must instantiate multiple FLEX grammars.

Here then the application result PParser

| Context Name

| Context Title

| Is start Context

| Rule Name

| Type of rule

| Lexic word

| Context change

| Renew Context

| Attributes

|

| default

| Default context

| True

| | | | | | |

| quote

| a literal quoted string

| False

| | | | | | |

| tagxml

| tag xml

| False

| | | | | | |

| dquote

| a literal double quote string

| False

| | | | | | |

| default

| | | xml

| Unary operator

| xml

| | False

| |

| default

| | | IDENT

| Term

| [a-zA-Z][a-zA-Z0-9_]*

| | False

| |

| default

| | | Equal

| Binary operator

| =

| | False

| |

| default

| | | Number

| Term

| [1-9][0-9]*(\.[0-9]+)?(E(\+|\-)[1-9][0-9]*)?

| | False

| |

| default

| | | empty

| Term

| ""

| | False

| |

| default

| | | dblquote

| Context change

| "

| dquote

| True

| |

| default

| | | empty

| Term

| ''

| | False

| |

| default

| | | quote

| Context change

| '

| quote

| True

| |

| default

| | | openNode

| Context change

| \<[a-zA-Z-]+

| tagxml

| False

| |

| default

| | | list

| Expression list

| \r\n

| | False

| |

| default

| | | closeNode

| Context change

| \</[a-zA-Z]+

| tagxml

| False

| |

| default

| | | inner

| Open brackets

| \(

| | False

| |

| default

| | | outer

| Close brackets

| \)

| | False

| |

| quote

| | | Quote

| Term

| \\'

| | False

| escape=true

|

| quote

| | | Backslash

| Term

| \\\\

| | False

| escape=true

|

| quote

| | | Escape

| Term

| \\[^' ]

| | False

| escape=true

|

| quote

| | | NoEscape

| Term

| \\

| | False

| no-escape=true

|

| quote

| | | End

| Context stop

| '

| | False

| |

| quote

| | | string

| Term

| [^\\']*

| | False

| |

| tagxml

| | | attributeName

| Term

| [a-zA-Z-]+

| | False

| |

| tagxml

| | | Equal

| Binary operator

| =

| | False

| |

| tagxml

| | | dquote

| Context change

| "

| dquote

| True

| |

| tagxml

| | | quote

| Context change

| '

| quote

| True

| |

| tagxml

| | | Close

| Context stop

| \>

| | False

| |

| tagxml

| | | spaces

| Expression list

| [ \t]+|(\r\n)+

| | False

| |

| dquote

| | | doubleQuote

| Term

| \\"

| | False

| escape=true

|

| dquote

| | | Backslash

| Term

| \\\\

| | False

| escape=true

|

| dquote

| | | Escape

| Term

| \\[^\ ]"

| | False

| escape=true

|

| dquote

| | | NoEscape

| Term

| \\

| | False

| no-escape=true

|

| dquote

| | | End

| Context stop

| \"

| | False

| |

| dquote

| | | string

| Term

| [^\\]+"

| | False

| |

The above table shows for each context the different rules to apply.

Also, the tests are as follows:

a = 1

b = 349095

c = 34.44

d = 344E+14

e = 34.000011E-10

e = ''

a = '1234.555'

b = 'reieopioiprerze \ jklfjdflkds \\ fjdljfdls \r\n \b \' kfdmlkfdkfdms'

e = ""

a = "aakfdfdjs fsdjklfj kfldsj fkljslks"

a = "1234.555"

b = "reieopioiprerze \ jklfjdflkds \\ fjdljfdls \r\n \b \" kfdmlkfdkfdms"

xml (<a>ttt</a>)

xml (<a>tt<b></b></a>)

xml (<a m='1' g='zzzzzz'>tt<b m='2' g="kfjsdlkjklfjs \ \r\n \"">rrr</b></a>)

Note that for some grammars, it would have been interesting to:

- Add / delete rules dynamically

- Assess content knowledge "no eating"

- Trigger a particular action if any of the terms has been recognized

Developments

The Fifo class implements a provider / consumer algorithm. The class provides FifoWriter subsequently recognized tokens. Lexical units are read once for all; no other reading or read-only product; the construction of the tree is optimal because the sub-branches are added successively without being replayed.

The FifoReader class implements a thread that processes the lexical data received subject to the form of expression objects whose class indicates the type of object to insert into the tree. The reading of data causes an immediate search for the position in the parse tree. An error can occur for example, when two successive binary operators. In this case, the resulting treatment may become chaotic. Class Expression encompasses all actions towards sending FifoWriter class. The methods of this class are applied to each type item send: an opening or closing parenthesis, as a phrase, a list of expressions, a unary or binary operator, a term or instruction.

A Terminate() method indicates that the treatment is over and must complete the execution of the thread. The insertion system object in the tree is implemented by three major classes: Mouvement, Nodule and NoeudNAire. The Nodule class and its derived classes NoduleOperateurBinaire, NoduleOperateurUnaire, NoduleTerme and NoduleInstruction are stored items in the tree. Each class implements the virtual function Print() that writes the representation in an XML document. NoeudNAire class is the one that performs the search of the insertion position in the graph taking into account the type of object to be inserted and its priority over other existing nodes already in the graph: It is possible to insert the top of the tree node or inserting instead of an existing node and this node move in a subgraph. According to the priority, if it is larger than that of an object of the shaft while the object to be inserted arises below. The tree may have two branches; with over two legs, it indicates that the grammar rules defined should be reviewed.

The class GrammarContext and WorkingContext deals with the context: GrammarContext class defines the name of the context and the list of rules associated grammars context. When one starts reading the file, the starting context is the first context in which the Start box is checked. To analyze the text, we use one regular expression; this regular expression is a list of lexicons of each rule context. An operator | (or) will add between two lexicons.

For example, for the context "tagxml" rules are

| tagxml

| | | attributeName

| Term

| [a-zA-Z-]+

| | False

| |

| tagxml

| | | Equal

| Binary operator

| =

| | False

| |

| tagxml

| | | dquote

| Context change

| "

| dquote

| True

| |

| tagxml

| | | quote

| Context change

| '

| quote

| True

| |

| tagxml

| | | Close

| Context stop

| \>

| | False

| |

| tagxml

| | | spaces

| Expression list

| [ \t]+|(\r\n)+

| | False

| |

So what will the following regular expression

(?<r1> [a-zA-Z -]+) | (?<r2>=) | (?<r3>\") | (?<r4>') | (?<r5>\>)|(?<r6>[ \t]+ | (\r\n)+)

In this regular expression, the order of words is very important: if two words start with the same correspondence, before the term must be one whose correspondence will be longer. For example, if A: = "\\\\" and B = "\\", it will declare A before B. For a given context, the regular expression will define all the rules of the context in the order listed.

string regexStr = String.Empty;

int index = 0;

foreach (GrammarItem g in c.ContextRules)

{

if (!String.IsNullOrEmpty(g.LexicWord))

{

if (index > 0) regexStr += "|";

++index;

regexStr += "(?<r" + index.ToString() + ">" + g.LexicWord + ")";

}

}

Then, each identified connection from end to end in the input text needs to search the corresponding rule and detect the type of rule among the types of possible rules, as seen in the list above.

Regex r = new Regex(regexStr, RegexOptions.Multiline);

Match m = r.Match(this.txtSample.Text, previous.MatchPosition);

for (int indexGroup = 1; indexGroup <= countRules; ++indexGroup)

{

Group g = m.Groups["r" + indexGroup.ToString()];

if (g.Success)

{

if (String.IsNullOrEmpty(g.Value))

{

++previous.MatchPosition;

goto redo2;

}

GrammarItem gi = c.ContextRules[indexGroup - 1];

lexic = new LexicItem(c, gi, g.Value);

lexic.MatchPosition = g.Index + g.Length;

break;

}

}

The variable previous is the previous match: MatchPosition property ensures that the search for correspondence pick up where it left off whatever the context.

Note: The test null string on the Value property of the Group class (class .NET regular expressions) provides that the position will continue further (to prevent an infinite loop) when nothing was read.

When the context has changed, the current context is cached and the regular expression is that of the new context; when treatment're finished with the current context, the previous cached context is taken and the regular expression is rebuilt.

Only when it comes to form the XML representation of the parse tree that we must move from one context to another to group XML fragments into one. Also, two classes of objects Nodule derived from class to control the construction of the entire XML file: NoduleContextChange and NoduleContextStop. The NoduleContextChange class retains the name of the work context to apply (running context instances) and NoduleContextStop class retains the name of the context of earlier work.

To represent all elements of the syntax tree, each node of the tree implements Nodules property. This property built the sequence of a tree node and all its sub-nodes. In addition, two additional nodes are added: XmlStartElement and XmlEndElement. Class XmlWriter .NET builds a syntax tree by mapping functions such as WriteStartElement() and WriteEndElement(). The whole algorithm sequentially build the syntax tree and ultimately to obtain a single XML file containing all the fragments built in each context.

Class Mouvement performs the actual insertion functions in the resulting syntax tree. It corresponds to the end of the processing of a node to be inserted in the tree.

If the implementation was carried out in this way, by using a thread in the background, it's because treatments are so detailed time took over reading treatment of lexical units. Also, an underlying system could allow to study other situations such as error detection, treatment and their indications (row, column, error description) to help the developer to correct his grammar rules or fix the source of its program.

Points of Interest

This is a great advanced technology in the field of parsing. Chomsky has always considered the text analysis and compilation as the most difficult technique to master and the longest to develop. With this program, and creative use of a priority number, recursive forms disappear in favor of a simple list of lexical units identified by a regular expression; reading the text to be analyzed is made from start to finish without proofreading and no state is required to follow the progress of grammar rules.

Reentrancy is implemented using contexts. Each set of rules of a context is context specific. It is possible to maintain the state of a context to resume later; an adaptation of the program with multiple files remains feasible. With this program, the definition of grammar rules is a list, not a parse tree.

The construction of a grammar becomes easy, simple, fast, intuitive and uncomplicated.

That will be very exciting to continue this project. The process can give more fun techniques and it could parse extremely specific programming languages, as well as new. While writing this code, some bugs has needed time to correct it; fortunately, VS has a great debugger.

This article talk about a software. A software that it could talk to.

History

In a future article, I will present the evolution of this software such as:

- Type inference (whole, real, operators with feedback data)

- Attributes with expressions and calculation functions

- Stores data computing during parsing

- The definition of n-ary operators (three or more sub-nodes)

- Syntax highlighting

- Preparing to direct compilation (or conversion code)

- Reverse-enginering