Introduction

Over the course of developing various large-scale distributed and cloud-based systems, my team ran into a number of situations that required a flag granting EXCLUSIVE ACCESS to a shared resource, or alternatively ensuring that ONLY ONE process (the LEADER, which could also be a worker, server, or service) running as part of the distributed solution can execute a certain workflow or act in a unique role.

Such a flag requires LOCK-like functionality that ensures the UNIQUENESS of the flag holder across the system, but that also ensures the lock is RELEASED and REALLOCATED if the current holder of the locked resource crashes.

The solution presented below uses the MongoDB NoSQL database as the provider of the atomic lock functionality used in this code sample.

Background

Let's consider the following scenario: you host your business logic processing services as a Worker Role in the Azure cloud. The worker role can scale out to any number of instances. All instances of the worker role get and process their jobs from some messaging queue. But, and here comes the reason for this article, one of the instances needs to assume a unique role, say, being responsible for activating the scheduled tasks that need to run in your system. There should be only one such service in the system at any point in time, because the same scheduled task must not be executed more than once at the same time.

One option would be a separate Scheduler component responsible only for running scheduled tasks, but going down that path would naturally require additional IT resources to deploy, monitor, provide redundancy for, and scale the additional component.

Our team concluded that the best approach was a distributed mechanism that grants one of the service instances mentioned above the unique, exclusive right to serve as the Scheduler in the system. Such a mechanism needs LOCK-like behaviour ensuring that no second instance concurrently serves as a scheduler. One obvious requirement was redundancy for the Scheduler component: if something happens (such as a crash or severe resource congestion) to the instance currently wearing the "hat" of the Scheduler, one of its healthy peers takes over the role and becomes the Scheduler.

At this stage we needed to choose a shared resource or lock service to act as the source of truth for deciding whether the lock is held and by whom. We understood that part of the solution would require the services to poll for lock availability, to check whether they can acquire the lock and proceed with the scheduler-related tasks. Since MongoDB was already part of the solution, and given its good performance benchmarks and its support for atomic operations at the document level, we decided to use it as the locking provider. Another option we considered was an MS SQL Server stored procedure with an UPDATE command providing the same kind of atomic locking, but since we did not need any relational-database-specific functionality, and given the lower performance we expected from a relational database compared to a NoSQL product, we stuck with MongoDB. Other NoSQL databases, such as Redis, could potentially fill this role as well, provided they offer strongly consistent writes.

One additional reason we chose MongoDB, which eventually proved useless for us, was its support for TTL indexes: MongoDB removes a document once the date value in the field covered by the TTL index expires. This could have been used to release the lock after a crash of the current Scheduler, so that other clients could acquire it. The problem is that MongoDB does not promise to remove the document the moment it expires: expired documents are deleted by a background task that runs periodically (every 60 seconds by default), so a document can outlive its expiry time. Any delay in releasing the lock was unacceptable for our system design, so we had to implement our own lock release mechanism.

Please note that competing for an exclusive lock on a shared resource is not the only way to reach system-wide consensus that ensures only one of a group of peers executes a certain action or holds an exclusive role. There are other well-known, more elaborate ways to reach consensus in a system; one example is the election-based method that a MongoDB replica set itself uses to select its primary node.

Since we had no requirement for specific service selection logic or preference criteria, we were satisfied with a simple solution in which whichever instance wins the race for the lock becomes the Scheduler, effectively at random.

Top-level look at the solution

The solution has the following functional characteristics:

- Each client that wants to acquire the lock must be uniquely identified so that, once acquired, the lock is marked as belonging to that specific client / process / service.

In the DistributedLockingTestClient project accompanying this article, the line `var uniqueId = Guid.NewGuid().ToString();` creates a unique identifier for each instance of the client application you run. All methods of the IExclusiveGlobalLock interface expect a `string clientIdentifier` parameter.

- Each lock is held for a limited time. A client that wants to hold it longer must periodically extend it before it expires. This mechanism resembles the sliding expiration approach used in many cache implementations.

For that purpose, the IExclusiveGlobalLock interface has a ProlongLock method that extends the lock duration. When it is called by the client currently holding the lock, before the lock expires, it updates the LockAcquireTime date field of the lock document, which extends the lock.

- Clients not holding the lock periodically poll for it. If the lock is not held by anyone or has expired, a client can request it and create a lock record in MongoDB with its client Id.

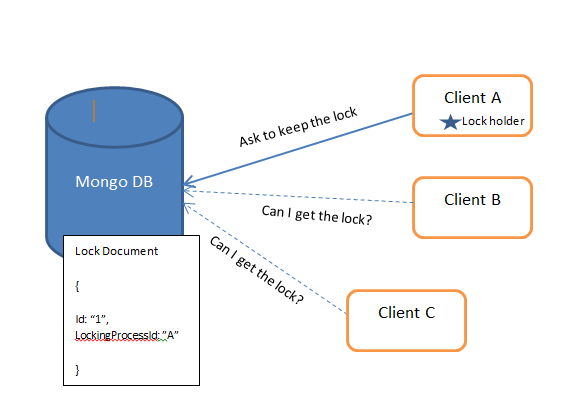

As the picture above shows, the MongoDB document that gets created contains the Id of the client currently holding the lock, stored in the LockingProcessId field, as well as the time the lock was acquired.

Under the hood, all clients asking for the lock try to insert a document with the SAME Id (an Id of "1" in the sample code). Because the Id of a document in a MongoDB collection has to be unique, no two concurrent inserts for the same lock can succeed. Only once the lock document has been removed can a new lock document be inserted.
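The duplicate-Id argument can be made concrete without a database. The following is a minimal in-memory sketch, not the article's code: `UniqueIdCollection` and `InsertRace` are hypothetical names, and `ConcurrentDictionary.TryAdd` stands in for an insert into a collection whose `_id` must be unique. Many clients race to insert a lock document with the same Id, and exactly one insert wins. The conditional `Remove` mirrors the idea that only the holder should delete its lock document; in MongoDB that could be a delete filtered on both the Id and `LockingProcessId`, which is an assumption rather than the sample's implementation.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

// Hypothetical in-memory stand-in for a MongoDB collection: like the _id field, the dictionary key
// must be unique, so TryAdd behaves like an insert that fails with a duplicate key error.
public sealed class UniqueIdCollection
{
    private readonly ConcurrentDictionary<string, string> _docs = new ConcurrentDictionary<string, string>();

    // True if the lock document was inserted; false if a document with this Id already exists.
    public bool TryInsert(string lockId, string lockingProcessId) => _docs.TryAdd(lockId, lockingProcessId);

    // Deletes the lock document only if the given client owns it (atomic key + value match).
    public bool Remove(string lockId, string lockingProcessId) =>
        ((ICollection<KeyValuePair<string, string>>)_docs).Remove(
            new KeyValuePair<string, string>(lockId, lockingProcessId));

    // Current owner of the lock document, or null if there is none.
    public string Owner(string lockId) => _docs.TryGetValue(lockId, out var owner) ? owner : null;
}

public static class InsertRace
{
    // Lets 'clients' callers concurrently insert a lock document with the same Id ("1", as in the sample)
    // and returns how many of the inserts succeeded.
    public static int Run(UniqueIdCollection collection, int clients)
    {
        var succeeded = new bool[clients];
        Parallel.For(0, clients, i => succeeded[i] = collection.TryInsert("1", "client-" + i));
        return succeeded.Count(ok => ok);
    }
}
```

However many clients race, `Run` reports a single winner; after the winner's document is removed, the next insert succeeds, which is the hand-over the paragraph describes.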

Detailed look at the code

The implementation is based on two main interfaces.

The first, IExclusiveGlobalLock, provides low-level access to the basic exclusive-lock functionality:

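```csharp
public interface IExclusiveGlobalLock
{
    // Tries to create the lock document for this client; true if the lock was acquired.
    bool TryGetLock(string clientIdentifier);

    // Extends a lock held by this client by refreshing its LockAcquireTime.
    void ProlongLock(string clientIdentifier);

    // Gives up a lock held by this client.
    void ReleaseLock(string clientIdentifier);

    DateTime? LastAquiredLockTime { get; }

    int LockDurationTimeInMilliSeconds { get; }
}
```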
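The contract of `IExclusiveGlobalLock`, together with the characteristics listed earlier (client identifiers, sliding expiration, takeover of an expired lock), can be illustrated with a single-process sketch. `InMemoryExclusiveGlobalLock` is a hypothetical class: two private fields replace the MongoDB lock document, and an injectable clock makes expiry testable. Two behaviors are assumptions, not documented behavior of the article's MongoDB implementation: `TryGetLock` returns false while any live lock exists (mirroring a duplicate-Id insert), and `ProlongLock` throws when the caller no longer holds the lock. Because it lives in one process, it cannot coordinate separate worker instances; that is the job of the shared MongoDB document.

```csharp
using System;

// The interface as given in the article.
public interface IExclusiveGlobalLock
{
    bool TryGetLock(string clientIdentifier);
    void ProlongLock(string clientIdentifier);
    void ReleaseLock(string clientIdentifier);
    DateTime? LastAquiredLockTime { get; }
    int LockDurationTimeInMilliSeconds { get; }
}

// Hypothetical single-process implementation, for illustrating the contract only.
// The private fields play the role of the MongoDB lock document (LockingProcessId, LockAcquireTime).
public sealed class InMemoryExclusiveGlobalLock : IExclusiveGlobalLock
{
    private readonly object _sync = new object();
    private readonly Func<DateTime> _utcNow;   // injectable clock so expiry can be tested
    private string _lockingProcessId;          // null means "no lock document"
    private DateTime _lockAcquireTime;

    public InMemoryExclusiveGlobalLock(int lockDurationInMilliseconds, Func<DateTime> utcNow = null)
    {
        LockDurationTimeInMilliSeconds = lockDurationInMilliseconds;
        _utcNow = utcNow ?? (() => DateTime.UtcNow);
    }

    public int LockDurationTimeInMilliSeconds { get; }

    // Time of the most recent successful acquire or prolong.
    public DateTime? LastAquiredLockTime { get; private set; }

    private bool IsExpired(DateTime now) =>
        _lockAcquireTime.AddMilliseconds(LockDurationTimeInMilliSeconds) <= now;

    public bool TryGetLock(string clientIdentifier)
    {
        lock (_sync)
        {
            var now = _utcNow();
            // A lock whose holder stopped prolonging it is treated like a removed document.
            if (_lockingProcessId != null && IsExpired(now))
                _lockingProcessId = null;
            // A live lock makes every "insert" fail, as a duplicate Id would in MongoDB.
            if (_lockingProcessId != null)
                return false;
            _lockingProcessId = clientIdentifier;
            _lockAcquireTime = now;
            LastAquiredLockTime = now;
            return true;
        }
    }

    public void ProlongLock(string clientIdentifier)
    {
        lock (_sync)
        {
            var now = _utcNow();
            // Assumption: prolonging a lock you no longer hold is reported as an exception.
            if (_lockingProcessId != clientIdentifier || IsExpired(now))
                throw new InvalidOperationException("Lock is not held by " + clientIdentifier + ".");
            _lockAcquireTime = now;        // sliding expiration: restart the lock duration
            LastAquiredLockTime = now;
        }
    }

    public void ReleaseLock(string clientIdentifier)
    {
        lock (_sync)
        {
            if (_lockingProcessId == clientIdentifier)   // only the holder may release
                _lockingProcessId = null;
        }
    }
}
```

With a 10-second lock, a holder that prolongs after 8 seconds is still protected 16 seconds after the original acquisition, while a holder that stops prolonging loses the lock to the next caller once 10 seconds have passed since its last prolongation.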

At the core of the solution lies the ExclusiveGlobalMongoBasedLock class, the MongoDB-specific implementation of the IExclusiveGlobalLock interface. It uses the MongoDB C# driver (mongocsharpdriver) to insert an ExclusiveLockStorageModel instance into MongoDB; the driver serializes it into the BSON document that represents the exclusive lock.

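```csharp
// Every client inserts a document with the same LockId ("1" in the sample), so while a lock
// document exists, the unique Id makes any further insert fail.
_collection.Insert(new ExclusiveLockStorageModel()
{
    LockId = _lockId,
    LockAcquireTime = _lastAquiredLockTime.Value,
    LockingProcessId = clientIdentifier
});
```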

If no error is returned, the code assumes the lock was successfully acquired and switches to maintaining the held lock.

Given that most developers will want an asynchronous, task- or thread-based mechanism for polling and extending the lock, I also created the IExclusiveGlobalLockEngine interface, which defines an application-facing abstraction layer that hides the process of lock acquisition.

The interface is implemented by the ExclusiveGlobalMongoBasedLockEngine class, which the console application serving as the client in the sample code creates directly.

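```csharp
public interface IExclusiveGlobalLockEngine
{
    // Starts polling for the lock; onLockAcquired fires when this client gets it,
    // onLockLost receives a reason when it is lost.
    void StartCheckingLock(string clientIdentifier, Action onLockAcquired, Action<string> onLockLost);

    // Stops polling and releases the lock if this client holds it.
    void StopCheckingOrReleaseLock(string clientIdentifier);
}
```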

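A polling engine of this kind could be built on a `System.Threading.Timer`. The sketch below is hypothetical: `PollingLockEngine` is not the article's `ExclusiveGlobalMongoBasedLockEngine` (its constructor takes an `IExclusiveGlobalLock` instead of connection settings), and it assumes that a failed `ProlongLock` is reported as an exception, which the engine turns into the `onLockLost` callback. Each tick either prolongs a held lock or tries to acquire a free one. The article's interface is repeated so the block compiles on its own, and `ScriptedLock` is only a test double that lets the caller decide who holds the lock.

```csharp
using System;
using System.Threading;

public interface IExclusiveGlobalLock   // as given in the article
{
    bool TryGetLock(string clientIdentifier);
    void ProlongLock(string clientIdentifier);
    void ReleaseLock(string clientIdentifier);
    DateTime? LastAquiredLockTime { get; }
    int LockDurationTimeInMilliSeconds { get; }
}

// Hypothetical engine: on every tick it prolongs a held lock or tries to acquire a free one.
public sealed class PollingLockEngine
{
    private readonly object _sync = new object();
    private readonly IExclusiveGlobalLock _lock;
    private readonly TimeSpan _checkFrequency;
    private Timer _timer;
    private string _clientId;
    private Action _onLockAcquired;
    private Action<string> _onLockLost;
    private bool _stopped;

    public PollingLockEngine(IExclusiveGlobalLock exclusiveLock, TimeSpan checkFrequency) { _lock = exclusiveLock; _checkFrequency = checkFrequency; }
    public bool IsHoldingLock { get; private set; }

    public void StartCheckingLock(string clientIdentifier, Action onLockAcquired, Action<string> onLockLost)
    {
        lock (_sync)
        {
            _clientId = clientIdentifier; _onLockAcquired = onLockAcquired;
            _onLockLost = onLockLost; _stopped = false;
        }
        // First check right away, then once per checkFrequency on a thread-pool thread.
        _timer = new Timer(_ => Tick(), null, TimeSpan.Zero, _checkFrequency);
    }

    // One polling step; public so it can also be driven manually (e.g. in a test).
    public void Tick()
    {
        lock (_sync)
        {
            if (_stopped) return;
            if (IsHoldingLock)
            {
                try { _lock.ProlongLock(_clientId); }        // sliding expiration
                catch (Exception ex)
                {
                    IsHoldingLock = false;                   // someone else may own it now
                    _onLockLost?.Invoke(ex.Message);
                }
                return;
            }
            bool acquired;
            try { acquired = _lock.TryGetLock(_clientId); }
            catch (Exception) { acquired = false; }          // e.g. database unreachable: retry next tick
            if (acquired)
            {
                IsHoldingLock = true;
                _onLockAcquired?.Invoke();
            }
        }
    }

    public void StopCheckingOrReleaseLock(string clientIdentifier)
    {
        _timer?.Dispose();       // a Tick already queued becomes a no-op once _stopped is set
        lock (_sync)
        {
            _stopped = true;
            if (IsHoldingLock)
            {
                _lock.ReleaseLock(clientIdentifier);
                IsHoldingLock = false;
            }
        }
    }
}

// Test double: whoever is stored in Holder owns the lock.
public sealed class ScriptedLock : IExclusiveGlobalLock
{
    public string Holder { get; set; }
    public DateTime? LastAquiredLockTime => null;
    public int LockDurationTimeInMilliSeconds => 10000;
    public bool TryGetLock(string clientIdentifier)
    {
        if (Holder != null) return false;
        Holder = clientIdentifier;
        return true;
    }
    public void ProlongLock(string clientIdentifier)
    {
        if (Holder != clientIdentifier) throw new InvalidOperationException("Lock is held by " + Holder + ".");
    }
    public void ReleaseLock(string clientIdentifier) { if (Holder == clientIdentifier) Holder = null; }
}
```

Ticks are serialized by a monitor, so a manual `Tick` and a timer tick never interleave, and the `_stopped` flag keeps a timer callback that was already queued from re-acquiring the lock after `StopCheckingOrReleaseLock` has run.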

When you use ExclusiveGlobalMongoBasedLockEngine to get the locking functionality, your client code becomes quite minimal. The main parts are a number of configuration parameters (explained in the comments of the attached code), a unique identifier for the client instance, and two callbacks: one that reacts to the client acquiring the lock and another that is invoked when the lock is lost.

```csharp
IExclusiveGlobalLockEngine lockEngine = new ExclusiveGlobalMongoBasedLockEngine(
    mongoDbConnectionString, "TestLockDb", "Lock",
    lockDurationInMills, lockCheckFrequency);

lockEngine.StartCheckingLock(uniqueId, () =>
{
    Console.WriteLine("Lock Acquired");
    // ...
}, (reason) =>
{
    // ...
});
```
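The two timing parameters interact. Assuming the holder prolongs the lock once per check (an assumption about the engine, not something the sample documents), the check interval must be shorter than the lock duration, and a crashed holder can block the other instances for at most one lock duration plus one check interval. `LockTiming` is a hypothetical helper that encodes these two rules with `TimeSpan` values; the sample passes `lockDurationInMills` and `lockCheckFrequency` instead.

```csharp
using System;

// Hypothetical helper, not part of the sample: sanity checks for the two timing parameters.
public static class LockTiming
{
    // Assumes the holder prolongs once per check: the check interval must then be shorter than the
    // lock duration, otherwise the lock can expire between two prolongations.
    public static void Validate(TimeSpan lockDuration, TimeSpan checkFrequency)
    {
        if (checkFrequency <= TimeSpan.Zero)
            throw new ArgumentOutOfRangeException(nameof(checkFrequency), "The check interval must be positive.");
        if (checkFrequency >= lockDuration)
            throw new ArgumentException("The check interval must be shorter than the lock duration.", nameof(checkFrequency));
    }

    // Sliding expiration: a lock acquired or last prolonged at lockAcquireTime is valid until this moment.
    public static DateTime ExpiresAt(DateTime lockAcquireTime, TimeSpan lockDuration) => lockAcquireTime + lockDuration;

    // Longest time a crashed holder can block the others: its last prolongation stays valid for
    // lockDuration, and a waiting client may poll up to one check interval after the expiry.
    public static TimeSpan WorstCaseTakeover(TimeSpan lockDuration, TimeSpan checkFrequency) => lockDuration + checkFrequency;
}
```

For example, a 10-second lock checked every 2 seconds passes `Validate`, and another instance takes over within 12 seconds of the holder's crash.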

Using the code

To run the code, open the DistributedLocking solution in Microsoft Visual Studio 2012 or later. Then right-click the solution and select "Enable NuGet Package Restore". That way mongocsharpdriver, the only NuGet package used in the solution, will be downloaded and added to the project.

The sample application included in this article requires a running MongoDB instance on the local machine, listening on the default port 27017.

If your MongoDB instance runs elsewhere, modify the MongoDB connection string in the Program.cs file of the DistributedLockingTestClient project.

The DistributedLockingTestClient project, which contains the code of the client requesting the lock, includes a RunMe.bat file. I recommend running that file from the project's bin folder once the project compiles successfully. The batch file starts two instances of the client console application; each is automatically given a unique Id, and it is easy to see that one client acquires the exclusive lock while the other keeps trying to get it.

This is what you should see after running the batch file: