Some teaser screenshots

Source Code

Besides the link in the article, if you want to get the latest updates or contribute to the project, the source code is hosted on GitHub: https://github.com/cliftonm/neurosim

Introduction

In this article I present a neuron simulator and study tool. Typically, neuron simulation is highly idealized, consisting of an input layer, one or more hidden layers, and an output layer. Signals propagate through the network based on weighting and summation thresholds. This diagram is a basic example:

(from http://www.scielo.br/scielo.php?pid=S0100-06832013000100010&script=sci_arttext)

Conversely, other neuron simulators will be very low level, simulating the sodium, potassium, and calcium channels. You can read more about that, but here's a start: http://www.ncbi.nlm.nih.gov/books/NBK26910/

What this article presents is a middle ground, where the electrical characteristics of the input stimulus (either excitatory or inhibitory) affect the action potential. Neuron characteristics such as the refractory period (the time it takes to recover from an action potential) are also simulated. An approximate plot of a typical action potential looks like this:

(from https://en.wikipedia.org/wiki/Action_potential)

In the software presented here, a typical plot looks like this (it's not nearly as nicely curvy):

Idealized Action Potential in Neuro-Sim of a "pacemaker" Neuron

Neuro-Sim lets you play around with specific neural configurations:

Simulating a reflex action

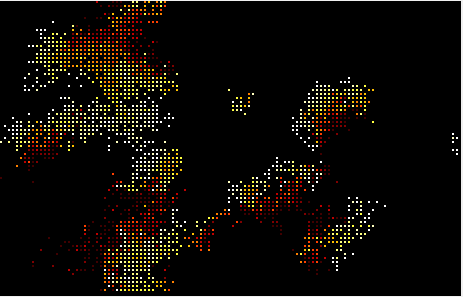

as well as playing around with full networks, which can be quite mesmerizing to watch:

Snapshot of a neural network in action

The reason for the excellent Yogi Berra quote at the beginning of this article is that, while there is much we know about neurons, there are also a lot of theories out there (and quite frankly, unknowns) as to how a cluster of neurons results in consciousness, the ability to think, and so forth. Also, keep in mind that most studies of neurons are invasive, requiring probes to measure membrane potential and/or electrically stimulate neurons. The practice of actually implementing a neural network capable of, well, thinking, is still, as far as I'm concerned, in the realm of theory.

History

I originally wrote a very similar simulator (in C++) back in the days of the 80286 processor (30 years ago, oh wow), running at 8 MHz, and it was a significant improvement to add in an 80287 math co-processor! At that time, I was only able to simulate a 20x20 grid of neurons, and at that, it chunked along. The simulator presented here easily handles over 8000 neurons, and that's running on a single thread, including UI updates, on my 2.3 GHz i7 laptop. So, this has been one of those back-burner projects I've been wanting to resurrect for years and I'm happy to have finally gotten around to it. What surprised me the most in implementing this on today's computers is that I had expected to have to implement the neuron "sum and fire" computations in threads, batching them across the available CPUs. This was not the case! All the neuron calculations run in the application's main thread. For larger networks I would expect to need to balance the workload across multiple CPUs, but I haven't tested that implementation yet.

What is not Simulated

A variety of qualities of a neuron are not simulated. If the terms used here are unfamiliar, read the next section "Neurons: A Crash Course". Most notably, Neuro-Sim does not account for:

- Propagation time along the axon.
- Myelinated vs. un-myelinated axons (affects propagation time).
- Location of receptor on the dendrite or soma (affects how the localized membrane potential change affects the axon hillock).
- Relative refractory period (currently, the entire refractory period is absolute -- the neuron cannot fire again).
- Pre-synaptic facilitation or inhibition -- rather than connecting directly to the dendrite or cell body, some synapses will connect to the pre-synaptic node of the pre-synaptic neuron, facilitating or inhibiting a specific synapse.
- There is no specific time basis -- the program implements a measureless "tick" in which all summations occur, followed by any action potential "firing." The recovery rates are based on changes per "tick" with no regard to what unit of time a "tick" actually is.

Given these limitations, very interesting networks and studies can still be created.

Learning

This simulator does not implement any "learning" algorithms. The concept of neural network learning, from a biological perspective, is very complicated and poorly understood, and includes such concepts as:

- synaptogenesis - the formation of synapses between neurons. This occurs through life but is most predominant in early brain development and also involves "synaptic pruning" -- eliminating synapses, which normally occurs between birth and puberty.
- neurogenesis - the creation of neurons from neural stem cells or progenitor cells. Again, this is most active in pre-natal development but there's some evidence that it occurs in adults as part of learning and memory.
- Hebbian theory2 (neuro-plasticity) - the effectiveness of a synapse can be increased or decreased depending on the temporal correlation of the action potential between the pre-synaptic and post-synaptic neuron. Meaning, if the pre-synaptic neuron fires and the post-synaptic also fires within a specific time span, then the synapse is strengthened. If the pre-synaptic neuron fires but the post-synaptic neuron does not (or the post-synaptic neuron fires while a pre-synaptic neuron doesn't) then the synapse strength is decreased.
- changes in myelination -- some theories / studies indicate that learning also involves improving a neuron's efficacy through myelination, even in adults (sorry, no reference.)

There are other theories and studies as well, but these are the predominant ones. None of them adequately explain what "learning" is, and how the associated role of "memory" works, but they are interesting first-order concepts to play with. Perhaps a future implementation will address these ideas.
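Although Neuro-Sim does not implement any of these, the Hebbian idea described above is simple enough to sketch. The following is purely a hypothetical illustration: the class name, the `window` and `delta` parameters, the use of tick numbers as spike times, and the choice to leave the strength unchanged when neither neuron fires are all assumptions, not code from the simulator. It also assumes an excitatory synapse whose strength is a positive number.

```csharp
using System;

// Hypothetical illustration only -- Neuro-Sim does not implement learning.
public static class HebbianSketch
{
    // Returns the adjusted synapse strength.
    // preTick / postTick: the tick on which each neuron fired, or null if it did not fire.
    // window: how close together (in ticks) the two firings must be to count as correlated.
    // delta: how much to strengthen or weaken the synapse.
    public static int Adjust(int strength, int? preTick, int? postTick, int window, int delta)
    {
        bool preFired = preTick.HasValue;
        bool postFired = postTick.HasValue;

        if (preFired && postFired && Math.Abs(postTick.Value - preTick.Value) <= window)
        {
            return strength + delta;   // correlated firing: strengthen the synapse
        }

        if (preFired != postFired)
        {
            return strength - delta;   // only one of the two fired: weaken the synapse
        }

        // Neither fired, or both fired too far apart: left unchanged (an assumption).
        return strength;
    }
}
```

For example, `HebbianSketch.Adjust(40, 10, 12, 5, 2)` returns 42 because both neurons fired within 5 ticks of each other, while `HebbianSketch.Adjust(40, 10, null, 5, 2)` returns 38.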

Neurons: A Crash Course

There are a few terms that one needs to know with regard to neurons. This section is a crash course in neurons, and is by no means complete. I'm going to reference Wikipedia heavily for the various definitions here (yes, I've made a donation to them). The basis for Neuro-Sim, however, comes from the book Principles of Neural Science, now in its fifth edition (the book I'm using is the second edition!). This book is so seminal it has its own webpage.

Neuron

"A neuron (/ˈnjʊərɒn/ nyewr-on or /ˈnʊərɒn/ newr-on; also known as a neurone or nerve cell) is an electrically excitable cell that processes and transmits information through electrical and chemical signals. These signals between neurons occur via synapses, specialized connections with other cells. Neurons can connect to each other to form neural networks. Neurons are the core components of the brain and spinal cord of the central nervous system (CNS), and of the ganglia of the peripheral nervous system (PNS). Specialized types of neurons include: sensory neurons which respond to touch, sound, light and all other stimuli affecting the cells of the sensory organs that then send signals to the spinal cord and brain, motor neurons that receive signals from the brain and spinal cord to cause muscle contractions and affect glandular outputs, and interneurons which connect neurons to other neurons within the same region of the brain, or spinal cord in neural networks.

A typical neuron consists of a cell body (soma), dendrites, and an axon."1

It's not entirely accurate to say a neuron is electrically excitable because the actual communication between neurons is usually chemical -- neurotransmitters are released in the pre-synaptic cleft of an excited neuron and are received at the post-synaptic cleft of a receiving neuron, which affects the local membrane potential of the receiving neuron. There are also many kinds of neurons, each with different behaviors, including neurons that do connect electrically rather than chemically.

Dendrite

"Dendrites (from Greek δένδρον déndron, "tree") (also dendron) are the branched projections of a neuron that act to propagate the electrochemical stimulation received from other neural cells to the cell body, or soma, of the neuron from which the dendrites project. Electrical stimulation is transmitted onto dendrites by upstream neurons (usually their axons) via synapses which are located at various points throughout the dendritic tree. Dendrites play a critical role in integrating these synaptic inputs and in determining the extent to which action potentials are produced by the neuron."3

A synaptic connection at a dendrite usually results in a local membrane potential change, either excitatory or inhibitory. This change propagates to the axon hillock (see below) for summation. Depending on the distance, the actual potential change at the axon hillock will be less than that at the location of the synapse. This effect is not simulated in Neuro-Sim.

Axon

"An axon (from Greek ἄξων áxōn, axis), also known as a nerve fibre, is a long, slender projection of a nerve cell, or neuron, that typically conducts electrical impulses away from the neuron's cell body. The function of the axon is to transmit information to different neurons, muscles and glands...Axons make contact with other cells—usually other neurons but sometimes muscle or gland cells—at junctions called synapses. At a synapse, the membrane of the axon closely adjoins the membrane of the target cell, and special molecular structures serve to transmit electrical or electrochemical signals across the gap...A single axon, with all its branches taken together, can innervate multiple parts of the brain and generate thousands of synaptic terminals."4

Some of the more bizarre behaviors of neurons, such as neurons that have no axon and transmit signals from their dendrites, or axons ending in synapses on other axons, are two of many exceptions that are not simulated in Neuro-Sim.

Axon Hillock

"The axon hillock is the last site in the soma where membrane potentials propagated from synaptic inputs are summated before being transmitted to the axon...Both inhibitory postsynaptic potentials (IPSPs) and excitatory postsynaptic potentials (EPSPs) are summed in the axon hillock and once a triggering threshold is exceeded, an action potential propagates through the rest of the axon (and "backwards" towards the dendrites as seen in neural backpropagation). The triggering is due to positive feedback between highly crowded voltage-gated sodium channels, which are present at the critical density at the axon hillock (and nodes of ranvier) but not in the soma.

In its resting state, a neuron is polarized, with its inside at about -70 mV relative to its surroundings. When an excitatory neurotransmitter is released by the presynaptic neuron and binds to the postsynaptic dendritic spines, ligand-gated ion channels open, allowing sodium ions to enter the cell. This may make the postsynaptic membrane depolarized (less negative). This depolarization will travel towards the axon hillock, diminishing exponentially with time and distance. If several such events occur in a short time, the axon hillock may become sufficiently depolarized for the voltage-gated sodium channels to open. This initiates an action potential that then propagates down the axon."5

In other words, while of course more complicated, the axon hillock is where localized changes in membrane potential (usually from synapses along the dendrite but also on the cell body itself) are summed. If the membrane potential at the axon hillock (or at the first unmyelinated segment of the axon) exceeds a specific threshold, an action potential occurs -- the neuron fires.
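The summation described above can be sketched in a few lines. This is a simplified illustration rather than Neuro-Sim's actual integration code: the class and method names are hypothetical, potentials are plain millivolt integers, and the threshold test uses "meets or exceeds".

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical illustration of summation at the axon hillock.
public static class HillockSketch
{
    // Adds excitatory (positive) and inhibitory (negative) potential changes, in mV,
    // to the current membrane potential and reports whether the threshold was reached.
    public static bool SumAndTest(int membranePotential, IEnumerable<int> inputs, int threshold, out int summed)
    {
        summed = membranePotential + inputs.Sum();

        return summed >= threshold;
    }
}
```

With a resting potential of -65 mV and a threshold of -35 mV, inputs of 20, 20, and -5 mV sum to -30 mV and the neuron fires; a single 20 mV input only reaches -45 mV and it does not.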

Synapse

"In the nervous system, a synapse[1] is a structure that permits a neuron (or nerve cell) to pass an electrical or chemical signal to another neuron...Synapses are essential to neuronal function: neurons are cells that are specialized to pass signals to individual target cells, and synapses are the means by which they do so. At a synapse, the plasma membrane of the signal-passing neuron (the presynaptic neuron) comes into close apposition with the membrane of the target (postsynaptic) cell. Both the presynaptic and postsynaptic sites contain extensive arrays of molecular machinery that link the two membranes together and carry out the signaling process. In many synapses, the presynaptic part is located on an axon, but some postsynaptic sites are located on a dendrite or soma."6

The modeling of a synapse is actually quite complex. The spatial location of a synapse is important, as the depolarization that occurs locally on the post-synaptic neuron diminishes over time and distance as it travels toward the axon hillock (this is not modeled by Neuro-Sim). In addition, depending on the neurotransmitter and the type of synapse, the neurotransmitter might not be "cleaned up" right away, allowing for continuous depolarization (also not modeled by Neuro-Sim). As mentioned earlier, pre-synaptic facilitation and inhibition, affecting the pre-synaptic cleft rather than the post-synaptic neuron, is also possible (again not modeled by Neuro-Sim). These are just a few of the interesting characteristics of synapses.

Refractory Period

"The refractory periods are due to the inactivation property of voltage-gated sodium channels and the lag of potassium channels in closing. Voltage-gated sodium channels have two gating mechanisms, the activation mechanism that opens the channel with depolarization and the inactivation mechanism that closes the channel with repolarization. While the channel is in the inactive state, it will not open in response to depolarization. The period when the majority of sodium channels remain in the inactive state is the absolute refractory period. After this period, there are enough voltage-activated sodium channels in the closed (active) state to respond to depolarization. However, voltage-gated potassium channels that opened in response to repolarization do not close as quickly as voltage-gated sodium channels; to return to the active closed state. During this time, the extra potassium conductance means that the membrane is at a higher threshold and will require a greater stimulus to cause action potentials to fire. This period is the relative refractory period."7

The salient point here is that there is an absolute refractory period in which the neuron cannot fire again (usually about 1 ms) and a relative refractory period in which the action potential threshold is higher. The latter is not modeled by Neuro-Sim.

Resting Potential

"The relatively static membrane potential of quiescent cells is called the resting membrane potential (or resting voltage), as opposed to the specific dynamic electrochemical phenomena called action potential and graded membrane potential."8

The resting potential is configurable for specific neuron "studies" and is group assigned in "network" mode.

Threshold Potential

"The threshold potential is the critical level to which the membrane potential must be depolarized in order to initiate an action potential."9

Under certain conditions, the depolarization of a neuron can occur slowly enough that a higher than normal threshold is required to initiate an action potential. In fact, if the depolarization occurs slowly enough, no level of depolarization will achieve an action potential. This behavior is not modeled in Neuro-Sim. However, the threshold potential is configurable for specific neuron "studies" and is group assigned in "network" mode.

Action Potential

"In physiology, an action potential is a short-lasting event in which the electrical membrane potential of a cell rapidly rises and falls, following a consistent trajectory. Action potentials occur in several types of animal cells, called excitable cells, which include neurons, muscle cells, and endocrine cells, as well as in some plant cells. In neurons, they play a central role in cell-to-cell communication. In other types of cells, their main function is to activate intracellular processes. In muscle cells, for example, an action potential is the first step in the chain of events leading to contraction. In beta cells of the pancreas, they provoke release of insulin.[a] Action potentials in neurons are also known as "nerve impulses" or "spikes", and the temporal sequence of action potentials generated by a neuron is called its "spike train". A neuron that emits an action potential is often said to "fire".10

The action potential value is configurable for specific neuron "studies" and is group assigned in "network" mode.

Pacemaker Neuron

A pacemaker neuron is a neuron that spontaneously fires at regular intervals. Neuro-Sim uses pacemaker neurons in the network simulations to kick-start (and maintain) network activity. The number of pacemaker neurons is configurable in "network" mode.

An interesting simple "study" network consists of two pacemaker neurons, where one neuron either inhibits or stimulates the other. Later on we will see a couple of examples of this, achieving simple frequency division and frequency increases in the target pacemaker neuron.

Myelination

"Myelin is a fatty white substance that surrounds the axon dielectric (electrically insulating) material that forms a layer, the myelin sheath, usually around only the axon of a neuron. It is essential for the proper functioning of the nervous system. It is an outgrowth of a type of glial cell.

The production of the myelin sheath is called myelination. In humans, myelination begins in the 14th week of fetal development, although little myelin exists in the brain at the time of birth. During infancy, myelination occurs quickly, leading to a child's fast development, including crawling and walking in the first year. Myelination continues through the adolescent stage of life."11

Besides being a word I can never spell correctly, myelination is what allows an electrical signal to travel quickly and without degradation down the axon to the pre-synaptic terminals. Without myelination, our nervous system simply would not work.

Additional Terms used in Neuro-Sim

Refractory Recovery Rate

This is a configurable option for how quickly a neuron returns to its resting potential from the hyperpolarization that occurs after an action potential or an inhibitory input.

Hyperpolarization Overshoot

This is the level that the membrane potential is set to after an action potential.

Resting Potential Return Rate

This is the rate at which a neuron returns from a depolarization event that did not result in an action potential, back to its resting potential.

Simulation Modes

There are two simulation modes implemented in Neuro-Sim: network and study.

Network Mode

Network Mode, showing an Action Potential Decay Plot

In network mode, all the neurons are configured the same; however, you can control:

- the number of connections
- the maximum distance (or length of axon) between connections
- the connection "radius" -- how dispersed the connections are from the axon endpoint
- the number of pacemaker neurons -- the neurons that fire at regular intervals to kickstart the simulation.

There are also two ways of viewing the plot:

- Action Potential Decay Plot -- this is illustrated above. When a neuron fires, the plotter will show the neuron as white and over 10 steps decay the neuron's plot through yellow, red, and finally black.
- Membrane Potential Plot -- as illustrated below, this plot draws a neuron in:
  - white when it fires
  - red during its refractory period
  - shades of green (fairly hard to see actually) representing the neuron's membrane potential
  - black for neurons at their resting potential.

Network Mode, showing a Membrane Potential Plot

Study Mode

Study Mode: A Reflex Simulation

In study mode, the user can create individual neurons with specific characteristics and connections. Each neuron has a "probe" that shows its activity on the scope. The above screenshot illustrates one of the demos, a "reflex" simulation. The configuration values and usage are described later.

The UI

Click here for a full-size screenshot of Neuro-Sim on startup.

Let's first deal with the UI. There are two displays: on the left we have either the neural network or the study network (shown below) and on the right we have an oscilloscope ("scope") display of neurons in the study network. Below, there are three tabs:

- Neuron - the default neuron configuration
- Study - for studying small networks whose state is displayed on the scope
- Network - for configuring neuron connectivity and simulating large neural networks

Neuron Configuration Options

Configuring the Default Neuron

The six values that can be configured for a neuron are:

- Resting Potential -- by default, the resting potential is -65 mV (millivolts). This represents the quiescent state of the neuron.
- AP Threshold -- this is the action potential threshold -- if the membrane potential at the summation site, the axon hillock, meets or exceeds this value, an action potential occurs (the neuron "fires").
- AP Value -- this is the action potential value, by default 40 mV.
- Ref. Rec. Rate -- this is the refractory recovery rate, the rate of return to the resting potential after an action potential -- the change in membrane potential per "tick."
- HP Overshoot -- this is the value subtracted from the resting potential to represent the hyperpolarization after an action potential. The default, 20 mV, means that the hyperpolarization value after an action potential will be -65 - 20, or -85 mV.
- RP Return Rate -- this value represents the return to the resting potential after depolarization -- the change in membrane potential per "tick." When the neuron is depolarized without achieving the action potential threshold, this value is subtracted from the depolarized membrane potential per "tick", eventually returning the neuron to its resting potential.

These values can be changed in realtime for the "network mode" of the simulation.
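The default values fit together with a little arithmetic. The sketch below is only an illustration: the class and method names are hypothetical, and the recovery rate of 1 mV per "tick" is taken from the default configuration shown in the "Neuron Math" section. It computes the hyperpolarized potential after an action potential and how many ticks the neuron then needs to climb back to its resting potential.

```csharp
// Hypothetical helper for reasoning about the default neuron configuration.
public static class DefaultsSketch
{
    // The membrane potential (mV) immediately after an action potential.
    public static int HyperpolarizedPotential(int restingPotential, int overshoot)
    {
        return restingPotential - overshoot;
    }

    // The number of ticks needed to recover the overshoot at the given
    // recovery rate (mV per tick), rounded up. Assumes both values are positive.
    public static int TicksToRecover(int overshoot, int recoveryRate)
    {
        return (overshoot + recoveryRate - 1) / recoveryRate;
    }
}
```

With the defaults, `HyperpolarizedPotential(-65, 20)` is -85 mV and `TicksToRecover(20, 1)` is 20 ticks, during which the neuron cannot fire again.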

Network Mode Configuration Options

Network Mode Configuration Options

In network mode, the following can be configured:

- the number of connections
- the maximum distance (or length of axon) between connections
- the connection "radius" -- how dispersed the connections are from the axon endpoint
- the number of pacemaker neurons -- the neurons that fire at regular intervals to kickstart the simulation.
- the plot type -- action potential decay plot or membrane potential plot (as described earlier.)

Study Mode

Study Mode Neuron Configuration

In study mode, individual neurons can be added to and removed from the network. Each neuron can also be given its own configuration. The values that can be configured for each neuron are:

- RP: Resting Potential -- by default, the resting potential is -65 mV (millivolts). This represents the quiescent state of the neuron.
- APT: AP Threshold -- this is the action potential threshold -- if the membrane potential at the summation site, the axon hillock, meets or exceeds this value, an action potential occurs (the neuron "fires").
- APV: AP Value -- this is the action potential value, by default 40 mV.
- RRR: Ref. Rec. Rate -- this is the refractory recovery rate, the rate of return to the resting potential after an action potential -- the change in membrane potential per "tick."
- HPO: HP Overshoot -- this is the value subtracted from the resting potential to represent the hyperpolarization after an action potential. The default, 20 mV, means that the hyperpolarization value after an action potential will be -65 - 20, or -85 mV.
- RPRR: RP Return Rate -- this value represents the return to the resting potential after depolarization -- the change in membrane potential per "tick." When the neuron is depolarized without achieving the action potential threshold, this value is subtracted from the depolarized membrane potential per "tick", eventually returning the neuron to its resting potential.
- LKG: Leakage -- this represents a depolarization rate (per "tick") and is used to create a pacemaker neuron.
- PCOLOR: the scope plot color -- this value is auto-assigned.
- Conn: the neuron's connections.
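To see how a leakage value turns an ordinary neuron into a pacemaker, here is a minimal sketch. It is not the simulator's code: the class and method names are hypothetical, potentials are plain millivolt integers, and it assumes that leakage is simply added to the membrane potential on every integrating tick and that the RP return rate can be ignored.

```csharp
using System.Collections.Generic;

// Hypothetical illustration of a leakage-driven pacemaker neuron.
public static class PacemakerSketch
{
    // Returns the tick numbers (starting at 0) on which the neuron fires.
    public static List<int> FiringTicks(int ticks, int resting, int threshold,
                                        int overshoot, int recoveryRate, int leakage)
    {
        var fired = new List<int>();
        int potential = resting;
        bool refractory = false;

        for (int tick = 0; tick < ticks; tick++)
        {
            if (refractory)
            {
                // Recover from the hyperpolarization overshoot.
                potential += recoveryRate;

                if (potential >= resting)
                {
                    potential = resting;
                    refractory = false;
                }
            }
            else
            {
                // Leakage steadily depolarizes the neuron until it reaches threshold.
                potential += leakage;

                if (potential >= threshold)
                {
                    fired.Add(tick);
                    potential = resting - overshoot;
                    refractory = true;
                }
            }
        }

        return fired;
    }
}
```

Calling `FiringTicks(30, -65, -35, 20, 5, 10)` yields firings on ticks 2, 9, 16, and 23: three ticks of leakage to reach threshold followed by four ticks of recovery, giving a regular period of seven ticks.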

Connections

The connections field is a comma-delimited list in which each entry is the number of the "connect to" neuron followed by the post-synaptic membrane potential change in parentheses. For example:

3(40),4(40),6(40)

indicates that the pre-synaptic neuron connects to neurons 3, 4, and 6 (the neuron number "n" in the screenshot above) and results in a post-synaptic depolarization of 40mv in each case. Excitatory synapses are represented by positive values and inhibitory synapses are represented by negative values. Here, for example, is an inhibitory synapse:

5(-40)
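A parser for this format is straightforward. The following is a minimal sketch of one way to do it, not the parser Neuro-Sim actually uses; the class and method names are hypothetical, and each connection is returned as a pair of target neuron number and potential change in mV.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;

// Hypothetical parser for the "n(mv),n(mv),..." connection format.
public static class ConnectionParserSketch
{
    // Parses e.g. "3(40),5(-40)" into (target neuron number, potential change) pairs.
    public static List<KeyValuePair<int, int>> Parse(string text)
    {
        var result = new List<KeyValuePair<int, int>>();

        if (string.IsNullOrWhiteSpace(text))
        {
            return result;   // no connections
        }

        foreach (string part in text.Split(','))
        {
            string item = part.Trim();
            int open = item.IndexOf('(');

            if (open < 1 || !item.EndsWith(")"))
            {
                throw new FormatException("Expected n(mv) but found: " + item);
            }

            // The text before '(' is the target; the text between the parentheses is the change.
            int target = int.Parse(item.Substring(0, open), CultureInfo.InvariantCulture);
            int change = int.Parse(item.Substring(open + 1, item.Length - open - 2), CultureInfo.InvariantCulture);

            result.Add(new KeyValuePair<int, int>(target, change));
        }

        return result;
    }
}
```

Parsing `"3(40),4(40),5(-40)"` produces three pairs; the last has a key of 5 and a value of -40, which marks it as an inhibitory synapse.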

The Network

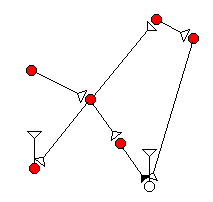

Study Mode Network Plot

In study mode, the network is plotted using an idealized representation of a neuron:

- The cell body is represented by a circle.
- Excitatory synapses are represented as white triangles.
- Inhibitory synapses are represented as black triangles.

Also, when a neuron fires while the simulation is running, its "cell body" (the circle) turns red and goes through the decay as in an action potential decay plot.

Adjusting the Study Mode Plot

After Moving Neurons in the Study Plot

The user can click and drag a neuron "body" (the circle) to create the desired visual plot. The above screenshot shows the same network but with the neurons in different positions. Also, when clicking on a neuron's "body", the corresponding entry in the neuron configuration grid will be selected, which makes it easier to figure out which neuron is what. (Granted, this UI could be improved a lot!)

Pause / Resume / Step

You start a simulation by clicking on "Resume". You can also pause the simulation by clicking on Pause. When paused, the Step button is enabled. Step will run the simulator until a neuron fires.

Study Mode Examples

I've put together several examples to play with.

Pacemaker Neuron

A Pacemaker Neuron Example

This is a very simple example illustrating the activity of a pacemaker neuron. A pacemaker neuron is useful for seeding a network or simulating external stimulus (as is used in the reflex example).

Frequency Divider Example

A Divider Neuron Example

In this example, a simple frequency divider neural "circuit" illustrates how a recurring action potential will result in the post-synaptic neuron summing the action potentials in a graded manner and eventually firing when the post-synaptic neuron's action potential threshold is met.
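The graded summation behind the divider can be sketched as follows. This is a deliberately simplified model, not the simulator's code: the names are hypothetical, and it ignores the RP return rate, the hyperpolarization overshoot, and the refractory period, simply resetting the neuron to its resting potential after it fires.

```csharp
using System.Collections.Generic;

// Hypothetical illustration of frequency division by graded summation.
public static class DividerSketch
{
    // Returns the (1-based) input spike numbers on which the post-synaptic neuron fires.
    // epsp is the depolarization (mV) contributed by each pre-synaptic action potential.
    public static List<int> OutputSpikes(int inputSpikes, int epsp, int resting, int threshold)
    {
        var outputs = new List<int>();
        int potential = resting;

        for (int i = 1; i <= inputSpikes; i++)
        {
            potential += epsp;            // each input adds a graded depolarization

            if (potential >= threshold)
            {
                outputs.Add(i);           // threshold met: the post-synaptic neuron fires
                potential = resting;      // simplified reset (an assumption)
            }
        }

        return outputs;
    }
}
```

With a 10 mV depolarization per input, a resting potential of -65 mV, and a threshold of -35 mV, `OutputSpikes(9, 10, -65, -35)` returns 3, 6, and 9: the post-synaptic neuron fires on every third input, dividing the frequency by three.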

Frequency Decreaser Example

Decreasing Frequency Example

In this example, a baseline pacemaker neuron (the red trace) is used to illustrate how one pacemaker neuron (the cyan trace) can be used to decrease the firing rate of a second pacemaker neuron (the white trace). This is a nice example of the action of an inhibitory signal, as one can see the inhibitory action (hyperpolarization) on the target neuron whenever the pre-synaptic neuron (the cyan trace) fires.

Frequency Increaser Example

Increasing Frequency Example

Similar to the previous example, a baseline neuron (the red trace) is used to show how a pre-synaptic pacemaker neuron (the cyan trace) increases the frequency of firing of the post-synaptic pacemaker neuron (the white trace.) Here, the pre-synaptic pacemaker neuron acts in an excitatory manner upon the post-synaptic neuron, increasing its firing rate by further depolarizing the neuron.

Reflex Action Example

This is the most interesting example, as it models the knee jerk reflex:

Knee Jerk Reflex (courtesy of http://cbsetextbooks.weebly.com/21-neural-control-and-coordination.html)

In Neuro-Sim, this is modeled as:

Model of Reflex Action

In this example, the neuron attached to the "muscle spindle" (circled in red) has an afferent pathway connecting to the neuron in the "muscle" that jerks in response to the stimulus (green). A signal is also sent to the "brain", which tries to prevent the contraction by exciting a neuron that attempts to activate the muscles at the motor endplate. However, this is not possible because the afferent neuron, via an interneuron, creates an inhibitory reaction, preventing the "brain" from creating a muscle contraction on the nerve attached to the motor endplate.

Scope and Network Trace of the Reflex Action

The above diagram illustrates the scope trace and the neurons that fire. Note the bottom right neuron does not fire because the interneuron is inhibiting it, thus you, as a person, cannot prevent your leg from jerking up!

Behind the Scenes

There really isn't that much to the simulator!

Neuron State

Neurons implement a state machine that determines whether the neuron is integrating (summing excitatory and inhibitory inputs), performing an action potential, or is in the refractory period:

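    public void Tick()
    {
        switch (actionState)
        {
            case State.Integrating:
                // Sum the pending inputs, then test for an action potential.
                Integrate();
                Fired = FireOnActionPotential();

                if (!Fired)
                {
                    // Sub-threshold depolarization: drift back toward the resting potential.
                    int dir = Math.Sign(config.RestingPotential - CurrentMembranePotential);

                    if (dir == -1)
                    {
                        // The return rate is negative (-8 by default), so adding it lowers the potential.
                        CurrentMembranePotential += config.RestingPotentialReturnRate;
                    }
                }

                break;

            case State.Firing:
                Fired = false;
                ++actionState;      // advance to the next state in the State enum
                break;

            case State.RefractoryStart:
                // Drop to the hyperpolarized level that follows an action potential.
                CurrentMembranePotential = config.RestingPotential - config.HyperPolarizationOvershoot;
                ++actionState;
                break;

            case State.AbsoluteRefractory:
                // Climb back to the resting potential; inputs are ignored until then.
                CurrentMembranePotential += config.RefractoryRecoveryRate;

                if (CurrentMembranePotential >= config.RestingPotential)
                {
                    inputs.Clear();
                    actionState = State.Integrating;
                }

                break;

            case State.RelativeRefractory:
                // Not modeled.
                break;
        }
    }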
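Note that the `++actionState` statements rely on the order in which the states are declared. The `State` enum itself is not shown in the article, so the declaration below is an assumption: the member names are taken from the `switch` statement above and the order is inferred from the transitions. The `Advance` helper is hypothetical and only demonstrates the increment.

```csharp
// Assumed declaration -- member names come from the switch above, order is inferred.
public enum State
{
    Integrating,
    Firing,
    RefractoryStart,
    AbsoluteRefractory,
    RelativeRefractory,
}

// Hypothetical helper showing what ++actionState does.
public static class StateSketch
{
    public static State Advance(State s)
    {
        ++s;    // moves to the next declared enum member
        return s;
    }
}
```

Under this ordering, `Advance(State.Firing)` is `State.RefractoryStart` and `Advance(State.RefractoryStart)` is `State.AbsoluteRefractory`, matching the Firing, RefractoryStart, AbsoluteRefractory sequence in `Tick()`.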

Neuron Math

The math is all integer based. Whole number "potentials" are represented in the upper 24 bits of the integer, while the fractional component is represented in the lower 8 bits. This (theoretically) speeds up the processing of the integration, as we can stay completely within integer math. The concept is illustrated by seeing how the default neuron is configured:

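    public NeuronConfig()
    {
        // Values are fixed point: millivolts shifted left by 8 bits.
        RestingPotential = -65 << 8;                // -65 mV
        ActionPotentialThreshold = -35 << 8;        // -35 mV
        ActionPotentialValue = 40 << 8;             // 40 mV
        RefractoryRecoveryRate = 1 << 8;            // 1 mV per tick
        HyperPolarizationOvershoot = 20 << 8;       // 20 mV
        RestingPotentialReturnRate = -8;            // -8/256 mV per tick (fractional part only)
    }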
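To make the fixed-point representation concrete, here is a small sketch of the conversions. The helper class and its method names are hypothetical and are not part of Neuro-Sim; they only mirror the `<< 8` convention used in the configuration above.

```csharp
// Hypothetical helpers for the 24.8 fixed-point convention.
public static class FixedPointSketch
{
    public const int FractionBits = 8;

    // Converts whole millivolts to the fixed-point representation.
    public static int FromMillivolts(int millivolts)
    {
        return millivolts << FractionBits;
    }

    // Converts a fixed-point value back to (possibly fractional) millivolts.
    public static double ToMillivolts(int fixedValue)
    {
        return fixedValue / (double)(1 << FractionBits);
    }
}
```

For example, `FromMillivolts(-65)` is -16640, and the default resting potential return rate of -8 corresponds to `ToMillivolts(-8)`, or -0.03125 mV per tick, so a 1 mV sub-threshold depolarization takes 32 ticks to decay.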

Network Plots

The performance of network plots is improved by using the FastPixel implementation that CPian "ratamoa" provided in, yes, 2006, in this article. This allows for fast pixel writes:

    public void Plot(FastPixel fp, Point location, Color color)
    {
        fp.SetPixel(location, color);
        fp.SetPixel(location + new Size(1, 0), color);
        fp.SetPixel(location + new Size(0, 1), color);
        fp.SetPixel(location + new Size(1, 1), color);
    }

where each neuron is plotted as a 2x2 box.

Study Plots

The neurons in the study network are rendered using the standard Graphics functions. The most complicated code is here, in calculating how to draw the connecting "axon" and "synapse":

    protected void DrawSynapse(Pen pen, Graphics gr, Point start, Point end, Connection.CMode mode)
    {
        // Angle from the pre-synaptic body to the post-synaptic body, and the reverse.
        double t = Math.Atan2(end.Y - start.Y, end.X - start.X);
        double endt = Math.Atan2(start.Y - end.Y, start.X - end.X);

        // Start the axon 5 pixels out from the source and stop it 15 pixels short of the target.
        Size offset = new Size((int)(5 * Math.Cos(t)), (int)(5 * Math.Sin(t)));
        Point endOffset = new Point((int)(15 * Math.Cos(endt)), (int)(15 * Math.Sin(endt)));
        end.Offset(endOffset);
        gr.DrawLine(pen, start + offset, end);

        // The synapse triangle: two vertices 10 pixels from the axon end, at +/- 45 degrees (pi/4).
        double v1angle = t - 0.785398163;
        double v2angle = t + 0.785398163;
        Point v1 = new Point((int)(10 * Math.Cos(v1angle)), (int)((10 * Math.Sin(v1angle))));
        v1.Offset(end);
        Point v2 = new Point((int)(10 * Math.Cos(v2angle)), (int)((10 * Math.Sin(v2angle))));
        v2.Offset(end);

        switch (mode)
        {
            case Connection.CMode.Excitatory:
                // Outlined triangle.
                gr.DrawLine(pen, end, v1);
                gr.DrawLine(pen, end, v2);
                gr.DrawLine(pen, v1, v2);
                break;

            case Connection.CMode.Inhibitory:
                // Filled black triangle.
                gr.FillPolygon(brushBlack, new Point[] { end, v1, v2 }, System.Drawing.Drawing2D.FillMode.Winding);
                break;
        }
    }

This involves calculating the angle between the pre-synaptic body and the post-synaptic body, as well as keeping the connection offset by the width of the circle representing the neuron body.
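The geometry can be isolated from the drawing calls to make it easier to follow. The sketch below is a hypothetical restatement of the same trigonometry using doubles instead of `System.Drawing` points; the class name, the method name, and the `gap` and `side` parameters (15 and 10 pixels in `DrawSynapse`) are illustrative assumptions.

```csharp
using System;

// Hypothetical restatement of the synapse triangle geometry.
public static class SynapseGeometrySketch
{
    // Returns { tipX, tipY, v1X, v1Y, v2X, v2Y } for the synapse triangle.
    // gap: how far short of the target body the axon stops.
    // side: the distance from the axon end to each of the other two vertices.
    public static double[] Triangle(double startX, double startY, double endX, double endY,
                                    double gap, double side)
    {
        // Angle of the line from the pre-synaptic body to the post-synaptic body.
        double t = Math.Atan2(endY - startY, endX - startX);

        // Pull the end of the axon back toward the source by 'gap'.
        double tipX = endX - gap * Math.Cos(t);
        double tipY = endY - gap * Math.Sin(t);

        // The other two vertices fan out at 45 degrees either side of the axon direction.
        double a1 = t - Math.PI / 4;
        double a2 = t + Math.PI / 4;

        return new[]
        {
            tipX, tipY,
            tipX + side * Math.Cos(a1), tipY + side * Math.Sin(a1),
            tipX + side * Math.Cos(a2), tipY + side * Math.Sin(a2),
        };
    }
}
```

For a horizontal connection from (0, 0) to (100, 0) with a gap of 15 and a side of 10, the axon ends at (85, 0) and the other two vertices sit symmetrically above and below the axon line, roughly 7 pixels further along it.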

Axons are "virtualized", in that multiple connections all extend in different directions from the pre-synaptic body source, so it appears as if the neuron has multiple axons:

Neuron has One Axon But Looks Like Three

As the above illustrates, it looks like the neuron on the left has three axons connecting to three other neurons -- this is a convenience for drawing the network.

Conclusion

As a preliminary simulation of a neuron's membrane potential through integration, action, and refractory periods, Neuro-Sim is a fun and interesting tool to use to play with simple neuron networks. I find the "network mode" quite mesmerizing to watch, but for the moment it doesn't represent anything concrete. Adding external stimuli (sound, images, etc.) is perhaps a next logical step, as well as exploring learning and memory.

References

1 - Neuron: https://en.wikipedia.org/wiki/Neuron

2 - Hebbian Theory: https://en.wikipedia.org/wiki/Hebbian_theory

3 - Dendrite: https://en.wikipedia.org/wiki/Dendrite

4 - Axon: https://en.wikipedia.org/wiki/Axon

5 - Axon Hillock: https://en.wikipedia.org/wiki/Axon_hillock

6 - Synapse: https://en.wikipedia.org/wiki/Synapse

7 - Refractory Period: https://en.wikipedia.org/wiki/Refractory_period_(physiology)

8 - Resting Potential: https://en.wikipedia.org/wiki/Resting_potential

9 - Threshold Potential: https://en.wikipedia.org/wiki/Threshold_potential

10 - Action Potential: https://en.wikipedia.org/wiki/Action_potential

11 - Myelination: https://en.wikipedia.org/wiki/Myelin