It has never been easier for C# desktop developers to write code that takes advantage of the amazing computing performance of modern graphics cards. In this post I will share some ad hoc performance test results for a simple program written in C# as obtained from my current desktop computer, a Dell Precision T3600, 16GB RAM, Intel Xeon E5-2665 0 @ 2.40GHz, NVidia GTX Titan. I will also leave you with the source code to run this same test on your hardware of choice.

Source Code

https://www.assembla.com/code/cudafyperformance/subversion/nodes

Introduction

It is likely that the graphics card in your computer supports CUDA or OpenCL. If it does, then you are in for a real treat if you take the time to explore its capabilities. In this article I am showing off the new 4.5 terra-flop GTX Titan card from NVidia ($1000). The one year old GTX 680 costs half that and comes in at a still staggering 3 terra-flops. Even if you have a lower cost GPU card, chances are that its performance will still be pretty impressive compared to your CPU.

We will a “test” using the GPU with CUDA, the GPU with OpenCL, the CPU with OpenCL, and the CPU using straight C# - all within the safe confines of a managed C# application. Then we will explore the concept of streams, which allow us to overlap computations with memory transfers. Later on we will leave C#, using only C, and find that there are no performance gains to be found down that path. Finally we will tune our GPU code to make your head hurt, but also to really extract all the computing power from our GPU.

Source code for all of this is provided (see above) and a checklist of required downloads is provided below.

The Test

Smooth one million floating point values using a set of 63 smoothing coefficients.

Here is the function that computes the smoothed value of a given point:

static float SmoothPoint(float[] data, int index)

{

var sum = 0f;

var count = 0;

for (var coefficientIndex = 0; coefficientIndex < Coefficients.Length; coefficientIndex++)

{

var sourceIndex = index + coefficientIndex - 32;

if (sourceIndex >= 0 && sourceIndex < data.Length)

{

sum += data[sourceIndex] * Coefficients[coefficientIndex];

count++;

}

}

return sum / count;

}

Before calling this function, we set up the coefficients as follows:

public static float[] Coefficients = new float[64];

static PerfTest()

{

for (var i = 0; i < 32; i++)

{

Coefficients[i] = Coefficients[62 - i] = i / 31f;

}

}

The Results

Here are the results to save you the time of scrolling to the end – which is what you would do now anyway.

The CPU is 70x slower for this specific task than one of the CUDA implementations.

The CPU

To execute this test using the Xeon, I set up the call this way:

public static void CpuSmooth()

{

const int mb = 1024 * 1024;

var dataIn = new float[mb];

var dataOut = new float[mb];

for (var i = 0; i < mb; i++)

{

dataIn[i] = i;

}

const int loops = 1024;

for (var loop = 0; loop < loops; loop++)

{

Parallel.For(0, mb, index => dataOut[index] = SmoothPoint(dataIn, index));

}

}

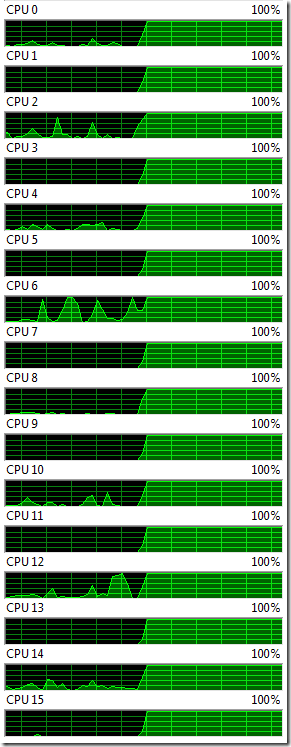

As you can see below, the parallel for loop does a nice job of keeping all the processors busy.

The GPU

To execute the SmoothPoint function on the GTX Titan, I need to do the following:

1) Obtain Software:

a) For CUDA for your NVidia GPU, obtain CUDA from NVidia: https://developer.nvidia.com/cuda-downloads

b) For OpenCL for your GPU, your video driver should be all that you need.

c) For OpenCL for your Intel CPU, obtain this SDK: http://software.intel.com/en-us/vcsource/tools/opencl-sdk

c) For CUDAfy, for your C# development, obtain this DLL: http://cudafy.codeplex.com/.

As of this writing, you need to obtain version 1.21 (beta) if you want OpenCL support in CUDAfy.

d) For development, obtain Visual Studio. If you are doing CUDA work, you need the 2010 C++ compiler lurking on your HDD. You can use VS2010 or VS2012 for all the work in this article.

2) For CUDA work, ensure that your environment variable PATH contains a link to the VS2010 C++ compiler. Mine includes this string: C:\Program Files (x86)\Microsoft Visual Studio 10.0\VC\bin

3) Add a reference to Cudafy.net to the application.

4) Replace the CPU parallel for loop with this code:

public static void Smooth(GPGPU gpu)

{

const int mb = 1024 * 1024;

var cpuMemIn = new float[mb];

var cpuMemOut = new float[mb];

for (var i = 0; i < mb; i++)

{

cpuMemIn[i] = i;

}

var gpuMemIn = gpu.Allocate<float>(mb);

var gpuMemOut = gpu.Allocate<float>(mb);

gpu.CopyToConstantMemory(Coefficients, Coefficients);

const int loops = 1024;

for (var loop = 0; loop < loops; loop++)

{

gpu.CopyToDevice(cpuMemIn, gpuMemIn);

gpu.Launch(1024, 1024, SmoothKernel, gpuMemIn, gpuMemOut);

gpu.CopyFromDevice(gpuMemOut, cpuMemOut);

}

gpu.Free(gpuMemIn);

gpu.Free(gpuMemOut);

}

5) Write the SmoothKernel function:

static void SmoothKernel(GThread gThread, float[] dataIn, float[] dataOut)

{

dataOut[gThread.get_global_id(0)] = SmoothPoint(dataIn, gThread.get_global_id(0));

}

6) Adorn the Coefficients, SmoothKernel, and SmoothPoint with the [Cudafy] attribute.

7) Execute some setup code to access the GPU.

If I want to use CUDA, I create a GPGPU object like this:

public static GPGPU NewCuda()

{

var gpu = CudafyHost.GetDevice(eGPUType.Cuda);

CudafyTranslator.Language = eLanguage.Cuda;

var module = CudafyTranslator.Cudafy(eArchitecture.sm_35);

gpu.LoadModule(module);

return gpu;

}

If I want to use OpenCL, I create a GPGPU object like this:

public static GPGPU NewOpenCl()

{

var gpu = CudafyHost.GetDevice(eGPUType.OpenCL);

CudafyTranslator.Language = eLanguage.OpenCL;

var module = CudafyTranslator.Cudafy();

gpu.LoadModule(module);

return gpu;

}

CUDA vs OpenCL

As you can see above, CUDAfy gives you a choice in GPU technologies to use with your C# application. I believe it is pretty amazing that I can write some code in C# and have that code executed on the GPU using either CUDA or OpenCL and on the CPU using straight C# or OpenCL for Intel CPUs. There have been a few heated debates on CUDA vs. OpenCL for GPUs and I certainly do not want to give the impression that I know which technology is better. Here are some points to consider:

1) OpenCL is available for many video card technologies. CUDA is available for NVidia-based cards only (from Asus, EVGA, Msi, etc.). OpenCL is also available as a driver that uses the main CPU.

2) CUDAfy with OpenCL uses the video card driver to compile the code. CUDAfy with CUDA uses the C++ compiler at run time - but you can use a premade CUDAfy module (*.cdfy) or embed the code in the .NET assembly using the cudafycl tool.

3) Streaming in CUDA can achieve a 2X improvement in performance. I’ve been told OpenCL supports streams too, but I have not figured out how that works yet.

Under the Hood

Behind the scenes, CUDAfy magically creates either a CUDA or an OpenCL rendition of your code. The CUDA code must be compiled using a C++ compiler with the NVida CUDA extensions. The OpenCL code is processed by the device driver so there is much less headache in the distribution of your code.

Cuda.cu

#if defined(cl_khr_fp64)

#pragma OPENCL EXTENSION cl_khr_fp64: enable

#elif defined(cl_amd_fp64)

#pragma OPENCL EXTENSION cl_amd_fp64: enable

#endif

// GlsecPerfCSharp.PerfTest

__kernel void SmoothKernel(global float* dataIn, int dataInLen0, global float* dataOut, int dataOutLen0 , __constant float* Coefficients);

// GlsecPerfCSharp.PerfTest

float SmoothPoint(global float* data, int dataLen0, int index , __constant float* Coefficients);

// GlsecPerfCSharp.PerfTest

#define CoefficientsLen0 64

// GlsecPerfCSharp.PerfTest

__kernel void SmoothKernel(global float* dataIn, int dataInLen0, global float* dataOut, int dataOutLen0 , __constant float* Coefficients)

{

dataOut[(get_global_id(0))] = SmoothPoint(dataIn, dataInLen0, get_global_id(0), Coefficients);

}

// GlsecPerfCSharp.PerfTest

float SmoothPoint(global float* data, int dataLen0, int index , __constant float* Coefficients)

{

float num = 0.0f;

int num2 = 0;

for (int i = 0; i < CoefficientsLen0; i++)

{

int num3 = index + i - 32;

if (num3 >= 0 && num3 < dataLen0)

{

num += data[(num3)] * Coefficients[(i)];

num2++;

}

}

return num / (float)num2;

}

OpenCL.cpp

__device__ int get_global_id(int dimension)

{

int result = 0;

if (dimension == 0)

result = blockIdx.x * blockDim.x + threadIdx.x;

else if (dimension == 1)

result = blockIdx.y * blockDim.y + threadIdx.y;

else if (dimension == 2)

result = blockIdx.z * blockDim.z + threadIdx.z;

return result;

}

// GlsecPerfCSharp.PerfTest

extern "C" __global__ void SmoothKernel( float* dataIn, int dataInLen0, float* dataOut, int dataOutLen0);

// GlsecPerfCSharp.PerfTest

__device__ float SmoothPoint( float* data, int dataLen0, int index);

// GlsecPerfCSharp.PerfTest

__constant__ float Coefficients[64];

#define CoefficientsLen0 64

// GlsecPerfCSharp.PerfTest

extern "C" __global__ void SmoothKernel( float* dataIn, int dataInLen0, float* dataOut, int dataOutLen0)

{

dataOut[(get_global_id(0))] = SmoothPoint(dataIn, dataInLen0, get_global_id(0));

}

// GlsecPerfCSharp.PerfTest

__device__ float SmoothPoint( float* data, int dataLen0, int index)

{

float num = 0.0f;

int num2 = 0;

for (int i = 0; i < CoefficientsLen0; i++)

{

int num3 = index + i - 32;

if (num3 >= 0 && num3 < dataLen0)

{

num += data[(num3)] * Coefficients[(i)];

num2++;

}

}

return num / (float)num2;

}

CUDA Streaming

Simply stated, “streaming” in CUDA allows the GPU to perform concurrent tasks. In this application, the performance gains in CUDA are due to three overlapped operations. At any point in the performance test, the CUDA code performing each of these three tasks concurrently:

Upload raw data from the host memory (CPU) to the device (GPU) memory.

Process (smooth) the data in device memory.

Download smoothed data from the device to the host.

Synchronize to wait for all operations issued on the given stream to complete before proceeding.

The slight difference in performance is due to the way the tasks are scheduled in CUDA. These are the three scheduling methods I implemented:

Now I don’t have the stamina to turn this blog post into a tutorial on CUDA streaming. Feel free to examine the source code and see how the above three methods are implemented.

CUDA C vs. CUDAfy C#

Some have wondered if the overhead of C# could be significant. Therefore I put together a straight C version of the same streaming performance test. The source code at assembla now includes this new test.

The results show that in this test at least, there is no overhead in using C#. Here are the results:

Faster!

It turns out that much of the time in the smoothing kernel is spent retrieving the input data and the smoothing coefficients from RAM. NVidia calls this “device memory”. Each smoothing coefficient is accessed 1 million times and each data point in the source is accessed 64 times. Maybe we can do something about that. NVidia tells us that device memory is relatively slow.

I had already broken the smoothing problem down into 1024 “blocks”, where each block has 1024 threads. This means I have allocated 1 thread per data point. It turns out that the threads within a block can share this really fast memory called, well, “shared memory”. Shared memory is at least two orders of magnitude faster than device memory. So the idea is to allocate and load the shared memory with all the smoothing coefficients and all the data points from device memory that the threads in that block will need. We need 64 coefficients and (because we are smoothing +/- 32 values around each data point) we need 32 + 1024 + 32 data points loaded from device memory into shared memory.

Since we have 1024 threads, I decided to let them move the first 1024 data points from device memory into shared memory in parallel:

static void FastKernel(GThread gThread, float[] dataIn, float[] dataOut)

{

var threadIndex = gThread.threadIdx.x;

var dataIndex = gThread.blockIdx.x * ThreadsPerBlock + threadIndex;

var dataCopy = gThread.AllocateShared<float>("d", ThreadsPerBlock + 64);

dataCopy[threadIndex + 32] = dataIn[dataIndex];

I also decided to use the first 64 threads to copy the 64 coefficient from device memory to shared memory.

var coefficients = gThread.AllocateShared<float>("c", 64);

if (threadIndex < 64)

{

coefficients[threadIndex] = Coefficients[threadIndex];

}

Finally, we load in the 32 points on either side of the 1024 data points, being careful not to exceed the source data range.

else if (threadIndex < 96)

{

var zeroTo31 = threadIndex - 64;

var tempDataIndex = gThread.blockIdx.x * ThreadsPerBlock - 32 + zeroTo31;

if (tempDataIndex >= 0)

dataCopy[zeroTo31] = dataIn[tempDataIndex];

}

else if (threadIndex < 128)

{

var zeroTo31 = threadIndex - 96;

var tempDataIndex = (gThread.blockIdx.x + 1) * ThreadsPerBlock + zeroTo31;

if (tempDataIndex < dataIn.Length)

dataCopy[ThreadsPerBlock + 32 + zeroTo31] = dataIn[tempDataIndex];

}

gThread.SyncThreads();

The call to SyncThreads ensures that all 1024 threads in this block have copied their assigned data to shared memory before any threads proceed past this point.

The rest of the code is pretty much self-explanitory.

var sum = 0f;

var count = 0;

for (var coefficientIndex = 0; coefficientIndex < coefficients.Length; coefficientIndex++)

{

var sourceIndex = dataIndex + coefficientIndex - 32;

if (sourceIndex >= 0 && sourceIndex < dataIn.Length)

{

var copyIndex = sourceIndex - gThread.blockIdx.x * ThreadsPerBlock + 32;

sum += dataCopy[copyIndex] * coefficients[coefficientIndex];

count++;

}

}

dataOut[dataIndex] = sum / count;

}

It turns out that the copying of the coefficients to shared memory did not buy me much in terms of performance, but copying the data sure helped.

The Future

I tried executing a “null” kernel that did nothing but return. This gave me a time of about 0.74 ms. Therefore, the next place to take time out of this system is to obtain an NVidia card that sports what is called a “dual copy engine” which allows one upload, one download, and several kernels to all run concurrently.