Goals

The goal of this tutorial is to show how to build a search engine web service for non trivial information needs beyond simple keyword search with Python, Tornado and Strus.

All prerequisites you need are delivered as docker image. The tutorial will take less than an hour to complete.

Background

Strus is a set of libraries and tools to build search engines. This article presents its Python bindings in the ensemble with the Tornado web framework.

Update

5th October 2017

The documentation and the sources of this tutorial and the docker image have been updated to the latest version of Strus. Instead of Python 2.7, Python 3.4 is used now. Python 2.X is not supported anymore by the language-bindings of Strus.

Though the examples presented here work, some techniques and best practices have changed. Nevertheless, the examples provide some insight into Strus.

The query evaluation method NBLNK introduced here is not implemented in the same way in the Strus Wikipedia demo search anymore. It has been replaced by the method 'accunear' that relies on proximity statistics of the query terms and does not build term expressions anymore.

Prerequisites

You need docker installed.

At least for downloading the docker image for this tutorial, when you run it the first time, you need an internet connection.

Knowledge requirements

To execute and understand the steps of this tutorial some intermediate knowledge about programming Python is helpful. But the language concepts used are not very sophisticated. You can probably also understand the code if you know PHP.

To understand the rendering of the web server results, you should know the very basic constructs of HTML.

This tutorial addresses a public with some previous knowledge about basic concepts of information retrieval. You can complete this tutorial also without any knowledge, but it will hardly be convincing. There are much more mature out of the box solutions available for you in this case.

Sources

The sources introduced in this tutorial and in the tarball delivered with this article are organized in "steps" as the tutorial. So the source file strusIR.py shown in step 6 is in src/step6/strusIR.py of the source tarball or in /home/strus/src/step6/strusIR.py of the docker image.

Introduction

The artificial collection for this tutorial is a list of 201 countries and the languages spoken there. Additionally every country is assigned to a continent. First we will inspect an information retrieval query with BM25 as query evaluation scheme, searching for languages and getting a ranked list of countries. Then we will have a look at an example of weighting entities appearing in matching documents, searching for languages and getting a ranked list of continents.

Both weighting schemes introduced here not fitting to the example collection. They are a little bit like using a sledge-hammer to crack a nut. To extract entities from sentences that match to the query for example appears silly in a collection with documents consisting only of one sentence. But I did not find a suitable test collection (with entities to extract) for this tutorial, so I constructed a very simple one on by hand.

The weighting scheme extracting and weighting the entities is originating from the NBLNK weighting scheme of the Strus demo project, a search on the complete Wikipedia collection (English). The NBLNK retrieval method there ranks links that are occurring in sentences that match to the query. It does not inspect all documents for that, but the only best 300 BM25 weighted documents. The original source (PHP) can be found here.

Glossary

In this tutorial you will encounter several terms shortly explained here:

-

Posting: In information retrieval the term posting usually defines a document number with associated information like positions in the document. It is an element used to describe the occurrence of a term in the collection. In Strus a posting describes a pair (d,p) of cardinal numbers, where d refers to a document and p to a position. The sets of postings describe the domain of the functions that form the expressions we use for retrieval. This structure is the Boolean algebra of sets of pairs (d,p) with some n-ary functions on these sets added.

-

Weighting function: Function that assigns a numerical weight (double precision floating point number) to documents. Weighing functions are parameterized and use iterators on the sets of postings of query expressions and some numeric meta data to calculate the weight of a document.

-

Summarizer: A function to extract content of a document for presentation or further processing.

-

Term expression: An arbitrary complex expression represented as tree with typed terms as leaves. An expression is built from typed terms and n-ary functions on the sets of postings of term sub expressions. Although this definition for constructing expressions looks very universal at the first glance, it is restrictive.

-

Feature: A feature (or query feature) is associating some term expression with a weight and a name of a set to address it. Objects that reference features are for example weighting functions and summarizers.

-

SearchIndex: The index that maps terms to occurrencies or sets of postings. In information retrieval theory called inverted index.

-

ForwardIndex: The index that maps documents to terms. See definition here.

Step 1: Understanding the example document collection

The document structure

In this tutorial all example documents are in one file, a multipart document. It has a <list> root tag and <doc> tags for the items to insert as documents. Each item contains one sentence that relates country, continent and languages spoken together. Continent is marked as entity that can be extracted. The following example shows an example multipart document with two items in the list:

<?xml version='1.0' encoding='UTF-8'

standalone='yes'?>

<list>

<doc id='Sweden'>

In the country Sweden on the <continent

id='Europe'>Europe</continent>

are the following languages spoken: Swedish, Sami, Finnish.

</doc>

<doc id='Switzerland'>

In the country Switzerland on the <continent id='Europe'>Europe</continent>

are the following languages spoken: German, French, Italian, Romansch

</doc>

</list>

Step 2: Start the docker container

To start the docker image typing the following command in your shell:

docker run -p 40080:80 -t -i patrickfrey/strus-ub1604-torntuto:v0_15 /bin/bash

and you will get a prompt like this

root@8cbc7f49f3cf:/home/strus#

All following shell commands of this tutorial are executed in this shell.

Step 3: Create the storage

Initialize the storage database

We will use an utility program of Strus to create the storage. The command line utilities of Strus are programs for accessing the storage. In this tutorial we will only use them for the initial creation of the storage.

Creating the storage from the web service and thus managing different storages in the web service would make the example a lot more complicated because of synchronization issues. The command line command to create the storage we use for now looks as follows:

strusCreate -s "path=storage; metadata=doclen UINT16"

you get

storage successfully created.

The meta data element doclen was declared because it is required by the query evaluation scheme BM25 we will use.

Step 4: Define the web server skeleton with Tornado

Define the server skeleton

We design the web server top down and define the skeleton of the server with Tornado without implementing the request handlers needed yet. It looks like follows:

#!/usr/bin/python3

import tornado.ioloop

import tornado.web

import os

import sys

# [1] Request handlers:

class InsertHandler(tornado.web.RequestHandler):

def post(self):

pass;

#... insert handler implementation

class QueryHandler(tornado.web.RequestHandler):

def get(self):

pass;

#... query handler implementation

# [2] Dispatcher:

application = tornado.web.Application([

# /insert in the URL triggers the handler for inserting documents:

(r"/insert", InsertHandler),

# /query in the URL triggers the handler for answering queries:

(r"/query", QueryHandler),

# /static in the URL triggers the handler for accessing static

# files like images referenced in tornado templates:

(r"/static/(.*)",tornado.web.StaticFileHandler,

{"path": os.path.dirname(os.path.realpath(sys.argv[0]))},)

])

# [3] Server main:

if __name__ == "__main__":

try:

print( "Starting server ...\n");

application.listen(80)

print( "Listening on port 80\n");

tornado.ioloop.IOLoop.current().start()

print( "Terminated\n");

except Exception as e:

print( e);

It consists of three parts: The main program that runs the server [3]. The application dispatcher that selects a handler based on URL patterns [2] and a list or request handlers used in the application [1]. If we start this program in a source file strusServer.py with the following command:

python3 strusServer.py

the we get a listening we server:

Starting server ...

Listening on port 80

... But it does not react to any command yet, so we stop it again.

Step 5: Define the Tornado HTML templates to render the result

Tornado has a template engine with a substitution language that allows the execution of arbitrary Python commands in the template scope. It also has a concept of inheritance. We declare for our example one basic template search_base_html.tpl and three templates search_nblnk_html.tpl, search_bm25_html.tpl and search_error_html.tpl implementing the block with the result called resultblock differently:

The base template search_base_html.tpl

This is the base template with the frame of the page. All other templates are derived from this, implementing the block with the result differently.

<html>

<head>

<title>A search engine with Python, Tornado and Strus</title>

<meta http-equiv="content-type" content="text/html; charset=utf-8" />

</head>

<body>

<h1>A search engine with Python, Tornado and Strus</h1>

{% block resultblock %}

{% end %}

</body>

</html>

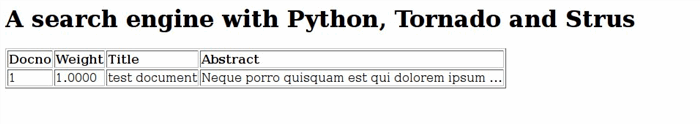

The template for the BM25 search search_bm25_html.tpl

This is the template used for the ordinary BM25 query. For each result document we map the document number docno, the weight, the title and the abstract.

{% extends "search_base_html.tpl" %}

{% block resultblock %}

<table border=1>

<tr>

<th align='left'>Docno</th>

<th align='left'>Weight</th>

<th align='left'>Title</th>

<th align='left'>Abstract</th>

</tr>

{% for result in results %}

<tr>

<td>{{ result['docno'] }}</td>

<td>{{ "%.4f" % result['weight'] }}</td>

<td>{{ result['title'] }}</td>

<td>{% raw result['abstract'] %}</td>

</tr>

{% end %}

</table>

{% end %}

The template for the entity weighting search_nblnk_html.tpl

This is the template used for the ranked list we get when weighting entities extracted from matching documents. For each result document, we map the weight and the title.

{% extends "search_base_html.tpl" %}

{% block resultblock %}

<table border=1>

<tr>

<th align='left'>Weight</th>

<th align='left'>Title</th>

</tr>

{% for result in results %}

<tr>

<td>{{ "%.4f" % result['weight'] }}</td>

<td>{{ result['title'] }}</td>

</tr>

{% end %}

</table>

{% end %}

The template for errors search_error_html.tpl

This is the template used for errors caught. We just map the error message here.

{% extends "search_base_html.tpl" %}

{% block resultblock %}

<p><font color="red">Error: {{message}}</font></p>

{% end %}

Step 6: Define the request handlers

The information retrieval engine backend (dummy implementation)

We try now to define a dummy version of the request handlers needed, to fill our skeleton with life. We do this in a source file strusIR.py. Later in this tutorial we will replace this module with a real information retrieval engine:

class Backend:

# Constructor creating a local Strus context with the storage configuration

# string passed as argument:

def __init__(self, config):

pass

# Insert a multipart document as described in step 1 (doing nothing for the moment):

def insertDocuments( self, content):

return 0

# Query evaluation scheme for a classical information retrieval query

# with BM25 (returning a dummy ranked list with one element for now):

def evaluateQueryText( self, querystr, firstrank, nofranks):

rt = []

rt.append( {

'docno': 1,

'title': "test document",

'weight': 1.0,

'abstract': "Neque porro quisquam est qui dolorem ipsum ..."

})

return rt

# Query evaluation method that builds a ranked list from the best weighted entities

# extracted from sentences with matches (returning an dummy list for with

# one element now):

def evaluateQueryEntities( self, querystr, firstrank, nofranks):

rt = []

rt.append( {

'title': "test document",

'weight': 1.0

})

return rt

In our skeleton we can now insert the following lines after the import directives that declare the information retrieval backend used.

#!/usr/bin/python3

import tornado.ioloop

import tornado.web

import os

import sys

import strusIR

# Declare the information retrieval engine:

backend = strusIR.Backend( "path=storage; cache=512M")

After these changes we can replace the request handlers in our skeleton.

The insert request handler

The insert request handler accepts POST requests with a multipart document as body and calls the insertDocuments method of the backend with it. It returns a plain text string with OK or ERR as header depending on the result:

# Declare the insert document handler (POST request with the multipart document as body):

class InsertHandler(tornado.web.RequestHandler):

def post(self):

try:

content = self.request.body

nofDocuments = backend.insertDocuments( content)

self.write( "OK %u\n" % (nofDocuments))

except Exception as e:

self.write( "ERR %s\n" % (e))

The query request handler

The query request handler accepts GET requests with the following parameters:

-

q: query string

-

s: query evaluation scheme (either BM25 or NBLNK)

-

i: Index of first result rank to return

-

n: Maximum number of result rank to return

It returns a HTML page with the result rendered with the Tornado template engine:

# Declare the query request handler:

class QueryHandler(tornado.web.RequestHandler):

def get(self):

try:

# q = query terms:

querystr = self.get_argument( "q", None)

# i = first rank of the result to display (for scrolling):

firstrank = int( self.get_argument( "i", 0))

# n = maximum number of ranks of the result to display on one page:

nofranks = int( self.get_argument( "n", 20))

# c = query evaluation scheme to use:

scheme = self.get_argument( "s", "BM25")

if scheme == "BM25":

# The evaluation scheme is a classical BM25 (Okapi):

results = backend.evaluateQueryText( querystr, firstrank, nofranks)

self.render( "search_bm25_html.tpl",

scheme=scheme, querystr=querystr,

firstrank=firstrank, nofranks=nofranks, results=results)

elif scheme == "NBLNK":

# The evaluation scheme is weighting the entities in the matching documents:

results = backend.evaluateQueryEntities( querystr, firstrank, nofranks)

self.render( "search_nblnk_html.tpl",

scheme=scheme, querystr=querystr,

firstrank=firstrank, nofranks=nofranks, results=results)

else:

raise Exception( "unknown query evaluation scheme", scheme)

except Exception as e:

self.render( "search_error_html.tpl",

message=e, scheme=scheme, querystr=querystr,

firstrank=firstrank, nofranks=nofranks)

Step 7: Call the server

Our server definition is now complete.

Now we can start the server and issue a query. We started the docker image with the port 40080 mapped to the docker image port 80. So we can issue the GET request

http:

with our favorite web browser. You get the following results from our dummy implementation of the retrieval engine:

or if you search with NBLNK with this query string:

http:

you get

Step 8: Define the real information retrieval engine with Strus

Now we take a look at the strusIR.py module and the Backend class it implements. We replace step by step the dummy implementation by a real one. First we have to import the following modules:

import strus

import itertools

import heapq

import re

The analyzer configuration

If we want to insert and retrieve documents, we have to describe the mapping of information items in the document to their normalized form. The same normalization has to be done with items in a query, so that items in the query can be matched against items in the document with help of an index.

This example analyzer configuration is kept as simple as possible. Not all steps are explained in detail, but you will find all document and query analyzer methods in the Python interface documentation of Strus: DocumentAnalyzer, QueryAnalyzer, .

The strusIR.Backend methods to create the document and the query analyzers look as follows:

# Create the document analyzer for our test collection:

def createDocumentAnalyzer(self):

rt = self.context.createDocumentAnalyzer()

# Define the sections that define a document (for multipart documents):

rt.defineDocument( "doc", "/list/doc")

# Define the terms to search for (inverted index or search index):

rt.addSearchIndexFeature( "word", "/list/doc//()",

"word", ("lc",("stem","en"),("convdia","en")))

# Define the end of sentence markers:

rt.addSearchIndexFeature( "sent", "/list/doc//()",

("punctuation","en","."), "empty")

# Define the placeholders that are referencable by variables:

rt.addSearchIndexFeature( "continent_var", "/list/doc/continent@id",

"content", "empty", "succ")

# Define the original terms in the document used for abstraction:

rt.addForwardIndexFeature( "orig", "/list/doc//()", "split", "orig")

# Define the contents that extracted by variables:

rt.addForwardIndexFeature( "continent", "/list/doc/continent@id",

"content", "text", "succ")

# Define the document identifier:

rt.defineAttribute( "docid", "/list/doc@id", "content", "text")

# Define the doclen attribute needed by BM25:

rt.defineAggregatedMetaData( "doclen",("count", "word"))

return rt

# Create the query analyzer according to the document analyzer configuration:

def createQueryAnalyzer(self):

rt = self.context.createQueryAnalyzer()

rt.definePhraseType( "text", "word", "word", ["lc", ["stem", "en"], ["convdia", "en"]] )

return rt

The following constructs need further explanation:

-

The document text selector expressions (2nd parameter of defineDocument, addSearchIndexFeature, addForwardIndexFeature, defineAttribute) are used to address the parts of the document to process. The syntax and semantics of selector expressions is dependent of the document segmenter you use. The default document segmenter used is an XML segmenter based on the textwolf library. It uses an expression syntax derived from abbreviated syntax of XPath. Another segmenter for another document format might define selection expressions differently.

-

Expressions describing functions for list of functions for normalizers, tokenizers, aggregators as arrays in Python. For example

("lc",("stem","en"),("convdia","en"))

describes a list of the normalizer functions: lowercase followed by stemming in English and diacritical character conversion in English, applied in this order. The notation of arrays for complex trees or lists is used for having compact initializers of the functions of the core you need. The first argument of an array is the function name and the rest are describing the arguments of the function. A similar initializer notation is also used in describing query expression, we will encounter later on in this tutorial.

-

The "succ" argument in some feature declarations. This option tells the analyzer, that this element has no own position in the document, but the same position assigned as the next element with an own position assigned. "pred" references the previous position accordingly. These options help to declare annotations in the document, that are bound to another item. Positions are very important for describing positional neighbour relationships in structured features like A is immediately followed by B.

The weighting scheme configuration for BM25

Here we declare the strusIR.Backend method to create the weighting scheme for BM25 including the summarizers to present the results. Not all steps are explained in detail, but you will find all query evaluation methods in the Python interface documentation of Strus: QueryEval. The definition of the weighting scheme configuration for BM25 in our case look as follows:

# Create a simple BM25 query evaluation scheme with fixed

# a,b,k1 and avg document lenght and title with abstract

# as summarization attributes:

def createQueryEvalBM25(self):

rt = self.context.createQueryEval()

# Declare the sentence marker feature needed for abstracting:

rt.addTerm( "sentence", "sent", "")

# Declare the feature used for selecting result candidates:

rt.addSelectionFeature( "selfeat")

# Query evaluation scheme:

rt.addWeightingFunction( "BM25", {

"k1": 1.2, "b": 0.75, "avgdoclen": 20, "match": {'feature':"docfeat"} })

# Summarizer for getting the document title:

rt.addSummarizer( "attribute", { "name": "docid" }, {"result":"TITLE"})

# Summarizer for abstracting:

rt.addSummarizer( "matchphrase", {

"type": "orig", "windowsize": 40, "sentencesize":30,

"matchmark": '$<b>$</b>', "struct":{'feature':"sentence"},

"match": {'feature':"docfeat"} }, {"phrase":"CONTENT"}

return rt

The following constructs need further explanation:

-

addTerm method: This method is used to add terms to the query, that are not part of the query itself, but used as context information by weighting functions or summarizers. A good example are structural markers like end of sentence. These markers are not part of the query but they might play a role in weighting or summarization.

-

addSelectionFeature method: In Strus the what you weight is strictly separated from the how. You always have to declare a set of features that declare, what set of documents is considered for the result. This separation is something you need in big document collections to avoid to expansive scans of result candidates.

-

Values of weighting or summarizer functions are either strings or numeric values or addressing features by the name of the feature set. Strings or numeric values are recognized by their type, features are specified as dictionary with one element. { 'feature': <name> }.

The weighting scheme configuration for weighting entities in matching documents

The strusIR.Backend method to create the weighting scheme for NBLNK including the summarizers to present the results look as follows:

# Create a simple BM25 query evaluation scheme with fixed

# a,b,k1 and avg document lenght and the weighted extracted

# entities in the same sentence as matches as query evaluation result:

def createQueryEvalNBLNK(self):

rt = self.context.createQueryEval()

# Declare the sentence marker feature needed for the

# summarization features extracting the entities:

rt.addTerm( "sentence", "sent", "")

# Declare the feature used for selecting result candidates:

rt.addSelectionFeature( "selfeat")

# Query evaluation scheme for entity extraction candidate selection:

rt.addWeightingFunction( "BM25", {"k1": 1.2, "b": 0.75,

"avgdoclen": 20,

"match": {'feature':"docfeat"} })

# Summarizer to extract the weighted entities:

rt.addSummarizer( "accuvar", { "match": {'feature':"sumfeat"},

"var": "CONTINENT", "type": "continent",

"result":"ENTITY" } )

return rt

# Create a simple BM25 query evaluation scheme with fixed

# a,b,k1 and avg document lenght and the weighted extracted

# entities in the same sentence as matches as query evaluation result:

def createQueryEvalNBLNK(self):

rt = self.context.createQueryEval()

# Declare the sentence marker feature needed for the

# summarization features extracting the entities:

rt.addTerm( "sentence", "sent", "")

# Declare the feature used for selecting result candidates:

rt.addSelectionFeature( "selfeat")

# Query evaluation scheme for entity extraction candidate selection:

rt.addWeightingFunction( "BM25", {

"k1": 1.2, "b": 0.75, "avgdoclen": 500,

"match": {'feature': "docfeat"} })

# Summarizer to extract the weighted entities:

rt.addSummarizer(

"accuvariable", {

"match": {'feature': "sumfeat"}, "var": "CONTINENT", "type": "continent",

"result":"ENTITY" })

return rt

We have nothing more to explain here. The declarations look similar to BM25. We have a different summarizer for the entity extraction defined.

The strusIR.Backend constructor

We have now all helper methods declared we need to create a strusIR.Backend object. The constructor of strusIR.Backend looks like follows:

# Constructor. Initializes the query evaluation schemes and the query and document analyzers:

def __init__(self, config):

if isinstance( config, ( int, long ) ):

self.context = strus.Context( "localhost:%u" % config)

self.storage = self.context.createStorageClient()

else:

self.context = strus.Context()

self.context.addResourcePath("./resources")

self.storage = self.context.createStorageClient( config )

self.queryAnalyzer = self.createQueryAnalyzer()

self.documentAnalyzer = self.createDocumentAnalyzer()

self.queryeval = {}

self.queryeval["BM25"] = self.createQueryEvalBM25()

self.queryeval["NBLNK"] = self.createQueryEvalNBLNK()

The method to insert a multipart document

The method for inserting a multipart document as described in step 1 looks like follows:

# Insert a multipart document:

def insertDocuments( self, content):

rt = 0

transaction = self.storage.createTransaction()

for doc in self.documentAnalyzer.analyzeMultiPart( content):

docid = doc['attribute']['docid']

transaction.insertDocument( docid, doc)

rt += 1

transaction.commit()

return rt

The method creates a transaction and inserts all analyzed document parts.

The method evaluate a BM25 query

The method for evaluating a BM25 query looks like follows:

# Query evaluation scheme for a classical information retrieval query with BM25:

def evaluateQueryText( self, querystr, firstrank, nofranks):

queryeval = self.queryeval[ "BM25"]

query = queryeval.createQuery( self.storage)

terms = self.queryAnalyzer.analyzeTermExpression( [ "text", querystr ] )

if len( terms) == 0:

# Return empty result for empty query:

return []

selexpr = ["contains", 0, 1]

for term in terms:

selexpr.append( term )

query.addFeature( "docfeat", term, 1.0)

query.addFeature( "selfeat", selexpr, 1.0 )

query.setMaxNofRanks( nofranks)

query.setMinRank( firstrank)

# Evaluate the query:

results = query.evaluate()

# Rewrite the results:

rt = []

for pos,result in enumerate(results['ranks']):

content = ""

title = ""

for summary in result['summary']:

if summary['name'] == 'TITLE':

title = summary['value']

elif summary['name'] == 'CONTENT':

content = summary['value']

rt.append( { 'docno':result['docno'], 'title':title, 'weight':result['weight'], 'abstract':content })

return rt

The method analyzes the query text and builds one query feature of each term. The selection feature is an expression, that selects only the documents, where all query terms appear in ("contains"). The biggest part of this method rewrites the resulting ranked list to get to a list of flat structures for not letting implementation specific structures intrude into the presentation layer (the tornado templates).

The method evaluate a query weighting matching document entities

The method for extracting and weighting entities (continent) from our example documents is constructing expression features for the extracting summarizer from pairs of query features or from one term in case of a single term query. For the construction of expression features from pairs of query features two cases are considered: If one of the terms is immediately following the other, there is also an expression built, that is searching for the immediate sequence in document sentences. The summarizer features built from these sequence expressions get the highest weight. For all the terms whether they are neighbours in the query or not, we construct expressions searching for features in a distance of 5 or 20 (word count distance) in the same sentence. For neighbour terms in the query the features built from these expressions get a slightly higher weight.

All these summarizer feature expressions also search for an entity inside a distance of 50 (word count distance) inside the same sentence. A variable is attached to this entitiy, that is extracted by the summarizer accumulating the weights of all entities.

The expressions associating subexpressions inside a sentence are "within_struct" and "sequence_struct". Both take a structure element expression as first argument. In our case the sentence delimiter. This structure element must not be covered by the interval defined by the matching sub expression with the smallest position and the biggest position of a candidate match.

In Strus variable assignments are bound to one position of an expression match. Therefore the item extracted has to define the position of an expression match. So we build the summarizer feature expressions around the entities we want to extract. The following expression shows one example feature built from "local languages":

sumexpr = [ "sequence_struct", 50,

["sent"],

[{'variable':"CONTINENT"}, "continent_var",""],

[ "within_struct", 20,

["sent"],

["word", "local"],

["word", "languages"]

]

]

query.defineFeature( "sumfeat", sumexpr, 1.0 )

translated this means: We look inside a structure delimited by sentence delimiters for a 'continent' with a variable 'CONTINENT' assigned followed by a structure within a maximum distance of 50 terms delimited by sentence delimiters containing the words "local" and "languages" within a maximum distance of 20 terms.

The expression {'variable':"CONTINENT"} is a marker that declares the string "CONTINENT" as variable attached to the structure where it appears. You may call this a hack. But it is the last of this kind used in the Strus bindings using trees or lists as initializers of objects.

We introduce now the helper methods to build the summarizer features and finally to the weighting scheme using these features to extract the continents:

The method to create the summarization features for neighbour terms in the query

# Helper method to define the query features created from terms

# of the query string, that are following subsequently in the query:

def __defineSubsequentQueryTermFeatures( self, query, term1, term2):

# Pairs of terms appearing subsequently in the query are

# translated into 3 query expressions:

# 1+2) search for sequence inside a sentence in documents,

# The summarizer extracts entities within

# a distance of 50 in the same sentence

# 3) search for the terms in a distance smaller than 5 inside a sentence,

# The summarizer extracts entities within a distance

# of 50 in the same sentence

# 4) search for the terms in a distance smaller than 20 inside

# The summarizer extracts entities within

# a distance of 50 in the same sentence

expr = [

[ "sequence_struct", 3, ["sent",''], term1, term2 ],

[ "sequence_struct", 3, ["sent",''], term2, term1 ],

[ "within_struct", 5, ["sent",''], term1, term2 ],

[ "within_struct", 20, term1, term2 ]

]

weight = [ 3.0, 2.0, 2.0, 1.5 ]

ii = 0

while ii < 4:

# The summarization expression attaches a variable referencing an

# the entity to extract.

# CONTINENT terms of type 'continent_var':

sumexpr = [ "chain_struct", 50, ["sent",''],

[{'variable':"CONTINENT"}, "continent_var", ""],

expr[ ii] ]

query.addFeature( "sumfeat", sumexpr, weight[ ii] )

sumexpr = [ "sequence_struct", -50, ["sent",''],

expr[ ii],

[{'variable':"CONTINENT"}, "continent_var", ""], ]

query.addFeature( "sumfeat", sumexpr, weight[ ii] )

ii += 1

The summarization feature construction looks a little bit complicated. It catches the cases of multiple entities to extract appearing in one sentence. In order to extract all entities, we have to bind the position assigned to a pattern match to the entity extracted. A simple in range search ("within") instead of two sequences, one forward, one reversed, would return only one match. To understand this imagine a sequence W L1 L2, where W is a matching term and L1 and L2 are associated links. A "within" in Strus would match on W L1 and then stop matching other structures at the position of W. A backward sequence would match on the position of L1 because it finds W in front of it, and the the position of L2 for the same reason. Therefore it returns 2 matches and extracts L1 and L2. Here you see clearly the limitations of the model of Strus. But we got through this time by rearranging the expressions.

The method to create the summarization features for non neighbour terms in the query

# Helper method to define the query features created from terms

# of the query string, that are not following subsequently in the query:

def __defineNonSubsequentQueryTermFeatures( self, query, term1, term2):

# Pairs of terms not appearing subsequently in the query are

# translated into two query expressions:

# 1) search for the terms in a distance smaller than 5 inside

# a sentence, weight 1.6,

# where d ist the distance of the terms in the query.

# The summarizer extracts entities within a distance

# of 50 in the same sentence

# 2) search for the terms in a distance smaller than 20 inside

# a sentence, weight 1.2,

# where d ist the distance of the terms in the query.

# The summarizer extracts entities within a distance

# of 50 in the same sentence

expr = [

[ "within_struct", 5, ["sent",''], term1, term2 ],

[ "within_struct", 20, ["sent",''], term1, term2 ]

]

weight = [ 1.6, 1.2 ]

ii = 0

while ii < 2:

# The summarization expression attaches a variable referencing

# the entity to extract.

# CONTINENT terms of type 'continent_var':

sumexpr = [ "chain_struct", 50, ["sent",''],

[{'variable':"CONTINENT"}, "continent_var", ""],

expr[ ii] ]

query.defineFeature( "sumfeat", sumexpr, weight[ ii] )

sumexpr = [ "sequence_struct", -50, ["sent",''],

expr[ ii],

[{'variable':"CONTINENT"}, "continent_var", ""]

]

query.addFeature( "sumfeat", sumexpr, weight[ ii] )

ii += 1

The method to create the summarization features for a single term query

# Helper method to define the query features created from a single

# term query:

def __defineSingleTermQueryFeatures( self, query, term):

# Single term query:

expr = [ term['type'], term['value'] ]

# The summarization expression attaches a variable referencing an

# the entity to extract.

# CONTINENT terms of type 'continent_var':

sumexpr = [ "chain_struct", 50, ["sent",''],

[{'variable':"CONTINENT"}, "continent_var", ""],

expr ]

query.addFeature( "sumfeat", sumexpr, 1.0 )

sumexpr = [ "sequence_struct", -50, ["sent",''],

expr,

[{'variable':"CONTINENT"}, "continent_var", ""]

]

query.addFeature( "sumfeat", sumexpr, 1.0 )

The query evaluation method

Here we finally come to the definition of the query evaluation method based on the 3 helper methods to construct the summarization features for entity extraction introduced before:

# Query evaluation method that builds a ranked list from the best weighted entities

# extracted from sentences with matches:

def evaluateQueryEntities( self, querystr, firstrank, nofranks):

queryeval = self.queryeval[ "NBLNK"]

query = queryeval.createQuery( self.storage)

terms = self.queryAnalyzer.analyzeTermExpression( [ "text", querystr ] )

if len( terms) == 0:

# Return empty result for empty query:

return []

# Build the weighting features. Queries with more than one term are building

# the query features from pairs of terms:

if len( terms) > 1:

# Iterate on all permutation pairs of query features and creat

# combined features for summarization:

for pair in itertools.permutations(

itertools.takewhile(

lambda x: x<len(terms), itertools.count()), 2):

if pair[0] + 1 == pair[1]:

self.__defineSubsequentQueryTermFeatures( query, terms[pair[0]], terms[pair[1]])

elif pair[0] < pair[0]:

self.__defineNonSubsequentQueryTermFeatures( query, terms[pair[0]], terms[pair[1]])

else:

self.__defineSingleTermQueryFeatures( query, terms[0] )

# Define the selector ("selfeat") as the set of documents that contain all query terms

# and define the single term features for weighting and candidate evaluation ("docfeat"):

selexpr = ["contains", 0, 1]

for term in terms:

selexpr.append( term )

query.addFeature( "docfeat", term, 1.0)

query.addFeature( "selfeat", selexpr, 1.0 )

# Evaluate the ranked list for getting the documents to inspect for entities close to matches

query.setMaxNofRanks( 300)

query.setMinRank( 0)

results = query.evaluate()

# Build the table of all entities with weight of the top ranked documents:

entitytab = {}

for pos,result in enumerate(results['ranks']):

for summary in result['summary']:

if summary['name'] == 'ENTITY':

weight = 0.0

if summary['value'] in entitytab:

weight = entitytab[ summary['value'] ]

entitytab[ summary['value']] = weight + summary['weight']

# Extract the top weighted documents in entitytab as result:

heap = []

for key, value in entitytab.items():

heapq.heappush( heap, [value,key] )

topEntities = heapq.nlargest( firstrank + nofranks, heap, lambda k: k[0])

rt = []

idx = 0

maxrank = firstrank + nofranks

for elem in topEntities[firstrank:maxrank]:

rt.append({ 'weight':elem[0], 'title':elem[1] })

return rt

<!--EndFragment-->

The complete strusIR module

Our strusIR module is now complete.

Step 9: Restart the server and insert the documents with CURL

Now as we have replaced our information retrieval module with a real implementation, we can insert the documents. First we have to restart the server, so that it loads our new strusIR module. We start it in the background, because we need the commandline afterwards to insert the documents:

python3 strusServer.py &

and we get

Starting server ...

Listening on port 80

Then we can insert the document with a CURL command:

curl -X POST -d @countries.xml localhost:80/insert --header "Content-Type:text/xml"

and we get

OK 201

Step 10: Check the query results

Now as we have a real search engine with an inserted document collection running, lets issue some queries with our favorite browser:

http:

we get:

This result shows the country documents weighting the occurrence of the query term 'spanish' with the BM25 weighting scheme.

If you search with NBLNK with this query string:

http:

you get

This result shows the continents ranked by a weight calculated matching the query against the sentences where the continent entity appears. In case of a single term query this is the the number of countries owhere the entity appears in the same sentence. In our example this is the number of countries where Spanish is spoken. For multiterm queries like "local language" we get, with the weighting scheme introduced, a weight that is artificial:

It is a trivial task to extract these entities from documents to weight them, but the weighting scheme introduced can also be applied to huge collections of data, if the number of documents inspected by summarizers can be restricted to a reasonable size.