Introduction

A previous article I wrote on 'DBA Skills for Developers' gave a round up of various tips and tricks to make the life of a developer who had 'inherited' the task of in-house DBA hopefully a bit better. I was going to expand that article and add information on importing data to SQL server, but decided it is a topic and article that will grow over time so I decided to break it out on its on - and this is it.

Importing data to SQL Server

While the wonderful amazing database those lovely people who pay you designed trundles along and does its thing, generating its own data from simple in-app crud and pulling data perhaps from web-services and other cool sources, the day will come when you are presented with a big lump of flat-file style data in the format perhaps of CSV or XML, and told - import that please.... #groan

I have found that its generally better to work with the tools provided by the clever people who designed and maintain MS SQL server. For this reason, when presented with flat file data, I tend to turn to three methods of importing data depending on the type and format of data.

(1) Bulk CSV data loader

Bulk Insert is a transact SQL statement that allows you to call and import data from the SQL command line. Typically I use this inside stored procedures that are either manually called or triggered on a schedule.

Here's an example... lets assume we have the following table already in-place:

create table SomeImportTestTable

(ID int,

PersonsName varchar(50),

Country varchar(100))

and a file called 'c:\import\testdata.csv' that contains data like this:

1, AJSONDEV, United Kingdom

2, Mahabir, India

3, Joanna, Netherlands

To get this data in and sitting in our table, we call bulk import, like this...

bulk insert SomeImportTestTable

from 'c:\import\testdata.csv'

with

(Fieldterminator = ','

RowTerminator = '\n')

It will import as expected into our table

ID PersonsName Country

----------------------------------------------

1 AJSONDEV United Kingdom

2 Mahabir India

3 Joanna Netherlands

You will have seen above, that there are options to allow you to specify the delimiter between fields, line terminator, etc. Learn more about Bulk CSV loader here: https://msdn.microsoft.com/en-GB/library/ms188365.aspx

(2) BCP data import utility

Sometimes, you can't get inside the database to build and run a script for whataver reason - in this case, you can call on bulk imports best friend, the BCP utility. This little nugget is a command line utility that basically does the same thing as the bulk import statement (I have read somewhere that it calls the bulk import utility but I've not confirmed that thus far). BCP can be used by not only those who speak the dark tongue of SQL, but pretty much anyone who can hack together a command line script - the reason for this is that it is very much command line/switch driven.

Bringing data from a CSV file into your database is a snap...

bcp MyDatabaseName.SomeImportTestTable in c:\import\testdata.csv -t, -U MyLoginName

Prety simple - call the utility name, followed by the name of the database/table you wish to import into, the keyword 'IN' (thats the direction!), the '-t' tells the utility its tab delimited, and the '-U' says 'next token is my username for login'. Once you click <enter>, the utility will ask for your password and then execute.

As well as using the BCP utility to bring data into a database, you can also use it to get data out. This MSDN example illustrates:

bcp AdventureWorks2012.Sales.Currency out "Currency Types.dat" -T -c

Learn more about the BCP utility at this link: https://msdn.microsoft.com/en-gb/library/ms162802.aspx

NB: Gotchas with importing CSV data

You know that feeling when you learn a new skill or tool and you are on the honeymoon period and everything is rosy in the garden and you cant wait to get up and smell the roses and boy oh boy are they just wonderful ... <breathe!!> ... UNTIL IT ALL JUST GOES FOOBAR !!!! #OhDear .... right - heres some hard lessons learned about importing CSV style data into SQL...

(a) watch the integrity of your delimiters ... that is, ensure every object that needs to be wrapped with a comma is wrapped, and every record object that needs to be separated by a comma is separated ... also (!!!!!!) ... look for any data that *breaks* the format ... by this I mean a row using single quotes to isolate data tokens, that are effectively broken by the user data containing the same delimiter somewhere..

example - working: '1','AJSONDEV','United Kingdom'

example - NOT working: '1','AJSON O'DEV','United Kingdom'

in the non working example above, note that AJSON has gone all Irish on us and is now Ajson O'Dev (begorrah!) ... unfortunately, the single quote separating the "O" and "Dev" is also the delimiter so the import will break.

(b) watch the data quality being imported and watch out for any odd looking characters (non expected non standard ascii for example) that could break the import.

So what to do about it? ... I generally use one of two approaches, depending ont he severity of how I see import failures as my project progresses. Some data by its nature comes in clean, other data is just downright messy. The first approach is generally to use a white-list of characters actually allowed to get imported, and pre-parse the csv file to enforce the list (eg: only allow 'A..z', '0..9'). The second approach which is a bit more work and heavy handed, is to take each token due to be imported, encode it (I have used base64 at times for this), import, then decode. The latter however allows any spurious characters to be transferred and gives me the least amount of pain once set up correctly.

(3) XML data import

SQL Server gives a lot of flexibility when dealing with XML data, but you have to duck and dive at times to make things happen. if you have an xml file that you wish to import, where the data is neatly structured in easy to get to nodes, then you are in luck - things will run very smoothly for you. If on the other hand you have numerous child nodes, lots of deep trees etc, then you have to seat a bit to get at what you require. You have the option to take an entire XML file from disk, and store that into a special table column with type XML, or, load up an XML file, query its contents, and extract these and insert them into a standard table for regular plain old sql manipulation.

Example 1 - simple import to XML type field.

Assuming we have a table with a column with type XML, then we can use the following SQL command to import a file 'testdata.txt' into that column.

Insert into MyTestImportTable(XmlColumn)

select * from OpenRowSet( bulk 'c:\import\testdata.txt', single_blob ) as X;

(... oh goodness, looksee, its our friend 'BULK' doing the heavy lifting for us again .... how cool!)

Note that the data after execution, is now present *in XML format*, stored in one single column, and you still need work to extract the data and use it.

Example 2 - import to an VarChar/String variable

Sometimes we need to examine the XML as text, perhaps to pre-parse something before manipulating it as XML. This example shows how to load the file into a VarChar variable.

Select @fileData=BulkColumn

from OpenRowSet(Bulk 'C:\import\testdata.xml', Single_blob ) x;

The variable @fileData is now available, as a string, to manipulate as you see fit. To cast it back to an XML object, simply convert it.

Example 3 - import xml data and insert individual values to table row columns

Our input XML example would look like this:

<persons>

<person>

<id> 1 </id>

<personsname> AjsonDev </personsname>

<country> United Kingdom </country>

</person>

<person>

<id> 2 </id>

<personsname> Mahabir </personsname>

<country> India </country>

</person>

<person>

<id> 3 </id>

<personsname> Joanna </personsname>

<country> Netherlands </country>

</person>

</persons>

The following SQL will open the file, and read the data into individual record columns.

Declare @fileData XML

Select @fileDataX=BulkColumn from OpenRowSet(Bulk'C:\data\hpra\latestVMlistX.xml',Single_blob) x;

insert into SomeImportTestTable

(ID, PersonsName, Country)

select

xData.value('ID[1]','int') ID,

xData.value('PersonsName[1]','varChar(50)') PersonsName,

xData.value('Country[1]','varChar(100)') Country,

from @fileData.nodes('/Persons/Person')

Example 4 - extracting nested xml child data and flattening it out

For this example, we are going to look at an input XML file that has nested child nodes - lets look at that first:

<persons>

<person>

<id> 1 </id>

<personsname> AjsonDev </personsname>

<country> United Kingdom </country>

<languages>

<language> C# </language>

<language> JavaScript </language>

<language> Python </language>

<language> Objective-C </language>

<language> Pascal </language>

<language> F# </language>

<language> R </language>

</languages>

</person>

<person>

<id> 2 </id>

<personsname> Mahabir </personsname>

<country> India </country>

<languages>

<language> C# </language>

<language> JavaScript </language>

<language> C++ </language>

<langauge> VB

<language> SQL </language>

</langauge></languages>

</person>

<person>

<id> 3 </id>

<personsname> Joanna </personsname>

<country> Netherlands </country>

<languages>

<language> Dutch </language>

<languages>

</languages></languages></person>

</persons>

What we want to achieve, is to extract the core data (Name, Country), but instead of having to construct another table (1:M) to store the languages, or put them into an XML field in our table, we decide to flatten the languages out by comma delimiting into one field. Like most things in development, there are many ways to approach this. In this example, I show how to use xpath navigation to first go deep and extract all of the child nodes in one line, then use '..' notation to walk back up the xml tree and pick out the parent data we require.

insert into SomeImportTestTable

(ID, PersonsName, Country, Languages)

SELECT

xData.value('../ID[1]','int') ID,

xData.value('../PersonsName[1]','VARCHAR(50)') PersonsName,

xData.value('../Country[1]','varchar(100)') Country,

xData.value('(.)[1]', 'varchar(1000)')

FROM @fileDataX.nodes('./Persons/Person/Languages') as

x(xData)

When the data is extracted, it will go as normal into the ID,Person and Country fields, however, the 'Languages' data will include each language, separated by a line-break. In this case to delineate the data, I may choose to separate out the tokens with a comma.

NB: XML Gotcha! XML can be really simple, or as complex as you can make it to deal with. Sometimes there are things that cause problems when trying to load up XML data using the methods described here. Recently, I had a problem with a namespace issue, where when the namespace was present, the data would not load, when it was taken out, it would load. It needed to be referenced correctly it seemed. If you encounter issues like this, you have two choices:

(a) you can reference the xml explicitly, for example, by using with XMLNameSpaces, however, for me, this didn't work, so I decided to just strip out the link as I didn't need it.

(b) My quick/dirty solution was to load the XML as a VarChar, and then remove the NameSpace string. Having done that, I converted the varchar back to an XML type, and proceeded to work as required.

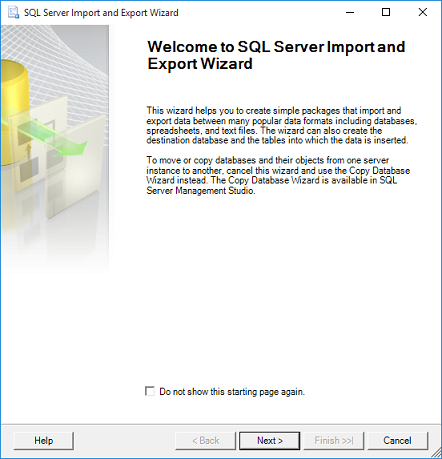

Import/export wizard

Even in the lowest version of MS SQL Server, there is some GUI assistance with data import where you can avoid coding (why?!! :P) ... as you would expect however, there are some restrictions, the main being that you cannot save your ETL plan. Regardless, when you need to get something done in a hurry, this tool is very useful.

Quote:

In SQL Server Express, Web, or Workgroup, you can run the package that the Import and Export Wizard creates, but cannot save it. To save packages that the wizard creates, you must upgrade to SQL Server Standard, Enterprise, Developer or Evaluation.

Using SQL Express, from the 'All programs' menu, select 'SQL Server Import and Export Wizard' - its very laid out and self explanatory, so select a data source, edit if neccessary any parameters relating to the parsing and mapping of the incoming data, select a data destination, and let it fly!

(1) Start wizard

(2) choose data source

(3) specify data source type

(4) edit any import parameters and mappings between source and destination

(5) confirmation source/destination

(6) Run the import!

Advanced BCP

Earlier in the article, we looked briefly at using the BCP utility to import adn export data. There is however more to it than meets the eye, and if you start using the utility heavily, its worth delving a bit more into it to see how else it can help you. Here are some of the switches I use most frequently:

- -a packet_size

-

this tells the utility the number of bytes to (per network packet transfer) to use when exchanging data to/from the server. The default is 4096, but I have found for large datasets that increasing this size can give increased performance on import routines.

- -b batch_size

-

you can use this flag to pass through the total number of rows that should be processed for import at one time (a batch). This is *extremly*useful when you are dealing with potentially dodgy data, where an import continuously breaks and you need to (a) track it down and (b) isolate the issue so the rest of the data can keep flowing. This switch ensures that should a batch fail, the rest of the process will continue - in addition, any actions carried out because of the transaction will be rolled back.

- -e err_file

-

I use this always in conjunction with the -b above. It gives the utility a location where I want it to write log files of any errors encountered, it will output any rows that gave errors and were not imported. Big gotcha! ... if you *dont* include this when using the batch-size option, then a log file will not be created and you dont know what was left out of the import should anything go wrong!

- -F first_row

-

This one is sweet, and can be used in combination with the -L ('last row' below) - in fact using them both together is similar to the pattern of 'skip/take' we use when dealing with paging scenarios. This switch tells the utility where to start importing the first row of data.

- -L last_row

-

The otherside of first_row, it indicates what the last row number to import should be. Using -F and -L together, allow you to extract a chunk of data in the middle of a large import file while ignoring the rest.

- -m max_errors

-

Clearly there is no point in processing a file if it is full of errors, and you may decide you can only accept a certain number or percentage of errors before baulking out. -m allows you to specify this number. An fail occurs when a row of data cannot be imported for whatever reason.

- -t field_term

-

Depending on the source of your data, the field data in each row may be delimited by a tab, comma, pipe, etc. This switch allows you to tell BCP what the terminator is. The default is \t (tab character).

Selective export

Sometimes I only need to take parts of data from a table, and not export the entire thing. To assist with this, we can use the 'queryout' switch.

examples:

bcp "SELECT Name FROM SomeTable" queryout MyExportedFile.txt -T

bcp "SELECT * FROM Country WHERE PopulationMillion > 10 AND ExportKGMonth > 170 " queryout "CountryExports.dat" -T

Data im/ex in Cloud

As we move more data and services to the cloud, we are faced with coming up different ways of doing things from the desktop and traditionally hosted server. Regardless of the platform however, the requirement to import/export csv/xml/etc formatted data remains. Sometimes it may not seem obvious how to do things and you have to come up with various workarounds that fit the situation. Here are some things I have done to assist with data import/export on both Azure and other cloud hosted environments.

Setup an external drive.

One of the solutions I use is to create a virtual drive that is external to my SQL VM, and use this as a staging point for data. I can interact directly with SQL to work with the data on the drive, or, hook it in as a mapped drive to the SQL VM and use it for automated jobs such as backup. I have an article here on scheduled backup of Azure based SQL using this method.

Remote into your drive

There are various tools available to hook into cloud storage - the one I mostly use is Azure Cloudberry Explorer from Cloudberry Labs. (nb: there are versions for EC2 etc as well). The freeware version is extremly capable and will do most things you need for facilitating basic im/ex. If you require more power, then their pro version is a sizable step up and is very reasonably priced for the features it has, and importantly, the time it saves you.

(the screenshot above from cloudberry shows local file explorer on the left, and the remote virtual drive on the right).

Other methods

There are a number of other approaches to loading data into SQL server - I will cover them off in a later update to this article. Among those to consider would be SSIS, which allows you to load blocks of data in parallel, always a good thing when you are dealing with large volumes of data

Here are some interesting resources worth checking out about SSIS:

I have attached both an SQL script and test XML data to demonstrate the code working - feel free to download and try it. Finally, If you liked the article, please give it a vote at the top of the page!

History

21 Jan 2016 - Version 1

10 Feb 2016 - Version 2 - added im/ex wizard

17 Feb 2016 - Version 3 - added Azure/cloud section, added section on advanced BCP im/ex

12 Apr 2016 - Version 4 - Updated code, added sample SQL download script