Introduction

In Part 1, I introduced dotNetify, a C#/JavaScript library for real-time, interactive web application development that provides MVVM-styled data binding over SignalR with the following major benefits:

- allows you to architect your web application with a higher degree of separation of concerns: client-side only holds UI component-specific logic, while the UI-agnostic presentation and business logic remains server-side.

- eliminates the need to implement a RESTful service layer, making your code leaner and more maintainable.

- the bulk of your logic will be on the server side, where you can take full advantage of the better language/tooling support in C#/.NET, which makes it much easier to apply SOLID principles.

The dotNetify library is not limited to ASP.NET, however. In this Part 2, I pick up the real-time web chart example from Part 1 and develop it further into a simple Process Monitor that provides an interactive UI in the browser and can be hosted either in a Windows service or self-hosted on a Linux box with the help of Mono.

In place of ASP.NET, I will use Nancy, an open-source, lightweight web framework that's perfect for these kinds of applications. Nancy will be hosted on top of OWIN.

Architecture

The simple Process Monitor we are going to build will be a list of processes that can be filtered by name. When a process is selected on the list, we will show two charts, one for CPU usage and one for memory usage, and the charts will update every second. We will use the APIs provided by System.Diagnostics to get all the data we need.

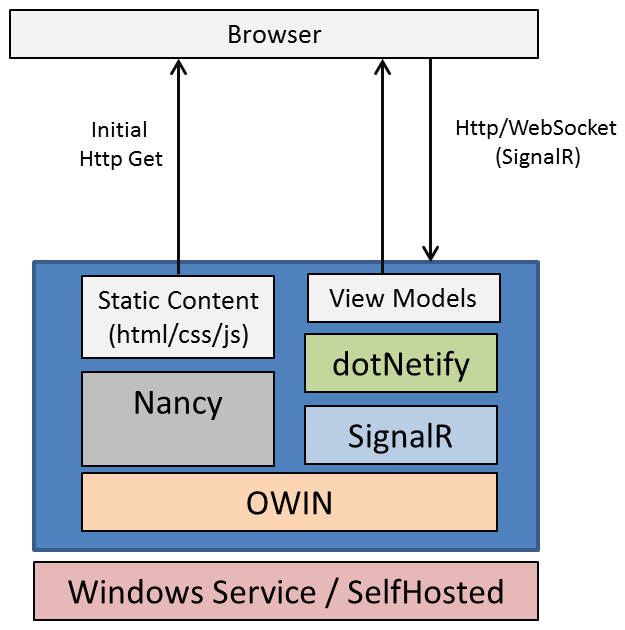

The following diagram shows the components. We will divide the solution into three projects: the principal class library, which references the dotNetify and Nancy libraries and their dependencies; the self-host console app shell; and the Windows service shell.

![Diagram](http://www.codeproject.com/KB/Articles/17094/Diagram.png)

Nancy basically answers the browser's initial HTTP request with the static files (HTML, styles, scripts), and once the dotNetify script runs on the client side, further two-way communication is done through SignalR.

Building the Solution

Here, I will take you through setting up a Visual Studio 2015 solution, with the libraries included using NuGet.

Setting Up Class Library

Let's start by creating a brand new Class Library project and name it DemoLibrary, then use the NuGet Package Manager Console to install the following packages:

Install-Package Microsoft.Owin.Hosting

Install-Package Nancy.Owin

Install-Package DotNetify

In the project, create an OWIN startup file and initialize the libraries.

using System;

using Microsoft.Owin;

using Microsoft.Owin.Hosting;

using Owin;

using DotNetify;

[assembly: OwinStartup(typeof(DemoLibrary.Startup))]

namespace DemoLibrary

{

public class Startup

{

public void Configuration(IAppBuilder app)

{

app.MapSignalR();

app.UseNancy();

VMController.RegisterAssembly(GetType().Assembly);

}

public static IDisposable Start(string url)

{

return WebApp.Start<Startup>(url);

}

}

}

Next, create a Nancy module to receive the HTTP requests. As you can see, its only job is to respond to the root URL request by providing the HTML View.

using Nancy;

namespace DemoLibrary

{

public class HomeModule : NancyModule

{

public HomeModule()

{

Get["/"] = x => View["Dashboard.html"];

}

}

}

Nancy has pre-configured places where it will look for static files, including folders with the names "Content" and "Views". So that's where we'll put our CSS files (under Content) and HTML files (under Views). However, "Scripts" doesn't seem to be part of Nancy's convention, so we will need to tell Nancy to recognize it too by adding a Nancy bootstrapper file:

using Nancy;

using Nancy.Bootstrapper;

using Nancy.Conventions;

using Nancy.TinyIoc;

namespace DemoLibrary

{

public class Bootstrapper : DefaultNancyBootstrapper

{

protected override void ApplicationStartup(TinyIoCContainer container, IPipelines pipelines)

{

base.ApplicationStartup(container, pipelines);

this.Conventions.StaticContentsConventions

.Add(StaticContentConventionBuilder.AddDirectory("Scripts"));

}

}

}

When the project is compiled, we need the static files to be copied to the Bin folder as well, so we will now add the following commands to the Post-Build event in the project settings:

xcopy /E /Y "$(ProjectDir)Views" "$(ProjectDir)$(OutDir)Views\*"

xcopy /E /Y "$(ProjectDir)Content" "$(ProjectDir)$(OutDir)Content\*"

xcopy /E /Y "$(ProjectDir)Scripts" "$(ProjectDir)$(OutDir)Scripts\*"

Since we will create other projects, let's have them all compiled to the same Bin folder by changing the output path to ..\Bin. Now we're ready to implement our process monitor logic.

View Models

The first view model will be the one that presents the process list.

using System;

using System.Collections.Generic;

using System.Linq;

using System.Diagnostics;

using DotNetify;

namespace DemoLibrary

{

public class ProcessVM : BaseVM

{

public class ProcessItem

{

public int Id { get; set; }

public string Name { get; set; }

}

public string SearchString

{

get { return Get<string>(); }

set

{

Set( value );

Changed( () => Processes );

}

}

public List<ProcessItem> Processes

{

get

{

return Process.GetProcesses()

.Where( i => String.IsNullOrEmpty( SearchString ) ||

i.ProcessName.StartsWith( SearchString,

StringComparison.InvariantCultureIgnoreCase ) )

.OrderBy( i => i.ProcessName )

.ToList()

.ConvertAll( i => new ProcessItem { Id = i.Id, Name = i.ProcessName } );

}

}

public int SelectedProcessId

{

get { return Get<int>(); }

set

{

Set( value );

Selected?.Invoke( this, value );

}

}

public event EventHandler<int> Selected;

}

}

Every view model derives from BaseVM, which provides the implementation of INotifyPropertyChanged and the associated Get, Set, and Changed methods. There are 3 properties that will be bound to the controls on the View:

- SearchString, bound to a text box for the incremental search. When the user types, this property will receive the characters and raise the changed event on the Processes property.

- Processes, bound to a list view that displays the process IDs and names.

- SelectedProcessId, bound to the click event on any list item. On change, this will raise the Selected event.
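The incremental search rule inside the Processes getter is easy to check in isolation. The sketch below restates it in TypeScript, the language this article uses for its client-side code-behind. The `filterProcesses` helper and the sample data are hypothetical names introduced for illustration only; they are not part of dotNetify, and in the actual application the filtering stays on the server in C#.

```typescript
// Illustrative only: mirrors the rule in the C# ProcessVM.Processes getter.
// ProcessItem matches the C# class; filterProcesses is a hypothetical helper.
interface ProcessItem {
  Id: number;
  Name: string;
}

function filterProcesses(all: ProcessItem[], searchString: string | null): ProcessItem[] {
  const prefix = (searchString || "").toLowerCase();
  return all
    // An empty search string matches everything; otherwise match by prefix, ignoring case.
    .filter(p => prefix === "" || p.Name.toLowerCase().indexOf(prefix) === 0)
    // filter() already returned a new array, so sorting does not touch the input.
    .sort((a, b) => a.Name.toLowerCase().localeCompare(b.Name.toLowerCase()));
}

const sample: ProcessItem[] = [
  { Id: 4, Name: "System" },
  { Id: 812, Name: "svchost" },
  { Id: 3020, Name: "chrome" },
  { Id: 977, Name: "Skype" }
];

// Typing "s" keeps Skype, svchost and System, in that order.
const matches = filterProcesses(sample, "s");
```

Because every keystroke re-evaluates the whole list, the rule has to be cheap; a prefix match on the process name keeps it that way.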

Next is the view model that presents the CPU usage chart:

using System;

using System.Diagnostics;

using DotNetify;

namespace DemoLibrary

{

public class CpuUsageVM : BaseVM

{

private PerformanceCounter _cpuCounter =

new PerformanceCounter

( "Processor", "% Processor Time", "_Total" );

public string Title

{

get { return Get<string>() ?? "Processor Time"; }

set { Set( value ); }

}

public double Data

{

get { return Math.Round( (double) _cpuCounter.NextValue(), 2 ); }

}

public int ProcessId

{

set

{

var processName = GetProcessInstanceName( value );

if ( processName != null )

{

_cpuCounter.Dispose();

_cpuCounter = new PerformanceCounter

( "Process", "% Processor Time", processName );

Title = "Processor Time - " + processName;

}

}

}

public void Update()

{

Changed( () => Data );

PushUpdates();

}

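private string GetProcessInstanceName( int id )

{

// The body is omitted in this listing. What follows is one possible

// implementation (an assumption): scan the "Process" category for the

// instance whose "ID Process" counter matches the given process ID.

foreach ( var name in new PerformanceCounterCategory( "Process" ).GetInstanceNames() )

{

try

{

using ( var idCounter = new PerformanceCounter( "Process", "ID Process", name, true ) )

if ( (int) idCounter.RawValue == id )

return name;

}

catch ( InvalidOperationException ) { /* process exited during the scan */ }

}

return null;

}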

}

}

As in the example in Part 1, the property Data will be bound to the chart control, but we won't put the timer that updates every second here, since we want to use the same timer on the view model for the memory usage chart. Therefore, this class will just provide a public method to raise the changed event on the property and push the update to the client.

The ProcessId property setter switches the data source to the process of a given ID. This property will be set in response to a process being selected on the process list view.

The MemoryUsageVM class follows a similar construct and needs no further narrative. That brings us to the last view model, which requires a bit of explanation.

Master View Model

So far, we have three view models that present distinct views on the web page. If they didn't need to interact, that would be all there is to it. But we do want them to interact, so that when the user selects a process in the process view, the charts switch their data source to that process. There needs to be a way for us to establish an observer pattern between the view models.

By default, dotNetify instantiates view models independently of each other and only on request, but to support exactly this requirement, it introduces the concept of a master view model. When the client requests a view model that's associated with a master view model, the instantiation request goes to the master view model to be fulfilled, which gives it the opportunity to do all sorts of initialization on the instances.

The following master view model implements GetSubVM to provide instances of the above view models, initialized so that the ProcessVM instance is observed and the other view models are notified when a process is selected. The timer is also placed here; every second it calls the Update method of the chart view models so they can push their updates to the client.

using System.Timers;

using DotNetify;

namespace DemoLibrary

{

public class DashboardVM : BaseVM

{

private Timer _timer;

private MemoryUsageVM _memoryUsageVM = new MemoryUsageVM();

private CpuUsageVM _cpuUsageVM = new CpuUsageVM();

private ProcessVM _processVM = new ProcessVM();

public DashboardVM()

{

_processVM.Selected += ( sender, e ) =>

{

_cpuUsageVM.ProcessId = e;

_memoryUsageVM.ProcessId = e;

};

_timer = new Timer( 1000 );

_timer.Elapsed += Timer_Elapsed;

_timer.Start();

}

public override void Dispose()

{

_timer.Stop();

_timer.Elapsed -= Timer_Elapsed;

base.Dispose();

}

public override BaseVM GetSubVM( string vMTypeName )

{

if ( vMTypeName == typeof( CpuUsageVM ).Name )

return _cpuUsageVM;

else if ( vMTypeName == typeof( MemoryUsageVM ).Name )

return _memoryUsageVM;

else if ( vMTypeName == typeof( ProcessVM ).Name )

return _processVM;

return base.GetSubVM( vMTypeName );

}

private void Timer_Elapsed( object sender, ElapsedEventArgs e )

{

_memoryUsageVM.Update();

_cpuUsageVM.Update();

}

}

}
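The wiring inside DashboardVM boils down to an observer relationship plus a lookup by type name. As a framework-free illustration, here is a minimal TypeScript sketch of that pattern. Every class in it (`ProcessList`, `UsageChart`, `Dashboard`) is a hypothetical stand-in rather than dotNetify's API, and the timer is replaced by an explicit `tick()` call so the flow is easy to follow.

```typescript
// Hypothetical stand-ins for the C# view models; none of this is dotNetify API.
type SelectedHandler = (processId: number) => void;

class ProcessList {
  private handlers: SelectedHandler[] = [];

  onSelected(handler: SelectedHandler): void {
    this.handlers.push(handler);
  }

  // Plays the role of the SelectedProcessId setter raising the Selected event.
  select(processId: number): void {
    this.handlers.forEach(h => h(processId));
  }
}

class UsageChart {
  processId: number | null = null; // null means no process has been selected yet
  updates = 0;

  // Plays the role of Update(): raise the change and push it to the client.
  update(): void {
    this.updates++;
  }
}

class Dashboard {
  private processes = new ProcessList();
  private cpu = new UsageChart();
  private memory = new UsageChart();

  constructor() {
    // The master subscribes once, so the sub view models never reference each other.
    this.processes.onSelected(id => {
      this.cpu.processId = id;
      this.memory.processId = id;
    });
  }

  // Counterpart of GetSubVM: hand out the pre-wired instances by type name.
  getSubVM(typeName: string): ProcessList | UsageChart | null {
    if (typeName === "ProcessVM") return this.processes;
    if (typeName === "CpuUsageVM") return this.cpu;
    if (typeName === "MemoryUsageVM") return this.memory;
    return null;
  }

  // Counterpart of Timer_Elapsed, called explicitly here instead of by a timer.
  tick(): void {
    this.memory.update();
    this.cpu.update();
  }
}

const dashboard = new Dashboard();
const processList = dashboard.getSubVM("ProcessVM") as ProcessList;
const cpuChart = dashboard.getSubVM("CpuUsageVM") as UsageChart;

processList.select(1234); // both charts now point at process 1234
dashboard.tick();         // one simulated second: each chart pushes one update
```

The point of the design is that only the master knows about all three view models, so each of them stays reusable on its own.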

View

We will now create the HTML view in Dashboard.html and put the file under the Views folder. What's shown here has been trimmed, mostly of its Twitter Bootstrap styling (.css file placed in Content/css), to focus on just the things of interest.

<!DOCTYPE html>

<html>

<head>

<link href="/Content/css/bootstrap.min.css" rel="stylesheet">

<script src="/Scripts/require.js"

data-main="/Scripts/app"></script>

</head>

<body>

<div data-master-vm="DashboardVM">

<div class="row">

<!-- Process list -->

<div data-vm="ProcessVM" class="col-md-5">

<div class="input-group">

<span>Search:</span>

<input type="text" data-bind="textInput: SearchString" />

</div>

<div>

<table>

<thead>

<tr>

<th>ID</th>

<th>Process Name</th>

</tr>

</thead>

<tbody data-bind="foreach: Processes">

<tr data-bind="css:

{ 'active info': $root.SelectedProcessId() == $data.Id() },

vmCommand: { SelectedProcessId: Id }">

<td><span data-bind="text:

Id"></span></td>

<td><span data-bind="text:

Name"></span></td>

</tr>

</tbody>

</table>

</div>

</div>

<div class="col-md-5">

<!-- CPU usage chart -->

<div data-vm="CpuUsageVM">

<div class="panel-heading">

<span data-bind="text: Title"></span>

<div><span data-bind="text:

_currentValue"></span><span>%</span></div>

</div>

<div class="panel-body">

<canvas data-bind="vmOn: { Data: updateChart }"></canvas>

</div>

</div>

<!-- Memory usage chart -->

<div data-vm="MemoryUsageVM">

<div class="panel-heading">

<span data-bind="text: Title"></span>

<div><span data-bind="text:

_currentValue"></span><span> KBytes</span></div>

</div>

<div class="panel-body">

<canvas data-bind="vmOn: { Data: updateChart }"></canvas>

</div>

</div>

</div>

</div>

</div>

</body>

</html>

The data-vm attribute marks the scope of the named view model as explained in Part 1, but now these elements are nested inside an element with the data-master-vm attribute, which puts them under the scope of the named master view model.

Throughout this view, standard Knockout data binding notations are used to bind various markups to the view model properties, i.e., textInput, foreach, css, and text. DotNetify adds its own custom bindings:

- vmOn, which will call the updateChart JavaScript function on the Data changed event.

- vmCommand, used for command binding. In the above case, when the table row is clicked, it sends the value of the Id property of the associated process item to SelectedProcessId.

Code-Behind

For rendering the charts on the canvas elements, the view uses the Chart.js library (.js file placed in Scripts), which has to be initialized and updated with JavaScript. In Part 1, this was referred to as code-behind, written in the module pattern; it is basically the client-side logic of your application that handles UI-specific components. Here's the same JavaScript converted to TypeScript.

declare var Chart: any;

class LiveChartVM {

_currentValue: number = 0;

updateChart(iItem, iElement) {

var vm: any = this;

var data = vm.Data();

if (data == null)

return;

if (vm._chart == null) {

vm._chart = this.createChart(data, iElement);

vm._counter = 0;

}

else {

vm._chart.addData([data], "");

vm._counter++;

if (vm._counter > 30)

vm._chart.removeData();

}

vm._currentValue(data);

vm.Data(null);

}

createChart(iData, iElement) {

var chartData = { labels: [], datasets: [{ data: iData }] };

return new Chart(iElement.getContext('2d')).Line(chartData);

}

}

We will reuse this code for both the CPU and Memory usage charts, simply by inheriting it:

class CpuUsageVM extends LiveChartVM {}

class MemoryUsageVM extends LiveChartVM {}
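The counter logic in updateChart amounts to a sliding window: the chart starts with one point, grows by one per update for the next 30 updates, and from then on every new point evicts the oldest, so 31 points stay on screen. The sketch below isolates that idea with a plain array in place of the Chart.js calls; `RollingWindow` is a hypothetical name used only for this illustration.

```typescript
// Hypothetical helper: the same sliding-window idea as updateChart, minus Chart.js.
class RollingWindow {
  private points: number[] = [];
  private capacity: number;

  constructor(capacity: number) {
    if (capacity < 1) throw new Error("capacity must be at least 1");
    this.capacity = capacity;
  }

  // Append the newest sample (like addData) and, once the window is full,
  // drop the oldest one (like removeData).
  push(value: number): void {
    this.points.push(value);
    if (this.points.length > this.capacity) {
      this.points.shift();
    }
  }

  values(): number[] {
    return this.points.slice(); // copy, so callers cannot mutate the window
  }
}

// updateChart keeps the initial point plus 30 more, i.e. 31 points in total.
const cpuWindow = new RollingWindow(31);
for (let second = 1; second <= 40; second++) {
  cpuWindow.push(second);
}
// cpuWindow.values() now holds the samples for seconds 10 through 40.
```

Bounding the window this way keeps the chart readable and stops the client from accumulating points for as long as the page stays open.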

The last thing to do for this class library is to include the transpiled JavaScript files in app.js, which is dotNetify's RequireJS config file. I placed the TypeScript files under /Scripts/CodeBehind.

require.config({

baseUrl: '/Scripts',

paths: {

"chart": "chart.min",

"live-chart": "CodeBehind/LiveChart",

"cpu-usage": "CodeBehind/CPUUsage",

"mem-usage": "CodeBehind/MemoryUsage",

},

shim: {

"live-chart": { deps: ["chart"] },

"cpu-usage": { deps: ["live-chart"] },

"mem-usage": { deps: ["live-chart"] }

}

});

require(['jquery', 'dotnetify','cpu-usage', 'mem-usage'], function ($) {

$(function () {

});

});

After compiling, make sure the JavaScript files were generated from the TypeScript files. I'm a bit paranoid about this because Visual Studio only builds them on save, and when you do a fresh get from the repository, they won't be built. That's why I usually include the transpiled JavaScript files when I check in.

Setting Up Self-Host Console App

We will now set up the console app to self-host the application. This is good for testing, and we can use it later to deploy to other operating systems using Mono. Create a new Console Application project, then:

- change the output path to ..\Bin.

- add a reference to the DemoLibrary.

- install this package:

Install-Package Microsoft.Owin.Host.HttpListener

Replace the class in Program.cs with this one, and you're good to go!

class Program

{

static void Main(string[] args)

{

var url = "http://+:8080";

using (DemoLibrary.Startup.Start(url))

{

Console.WriteLine("Running on {0}", url);

Console.WriteLine("Press enter to exit");

Console.ReadLine();

}

}

}

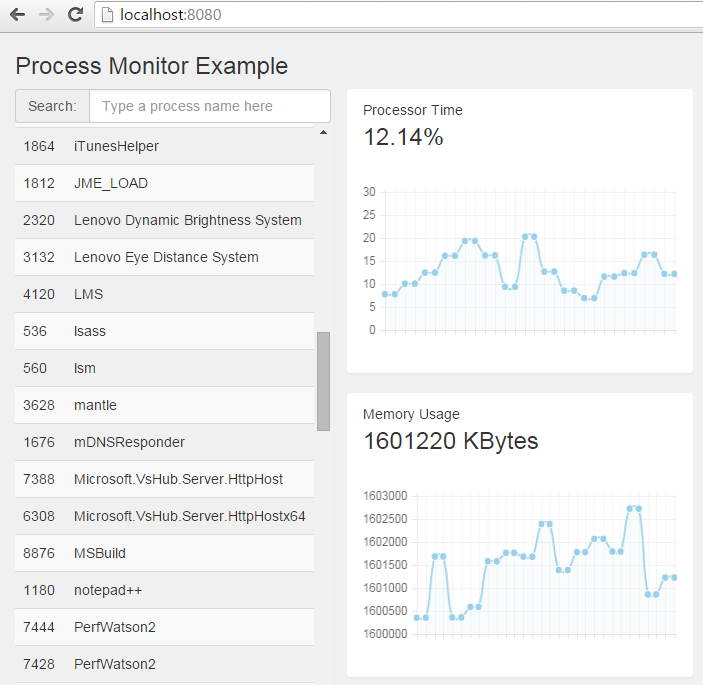

Build and run this app with Administrator privilege. Go to localhost:8080 on your browser, you should see the screen below:

Setting Up Windows Service

We're now ready to host this in a Windows service. Create a new Windows Service project, then do the same thing as the console app: change the output path to ..\Bin, add a reference to the DemoLibrary, and install the Microsoft.Owin.Host.HttpListener package. Then:

- open Service1.cs [Design], right-click and select Add Installer.

- on ServiceInstaller1 properties, set the service name.

- on ServiceProcessInstaller1 properties, change the account to LocalSystem.

- open Service1.cs and replace the class with the following:

public partial class Service1 : ServiceBase

{

IDisposable _demo;

public Service1()

{

InitializeComponent();

}

protected override void OnStart(string[] args)

{

var url = "http://+:8080";

_demo = DemoLibrary.Startup.Start(url);

}

protected override void OnStop()

{

if (_demo != null)

_demo.Dispose();

}

}

Build and register the service with InstallUtil.exe. Start the service and test it by going to localhost:8080 on the browser.

Cross-Platform With Mono

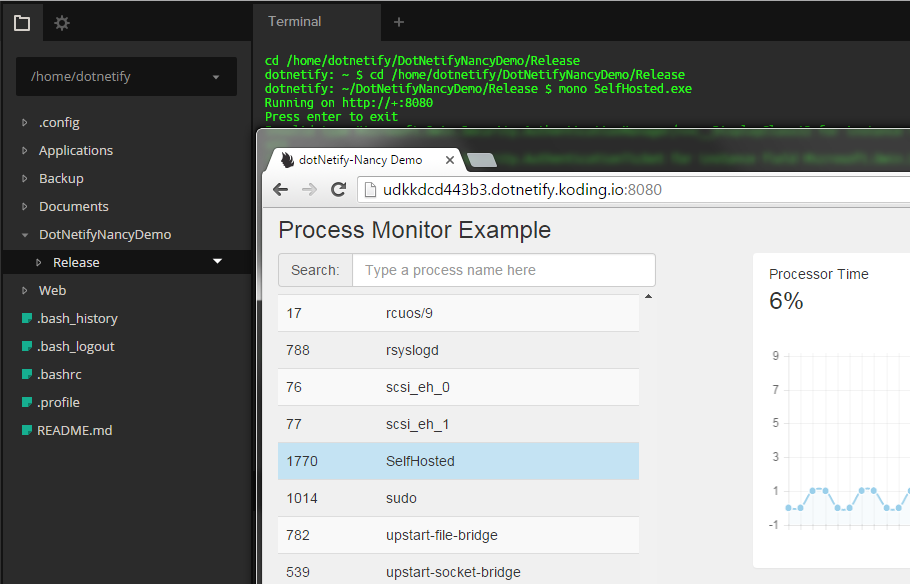

It doesn't take much to get this application to run on a Linux box. All you have to do is compile the console app project using Xamarin Studio (the free version will do), copy the entire Bin folder to that Linux box, install Mono, and just run the .exe with mono. Here's what it looks like when I ran it on the Koding's Ubuntu VM:

In this environment, the app was able to get process names and total CPU usage but not the individual process info, perhaps due to Mono's implementation of System.Diagnostics.

And so, this concludes the presentation. If you like what you see here, there are many more examples on dotNetify's website at http://dotnetify.net. It is a free, open-source project hosted on GitHub, where participation is welcome.
