Introduction

This article is about an experimental implementation for highly responsive web services that call one another to do service-reuse. It is based on the 'Fluid Services' idea that was published in the SOA Magazine. This implementation demonstrates how services can be made immune to increasing response time and scalability problems allowing higher reuse and composability of web services by using stream oriented communication as an alternative to message oriented communication in order to harness the parallelism among clients and servers on distributed architectures. This implementation is in no way a reference or the best way to implement such services. It is just provided as a toy project for architects and developers to observe and understand the behavior of such distributed architectures on a single box. Of course, in reality this approach would be beneficial when many distributed services are composed to create new services in an enterprise and larger scale systems of the future.

Background

When we implement web services, we usually ignore the possible future reuse of them. This is somewhat due to our humanly short sighted vision caused by immediate necessities of today. Another reason is, service reuse and composability with today's message-oriented services is not feasible due to technical limitations. When a service calls another, response times accumulate, throughput decreases and scalability is reduced or sometimes practically removed. What if service calls were as cheap as in-memory method calls? It is not hard to imagine that people would be much more motivated to do service reuse which would increase the value of current investments by reducing reimplementation. SOA encourages service reuse, but it doesn't show how. Starting from these facts, I have developed a proposal for service developers to adopt a stream oriented approach to reduce service response time and overlap service processing. This would make sure service delegation would not add huge amounts of delays to the total response time. This can be achieved by using a request/replies or requests/replies pattern rather than request/reply pattern. That is, the service is known to return multiple elements which is often the case. Thus, the service could push data back to its client as it produces results, and the client could process data as it receives them from a stream of elements. This is very similar to video/audio streaming but chunkier. This would allow us to harness the parallelism between clients and servers rather than just blocking them until each one is done. I have developed this idea targeting service-oriented architectures and published an article on SOA Magazine under the title 'Fluid Services' which you can read here.

The Scenario

The sample code demonstrates a scenario where the client calls a service which in turn calls another which in turn calls another, thus going 4 levels of call depth to get a set of patients. You may say this is not realistic. But keep in mind, the point is to make such call chaining much more practical and performant so that such level of reuse can be rendered possible.

The sample app allows you to lunch any number of services to simulate a given call-depth. Then you can invoke services to return a given number of elements. You can also set the initial and per element processing delays for each launched service host by using the provided textboxes. After launching services, we will compare two types of service invocations which return 1000 patients in buffered and overlapped modes. The buffered mode is a simulation of regular web services that you and me develop with today's best practices. The overlapped mode on the other hand uses streaming to write data while producing the data, and consume the data while receiving it.

Launching the Services

After building the solution, make sure TestLauncher is selected as the startup project and run it. If you are running it outside VS.NET, make sure you run the app as Administrator because it'll host services at ports starting from 9000.

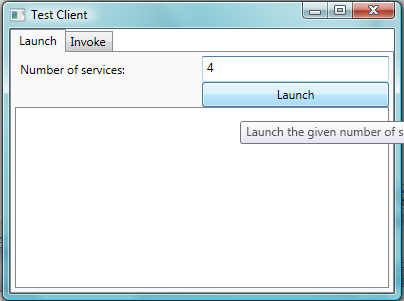

In the Launch tab, enter 4 for the 'Number of Services' text box.

Then click 'Launch' button to actually start the service host processes.

Each service is hosted on a different port and is designated with a number shown in the large blue rectangle. The client app knows where each service and its process is, so it's possible to stop and start a new set of service hosts. To do this, just change the 'Number of Services' value and click the 'Launch' button again.

Invoking Services in Buffered Mode (Old Fashioned Service Calls)

Once all services are launched, we can invoke services from the TestLauncher's Invoke tab. In the 'Number of Patients to Return' textbox, enter 1000. Uncheck the 'Overlapped' checkbox. Before invoking the service, let's understand how the call chain is processed.

When we click 'Invoke' button, the client calls Service 0. After the call is received, Service 0 immediately invokes Service 1, which then calls Service 2, which then calls Service 3. Service 3 is the final destination of this request and does the actual work to produce results. Service 3 will return the requested number of dummy patient records. In reality, the data could be pulled from any data source. Since we're on the same computer, we don't really want to use up the CPU time to do processing. This eliminates the need to actually run each service on a different box to get the real parallelism that we intend to harness. Since each service is not using much CPU, it would return almost immediately. So we just simulate a processing time by introducing Response Time and Response Time/elem.

As each service processes elements, it'll show its progress. As shown in the figure above, each service will complete sequentially in the reverse order starting from Service 3. The client measures the initial response time to get the first record from the service and then the total time it took to complete the entire call.

The client received the first element from the service in 35 seconds, and the full service call was completed in 45 seconds. This is normal given the number of elements to be processed, per-element processing time and the fact that services had to execute one after the other without any parallelism.

Invoking Services in Streamed Mode (Fluid Service Calls)

When we use streaming to do the same type of processing, we see a dramatic improvement in response times. This time check the 'Overlapped' checkbox and click 'Invoke' button again.

The services are called in the same order of delegation from Service 0 to Service 3. But this time, the services don't just wait for their dependency to finish. They start processing the partially available data as soon as it is received, and they push to the response stream as soon as the results are available. The client also works in the same fashion. It consumes the results as it is available. Notice that all the services are actually running in parallel now. They do their processing as the data flows through them. This behavior is similar to a fluid flowing a pipe. The benefit of this design is obvious without requiring a lot of interpretation. First and foremost, the client was able to receive the first records in less than a second (771 ms). So despite the 4 level call depth for a service implementation that returns 1000 records, we get the results almost immediately. Second, the total processing time is obviously going to be much lower because almost all processing is overlapped in time.

As seen in the above figure, the total call time is about 18 seconds which is only 40% of the buffered call (45 seconds). What's more, this design has better scalability against both payload and call depth. The latter is not much mentioned in the SOA literature, but again I think this is because of the unfeasibility of high level of service reuse. And it is important, so that when we develop new services we will know that reusing existing services to create new ones will not be a huge performance concern, and that our service will also be callable without reducing the performance significantly.

In a fluid service design, the initial response time is not affected by the total number of elements in the stream at all. If the services consume only a limited number of elements from the service and do not really process all of the data, then it could even be possible to just cancel the rest thus reducing even the total time dramatically. Even when the data size grows, responsiveness and throughput will be greatly improved.

Immunity Against Call Depth and Payload Size

The above screenshot shows a scenario with a calldepth of 8, to illustrate how the initial and total response time is affected. Not surprisingly, thanks to the overlapping nature of the fluid services, neither changed significantly. This is indeed the second most important benefit of using fluid services, because it will essentially remove the latency barrier when service composition and reuse comes into play, hence increasing the asset value of services for an organization.

The intermediary services in the above scenario don't really do any processing on the data other than receiving, sleeping to simulate latency and pushing it back to the caller. But you can imagine it could do all sorts of processing that works on partial data. The moment a service needs the full dataset to do its processing, its fluidity vanishes. In effect, it just becomes a buffered service. There is still some benefit to using streaming, but it's only for IO overlapping of the serialized data, not a full overlapping of the entire processing pipeline. So, causing such buffering must be avoided if possible. For example, if you have to sort the data, you would basically need the entire dataset to do this. One way to solve this issue, is to defer the sorting of data as close to the final consumer as possible so that no further sorting will be necessary. Thus, buffering in one of the services (backend or frontend) doesn't actually kill the benefits of this design. But if all services in a chain become buffered due to programming logic, or network intermediaries, then it will basically become a fully buffered service architecture just like today's services. Another and probably a more efficient solution is to actually develop components that can work on partial data and can work concurrently with other steps in the processing. Imagine an aggregator, a filtering step, a data translator, and a resequencer step are pipelined to create a service implementation. Such a service could then be pipelined to other services that are designed in a similar fashion that also works on partial data and never do buffering. So this type of design really requires a lot of care to avoid those pitfalls. We can envision a future where patterns of overlapped service processing emerge and even made available as libraries and frameworks for developers to easily implement their code.

Comparing Fluid Services Statistics for Scalability against Payload Size and Call Depth

I have run a number of tests to actually collect some real data. Although it's in a simulated environment rather than a real distributed environment, the results should be pretty close to real behavior. Each service assumes a 10 ms initial delay, plus 10 ms per element processing delay. The payload size is measured by the number of elements returned from the services. In this analysis, we are focused on initial and total response time of the overall call when only a single call is made. We are not interested in observing the throughput, but you can imagine the throughput characteristics are also affected positively by reducing the response times.

The following chart illustrates the raw data collected for initial response times.

Initial Response Time Statistics

The region marked with red letters and a thick rectangle is our area of interest since it shows a relatively large gain in terms of initial and total response times.

The following diagram is a different way of interpreting the above data. It just shows the times factor (buffered/overlapped) as a measure of improvement for initial response time.

Improvement in Initial Response Time Statistics (Times = Buffered/Overlapped)

The following chart illustrates the raw data collected for total response times.

Total Response Time Statistics

The following diagram is a different way of interpreting the above data. It just shows the times factor (buffered/overlapped) as a measure of improvement for total response time.

Improvement in Total Response Time Statistics (Times = Buffered/Overlapped)

Now let's visualize and see how overlapped vs buffered services scale against payload size.

In the above graph, for a call depth of 4, both the initial and the total response times scale much better than buffered services. The initial response time is especially good, because it's virtually constant. On the other hand, the total response time increases with the total payload size, but it's never multiplied by the call depth, hence has a much lower slope.

The above graph shows the same comparison for a call depth of 16. If you look carefully, the difference between buffered and overlapped services are now much more emphasized. The overlapped services are pretty good at being reused and called by others because their response time characteristics are not adversely affected. A streaming architecture that does overlapped processing on 16 chained services to process 1000 elements can still respond as quickly as 6 seconds, and complete as fast as 25 seconds whereas it would take more than 180 seconds for a buffered design.

To see how well fluid services scale against call depth, hence decide on its reuse value, let's analyze the following graphs.

In the above graph, for a payload of 100 elements, the overlapped call's response time grows much slower than the buffered calls. In fact, the above graph says that it wouldn't even be practical to do service reuse beyond 4 levels because the initial response time would grow fast. On the other hand, the overlapped design allows a higher level of reuse because it's now possible to neglect the cost of additional service calls.

This graph shows the same comparison for a payload size of 1000 elements. Now, the difference between overlapped and buffered services is really much clearer. Overlapped calls are able to go up to call depth of 16 levels with a payload size of 1000 without getting bogged down. On the other hand, it is not feasible to chain buffered services to this level of call depth, especially when the payload size is open to growth, which is usually the case as system usage increases over time.

How to Implement Fluid Services

Fluid services could be implemented on different platforms, languages and protocols. This sample is written on .NET WCF, using the BodyWriter class and streaming capability of the XmlSerializer.

In real life, no one would expect you to deal with all the details of writing such services. It just complicates everything. But if these capabilities are encapsulated within a framework, potentially using new language features, it's possible to make it much more accessible to application service developers.

So here are the key details of implementation for this sample code.

To develop the service, we first design an interface that can do custom serialization to the response body:

[ServiceContract]

public</span /> interface</span /> IGetPatients

{

[OperationContract(Action = "</span />GetPatientsFluidWriter"</span />,

ReplyAction = "</span />GetPatientsFluidWriter"</span />)]

Message GetPatientsFluidWriter(PatientRequest request);

The service implementation creates a Message object which is created and returned as soon as the method is called:

Message IGetPatients.GetPatientsFluidWriter(PatientRequest request)

{

try</span />

{

Notifications.Instance.OnCallReceived(this</span />);

ThreadSleep(ServiceBehaviorConfig.Instance.ResponseTime);

Message message = Message.CreateMessage(MessageVersion.Soap11,

"</span />GetPatientsFluidWriter"</span />, new</span /> FluidBodyWriter(typeof</span />(Patient),

"</span />patients"</span />, GetPatientsImpl(request)));

return</span /> message;

}

catch</span /> (Exception ex)

{

Debug.WriteLine("</span />Error: "</span /> + ex.ToString());

throw</span />;

}

finally</span />

{

}

}

This makes sure that we have full control over when and how the serialization occurs. What we want to do is, we want to overlap this serialization with the processing of the service.

The FluidBodyWriter object will be invoked as soon as the service method returns. The data it will serialize to XML is actually produced by GetPatientsImpl() method which returns an IEnumerable<</span />Patient></span />.

public</span /> class</span /> FluidBodyWriter : BodyWriter

{

...

</span /> </span /> </span /> protected</span /> override</span /> void</span /> OnWriteBodyContents(XmlDictionaryWriter writer)

{

XmlSerializer serializer = new</span /> XmlSerializer(elementType);

writer.WriteStartElement(this</span />.containerNode);

int</span /> count = 0</span />;

try</span />

{

foreach</span /> (object</span /> elem in</span /> this</span />.fluidDataStream)

{

Patient patient = (Patient)elem;

serializer.Serialize(writer, elem);

Debug.WriteLine("</span />Written "</span /> + patient.PatientID);

count++;

}

Debug.WriteLine(string</span />.Format("</span />{0} items sent"</span />, count));

writer.WriteEndElement();

}

...

}

All this code does is, gets an item from the enumerator and serializes it right away. You can imagine the data could be coming from another stream such as a fluid service or a database async query. This service will just serialize the data as it arrives or as it is produced.

The client does a similar thing. It just creates a reader on the Message object that is received. The Message object does not contain the fully formed response, but rather can be used to deserialize objects as they are read from the response stream.

private</span /> IEnumerable<Patient> ReadPatients(Message message, string</span /> localName)

{

XmlReader reader = message.GetReaderAtBodyContents();

if</span /> (reader.LocalName != localName)

{

throw</span /> new</span /> Exception("</span />Invalid container node"</span />);

}

XmlSerializer serializer = new</span /> XmlSerializer(typeof</span />(Patient));

reader.ReadStartElement(localName);

while</span /> (!reader.EOF && reader.LocalName == "</span />Patient"</span />)

{

Patient patient = (Patient)serializer.Deserialize(reader);

yield</span /> return</span /> patient;

}

reader.ReadEndElement();

}

What makes this work, is that the XmlSerializer object is smart enough to wait until sufficient number of characters are received from the stream before starting actual deserialization of the given type.

Finally, you need to configure both the client and the server to use streaming mode.

<</span />basicHttpBinding</span />></span />

<</span />binding</span /> name</span />="</span />basicHttp"</span /> maxReceivedMessageSize</span />="</span />67108864"</span />

transferMode</span />="</span />StreamedResponse"</span />

sendTimeout</span />="</span />0:01:1"</span /> maxBufferSize</span />="</span />16384"</span /> closeTimeout</span />="</span />0:00:1"</span /> /</span />></span />

<</span />/</span />basicHttpBinding</span />></span />

Conclusion

This article and the included sample code demonstrated that not only the 'Fluid Services' concept can really work in practice but also that it doesn't even require a lot of programming either. All that is needed is special care to make sure distributed services utilize their inherent parallelism to the max. I'm hereby openly inviting framework developers to start considering this type of architecture and come up with possible patterns around it. We really need a consistent and easy-to-use framework that also guides the developer to follow the right way to implement efficient asynchronous and overlapped processing. Of course, the idea is not mature yet, and there are a lot of areas to improve like adding in-memory parallel processing of pipelined stages, patterns of partial processing on an element stream, security, call cancellation and reevaluation of service-oriented patterns. This is why I preferred to write a toy project rather than a generic framework. I would also like to emphasize that this sample did not focus on the 'call cancellation' aspect which might really create a new way of scaling such fluid services. But it really requires a separate discussion on its own. This sample provided a way of call cancellation by just dropping the service connection, which was obviously not an efficient way to implement it. None of the scenarios that we walked through didn't take that into account either. However, with a design that supports an efficient way of call cancellation, it will be possible to create systems that allow much more graceful usage of server resources because clients will be able to decide how much of the data to consume, rather than having to consume all the data all the time.

I really hope this article will inspire architects and developers to adopt such design, come up with patterns, frameworks, libraries, tools and eventually create a whole new programming paradigm which is competent enough to cope with the scalability and responsiveness requirements of SOA systems of the future.

History

- 3rd September, 2010: Initial version