In this article, we will see how you can use OPENJSON function to insert array of JSON object into table.

Table of Contents

- Introduction

- Background

- Use Case - Loading JSON into Database

- Handling Required Fields

- Importing Complex JSON Structures

- GenerateCode

- Inside

- History

Introduction

SQL Server 2016 and Azure SQL Database have a new function OPENJSON that enables you to easily parse JSON text and insert it into any table. See more details about JSON support in Friday the 13th - JSON is coming to SQL Server. In this article, we will see how you can use this function to insert array of JSON object into table.

Background

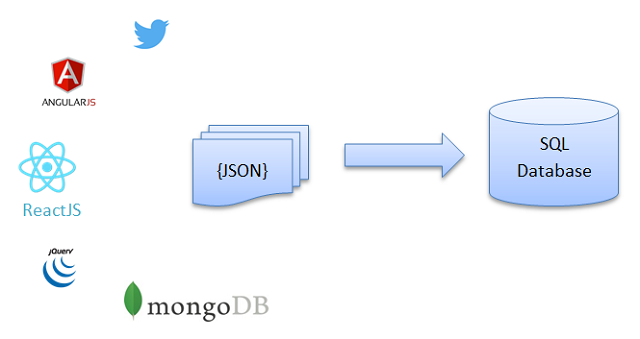

Have you ever created some REST API that accepts JSON and you had to import this JSON into database? Maybe you have REST service that receives JSONs from JQuery, AngularJS or ReactJS applications? Did you ever call some REST service that returns response as JSON or loaded some JSON from file and then you had to store results in SQL tables? Maybe you had to load some JSON documents from Twitter or MongoDB into database?

In the past, you probably had to parse this JSON using JSON.Net or some other serializer or use frameworks to map JSON into objects and then store them into database using ADO.NET or Entity Framework. With SQL Server 2016, you have another alternative - just send the entire JSON text to database and parse it using new OPENJSON function.

In this article, we will see how you can do it.

Use Case - Importing JSON in Database

Imagine that you have one or many JSON objects like in the following example:

[

{ "id" : 2,"firstName": "John", "lastName": "Smith",

"age": 25, "dateOfBirth": "2007-03-25T12:00:00" },

{ "id" : 5,"firstName": "John", "lastName": "Smith",

"age": 35, "dateOfBirth": "2005-11-04T12:00:00" },

{ "id" : 7,"firstName": "John", "lastName": "Smith",

"age": 15, "dateOfBirth": "1983-10-28T12:00:00" },

{ "id" : 8,"firstName": "John", "lastName": "Smith",

"age": 12, "dateOfBirth": "1995-07-05T12:00:00" },

{ "id" : 9,"firstName": "John", "lastName": "Smith",

"age": 37, "dateOfBirth": "2015-03-25T12:00:00" }

]

If you send this JSON as a parameter of some query or stored procedure, or set it as some local variable, you can easily convert this array of JSON objects to set of rows using OPENJSON function, and see what's in this JSON:

SELECT *

FROM OPENJSON(@json)

WITH (id int, firstName nvarchar(50), lastName nvarchar(50),

age int, dateOfBirth datetime2)

OPENJSON function will parse JSON, and in WITH clause, you can specify what column names you want to see. OPENJSON will match column names with keys in JSON arrays and return set of rows. Also, it will do automatic conversion from character data into types that are associated to each column. If you execute this query, you will get results something like below:

2 John Smith 25 2007-03-25 12:00:00.0000000

5 John Smith 35 2005-11-04 12:00:00.0000000

7 John Smith 15 1983-10-28 12:00:00.0000000

8 John Smith 12 1995-07-05 12:00:00.0000000

9 John Smith 37 2015-03-25 12:00:00.0000000

Now, you can easily import these values in any table:

INSERT INTO Person (id, name, surname, age, dateOfBirth)

SELECT id, firstNAme, lastName, age, dateOfBirth

FROM OPENJSON(@json)

WITH (id int,

firstName nvarchar(50), lastName nvarchar(50),

age int, dateOfBirth datetime2)

So, this is a single command that directly imports your JSON into table. You can put this in your stored procedure and just provide JSON as an input parameter:

DROP PROCEDURE IF EXISTS dbo.PersonInsertJson

GO

CREATE PROCEDURE dbo.PersonInsertJson(@Person NVARCHAR(MAX))

AS BEGIN

INSERT INTO Person (id, name, surname, age, dateOfBirth)

SELECT id, firstNAme, lastName, age, dateOfBirth

FROM OPENJSON(@json)

WITH (id int, firstName nvarchar(50), lastName nvarchar(50), _

age int, dateOfBirth datetime2)

END

You can use similar procedures to update existing rows in table from JSON object. In the following example, I will use OPENJSON to parse input JSON text, and update name, surname, age, and dateOfBirth fields by matching id field:

UPDATE Person

SET name = json.firstname,

surname = json.lastname,

age = json.age,

dateOfBirth = json.dateOfBirth

FROM OPENJSON(@json)

WITH (id int,

firstName nvarchar(50), lastName nvarchar(50),

age int, dateOfBirth datetime2) AS json

WHERE Person.id = json.id

See details in this post OPENJSON – The easiest way to import JSON text into table.

If you are an advanced SQL user, you can use MERGE statement that will insert row if it does not exist in the table, and update it if there is a match.

MERGE INTO Person AS P

USING (

SELECT *

FROM OPENJSON(@json)

WITH (id int, firstName nvarchar(50), lastName nvarchar(50),

age int, dateOfBirth datetime2) InputJSON

ON (P.id = InputJSON.id)

WHEN MATCHED THEN

UPDATE SET P.firstName = InputJSON.firstName,

P.lastName = InputJSON.lastName,

P.age = InputJSON.age,

P.dateOfBirth = InputJSON.dateOfBirth

WHEN NOT MATCHED THEN

INSERT (firstName, lastName, age, dateOfBirth)

VALUES (InputJSON.firstName, InputJSON.lastName, InputJSON.age, InputJSON.dateOfBirth);

This is combined UPdate or inSERT command that will either update row by id, or add a new one. You can see more details at Upsert JSON documents in SQL Server 2016.

Handling Required Fields

JSON may have some missing fields in objects, which might be fine. OPENJSON will return NULL if there is no property in input. However, if you want to ensure that you have all required fields in input JSON, you can add strict option in column:

SELECT *

FROM OPENJSON(@json)

WITH (id int 'strict $.id',

firstName nvarchar(50) 'strict $.firstName',

lastName nvarchar(50),

age int,

dateOfBirth datetime2)

If you add '$.strict keyName' option after type, OPENJSON will know that this keyName is required. If it cannot find value in the keyName, it will throw an error.

Fields that are not marked with strict are not required, and OPENJSON will return null if it cannot find them.

Importing Complex JSON Objects

JSON does not need to be flat. Your JSON objects may have nested values like in the following example:

[

{ "id" : 2,

"info": { "name": "John", "surame": "Smith" },

"age": 25 },

{

"id" : 5,

"info": { "name": "Jane", "surame": "Smith" },

"dateOfBirth": "2005-11-04T12:00:00" }

]

OPENJSON can also parse this structure. If you don't have flat hierarchy of key:value pairs, you can specify "path" of each property after type:

SELECT *

FROM OPENJSON(@json)

WITH (id int 'strict $.id',

firstName nvarchar(50) 'strict $.info.name',

lastName nvarchar(50) '$.info.surname',

age int,

dateOfBirth datetime2)

If you don't have flat hierarchy of key value pairs and you have some fields in nested objects, you can specify something like JavaScript-like path of the field. You can combine nested paths with strict keyword.

JSON can even have nested arrays like in the following example:

[

{ "id" : 2,

"info": { "name": "John", "surame": "Smith" },

"age": 25,

"skills": ["C#","SQL","JSON","REST"]

},

{

"id" : 5,

"info": { "name": "Jane", "surame": "Smith" },

"dateOfBirth": "2005-11-04T12:00:00",

"skills": ["C#","SQL"] }

]

You can also parse this JSON text with OPENJSON. Since we want to read this skills JSON array as "whole JSON", we need to add AS JSON option:

SELECT *

FROM OPENJSON(@json)

WITH (id int 'strict $.id',

firstName nvarchar(50) 'strict $.info.name',

lastName nvarchar(50) '$.info.surname',

age int,

dateOfBirth datetime2,

skills NVARCHAR(MAX) AS JSON

)

Now OPENJSON will return entire JSON array is skills column:

id firstName lastName age dateOfBirth skills

2 John Smith 25 NULL ["C#","SQL","JSON","REST"]

5 Jane Smith NULL 2005-11-04 12:00:00.0000000 ["C#","SQL"]

As you can see, with a few options, you can easily read any JSON structure and validate required fields.

Generating Code that Inserts JSON

Although this is a simple command, it might be hard to write it if you have wide tables with 20-30 columns. Also, if some of the columns have special characters, you will need to surround them with [ ] in SQL names, and with " " in JSON paths.

Therefore, I have created a function that generates this script - you can download it here. The signature of this SQL function looks like this:

CREATE FUNCTION

dbo.GenerateJsonInsertProcedure(@SchemaName sysname, @TableName sysname, _

@JsonColumns nvarchar(max), @IgnoredColumns nvarchar(max))

RETURNS NVARCHAR(MAX)

In order to generate Insert stored procedure, you can specify Schema name of your table and table name. Also, if you have some columns in table that contain JSON text and if you will have some nested JSON in your input, you can specify list of these columns in @JsonColumns parameter. Finally, if some columns should not be inserted via JSON (e.g., DateModified, ModifiedBy) you can provide them as comma separated list of column names.

Now, let's see how it works. I will generate JSON insert stored procedure for AdventureWorks Person.Address table:

declare @SchemaName sysname = 'Person'

declare @TableName sysname = _

'Address'

declare @IgnoredColumns nvarchar(max) = _

'DateModified'

declare @JsonColumns nvarchar(max) = '||'

print (dbo.GenerateJsonInsertProcedure(@SchemaName, @TableName, @JsonColumns, @IgnoredColumns))

In this case, I will just print the script that function returns. Output will be:

DROP PROCEDURE IF EXISTS [Person].[AddressInsertJson]

GO

CREATE PROCEDURE [Person].[AddressInsertJson](@Address NVARCHAR(MAX))

AS BEGIN

INSERT INTO Address([AddressLine1],[AddressLine2],[City],[StateProvinceID],_

[PostalCode],[ModifiedDate])

SELECT [AddressLine1],[AddressLine2],[City],[StateProvinceID],[PostalCode],[ModifiedDate]

FROM OPENJSON(@AddressJson)

WITH (

[AddressLine1] nvarchar(120) N'strict $."AddressLine1"',

[AddressLine2] nvarchar(120) N'$."AddressLine2"',

[City] nvarchar(60) N'strict $."City"',

[StateProvinceID] int N'strict $."StateProvinceID"',

[PostalCode] nvarchar(30) N'strict $."PostalCode"',

[ModifiedDate] datetime N'strict $."ModifiedDate"')

END

Function will go through all columns in the specified table, check what is the type, is it required column (in that case, it will generate $.strict modifier in path) and create script. You can modify this query and remove unnecessary columns if you want.

If you want to try it, you can download GenerateJsonInsertScript.zip that contains SQL script.

Inside the Script

If you want to know details about this script, here are some more detailed explanations.

First, we need a query that will return list of columns that will be generated in INSERT list, SELECT list, and WITH clause. I have used this query:

select

col.name as ColumnName,

column_id ColumnId,

typ.name as ColumnType,

case typ.name

when 'char' then '(' + cast(col.max_length as varchar(10))+ ')'

when 'nchar' then '(' + cast(col.max_length as varchar(10))+ ')'

when 'nvarchar' then (IIF(col.max_length=-1, '(MAX)', _

'(' + cast(col.max_length as varchar(10))+ ')'))

when 'varbinary' then (IIF(col.max_length=-1, '(MAX)', _

'(' + cast(col.max_length as varchar(10))+ ')'))

when 'varchar' then (IIF(col.max_length=-1, '(MAX)', _

'(' + cast(col.max_length as varchar(10))+ ')'))

else ''

end as StringSize,

case

when col.is_nullable = 1 then '$.' else 'strict $.'

end Mode,

CHARINDEX(col.name, @JsonColumns,0) as IsJson

from sys.columns col

join sys.types typ on

col.system_type_id = typ.system_type_id AND col.user_type_id = typ.user_type_id

LEFT JOIN dbo.syscomments SM ON col.default_object_id = SM.id

where object_id = object_id(QUOTENAME(@SchemaName) + '.' + QUOTENAME(@TableName))

and col.is_identity = 0

and col.is_computed = 0

and col.is_hidden = 0

and col.is_rowguidcol = 0

and generated_always_type = 0

and (sm.text IS NULL OR sm.text NOT LIKE '(NEXT VALUE FOR%')

and LOWER(typ.name) _

NOT IN ('text', 'ntext', 'sql_variant', 'image','hierarchyid','geometry','geography')

and col.name NOT IN (SELECT value FROM STRING_SPLIT(@IgnoredColumns, ','))

This query will return list of columns with their id, names and types b looking in system tables.

Interesting thing is StringSize column. This column formats type in WITH clause, e.g., nvarchar(50) or varchar(max) based on type and length.Mode column returns "strict $" instead of "$" if column is required, i.e., not null. This mode requires that key must exists in JSON. All columns in @jsonColumns variable will be marked with 1 in IsJson column.

This code will not return columns that should not be inserted in table using explicit insert. In this code, I'm excluding identity, hidden, computed, rowguid and generated always columns. Also, I have excluded all columns that will be populated via sequences.

Also, if OPENJSON cannot return some types like CLR, ot geometry/geography, this query will ignore these columns.

Finally, all columns placed in IgnoredColumns will be ignored.

Now I need to generate list of columns that belongs to table schema that will be added in INSERT (<<column list>>) and SELECT <<column list>> parts in stored procedure. I'm using this script:

declare @TableSchema nvarchar(max) = '';

select @TableSchema = @TableSchema + QUOTENAME(ColumnName) + ','

from <<col_def>>

order by ColumnId

SET @TableSchema = SUBSTRING(@TableSchema, 0, LEN(@TableSchema))

<<col_def>> is previous query (used as CTE in my script). This code will concatenate all column names from that query and return them as comma separated string.

Now I need to generate columns, types and json paths in WITH clause of OPENJSON function. Here is the query:

declare @JsonSchema nvarchar(max) = '';

select @JsonSchema = @JsonSchema + '

' + QUOTENAME(ColumnName) + ' ' + ColumnType + StringSize +

N''' + Mode + '"' + STRING_ESCAPE(ColumnName, 'json') + _

'"''' +IIF(IsJson>0, ' AS JSON', '') + ','

from col_def

order by ColumnId

This query is similar to the previous one. Here, I am generating the following sequence:

column_name type json_path [AS JSON],

Finally, I need to inject these two column lists in INSERT SELECT OPENJSON WITH() script and generate script that will be returned by stored procedure:

declare @Result nvarchar(max) =

N'DROP PROCEDURE IF EXISTS ' + QUOTENAME( @SchemaName) + '.' + _

QUOTENAME(@TableName + 'InsertJson') + '

GO

CREATE PROCEDURE ' + QUOTENAME( @SchemaName) + '.' + _

QUOTENAME(@TableName + 'InsertJson') + '(@' + @TableName + ' NVARCHAR(MAX))

AS BEGIN

INSERT INTO ' + @TableName + '(' + @TableSchema + ')

SELECT ' + @TableSchema + '

FROM OPENJSON(' + @JsonParam + ')

WITH (' + @JsonSchema + ')

END'

RETURN REPLACE(@Result,',)',')')

Final replace command is used to remove last comma in WITH clause.

If you just want to call this stored procedure, you don't need these details; however, if you are planning to modify it, you will need these details.

History

- 25th March, 2016 - Initial version

- 26th March, 2016 - Added details about implementation of function that generates code