Introduction

This article is part three of a multi-part series.

Background

I have practiced the art of web scraping for quite a while, and mostly carry out the task by hand. I have seen some commercial offerings that provide a quicker and easier way to pull data from web pages: literally point and click. This not only saves time for us poor coders, but also helps users who are not coders but still need to get data from a web page (without annoying the coders, of course!). This article starts as a short introduction to what is needed to put such an engine together, and highlights some techniques for building a point-and-click web scrape/crawl engine.

There is enough in this article to get you started working on something yourself, and I intend to revisit it later with working code once I have that completed.

Point and Click Engine

Putting most things together is usually one part brain power and joining the dots, and one part standing on the shoulders of those who have gone before us; this project is no different.

The workflow is pretty basic, and a few commercial outfits have already done this, including Kimono Labs. Kimono was sold and its product closed, so it's no use to us directly, but we can learn a lot from it! ...

Step 1 - Highlight/select Elements to Scrape

The first thing we need is a way to select/identify, dynamically and in the browser, the HTML elements that contain the data we want to scrape. This is generally done with a browser extension/plugin.

Overall, this is fairly simple to do; there are examples here and here. SelectorGadget is also a good example to look at.

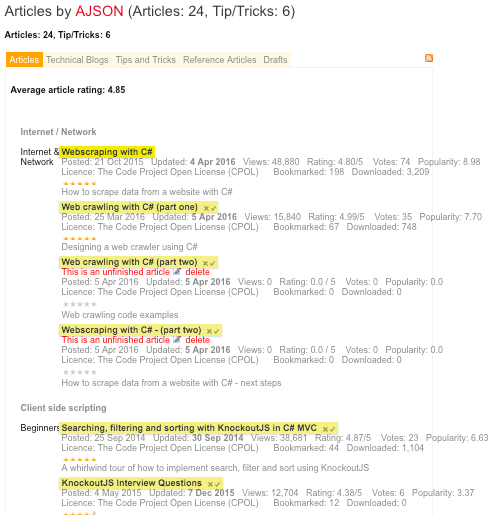

To get repeating elements like in the Kimono screenshot below, you just need to look at the selected element, then look around its parent/siblings for repeating patterns of elements, and make a guess (letting the user correct things as you go). In this example, you can see that I have clicked, in the browser, on the title of one of my articles, and the code has magically auto-selected what it thinks are the other article titles.
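The parent/sibling heuristic can be sketched in a few lines of Python (the article's own snippets are C#). This is a minimal sketch, assuming the page has already been parsed into a well-formed tree with the standard library's `xml.etree.ElementTree`; real-world HTML usually needs a forgiving parser such as HtmlAgilityPack or lxml.html. The function `guess_repeating` and the sample markup are hypothetical, and "same tag and same class" is just one simple similarity rule among many.

```python
import xml.etree.ElementTree as ET


def guess_repeating(root, selected):
    """Guess which elements repeat the pattern of the clicked `selected` element.

    Walk up from the clicked element. At the first ancestor whose parent has
    other children with the same tag and class, treat those siblings as the
    repeating "rows" and follow the same relative tag path inside each row.
    """
    parent_of = {child: parent for parent in root.iter() for child in parent}
    below = []  # tags leading from the current node down to `selected`
    node = selected
    while node in parent_of:
        parent = parent_of[node]
        rows = [c for c in parent
                if c.tag == node.tag and c.get("class") == node.get("class")]
        if len(rows) > 1:
            if not below:
                return rows  # the clicked element's own siblings repeat
            path = "/".join(below)
            return [hit for row in rows for hit in row.findall(path)]
        below.insert(0, node.tag)
        node = parent
    return [selected]  # no repeating pattern found: keep the single selection


page = ET.fromstring("""<html><body>
  <div class="nav"><a>Home</a></div>
  <div class="article"><h2>First</h2><p>...</p></div>
  <div class="article"><h2>Second</h2><p>...</p></div>
  <div class="article"><h2>Third</h2><p>...</p></div>
</body></html>""")

clicked = page.find(".//div[@class='article']/h2")  # the user clicks "First"
print([el.text for el in guess_repeating(page, clicked)])  # ['First', 'Second', 'Third']
```

The guess is only a starting point; the user correcting it (adding or removing matches) is what turns it into a reliable template entry.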

The concept above is simply repeated for the other fields/blocks of data on the page you want to scrape, and the result is saved into a template. The key to knowing what to scrape is to grab the XPath of the elements you want to scrape. Sure, this can be a bit involved at times, but it's worth it in the long run. Learn a bit more about XPath here. Once you have the XPath of one or more elements, you can use the techniques demonstrated in my introduction to web scraping article to scrape data from them, using an XPath query (SelectNodes) in place of the CSS Select query used there.
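One simple way to "grab the XPath" of a clicked element is to walk up to the root and record each step with its 1-based position among same-tag siblings. The Python sketch below assumes a well-formed tree parsed with `xml.etree.ElementTree`; `xpath_of` is a hypothetical helper, and in a browser extension the same walk would run over the live DOM instead.

```python
import xml.etree.ElementTree as ET


def xpath_of(root, elem):
    """Build an absolute, position-indexed XPath such as /html/body[1]/div[3]/h2[1]."""
    parent_of = {child: parent for parent in root.iter() for child in parent}
    steps = []
    while elem in parent_of:
        parent = parent_of[elem]
        same_tag = [c for c in parent if c.tag == elem.tag]
        # XPath positions are 1-based and count only siblings with the same tag
        steps.append(f"{elem.tag}[{same_tag.index(elem) + 1}]")
        elem = parent
    steps.append(elem.tag)  # the root element itself
    return "/" + "/".join(reversed(steps))


page = ET.fromstring("""<html><body>
  <div class="nav"><a>Home</a></div>
  <div class="article"><h2>First</h2></div>
  <div class="article"><h2>Second</h2></div>
</body></html>""")

clicked = page.findall(".//h2")[1]  # the user clicks "Second"
xp = xpath_of(page, clicked)
print(xp)  # /html/body[1]/div[3]/h2[1]
```

Positional paths like this are brittle when a site's layout shifts; attribute-based paths such as `//div[@class='article']/h2` usually survive small changes better, which is one reason to let the user review what gets saved.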

The following diagram shows how, for example, you might store a template in XML for the scrape of the div 'title', above.
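As a stand-in for the diagram, here is one possible shape for such a template and a Python loader for it. The element names (`url`, `elements/element`, `name`, `xpath`) are an assumption inferred from the `XML.url` and `XML.elements.element[n].xpath` names in the C# snippet that follows; the URL and XPath values are placeholders.

```python
import xml.etree.ElementTree as ET

# Hypothetical template layout; every name and value here is illustrative only.
TEMPLATE = """<template>
  <url>https://www.example.com/articles</url>
  <elements>
    <element>
      <name>title</name>
      <xpath>//div[@class='title']</xpath>
    </element>
  </elements>
</template>"""


def load_template(xml_text):
    """Return the page URL and a {field name: XPath} mapping from a template."""
    root = ET.fromstring(xml_text)
    url = root.findtext("url")
    fields = {el.findtext("name"): el.findtext("xpath")
              for el in root.findall("elements/element")}
    return url, fields


print(load_template(TEMPLATE))
```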

Borrowing some code from the previous article on web scraping, and based on the XML example above, this is how you would then pull all of the 'title' data from the above page into a list variable called Titles:

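// Needs: using System; using ScrapySharp.Network; (ScrapySharp / HtmlAgilityPack packages)
ScrapingBrowser Browser = new ScrapingBrowser();
WebPage PageResult = Browser.NavigateToPage(new Uri(XML.url));

// SelectNodes returns an HtmlNodeCollection, or null when nothing matches the XPath
var Titles = PageResult.Html.SelectNodes(XML.elements.element[n].xpath);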
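The same "evaluate every stored XPath against the page" step can be sketched in Python for readers outside .NET. This assumes well-formed XHTML and the standard library's ElementTree, which supports only a subset of XPath and only relative paths, so a leading `//` is rewritten to `.//`. `apply_template` and the sample page are hypothetical.

```python
import xml.etree.ElementTree as ET


def apply_template(html_text, fields):
    """Evaluate each stored XPath against a page and collect the matching text."""
    root = ET.fromstring(html_text)
    results = {}
    for name, xpath in fields.items():
        # ElementTree needs a relative path, so "//div" becomes ".//div"
        path = "." + xpath if xpath.startswith("//") else xpath
        results[name] = ["".join(node.itertext()).strip()
                         for node in root.findall(path)]
    return results


page = """<html><body>
  <div class="title">Article one</div>
  <div class="title">Article two</div>
  <div class="summary">Not a title</div>
</body></html>"""

print(apply_template(page, {"title": "//div[@class='title']"}))
# {'title': ['Article one', 'Article two']}
```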

Step 2 - The Scrape-flow

You need to tell your engine how to get to the page the data is on, as well as where the data is on that page. This is not the same as the selection in Step 1: what I mean here is whatever brings the data onto the page. Say I had 100 articles, but the page only showed 30 at a time. In this case, you need to let your engine know that it needs to:

- Go to page

- Find elements, scrape

- Go to NEXT page (and how to do it)

- Rinse, repeat, until last page
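The go to page / scrape / go to next / repeat loop above can be sketched as follows. To keep it self-contained, a hypothetical in-memory `SITE` dictionary stands in for real HTTP requests, and the item and "next" link paths are assumed ElementTree-style paths; a visited set and a page cap guard against sites whose "next" links loop back.

```python
import xml.etree.ElementTree as ET

# Hypothetical pages standing in for real HTTP responses.
SITE = {
    "/articles?page=1": "<html><body><h2>A</h2><h2>B</h2>"
                        "<a class='next' href='/articles?page=2'>Next</a></body></html>",
    "/articles?page=2": "<html><body><h2>C</h2>"
                        "<a class='next' href='/articles?page=3'>Next</a></body></html>",
    "/articles?page=3": "<html><body><h2>D</h2></body></html>",
}


def crawl(start_url, item_path, next_path, fetch, max_pages=100):
    """Go to page, scrape items, follow the NEXT link, repeat until the last page."""
    results, url, seen = [], start_url, set()
    while url and url not in seen and len(seen) < max_pages:
        seen.add(url)
        root = ET.fromstring(fetch(url))                        # go to page
        results += [el.text for el in root.findall(item_path)]  # find elements, scrape
        next_link = root.find(next_path)                        # look for NEXT
        url = next_link.get("href") if next_link is not None else None  # none: last page
    return results


print(crawl("/articles?page=1", ".//h2", ".//a[@class='next']", SITE.__getitem__))
# ['A', 'B', 'C', 'D']
```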

To make this happen, the engine needs to know how to navigate. For paged data, this involves identifying:

- Start page

- End page

- Rows per page

- Prev/next links
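When a site's pages follow a predictable URL pattern, the start page, end page and rows per page are enough to enumerate every page up front; when they don't, the engine has to follow the prev/next links instead. The Python sketch below covers the URL-pattern case; `PagingRule` and the `?page={page}` scheme are hypothetical. With the 100-article, 30-per-page example above it yields ceil(100 / 30) = 4 pages.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class PagingRule:
    """Hypothetical navigation settings an engine could store for paged data."""
    url_pattern: str                # assumed URL scheme with a {page} placeholder
    start_page: int = 1
    end_page: Optional[int] = None  # None: work it out from the row count
    rows_per_page: int = 30

    def page_urls(self, total_rows: int) -> list:
        last = self.end_page
        if last is None:
            # e.g. 100 articles at 30 per page -> ceil(100 / 30) = 4 pages
            last = self.start_page + math.ceil(total_rows / self.rows_per_page) - 1
        return [self.url_pattern.format(page=p)
                for p in range(self.start_page, last + 1)]


rule = PagingRule("https://www.example.com/articles?page={page}")
print(rule.page_urls(100))
```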

Step 3 - Schedule and Scrape!

OK, the last piece of this puzzle is putting it all together so you can point and click on what you want to scrape, and then schedule the scrape to run on a regular basis. Depending on your needs, here are some useful tools that might assist you along the way:

Quartz.NET scheduler

This is an extremely robust timer/scheduler framework. It is widely used, easy to implement, and a far better approach to scheduling things in code than using and abusing the ubiquitous Timer class. Schedules can be very simple ('every Tuesday', 'once, at this specific time') or quite complex beasts built with the in-built cron triggers.

Here are some examples:

| Cron expression | Meaning |
| --- | --- |
| 0 15 10 ? * * | Fire at 10:15am every day |
| 0 0/5 14 * * ? | Fire every 5 minutes starting at 2pm and ending at 2:55pm, every day |
| 0 15 10 ? * 6L 2002-2005 | Fire at 10:15am on every last Friday of every month during the years 2002, 2003, 2004 and 2005 |
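To make the field layout concrete, here is a small Python sketch (not Quartz.NET's API) that checks whether a given moment matches a Quartz-style expression. Fields are read in Quartz order: seconds, minutes, hours, day of month, month, day of week (1 = Sunday), and an optional year. It deliberately handles only `*`, `?`, plain numbers, ranges (`a-b`), increments (`a/b`) and comma lists; special characters such as `L`, `W` and `#`, and month/day names, are not supported, so the `6L` example above would raise a `ValueError`.

```python
from datetime import datetime


def field_matches(spec, value):
    """Match one cron field. Supports *, ?, n, a-b, a/b and comma lists only."""
    for part in spec.split(","):
        if part in ("*", "?"):
            return True
        if "/" in part:  # a/b: start at a, then every b units
            start, step = map(int, part.split("/"))
            if value >= start and (value - start) % step == 0:
                return True
        elif "-" in part:  # a-b: inclusive range
            low, high = map(int, part.split("-"))
            if low <= value <= high:
                return True
        elif int(part) == value:
            return True
    return False


def cron_matches(expr, when):
    """True if `when` satisfies a Quartz-style expression (sec min hour dom month dow [year])."""
    values = [when.second, when.minute, when.hour, when.day, when.month,
              (when.weekday() + 1) % 7 + 1,  # Quartz numbers days 1 = SUN ... 7 = SAT
              when.year]
    # zip() stops at the shorter list, so an omitted (optional) year field is ignored
    return all(field_matches(spec, v) for spec, v in zip(expr.split(), values))


print(cron_matches("0 15 10 ? * *", datetime(2016, 4, 7, 10, 15, 0)))   # True
print(cron_matches("0 0/5 14 * * ?", datetime(2016, 4, 7, 14, 55, 0)))  # True
print(cron_matches("0 0/5 14 * * ?", datetime(2016, 4, 7, 15, 0, 0)))   # False
```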

Pretty powerful stuff!

jQuery-cron builder

If your user interface is on the web, this jQuery plugin may come in useful. It gives the user an easy interface for generating/selecting schedule times without having to know how to speak cron!

The job of this final step is simply to execute a scrape process against the stored templates at a predetermined, scheduled time. Getting the basics up and running quickly is easy; the fun starts when you have to build it out. Watch this space. :)
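Stripped to its core, this step queues one scrape per stored template at each scheduled time and then waits for those times to arrive. The Python sketch below uses the standard library's `sched.scheduler` as a stand-in for Quartz.NET; the template names, the `scrape` callback and the fixed run times are hypothetical (a real engine would compute run times from cron expressions). A fake clock is injected so the demo runs instantly; pass `time.time` and `time.sleep` for real use.

```python
import sched


def schedule_scrapes(templates, run_times, scrape, timefunc, delayfunc):
    """Queue scrape(template) for every stored template at each run time, then run."""
    scheduler = sched.scheduler(timefunc, delayfunc)
    for when in run_times:
        for priority, template in enumerate(templates):
            # Same time: lower priority number runs first, keeping template order stable
            scheduler.enterabs(when, priority, scrape, argument=(template,))
    scheduler.run()  # blocks, sleeping via delayfunc until each event is due


class FakeClock:
    """Stand-in clock so the demo runs instantly; use time.time / time.sleep for real."""
    def __init__(self):
        self.now = 0.0

    def time(self):
        return self.now

    def sleep(self, seconds):
        self.now += seconds


clock = FakeClock()
log = []
schedule_scrapes(["articles", "comments"], [10, 20],
                 lambda template: log.append((clock.now, template)),
                 clock.time, clock.sleep)
print(log)
# [(10.0, 'articles'), (10.0, 'comments'), (20.0, 'articles'), (20.0, 'comments')]
```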

Summary

That completes the basics of this article, and should be enough to get you started coding!

The next update will provide some working code you can implement and build on.

So remember:

- Select into template

- Identify the scrape-flow

- Schedule and scrape!

To get you started, I have attached an example project, taken from one of the links above, that demonstrates dynamic selection in the browser.


History

- 7th April, 2016: Version 1