Contents

- Introduction

- Background

- The Architecture

- High-Level Design

- The Intel Xeon Phi

- PCIe Communication

- Network Architecture

- Rack Construction

- Monitoring and Maintenance

- Hot Water Cooling

- Quick Couplings and Manifolds

- Roll-Bond Plates and Interposers

- Tubes, Glue and Thermal Paste

- The Software Stack

- Operating System and Provisioning

- Compilers and Tools

- Firmware and Drivers

- Communication Libraries

- Crucial Benchmarks

- Points of Interest

- References

- History

Introduction

Sometimes people ask me what I do. I do a lot I guess. Maybe too much. But honestly .NET and the web are great companions and I would never betray them. Therefore I spent most of my time in another, but closely related field: High Performance Computing, or short HPC. HPC is the industry that tries to come up with larger, more efficient systems. The largest simulations in the world are run on HPC systems. The state of the HPC industry is reflected by two very important lists. The Top 500, which contains the 500 fastest supercomputers currently on the planet and the Green 500. If a machine is listed in the Top 500, it is allowed to enter the Green 500. The Green 500 cares about computations per energy. If a machine is able to run the most computations, but requires the most energy, it may be less efficient then a second system, doing almost as many computations, but with far less energy consumption.

Supercomputers are very expensive. The one-time price to design, purchase and build the system is actually quite low, compared to the regular costs that come with running the system. A standard HPC system is easily consuming hundreds of kW of power. Hence the strong drift for energy-efficient computing. Luckily, chip vendors have also caught that drift and provide us with new, exciting possibilities. These days ARM is a good candidate for future energy-efficient systems. But who knows what's to come in the future. The design possibilities seem endless. Good ideas are still rare, though.

An excellent idea has been to introduce hot water cooling. Regular (cold) water cooling has been established for quite a while, but is not as efficient as possible. Of course it is much more efficient than standard air cooling, but the operation and maintenance of chillers are real deal breakers. They require a lot of energy. Hot water cooling promises to yield chiller-free, i.e., cost-free, cooling all year long. The basic principle is similar to the way that blood flows through our body.

In this article I will try to discuss the ideas and principles behind designing a state of the art supercomputer. I will outline some key decisions and I will discuss the whole process for a real machine: The QPACE 2. The small machine is fast enough to be easily ranked in the Top 500. It is also efficient enough to be one the twenty most energy efficient systems in the currently known universe. The prototype is installed at the University of Regensburg. It is also possible to purchase the technology that has been created for this project. The Aurora Hive series from EuroTech is the commercially available product, which emerged from this project.

Background

A while ago I realized that I wanted to write a kind of unique article for CodeProject. An article that covers a topic that is rarely explained and that does not spawn a very broad and generalized explanation, but rather something specialized and maybe interesting. There was only one topic: supercomputing!

In the past we've seen really cool articles like Using Cudafy for GPGPU Programming in .NET by Nick Kopp (don't forget to check also out his related article CUDA Programming Model on AMD GPUs and Intel CPUs) or golden classics such as High performance computing from C++ to MMX. Also the countless great IoT articles during the Microsoft Azure IoT contest shouldn't be forgotten.

So what I wanted to do is give a broad overview of the field and then go into really special topics in greater detail. Especially the section about hot water cooling should be of interest. The whole article is themed to be about efficient high-performance computing. The consequences from research in this field are particularly interesting. They will empower future generations of data centers, mobile devices and personal computing. The demand for more energy has to stop. But the demand for computing is not going away. This crisis can only be solved by improved technology and more intelligent algorithms. We need to do more with less.

I hope you like the journey. I included a lot of pictures to visualize the process even though my writing skills are limited.

The Architecture

When an HPC project is launched there has to be some demand. Usually a specific application is chosen to be boosted by the capabilities of the upcoming machine. The design and architecture of this machine has to be adjusted for that particular application.

Sometimes there are a lot of options to achieve the goals for the desired application. Here we need to consider secondary parameters. In our case we aimed for innovative cooling, high computing density and outstanding efficiency. This reduced the number of options to two candidates, where both are accelerators. One is a high-end Nvidia GPU and the other option is a brand new product from Intel, called a co-processor. This is basically a CPU on steroids. It contains a magnitude more cores than a standard Xeon processor. The cores are quite weak, but have a massive vector unit. This is an x86 compatible micro-architecture.

At this point it is good to go back to the application. What would be better for the application? Our application was already adjusted for x86 (like) processors. An adjustment for GPU cards would have been possible, however, creating another work item did not seem necessary at the time. Plus using a Xeon Phi seems innovative. Alright, so our architecture will revolve around the Intel Xeon Phi - codename KNC (Knights Corner), the first publicly available MIC product (Many Integrated Cores).

This is where the actual design and architecture starts. In the following subsections we will discuss the process of finding the right components for issues like:

- Power Supply (PSU)

- Power Distribution (PDU)

- Cooling (Intra-Node)

- Package Density (Node)

- Host Processor (CPU)

- Board Management (BMC)

- Network (NWP)

- PCIe Communication (Intra-Node)

- Network Topology (Inter-Node)

- Chassis (Rack)

- Remote Power Control

- Emergency Shutdown

- Monitoring System

This is a lot of stuff and I will hopefully give most parts the space they deserve.

High-Level Design

From an efficiency point of view it is quite clear that the design should be accelerator focused. The accelerators have a much better operation / energy ratio than ordinary CPUs. We would like to alter the design of our applications to basically circumvent using the CPU (besides for simple jobs, e.g., distributing tasks or issuing IO writes). The way to reflect this objective in our design is by reducing the number of CPUs and maximizing the number of co-processors.

The Xeon Phi is no independent system. It needs a real CPU to be able to boot. After all, it is just an extension board. Hence we require at least 1 CPU in the whole system. The key question is now how many co-processors can be sustained by a single CPU? And what are the requirements for the CPU? Could we take any CPU (x86, ARM, ...)? It turns out that there are many options, but most of them have significant disadvantages.

The option with the least probability of failure is to purchase a standard Intel server CPU. These CPUs are recommended by Intel for usage with the Xeon Phi co-processor. There are some minimal requirements that need to be followed. A standard solution would contain two co-processors. The ratio of 2:1 sounds lovely, but is far away from the density we desire. Additionally to the requirement of using as many co-processors as possible we still need to make sure to be able to sustain high-speed network connections.

In the end we arrive at an architecture that looks as follows. We have 6 cards in the PCIe bus, with one containing the CPU and another one for the network connection(s). The remaining four slots are assigned to Xeon Phi co-processors.

The design and architecture of the CPU board has been outsourced to a specialized company in Japan. The only things they need to know are specifications for the board. What are its dimensions? How many PCIe lanes and what generation should it use / be compatible with? What processor should be integrated? What about the board management controller? What kind of peripherals have to be added?

Finally the finished card contains everything we care about. It even has a VGA connector, even though we won't have a monitor connected normally. But such little connectors are essential for debugging.

The CPU board is called Juno. It features a low-power CPU. The Intel Xeon E3-1230L v3 is a proper fit for our needs. It supports the setup we have in mind.

Speaking about debugging: The CPU card alone does not have the space to expose all debugging possibilities. Instead an array of slots is offered, which may be used in conjunction with the array of pins available on a subboard. This suboard contains yet another Ethernet and USB connector, as well as another array of slots. The latter can be used with UART. UART allows us to do many interesting things, e.g., flashing the BMC firmware or doing a BIOS update.

UART can also be used to connect to the BMC or receive its debugging output. If no network connection is available UART may be the only way of contacting the machine.

Internally everything depends on two things: Power and communication via the PCIe bus. Both of these tasks are managed by the midplane, which could be regarded as a mainboard. The design and construction of this component has been outsourced to a company in Italy. Especially the specification for implementing the PLX chip correctly is vast. There are many edge cases and a lot of knowledge around the PCIe bus system is required.

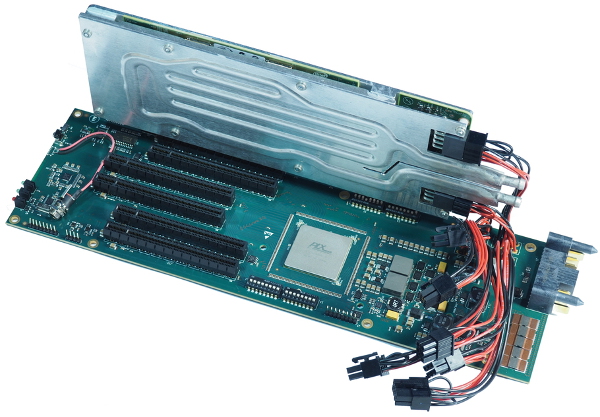

The PLX chip is the PEX 8796. It is a 96-lane, 24-port, PCIe Gen3 switch developed on 40 nm technology. It is therefore sufficient for our purposes. The following picture shows the midplane without any attached devices. The passive cooling body on top of the PCIe switch is not mounted in the final design.

The midplane is called Mars. It is definitely the longest component in a QPACE 2 node. It exposes basic information of the system via three LEDs. It contains the six PCIe slots. It features a hot swap controller to enable hot plugging capabilities. Among the most basic features of a hot swap controller are the abilities to control current and provide fault isolation.

The board also features an I2C expander and an I2C switch. This makes the overall design very easy to extend. Internally a BMC (Board Management Controller) is responsible for connecting to I2C devices. Therefore the BMC image needs to be customized by us. The BMC also exposes an IPMI interface allowing other devices to gather information about the status of the system.

A crucial task that has been set up for the BMC is the surveillance of the system's temperature. While we will later see that a whole monitoring infrastructure has been set up for the whole rack, the node needs to be able to detect anomalous continuous temperature spikes, which may require full node shutdown.

Similarly efficient water leakage sensors are integrated to prevent single leaks to be unspotted. An case of a local leak the nodes need to be shut down to prevent further damages from happening. Leakages should also be detectable by pressure loss in the cooling circuit.

The Intel Xeon Phi

The Intel Xeon Phi is the first publicly available product based on Intel's Many Integrated Cores architecture. It comes in several editions. For our purposes we decided to use the version with 61 cores, 16 GB of GDDR5 memory and 1.2 GHz. The exact version is 7120X. The X denotes that the product ships without any cooling body. The following picture shows an Intel Xeon Phi package with a chassis for active cooling.

We can call the Intel Xeon Phi a co-processor, an accelerator, a processor card, a KNC or just a MIC. Nevertheless, it should be noted that each term has a subtle different meaning. For instance an accelerator is possibly the broadest term. It could also mean a GPU. A MIC could also mean another generation of co-processor, e.g., a KNL (successor of the KNC, available soon) or a KNF (ancestor, we could name it a prototype, or beta version of the KNC).

Let's get into some details of the Xeon Phi. After all we need to be aware of all the little details of its architecture. It will be our main unit of work and our application(s) need to be perfectly adjusted to get the maximum number of operations from this device. Otherwise we lose efficiency, which is the last thing we want to reduce.

The following two images show the assembled KNC PCB from both sides. We start with the back.

The KNC contains a small flash unit, which can be used to change hardware settings, access the internals error buffer or do firmware upgrades. It is a more or less independent system. Therefore it needs an SMC unit. The SMC unit also manages various counters for the system. It aggregates and distributes the data received from the various sensors.

The board is so dense that its hard to figure out what important components are assigned to what side. For instance the GDDR memory chips are definitely placed on both sides. The form a ring around the die. This is also reflected by the internal wiring as we will see later.

The front also shows the same ring of GDDR.

There seems to be a lot more going on on the front. We have the two power connectors, a variety of temperature sensors (in total there are seven) and electronic components, such as voltage regulators. Most importantly we not only see the vias of the silicon, but also the chip itself. This is a massive one - and it carries 61 cores.

A single KNC may take up to 300 W of power during stress. In idle the power consumption may be as low as 50 W, but without any tricks we may end oscillate around 100 W. A neat extra is the blue status LED. It may be one of the most useless LEDs ever. In fact I've never received any useful information from it. If the KNC fails during boot, it blinks. If it succeeds, it blinks. Needless to say that the only condition for a working LED is a PCIe connection. Even if power is not connected the LED will blink from the current transmitted via the PCIe connectors.

Internally the cores of a KNC are connected with each other by a ring bus system. Each core has a shared L2 cache, which is connected to a distributed tag directory. The cache coherency protocol for the KNC cores is MESI. There are up to 6 memory controllers, which can be accessed via the ring bus as well. Finally the ring bus proxies the connection to the PCIe tree.

The following image illustrates the concept of the ring bus.

Even more important than understanding the communication of the cores with each other is knowledge about the core's internal architecture. Knowing the cache hierarchy and avoiding cache misses is crucial. Permanently using the floating point units instead of waiting for data delivery ensures high performance.

We care about two things: The bandwidth delivered by a component, measured in GB/s, and the latency of accessing the device - here we count the clock cycles. In general the observed behavior is similar to common knowledge. These are basically numbers every programmer should know.

Interestingly access to another core's L2 cache is a magnitude more expensive than reading from its own L2 cache. But this is still another magnitude cheaper than accessing data controlled by the memory controllers.

The next illustration gives us some accurate numbers to give us a better impression of the internal workings. We use 2 instead 4 threads, since only 2 threads are run within one cycle. Threads only operate every other cycle, effectively cutting the frequency in half for all four threads.

The quite low memory bandwidth is a big problem. There are some applications that are not really memory bound, but for our applications in Lattice QCD we will be definitely constraint by that. Once we reach the memory limit we are basically done with our optimizations. There is nothing more that could be improved. The next step would be search for better algorithms, which may lower the memory bandwidth requirements. This can be achieved by better data structures, more data reuse or more effective approximations to the problem that needs to be solved.

The modular design of the QPACE 2 makes it theoretically possible to exchange the KNC with, e.g., the KNL. The only practical requirement is that the PCIe chip and the host CPU are able to manage the new card. But besides that there are no other real limiting factors.

PCIe Communication

The CPU serves as the PCIe root complex. The co-processors as well as the Connect-IB card are PCIe endpoints. Peer-to-peer (P2P) communication between any pair of endpoints can take place via the switch. The reasoning behind this node design is that a high-performance network is typically quite expensive. A fat node with several processing elements and cheap internal communications (in this case over PCIe) has a smaller surface-to-volume ratio and thus requires less network bandwidth per floating-point performance. This lowers the relative cost of the network.

The number of KNCs and InfiniBand cards on the PCIe switch is determined by the number of lanes supported by commercially available switches and by the communication requirements within and outside of the node. We are using the largest available switch, which supports 96 lanes of PCIe Gen3. Each of the Intel Xeon Phis has a 16-lane Gen2 interface. This corresponds to a bandwidth of 8 GB/s. Both, the CPU and the Connect-IB card, have a 16-lane Gen3 interface, i.e., almost 16 GB/s each. The external InfiniBand bandwidth for two FDR ports is 13.6 GB/s. This balance of internal and external bandwidth is consistent with the communication requirements of our target applications.

The following image shows our PCIe test board. It is equipped with the PLX chip and contains PCIe slots, just like a normal mainboard does. Additionally it comes with plenty of jumpers to setup the bus according to our needs. Furthermore we have some debug information displayed via the integrated LCD display.

The PCIe bus is a crucial component in the whole design of the machine. The intra-node communication is done exclusively via the PCIe bus. It is therefore required to have the best possible signal quality. We did many measurements and ensured that the link training could be performed as good as possible. The signal quality is controlled via standard eye diagrams. Even though every PCIe lane should perform at its best, it was not possible to test every lane as extensive as desired. We had to extrapolate or come to conclusions with limited information. The result, however, is quite okay. In general we only see a few PCIe errors, if any. The few errors we sometimes detect are all correctable and do not affect the overall performance.

Network Architecture

As the principle working horse of our network architecture an InfiniBand network has been chosen. This solution can be certainly considered conservative. Among the top 500 supercomputers, InfiniBand is the interconnect technology in most installations. Ethernet is still quite popular, though. We can compare the 224 installations of InfiniBand with 100 using Gb/s Ethernet connections, and 88 using 10 Gb/s.

A proper network topology has to ensure that less cables (with fewer connectors) are needed, while minimizing the need for switches and increasing the directness of communication. The most naive, but expensive and unpractical way, would be to connect every node with every other node. Here, however, we require N - 1 ports on each node. Those ports need to be properly cabled. A lot of cables and a lot of ports, usually resulting in a lot of cards.

Therefore we need a smarter way to pursue our goals. A good way is by looking at the number of possible ports first. In our case the decision was between a single and a dual port solution. Two single port cards are not a proper solution, because such a solution would consume too many PCIe slots. Naturally an obvious solution is to connect nearest neighbors only.

A torus network describes such a mesh interconnect. We can arrange nodes in a rectilinear array of N > 1 dimensions, with processors connected to their nearest neighbors, and corresponding processors on opposite edges of the array connected. Here each node has 2N connections. Again we do not have enough ports available per node. The lowest number would be four. With two ports we can only create a ring bus.

In the end we decided that the possibly best option is to use a hyper-crossbar topology. This has been used before, e.g., by the Japanese collaboration CP-PACS. Essentially we calculate the number of switches by using two times the number of nodes (i.e., 2 ports per node) divided by the number of ports per switch. In our case we have 36 ports per switch, which leaves us with 4 switches. We use 32 of these 36 ports per switch for the nodes, with the additional ports being assigned to connect the machine to a storage system. A hyper-crossbar solution is 2-dimensional, which means we have 2 switches in x-direction and the same number of y-direction.

The previous image shows the hyper-crossbar topology in our scenario. The switches in x-direction are marked by the red rectangles, the switches in y-direction by the blue rectangles. Each node is represented by a black outlined ellipse. The assignment of the two ports are indicated by the bullets. The bullets are drawn with the respective color of their assigned switch.

For a hyper-crossbar we need a dual port connector. Another requirement is the support for FDR data rates. FDR stands for fourteen data rate. FDR enables A suitable candidate could be found with the Connect-IB card from Mellanox. The card is providing 56 Gb/s per port, reaching up to 112 Gb/s in total.

Additionally Connect-IB is also great for small messages. A single card can handle at least 130 × 106 messages per second. The latency is measured to be around a microsecond. For us the card is especially interesting due to CPU offload of transport operations. This is hardware enhanced transfer, or DMA. No CPU is needed to transport the bytes to the device. In the end we will benefit especially for our co-processor.

The following image shows a Connect-IB card with the passive cooling body installed. Also we still have the mounting panel attached.

There are other reasons for choosing the Connect-IB card. It is the fourth generation of IB cards from Mellanox. Furthermore it is highly power efficient. Practically a network with Connect-IB cards easily scales to tens-of-thousands of nodes. Scaling is yet another important property of modern HPC systems.

Rack Construction

Before the dimensions of a single node can be decided we should be sure what rack size we want or what our limits are. In our case we decided pretty early for a standardized 42U rack. This is the most common rack size in current data centers. The EIA (Electronic Industries Alliance) standard server rack (1U) is a 19 inch wide rack enclosure with rack mount rails which are 17 3/4 " (450.85 mm) apart and whose height is measured in 1.75" (44.45 mm) unit increments. Our rack is therefore roughly 2 m tall.

The rack design introduces a system of pipes and electrically conductive rails additionally to the standard rack. Each node is measured to be 3U in height. We can have 8 such nodes per level. Furthermore we need some space to distribute cables to satisfy network and power demands. Additional space for some management electronics is required as well. At most we can pack 64 nodes into a single rack.

The next image shows a CAD rendering of the rack's front bottom level. We only see a single node being mounted. The mounting rails are shown in pink. The power supply rails are drawn in dark gray and red. The water inlet and outlet per node is shown besides the green power supply.

The nodes are inserted and removed by using the lever. In the end a node is pulled to break out of the connection held by the quick couplings. The quick couplings will be explained later. Inserting a node is done by pushing it into the quick couplings.

The whole power distribution and power supply system had to be tested extensively. We know that up to 75 kW may be used, since we expect up to 1.2 kW per node (with 64 nodes). This is quite some load for such a system. The high density also comes with very high demands on terms of power per rack.

The connection to the electrically conductive rails is very important. We use massive copper to carry the current without any problems. The supply units are also connected to the rails via copper. The following picture shows the connection prior to our first testing.

It is quite difficult to simulate a proper load. How do you generate 75 kW? Nevertheless we found a suitable way to do some testing up to a few kW. The setup is sufficient to have unit tests for the PSUs, which will then scale without a problem.

Our test setup was quite spectacular. We used immersion heaters borrowed from electric kettles. We aggregated them to deliver the maximum power. The key question was if the connected PSUs can share the load. A single PSU may only take up to 2 kW. Together they should go way beyond those 2 kW. In total we can go up to 96 kW. We use 6 PSUs (with at most 12 kW) to power 8 nodes (requiring at most 9.6 kW). Therefore we have a 5 + 1 system, i.e., 5 PSUs required to work under extreme conditions, with a single PSU redundancy.

The grouping of PSUs to nodes forms a power domain. In total we have 8 such power domains. The power domains relate to the phase in the incoming current. Unrelated to the power domain is the power management. A PSU control board designed in Regensburg monitors and controls the PSUs via PMBus. We use a BeagleBone Black single-board computer that plugs into the master control board, which we named Heinzelmann. A single PSU control board services 16 PSUs. Therefore we require 3 control boards for the whole rack.

There have been other questions, of course, that have been subject for this or similar tests. In the end we concluded that the provided specification was not completely accurate, but we could work around the observed problems.

The top of the rack is reserved for the PSU devices. We have 24 PSUs on each side of the rack. Between the nodes (bottom) and the PSUs (top) we have additional space for auxiliary devices, such as Ethernet switches or the Heinzelmann components. All these components are - in contrast to the nodes - not water cooled. Most of them use active air cooling, some of them are satisfied with passive cooling.

The PSU components also require PDU devices. They are 1U in size and come in an unmanaged configuration. We choose to have 12 outlets following the IEC 60320 C13 standard. This is a polarized three pole plug. Our PDUs distribute 10 A per outlet in 3 phases.

A famous principle for the installation of water circuits is the Tichelmann principle. It states that paths need to be chosen in such a way, that the water pressure is equal for each point of interaction. In our case the point of interaction is the coupling to a node. Since paths are not equal in length, the Tichelmann principle is not chosen.

A possible way around is to design the pipes differently for the points of interaction that have a longer distance from the origin. Also we may artificially extend the path to the coupling of each node to be as long as the longest path. This all sounds hard to design, control and build. It is also quite expensive.

Since the pipes are quite large we estimated that the effect of water flow resistance is too small to have a negative influence in practice. We expect nearly the same flow on the longest vs the shortest path taken. The resistance within a node is much higher than the added resistance by traveling the longer path.

The following image shows the empty rack of the QPACE 2 system. The piping system has already been installed.

The previous image contained the electrically conductive rails, most of the power connectors and the mounting rails. The auxiliary devices, such as the management components, the InfiniBand and Ethernet switches, as well as cables and other wires are not assembled at this point.

Assembling the rack is practically staged as follows. We start with the interior. First all possible positions need to be adjusted and prepared. Then the InfiniBand switches need to be mounted. Power supply units need to be attached. The trays need to be inserted. Now cables are laid out. The should be labeled correctly. The last step is to insert the nodes.

Finally we have the fully assembled and also quite densely packed rack. The following image shows the front of the QPACE 2 rack. All nodes are available at this point in time. The cabling and the management is fully active. The LED at the bottom shows the water flow rate through the rack in liters per minute.

Overall the rack design of the QPACE 2 is not as innovative as the whole machine, but it is a solid construction that fulfills its promise. The reasons to neglect the Tichelmann principle have been justified. The levers to insert and remove nodes work as expected.

Monitoring and Maintenance

Another interesting part of the development of an HPC system is its monitoring system. Luckily we already had a scalable solution that could just be modified to include the QPACE 2 system.

A really good architecture for monitoring large computer systems centers around a scalable, distributed database system. We choose Cassandra. Cassandra makes it possible to have high frequency write operations (many small logs), with great read performance. As a drawback we are limited in modifications (not needed anyway) and we need intelligent partitioning.

The explicit logging and details about the database infrastructure will not be discussed in this article. Instead I want to walk us through a web application, which allows any user of the system to acquire information about its status. The data source of this web application is the Cassandra database. Complementary other data points, such as current ping information is used. All in all the web application is scalable as well.

As an example the following kiviat diagram is shown to observe the current (peak) temperatures of all nodes within the rack.

All temperature sensors are specified in a machine JSON file. The file has a structure that is similar to the following snippet. We specify basic information about the system, like name, year and the number of nodes. Additionally special queries and more are defined (not shown here). Finally an array with temperature sensors is provided.

The id given for each sensor maps to the column in the Cassandra database system. The name represents a description of the sensor that is shown on the webpage.

{

"qpace2": {

"name": "QPACE 2",

"year": 2015,

"nodes": 64,

"temperatures": [

{ "id": "cpinlet", "name": "Water inlet" },

{ "id": "cpoutlet", "name": "Water outlet" },

{ "id": "core_0", "name": "Intel E3 CPU Core 1" },

{ "id": "core_1", "name": "Intel E3 CPU Core 2" },

{ "id": "core_2", "name": "Intel E3 CPU Core 3" },

{ "id": "core_3", "name": "Intel E3 CPU Core 4" },

{ "id": "mic0_temp", "name": "Intel Xeon Phi 1" },

{ "id": "mic1_temp", "name": "Intel Xeon Phi 2" },

{ "id": "mic2_temp", "name": "Intel Xeon Phi 3" },

{ "id": "mic3_temp", "name": "Intel Xeon Phi 4" },

{ "id": "pex_temp", "name": "PLX PCIe Chip" },

{ "id": "ib_temp", "name": "Mellanox ConnectIB" }

]

},

}

Reading the peak temperatures of each node is achieved by taking the maximum of all current sensor readings. But the obtained view is only good to gain a good overview quickly. In the long run we require a more detailed plot for each node individually.

Here we use a classic scatter chart. We connect the dots to indicate some trends. The following picture shows a demo chart for some node. The covered timespan is the last day. We see the different levels of temperature for different components. The four co-processors are measured to have roughly the same temperature.

The CPU cores, as well as the water in- and outlet are among the lower temperatures. The PLX chip and the Mellanox Connect-IB card are slightly higher for idle processes. Nevertheless, they won't scale such as the Xeon Phi co-processors. Hence there are no potential problems coming up as a consequence from the given observation.

How is the web front-end build exactly? After all this is just a simple Node.js application. The main entry point is shown below. Most importantly it gets some controllers and wires them up to some URL. A configuration brings in some other useful settings, such as serving static files or the protocol (http or https) to use.

var express = require('express');

var readline = require('readline');

var settings = require('./settings');

var site = require('./site');

var server = require('./server');

var homeController = require('./controllers/home');

var machineController = require('./controllers/machine');

var app = express();

settings.directories.assets.forEach(function (directory) {

app.use(express.static(directory));

});

app.set('views', settings.directories.views);

app.set('view engine', settings.engine);

app.use('/', homeController(site));

app.use('/machine', machineController(site));

app.use(function(req, res, next) {

res.status(404).send(settings.messages.notfound);

});

var instance = server.create(settings, app, function () {

var address = server.address(instance)

console.log(settings.messages.start, address.full);

});

if (process.platform === 'win32') {

readline.createInterface({

input: process.stdin,

output: process.stdout

}).on(settings.cancel, function () {

process.emit(settings.cancel);

});

}

process.on(settings.cancel, function () {

console.log(settings.messages.exit)

process.exit();

});

We use https for our connections. The page does not contain any sensitive data and we do not have a signed certificate. So what's the purpose? Well, https does not harm and is just the wave of the future. We should all https everywhere. The server load is not really affected and the client can handle it anyway.

The agility of switching from http to https and vice versa is all provided in the server.js file included in the code above. Here we consider some options again. The settings are passed in to an exported create function, which setups and starts the server, listening at the appropriate port and using the selected protocol.

var fs = require('fs');

var scheme = 'http';

var secure = false;

var options = { };

function setup (ssl) {

secure = ssl && ssl.active;

scheme = secure ? 'https' : 'http';

if (secure) {

options.key = fs.readFileSync(ssl.key);

options.cert = fs.readFileSync(ssl.certificate);

}

}

function start (app) {

if (secure) {

return require(scheme).createServer(options, app);

} else {

return require(scheme).createServer(app);

}

}

module.exports = {

create: function (settings, app, cb) {

setup(settings.ssl);

return start(app).listen(settings.port, cb);

},

address: function (instance) {

var host = instance.address().address;

var port = instance.address().port;

return {

host: host,

port: port,

scheme: scheme,

full: [scheme, '://', host, ':', port].join('')

};

}

};

The single most important quantity for standard users of the system is the availability. Users have to be able to see what fraction of the machine is online and if there are resources to allocate. The availability is especially important for users who want to run large jobs. These users are particularly interested about the up-time of the machine.

There are many charts to illustrate the availability. The most direct one is a simple donut chart showing the ratio of nodes that are online against the ones that are offline. Charting is implemented by using the Chart.js library with some custom enhancements and further customization. Without the Chart.Scatter.js extension most of the plots wouldn't be so good / useful / fast. A good scatter plot is still the most natural to display.

Also for the availability we provide a scatter plot. The scatter plot only shows the availability and usage over time. There are many different time options. Most of them relate to some aggregation options set in the Cassandra database system.

Since our front-end web application follows the MVC pattern naturally, we need a proper design for the integrated controllers. We chose to export some constructor function, which will operate on a site object. The site contains useful information, such as the settings, or functions. As an example the site carries a special render function, which is used to render a view. The view is specified over its sitename. The sitename is mapped to the name of a view internally. Therefore we can change the names of files as much as we want to - there is only one change required.

Internally the sitemap is used for various things. As mentioned the view is selected over the sitemap's id. Also links are generated consistently using the sitemap. Details of this implementation remain disclosed, but what should be noted is that we can also use the sitemap to generate breadcrumbs, a navigation or any other hierarchical view of the website.

Without further ado the code for the HomeController. It has been stripped down to show only two pages. One is the landing page, which gets some data to fill blank spots and then transports the model with the data to the view. The second one is the imprint page, which is required according to German law. Here a list of maintainers is read from the site object. These sections are then transported via the model.

var express = require('express');

var router = express.Router();

module.exports = function (site) {

router.get('/', function (req, res) {

site.render(res, 'index', {

title: 'Overview',

machines: machines,

powerStations: powerStations,

frontends: frontends,

});

});

router.get('/imprint', function (req, res) {

var sections = Object.keys(site.maintainers).map(function (id) {

var maintainers = site.maintainers[id];

return {

id: id,

persons: maintainers,

};

});

site.render(res, 'imprint', {

title: 'Imprint',

sections: sections

});

});

return router;

};

The data for the plots is never aggregated when rendering. Instead placeholders are transported. All charts will be loaded in parallel by doing further AJAX requests. There are specialized API functions that will return JSON with the desired chart data.

The utilization shows the number of allocated nodes. An allocation is managed by the job submission queuing system. As we will see later, our choice is SLURM. Users should be aware of the system's current (and / or past) utilization. If large jobs are up for submission, we need to know what the mean time to job initialization will be. The graphs displayed on the webpage give users hints to help them picking a good job size or estimating the start time.

Again we use donut charts for the quick view.

The site builds the necessary queries for the Cassandra database by using a query builder. This is a very simply object that allows us to replace raw text CQL with objects and meaning. Instead of having the potential problem of a query failing (and returning nothing) we may have a bug in our code, which crashes the application. The big difference is that the latter may be detected much more easily, even automatically, and that we can be sure about the transported query's integrity. There is no such thing as CQL injection possible, if done right.

In our case we do not have to worry about CQL injection at all. Users cannot specify parameters, which will be used for the queries. Also the database is only read - the web application does not offer any possibility to insert or change data in Cassandra.

The following code shows the construct of a SelectBuilder. It is the only kind of builder that is exported. The code can be easily extended for other scenarios. The CQL escaping for fields and values is not shown for brevity.

var SelectBuilder = function (fields) {

this.conditions = [];

this.tables = [];

this.fields = fields;

this.filtering = false;

};

function conditionOperator (operator, condition) {

if (this.fields.indexOf(condition.field) === -1)

this.fields.push(condition.field);

return [

condition.field,

condition.value

].join(operator);

}

var conditionCreators = {

delta: function (condition) {

if (this.fields.indexOf(condition.field) === -1)

this.fields.push(condition.field);

return [

'token(',

condition.field,

') > token(',

Date.now().valueOf() - condition.value * 1000,

')'

].join('');

},

eq: function (condition) {

return conditionOperator.apply(this, ['=', condition]);

},

gt: function (condition) {

return conditionOperator.apply(this, ['>', condition]);

},

lt: function (condition) {

return conditionOperator.apply(this, ['<', condition]);

},

standard: function (condition) {

return condition.toString();

}

};

SelectBuilder.prototype.from = function () {

for (var i = 0; i < arguments.length; i++) {

var table;

if (typeof(arguments[i]) === 'string') {

table = arguments[i];

} else if (typeof(arguments[i]) === 'object') {

table = [arguments[i].keyspace, arguments[i].name].join('.');

} else {

continue;

}

this.tables.push(table);

}

return this;

};

SelectBuilder.prototype.where = function () {

for (var i = 0; i < arguments.length; i++) {

var condition;

if (typeof(arguments[i]) === 'string') {

condition = arguments[i];

} else if (typeof(arguments[i]) === 'object') {

var creator = conditionCreators[arguments[i].type] || conditionCreators.standard;

condition = creator.call(this, arguments[i]);

} else {

continue;

}

this.conditions.push(condition);

}

return this;

};

SelectBuilder.prototype.filter = function () {

this.filtering = true;

return this;

};

SelectBuilder.prototype.toString = function() {

return [

'SELECT',

this.fields.length > 0 ? this.fields.join(', ') : '*',

'FROM',

this.tables.join(', '),

'WHERE',

this.conditions.join(' AND '),

this.filtering ? 'ALLOW FILTERING' : '',

].join(' ');

};

var builder = {

select: function (fields) {

fields = fields || '*';

return new SelectBuilder(Array.isArray(fields) ? fields : [fields]);

},

};

module.exports = builder;

Of course such a SelectBuilder could be much more complex. The version shown here is only a very lightweight variant that can be used without much struggle. It provides most of the benefits that we can expect from a DSL for expressing CQL queries without using raw text or direct string manipulation.

Monitoring the power consumption may also be interesting. This is also a great indicator about the status of the machine. Is it running? How much is the load right now? Monitoring the power is also important for efficiency reasons. In idle we want to spent the least amount of energy possible. Under high stress we want to be as efficient as possible. Getting the most operations per energy is key.

We have several scatter plots to illustrate the power consumption over time. A possible variant for a specific power grid is shown below.

How are these charts generated? The client side just uses the Chart.js library as noted earlier. On the server-side we talk to an API, which eventually calls a method from the charts module. This module comes with some functions for each supported type of chart. For instance the scatter function generates the JSON output to generate a JSON chart on the client site. Therefore the view is independent on the specific type of chart. It will pick the right type of chart in response to the data.

The example code does two things. It iterates over the presented data and extracts the series information from it. Finally it creates an object that contains all information that are potentially needed or relevant for the client.

function withAlpha (color, alpha) {

var r = parseInt(color.substr(1, 2), 16);

var g = parseInt(color.substr(3, 2), 16);

var b = parseInt(color.substr(5, 2), 16);

return [

'rgba(',

[r, g, b, alpha].join(', '),

')'

].join('');

}

var charts = {

scatter: function (data) {

for (var i = data.length - 1; i >= 0; i--) {

var series = data[i];

series.strokeColor = withAlpha(series.pointColor, 0.2);

}

return {

data: data,

containsData: data.length > 0,

type: 'Scatter',

options: {

scaleType: 'date',

datasetStroke: true,

}

};

},

};

module.exports = charts;

The withAlpha function is a helper to change a standard color presented in hex notation to an RGBA color function string. It is definitely useful to, e.g., use an automatically defined lighter stroke color than the fill color.

In conclusion the monitoring system has a well established backend with useful statistic collection. It does some important things autonomously and sends e-mails in case of warnings. In emergency scenarios it might initiate a shutdown sequence. The web interface gives users and administrators the possibility to have a quick glance at the most important data points. The web application is easy to maintain and extend. It has been designed for desktop and smartphone form factors.

Hot Water Cooling

Water cooling is the de-facto standard for most HPC systems. There are reasons to use water instead of air. The specific heat capacity of water is many times larger than the specific heat capacity of air. Even more importantly, water is three magnitudes more dense than air. Therefore we have a much larger efficiency. Operating large fans just to amplify some air flow is probably one of the least efficient processes at all.

Why is hot water cooling more efficient than standard water cooling? Well, standard water cooling requires cool water. Cooling water is a process that quite energy hungry. If we could save that energy we would already gain something. And yes, hot water cooling mimics how blood flows through our body. The process does not require any cooling, but still it has the ability to cool / keep a certain temperature. Hot water cooling is most efficient if we can operate on a small ΔT, which is difference between the temperature of the water and the temperature of the component.

Hot water cooling does not require any special cooling devices. No chillers are needed. We only need a pump to drive the flow in the water circuit. There are certain requirements, because hot water cooling lives from turbulent flow. A turbulent flow is random and unorganized. It will self-interact and provide a great basis for mixing and heat conductivity. On the contrast a lamar flow will tend to separate and run smoothly along the boundary layers. Hence there won't be much heat transfer and if so, then only certain paths will be taken. The mixing character is not apparent.

The design of our hot water cooling infrastructure had to split up in several pieces. We have to:

- Get a proper cooling circuit

- Decide for the right pump (how much water? desired flow?)

- Decide for the right tubes

- Design the cooling packages for the components

- Design the rack

- Decide what kind of connections to use between rack-cooling circuit, node-rack and node-component

The discussion about the rack design has already taken place. After everything has been decided we needed to run some tests. We used a simple table water cooler to see if the cooling package for a co-processor was working properly.

The portable table cooler provides us with a fully operational water cooling circuit. We have a pump that manages to generate a flow of 6.8 liters per minute. The table cooler is operated with current from the standard power plug. It can be filled with up to 2.5 liters of water. In total the table cooler can deal with 2700 W of cooling capacity.

In our setup we connected the cooling packages (also called cold plate) with the table cooler via some transparent tubes. The tubes used for this test are not the ones we wanted to use later. Also the tubes have been connected directly to the table cooler. In the process we still need to decide on some special distribution / aggregation units.

The next picture shows the test of the cold plate for the co-processor. We use a standard Intel Server Board (S1200V3RP). This is certainly good enough to test a single KNC without encountering incompatibilities, e.g., with the host CPU or the chipset. We care about the ΔT in this test. It is measured via the table cooler and the peak temperature of the co-processor. The latter can be read out using the MPSS software stack from Intel.

The real deal is of course much larger than just a single table cooler. We use a large pump in conjunction with some heat exchanger and a sophisticated filtering system. One of the major issues with water cooling is the potential threat of bacteria and leakages. While a leak is destructive for both, components and infrastructure, a growing number of bacteria has the ability to reduce cooling efficiency and congest paths in our cold plates.

A good filter is therefore necessary, but cannot guarantee to prevent bacteria growth. Additionally all our tubes are completely opaque. We don't want to encourage breeding with external energy. But the most important action against bacteria is the usage of special biocide in the cooling water.

The following picture shows how the underfloor wiring installation below the computing center looks like.

As already explained the cooling circuit is only the beginning. Much more crucial are the right design choices for the cold plates and connectors. We need to take great care about possible leakages. The whole system should be as robust, efficient and maintainable as possible. Also we do need some flexibility and there are limits in our budget. What can we do?

Obviously we cannot accept fixed pipes. We need some tubes inside the nodes. Otherwise we do not have some tolerance for the components inside. A fixed tube requires a very fixed chassis. Our chassis has been build with modularity and maintainability in mind. There may be a few millimeters here and there that are out of the specification scope.

Let's start the discussion with the introduction of the right connectors.

Quick Couplings and Manifolds

The connection of the nodes to the rack is done via quick couplings. Quick couplings offer a high quality mechanism for perfectly controllable transmission of water current. If not connected a quick coupling seals perfectly. There is no leakage. If plugged in a well-tuned quick coupling connection works binary. Either the connection is alright and we observe transmission with the maximum flow possible, or we do not see any transmission at all (the barrier is still sealed).

A possible quick coupling connector is shown below. We choose a different model, but the main principles and design characteristics remain unchanged.

The quick couplings need to be pulled back to release a mounted connector. This means we need another mechanism in our case. The simplest solution was to modify the quick couplings head, such that it can always release the mounted connector if enough force is being used. The disadvantage of this method is that a partial mounting is now possible. Partial mounting means that only a fraction of the throughput is flowing through the attached connector. We have practically broken the binary behavior.

The two connectors attached to the quick coupling are the ones from the rack and a node. The node's connector is bridging the water's way to a distribution unit, called a manifold. The manifold can also work as an aggregation unit, which is the case for other connector (outlet). The job of the inlet's manifold is to distribute the water from a single pipe to six tubes.

The six tubes end up in six cold plates. Here we have:

- Four Intel Xeon Phi cold plates

- A cold plate for cooling the CPU board

- A cold plate to cool the PLX chip and the InfiniBand card

Especially interesting is the choice of clamps to attach the tubes to the manifold (and cold plates). It turns out that there is actually an optimum choice. All other choices may either be problematic right away, or have properties that may result in leakages over time (or under certain circumstances).

The following picture shows a manifold attached to a node with transparent tubes. The tubes and the clamps are only for testing purposes. They are not used in production.

Choosing the right tubes and clamps is probably one of the most important decisions in terms of preventing leakages. If we choose the wrong tubes we could amplify bacteria growth, encounter problems during the mounting process or simply have a bad throughput. The clamps may also be the origin of headaches. A wrong decision here gives us a fragile solution that won't boost our confidence. The best choice is to use clamps that fit perfectly for the diameter of the tubes. The only ones applying to that criterion are clamps, which have a special trigger that requires a special tool. In our case the clamps come from a company called Oetiker.

These ear clamps (series PG 167) are also used in other supercomputers, such as the SuperMUC. In our mounting process we place an additional stub on the tube. The stubs are processed at a temp of 800°C. The stub is then laid on the tube and heated together with an hot-air-gun (at 100 °C). As a result the tubes are effectively even thicker. Their outer wall strength has additionally increased. Consequently the clamps fit even better than they would have without the stubs.

Finally we also have to talk about the cold plates. A good representative is the cold plate for the Intel Xeon Phi. It is not only the cold plate that has to show the best thermal performance, but also the one that is produced most often.

Roll-Bond Plates and Interposers

The quick couplings and manifolds just provide the connection and distribution units. The key question now is: How are the components cooled exactly? As already touched we use a special cold plate to transfer the heat from the device to the flowing water. The cold plate consists of three parts. A backside (chassis), a connector (interposer) and a pathway (roll-bond). The backside is not very interesting. It may transfer some heat to the front, but its main purpose is to fix the front, such that it is attached in the right position.

The interposer is the middle part. It is positioned between the roll-bond and the device. One of the requirements for the interposer is that it is flexible enough to adjust for possible tolerances on the device and solid enough to have good contact for maximum heat conductivity. The interposer features some nifty features, such as a cavity located at the die. The gap is then filled with a massive copper block. The idea here is to have something with higher heat conductivity than aluminum. The main reason is to amplify heat transfer in the critical regions. The copper block has to be a little bit smaller than the available space. That way we prepare for the different thermal expansion.

Finally we have the roll-bond plate. It is attached to the interposer via some glue, which is discussed in the next section. The roll-bond plate has been altered to provide a kind of tube within the plate. This tube follows a certain path, which has been chosen to maximize the possible heat transfer from the interposer to the water.

An overview over the previously named components is presented in the image below.

The image shows the chassis (top), the roll-bond (right), the inserted copper block (left bottom) and the interposer (bottom). All cooling components are centered around an Intel Xeon Phi co-processor board.

The yellow stripes in the image are thermal interface materials (TIMs). TIMs are crucial components of advanced high density electronic packaging and are necessary for the heat dissipation which is required to prevent the failure of electronic components due to overheating. The conventional TIMs we use are manufactured by introducing highly thermally conductive fillers, like metal or metal oxide microparticles, into the polymer matrix. We need them to ensure good heat transfer while providing the sufficient insulation.

The design of the roll-bond has been done in such a way to ensure turbulent water already arising at our flow rates. Furthermore the heat transfer area had to be maximized without reducing the effective water flow. Finally the cold plate has to be reliable. Any leak would be a disaster.

Tubes, Glue and Thermal Paste

Choosing the right tubes seems like a trivial issue in the beginning. One of the problems that can arise with water cooling is leakage due to long-term erosion effects on the tubes. Other problems include increased maintenance costs and the already mentioned threat of bacteria. Most of those problems can be alleviated using the right tubes. We demand several properties for our tubes. They should have a diameter around 10.5 mm. They have to be completely opaque. Finally they should be as soft as possible. Stiff tubes make maintenance much harder - literally.

We've chosen ethylene propylene diene monomer (EPDM) tubes. These tubes are quite soft and can be operated in a wide temperature range, starting at -50 °C and going up to 150 °C. The high tensile failure stress of 25 MPa makes it also a good candidate for our purposes. Also the tube is good enough to withstand our requirement of 10 bar pressure.

The following image shows a typical EPDM tube.

For the thermal paste we demand the highest possible thermal conductance without being electrically conductive. In practice this is hard to achieve. Electron mobility is the reason for an electric conductor and also a good mechanism for heat transportation. Another mechanism, however, can be found with lattice vibrations. Here phonons are the carriers.

We found a couple of thermal pastes that satisfy our criteria. In order to find out what is the best choice in terms of the shown thermal performance we prepared some samples. Each sample was then benchmarked using a run of Burn-MIC, a custom application that nearly drives the Xeon Phi to its maximum temperature. The maximum temperature is then used to create the following distribution.

We see that PK1 from Prolimatech is certainly the best choice. It is not the most expensive one here, but it is also certainly not cheap. For 30 g we have to pay 30 &eur;. Some of the other choices are also not bad. the cheapest one, KP98 from Keratherm, is still an adequate choice. We were disappointed by the most expensive one, WLPK from Fischer. However, even though the WLPK did not show an excellent performance here, this may be due to its more liquid-ish form as compared to the others. In our setup we therefore end up with a contact area that is not ideal.

Now that our system is cooled and its design is finished, we need to take care about the software side. HPC does not require huge software stacks, but just the right software. We want to be as close to the metal as possible, so any overhead is usually unwanted. Still, we need some compatibility, hence we will see that there are many standard tools being used in the QPACE 2 system.

The Software Stack

Choosing the right software stack is of important. I guess there is no question about that. What needs to be evaluated is what kind of standard software is expected by the users of the system. We have applications in the science area, especially particle physics simulations in the field of Lattice QCD. It is therefore crucial to provide the most basic libraries, which are used by most applications. Also some standard software has to be available. And even more important, it has to be optimized for our system. Finally we also have to provide tools and software packages, which are important to get work done on the system.

Like most systems in the Top 500 we use Linux as our operating system. There are many reasons for this choice. But whatever our reasons regarding the system itself are, it also makes sense from the perspective of our system's users. Linux is the standard operating system for most physicists and embraces command line tools, which are super useful for HPC applications. Also job submission systems and evaluation tools are best used in conjunction with a Linux based operating system.

The following subsections discuss topics such as our operating system and its distribution, our choice of compilers, as well as special firmware and communication libraries. We will discuss some best practices briefly. Let's start with the QPACE 2 operating system.

Operating System and Provisioning

Every node does not have a single operating system. Instead every node runs multiple operating systems. One is the OS running on the processor of the CPU board. This is the main operating system, even though it is not the first one to be started. The first one is the operating system embedded in U-Boot. U-Boot is running on the BMC, before the BMC's real operating system can be started. It is an OS booter, so to speak. Finally each co-processor has its own operating system as well.

The BMC on the CPU card is running an embedded Linux version and boots from flash memory as soon as power is turned on. It supports the typical functionalities of a board management controller, e.g., it can hold the other devices in the node in reset or release them. Additionally it can monitor voltages as well as current and temperature sensors and act accordingly. As an example an emergency shutdown could be started in severe cases. It can also access the registers in the PCIe switch via an I2C bus.

Our nodes are disk-less. Once the CPU is released from reset it PXE-boots a minimal Linux image over the Ethernet network. The full Linux operating system (currently CentOS 7.0) uses an NFS-mounted root file system. The KNCs are booted and controlled by the CPU using Intel's KNC software stack MPSS. The KNCs support the Lustre file system of our main storage system, which is accessed over InfiniBand. Also the HDF5 file system has to be supported for our use-case.

A variety of system monitoring tools are running either on the BMC or on the CPU, which, for example, regularly checks the temperatures of all major devices (KNCs, CPU, IB HCA, PCIe switch) as well as various error counters (ECC, PCIe, InfiniBand). A front-end server is used to NFS-export the operating system. The front-end server is also used to communicate with the nodes and monitor them. Furthermore the system makes it possible to log in to the machine, and to control the batch queues.

Compilers and Tools

Choosing the right compiler is a big deal in HPC. Sometimes months of optimization are worthless if we do not spent days evaluating available compilers and their compilation flags. The best possible choice can make a huge difference. Ideally we benefit without any code adjustments at all. Even though the best compiler is usually tied to the available hardware, there may be exclusions.

In our case we do not have much choice actually. There are not many compilers that can compile for the KNC. Even though the co-processor is said to be compatible with x86, it is not on a binary level. The first consequence is that we need to recompile our applications for the Intel Xeon Phi. The second outcome of this odd incompatibility is that a special compiler is required. There is a version of the GCC compiler that is able to produce binaries for the Xeon Phi. This version is used to produce kernel modules for the KNCs operating system. However, this version does not know about any optimizations, in particular the ones regarding SIMD, and is therefore a really bad choice for HPC.

The obvious solution is to use the Intel compiler. In some way that makes the Intel Xeon Phi even worse. While the whole CUDA stack can be obtained without any costs, licenses for the Intel compiler are quite expensive. Considering that the available KNC products are all very costly, it is a shame to be still left without the right software to write applications for it. Besides this very annoying model, which should not be supported at all by anyone, we can definitely say that the Intel compiler is delivering great performance.

In addition to the Intel compiler we also get some fairly useful tools form Intel, most. Most of these tools come with Intel's Parallel Studio, which is Intel's IDE. Here we also get the infamous VTune. Furthermore we get some performance counters, debuggers, a lot of analyzers, Intel MPI (with some versions, e.g., 5.0.3) for distributed computing, the whole MPSS software stack (including hardware profilers, sensor applications and more) and many more goodies. For us the command line tools are still the way to do things. A crucial (and very elementary) task is therefore to figure out the best parameters for some of these tools. As already explained the optimum compiler flags need to be determined. Also the Intel MPI runtime needs to be carefully examined. We want the best possible configuration.

The Intel compiler gives us the freedom not only to produce code compatible for the KNC, but also to leverage the three different programming modes:

- Direct (loading and execution is performed on the accelerator)

- Offload (loading the data from the host, execution on the KNC)

- Native (loading the app from the host, rest on the co-processor)

While the first and third mode are similar in terms of compilation, the second one if quite different. Here we produce a binary that has to be run from the host, which contains offloading sections that are run by spawning a process on the co-processor. The process on the co-processor is delivered by a subset of the original binary, which has been produced using a different assembler. Therefore our original binary was actually a mixture of host and MIC compatible code. Additionally this binary contained instructions to communicate with the co-processor(s).

The native execution requires a tool called micnativeloadex, which also comes with the MPSS software stack.

So what compilation flags should we use? Turns out there are many and to complicate things even more - they also depend on our application. Even though -O3 is the highest optimization level, we might sometimes experience strange results. It is therefore considered unreliable. In most cases -O2 is already sufficient. Nevertheless, we should always have a look at performance and reliability before picking one or the other. For targeting the MIC architecture directly we need the -mmic machine specifier.

There are other interesting flags. Just to list them here briefly:

-no-prec-div Division optimization, however, with loss of accuracy.-ansi-alias Big performance improvements possible, if the program adheres to ISO C Standard alias ability rules.-ipo The interprocedural optimization works between files to perform optimizations. This will definitely increase the required compilation time.

Another possibility that has not been listed previously is the ability for Profile-Guided Optimization (PGO). PGO will improve the performance by reducing branch predictions, shrinking code size and therefore eliminating most instruction cache problems. For an effective usage we need to do some sampling beforehand. We start by compiling our programing with the -prof-gen and the -prof-dir=p flags, where p is the path to the profiler that will be generated. After running the program we will find the results of the sampling in the path that has been specified previously. Finally we can compile our application again, this time with -prof-use instead of the -prof-gen flag.

Compilers are not everything. We also need the right frameworks. Otherwise we have to write them ourselves, which may be tedious and result in a less general, less portable and less maintained solution. The following libraries or frameworks are interesting for our purposes.

Some of these frameworks exclude each other, at least partially. For instance we cannot use the Cilk Plus threading capabilities together with OpenMP. The reason is that both come with a runtime to manage a threadpool, which contains (by default) as many SMT threads as possible. For our co-processor we would have 244 or 240 (with / without the core reserved for the OS). Here we have a classical resource limitation, where both frameworks fight for the available resources. That is certainly not beneficial.

We do not use OpenACC, even though it would certainly be an interesting option for writing portable code that may run on co-processors, as well as on GPUs. The Intel compiler comes with its own set of special pragma instructions, which trigger offloading. Additionally we may want to use compiler intrinsics. The use of compiler intrinsics may be done in a quasi-portable way. An example implementation is available with the Vc library. Vc gives us a general data abstraction combined with C++ operator overloading, that makes SIMD not only portable, but also efficient to program.

In the end we remain with a mostly classical choice. SIMD will either be done by using intrinsics (not very portable) or Cilk Plus (somewhat portable, very programmer friendly). Multi-threading will be laid in the hands of OpenMP. If we need offloading we will use the Intel compiler intrinsics. We may also want to use OpenMP, as an initial set of methods for offloading has been added in the latest version. Our MPI framework is also provided by Intel, even though alternative options, e.g., Open MPI, should certainly be evaluated.

Another interesting software topic is the used firmware and driver stack.

Firmware and Drivers

Even though our hardware stack consists nearly exclusively of commercially available (standard, at least for HPC) components, we use them in a non-standard way. Special firmware is required for these components:

- The complex programmable logic device (CPLD) used as a bootloader

- The microcontroller running the BMC

- The BIOS

- The PLX chip

- The Intel Xeon Phi

- The Mellanox Connect-IB card

The latter two also demand some drivers for communication. The driver and firmware version have to match usually. Additionally we need drivers for connecting to our I/O system (Lustre) and possibly for reading values from the PLX chip. The PLX chip is quite interesting for checking the signal quality or detecting errors in general.

Going away from the nodes we need drivers to access connectors available on the power control board, which is wired to a BeagleBone Black (BBB). Most of these drivers need to be connected to our Cassandra system. Therefore they either need to communicate with some kind of available web API or they need access to Cassandra directly.

The communication with devices via I2C or GPIO is also essential for the BMC. Here we needed to provide our own system, which contains a set of helpers and utility applications. These applications can be as simple as enabling or disabling connected LEDs, but they can also be as complicated as talking to a hot-swap controller.

The custom drivers already kick in during the initial booting of a node. We set some location pins, which can be read out via the BMC. When a node boots, we read out these pins and compare them with a global setting. If the pins are known we continue, otherwise we note assign the right IP address to the given location pin and MAC address. Now we have to restart the node.

The whole process can be sketched with a little flow chart.

Overall the driver and firmware software has been designed to allow any possible form of communication to happen with maximum performance and the least error rate. This also motivated us to create custom communication libraries.

Communication Libraries

Communication is important in the field of HPC. Even the simplest kind of programs are distributed. The standard framework to use is MPI. Since the classic version of Moore's law has come to an end we also need to think about simultaneous multi-threading (SMT). This is usually handled by OpenMP. With GPUs and co-processors we also may want to think about offloading. Finally we have quite powerful cores, which implement the basics of vector-machines most popular the 70 s. The SIMD capabilities also need to be used. At this point we have 4 levels (distribute, offload, multi-thread, vectorize). Also these levels may be different for different nodes. Heterogeneous computing is coming.

We need to care a lot about communication between our nodes. The communication may be over PCIe, or via the InfiniBand network. It may also occur between workers on the same co-processor. Obviously it is important to distinguish between the different connections. For instance if two co-processors are in the same node we should ensure they communicate with each other more often than with other co-processors, which are not in the same node.

Additionally we need to use the dual-port of the Connect-IB card very efficiently. We therefore concluded that it is definitely required to provide our own MPI wrapper (over, e.g., Intel MPI), which uses IBverbs directly. This has been supported by statements from Intel. In the release notes of MPSS 3.2 there is a comment that,

libibumad not avail on mic.

Status: wontfix

In principle MPI implementations can take topologies (such as our hyper-crossbar topology using 2 ports per node) into account. However, this usually requires libibumad. Since this library is not available for the KNC, we better provide our own implementation.

The library would not only be aware of our topology, but would use it in the most efficient manner with the least possible overhead. It is definitely good practice to have a really efficient allreduce routine. Such a routine is only possible with the best possible topology information.

Effective synchronization methods are also important. Initially we should try to avoid synchronization as best as we can. But sometimes there are not robust or reliable ways to come up with a lock-free alternative to our current algorithm. This is the point, where we demand synchronization with the least possible overhead. For instance in MPI calls we prefer asynchronous send operations and possibly synchronous receive. However, depending on the case we might have optimized our algorithm in such a way that we can ensure a working application without requiring any wait or lock operations.

Before we should start to think about optimizing for a specific architecture, or other special implementation optimizations, we should find the best possible algorithm. An optimal algorithm cannot be beaten by smart implementations in general. In a very special scenario the machine-specific implementation using a worse algorithm may do better, but the effort and the scalability is certainly limited. Also such a highly specific optimization is not very portable.

We definitely want to do both - using the best algorithm and then squeeze out the last possible optimizations by taking care of machine specific properties. A good example is our implementation of a synchronization barrier. Naively we can just use a combination of mutexes to fulfill a full barrier. But in general a mutex is a very slow synchronization mechanism. There are multiple types of barriers. We have a centralized barrier, which scales linearly. A centralized barrier may be good for a few threads, if all share the same processing unit, but is definitely slow for over a hundred threads distributed over more than fifty cores.

There are two more effective kinds of barriers. A dissemination barrier (sometimes called butterfly barrier) groups threads into different groups, preventing all-to-all communication. Similarly a tournament (or tree) barrier forms groups of two with a tree like structure. The general idea is illustrated in the next image. We have two stages, play and release. In the first stage the tournament is played, where every contestant (thread) has to wait for its opponent to arrive before possibly advancing to the next round. The "loser" of each round has to wait until it has been released. The winner needs to release the loser later. Only the champion of the tournament switches the tournament stage from play to release.

As a starting point we may implement the algorithm as simple as possible. Hence no device specific optimizations. No artificial padding or special instructions. We create a structure that embodies the specific information for the barrier. The barrier has to carry information regarding each round. Possible pairings and upcoming result. The whole tournament is essentially pre-determined. The result can take 5 different states: winner, loser, champion, dropout and bye. The first two states are pretty obvious. A champion is a winner that won't advance, but rather triggers the state change. It could be seen as a winner with additional responsibilities. Dropout is a placeholder for a non-existing position. Similarly bye: It means that the winner has not to notify (release) a potential loser, as there is none.