Table Of Contents

- Introduction

- Background

- Architecture and Parsing

- Tokenization and Parsing

- Tree Walkers

- Virtual Machine

- API and Extensibility

- Type System

- Exposing an API

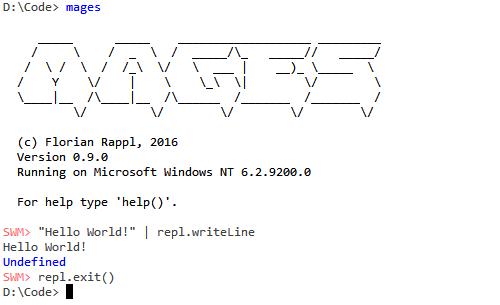

- Interaction

- Performance

- Key Aspects

- Performance Evaluation

- FAQ

- Using the code

- Conclusion

- History

Introduction

In the recent years a new wave of programming languages emerged. Some of them try to be general purpose system programming languages, e.g., Rust, some of them want to be low level for distributed computing, e.g., Go, others try to be perfect for productivity, e.g., Swift, and finally there are also languages for scientific computing like Julia. It is quite interesting to see what concepts they bring to the table and how they want to become popular.

This wave of programming languages is also heavily influenced by the trend towards functional programming. Classical object-oriented programming seems to be ready to take the next evolutionary step. The solid principles force us to follow this trend. The time seems right to do something different. As .NET developers we see this by the rising polarity of F# and the features introduced in C# 6 and especially the ones about to come in C# 7.

So what is this article about? I want to present you a new language that I designed - it's no revolutionary language and nothing that will become mainstream. However, it's something that may be very handy and that could solve one or the other problem you may face today or in the future. This article is about MAGES, a fast lightweight interpreted scripting language that interoperates seamlessly with the .NET Framework. It can be used in most codes, may it be Unity, Xamarin, or the standard framework.

Background

MAGES is a recursive acronym and stands for "MAGES: Another Generalized Expression Simplifier". It is the official successor to YAMP, which was a similar project that was highly successful (see Sumerics). While YAMP was mostly a toy project with an experimental design, MAGES is a completely different beast. It comes with a strict separation of the whole parser pipeline: Lexical analysis, syntax analysis, semantic analysis, and code generation. It used a high-level virtual machine to interpret code in a linear manner.

The primary goals for MAGES are performance, usability, and extensibility. This is mostly achieved by using standard .NET types instead of more convenient wrappers. We will see that the interop between .NET and MAGES is straightforward and easy to manage. This, in turn, enables many scenarios and allows users of the library to quickly integrate it. Essentially, one only needs to declare what elements to open and that's it.

The language itself is not revolutionary. Don't expect too much. It contains some neat features and should be pretty readable, but its main concern was to have something that can be used without requiring much studying. The idea is that most people with some experience in either C, Java, C#, or JavaScript can use the language. The language itself won't be the focus of this article, but we'll see some of its features along the way.

Architecture and Parsing

MAGES has been designed as a library. It's a small core that can be grabbed, e.g., from Nuget. The library itself contains everything that's needed for evaluating, inspecting, or analyzing MAGES code. The evaluation pipeline can be broken down into the following - completely accessible - components:

- Scanner for the source inspection

- Tokenizer for the lexical analysis

- Parser for the syntactic analysis

- AST walkers for detailed inspection and code generation

- A virtual machine with high-level operations

- A runtime system with operators and functions

The abstract syntax tree (AST) walkers are quite important. They are used to retrieve symbol information, generate the operations for the virtual machine, validate the full code, or provide auto-complete information.

Tokenization and Parsing

The whole parsing model follows the traditional pipeline character. An IScanner gives us characters that can be used in an ITokenizer. The resulting stream of tokens (IToken) is then passed to the IParser.

The interfaces are usually quite lightweight. Consider the whole parser:

public interface IParser

{

IExpression ParseExpression(IEnumerator<IToken> tokens);

IStatement ParseStatement(IEnumerator<IToken> tokens);

List<IStatement> ParseStatements(IEnumerator<IToken> tokens);

}

The scanner is a little bit more complete:

public interface IScanner : IDisposable

{

Int32 Current { get; }

TextPosition Position { get; }

Boolean MoveNext();

Boolean MoveBack();

}

In contrast, the tokenizer and the tokens are rather simple:

public interface ITokenizer

{

IToken Next(IScanner scanner);

}

public interface IToken : ITextRange

{

TokenType Type { get; }

String Payload { get; }

}

A token is essentially mainly given by a type, however, some tokens may also be delivered with a payload. For instance, a string literal also comes with the string its defining. The ITextRange interface only declares that objects need to declare a start and end position. This is true for tokens, as they live within certain parts of the text. The text position can be retrieved from the scanner, which has the Position property. The ITokenizer is responsible for collecting and assigning the right text positions.

In MAGES there are four implementations of the tokenizer interface. This may seem like a rather strange realization, however, we will see that this gives us some advantages.

The integrated implementations are:

- A general version for simple tokens

- A version to parse number literals

- One to parse all kinds of comments

- Another one to parse string literals

Arguably, there is a fifth one that is capable of tokenizing a string literal. The reason to omit it in the list above is redundancy. This variant is very similar to the ordinary string tokenizer. It is only sensitive to curly brackets, which are then scanned by the version for simple tokens. The tokenizer implementation is therefore a composition of existing realizations.

Let's have a look at the most basic implementation:

sealed class GeneralTokenizer : ITokenizer

{

private readonly ITokenizer _number;

private readonly ITokenizer _string;

private readonly ITokenizer _comment;

private readonly ITokenizer _interpolated;

public GeneralTokenizer(ITokenizer numberTokenizer, ITokenizer stringTokenizer, ITokenizer commentTokenizer)

{

_number = numberTokenizer;

_string = stringTokenizer;

_comment = commentTokenizer;

_interpolated = new InterpolationTokenizer(this);

}

public IToken Next(IScanner scanner)

{

if (scanner.MoveNext())

{

var current = scanner.Current;

if (current.IsSpaceCharacter())

{

return new CharacterToken(TokenType.Space, current, scanner.Position);

}

else if (current.IsNameStart())

{

return ScanName(scanner);

}

else if (current.IsDigit())

{

return _number.Next(scanner);

}

else

{

return ScanSymbol(scanner);

}

}

return new EndToken(scanner.Position);

}

private IToken ScanSymbol(IScanner scanner)

{

var start = scanner.Position;

switch (scanner.Current)

{

case CharacterTable.FullStop:

return _number.Next(scanner);

case CharacterTable.Comma:

return new CharacterToken(TokenType.Comma, CharacterTable.Comma, start);

}

return new CharacterToken(TokenType.Unknown, scanner.Current, start);

}

private static IToken ScanName(IScanner scanner)

{

var position = scanner.Position;

var sb = StringBuilderPool.Pull();

var current = scanner.Current;

var canContinue = true;

do

{

sb.Append(Char.ConvertFromUtf32(current));

if (!scanner.MoveNext())

{

canContinue = false;

break;

}

current = scanner.Current;

}

while (current.IsName());

if (canContinue)

{

scanner.MoveBack();

}

var name = sb.Stringify();

var type = Keywords.IsKeyword(name) ? TokenType.Keyword : TokenType.Identifier;

return new IdentToken(type, name, position, scanner.Position);

}

}

We can see that the tokenization process is rather straightforward. We use the IScanner instance to get some characters and make some decision based on it. Then we may enter a kind of special state (e.g., ScanName) to continue scanning with the previous information. Otherwise, we just emit a character token.

The stream of tokens is then passed into the parser, which is responsible for the syntax analysis. The result of this step is a tree (i.e., not a 1D stream), which is called the abstract syntax tree or short AST. The AST is the most important structure for any compiler.

The parser of MAGES is essentially a so-called Pratt parser. A Pratt parser is an improved recursive descent parser that associates semantics with tokens instead of grammar rules. This is ideal in our case, as we start with a stream of tokens. The upside of a Pratt parser is the maintainability and readability. The downside is the performance loss due to extensive recursive function calls. This is the only decision in MAGES against performance. The performance gain due to using another kind of implementation does not outweight the additional complexity. Therefore, we accept the (small) performance loss by the (significant) readability gain.

Let's see how such a Pratt parser may look like for parsing an expression. We omit the auxiliary functions and show only the main path up to the invalid expression.

sealed class ExpressionParser : IParser

{

private readonly AbstractScopeStack _scopes;

public ExpressionParser()

{

var root = new AbstractScope(null);

_scopes = new AbstractScopeStack(root);

}

public IExpression ParseExpression(IEnumerator<IToken> tokens)

{

var expr = ParseAssignment(tokens.NextNonIgnorable());

if (tokens.Current.Type != TokenType.End)

{

var invalid = ParseInvalid(ErrorCode.InvalidSymbol, tokens);

expr = new BinaryExpression.Multiply(expr, invalid);

}

return expr;

}

private IExpression ParseAssignment(IEnumerator<IToken> tokens)

{

var x = ParseRange(tokens);

var token = tokens.Current;

var mode = token.Type;

if (mode == TokenType.Assignment)

{

var y = ParseAssignment(tokens.NextNonIgnorable());

return new AssignmentExpression(x, y);

}

else if (mode == TokenType.Lambda)

{

var parameters = GetParameters(x);

return ParseFunction(parameters, tokens.NextNonIgnorable());

}

return x;

}

private IExpression ParseRange(IEnumerator<IToken> tokens)

{

var x = ParseConditional(tokens);

if (tokens.Current.Type == TokenType.Colon)

{

var z = ParseConditional(tokens.NextNonIgnorable());

var y = z;

if (tokens.Current.Type == TokenType.Colon)

{

z = ParseConditional(tokens.NextNonIgnorable());

}

else

{

y = new EmptyExpression(z.Start);

}

return new RangeExpression(x, y, z);

}

return x;

}

private IExpression ParseConditional(IEnumerator<IToken> tokens)

{

var x = ParsePipe(tokens);

if (tokens.Current.Type == TokenType.Condition)

{

var y = ParseConditional(tokens.NextNonIgnorable());

var z = default(IExpression);

if (tokens.Current.Type == TokenType.Colon)

{

z = ParseConditional(tokens.NextNonIgnorable());

}

else

{

z = ParseInvalid(ErrorCode.BranchMissing, tokens);

}

return new ConditionalExpression(x, y, z);

}

return x;

}

private IExpression ParsePipe(IEnumerator<IToken> tokens)

{

var x = ParseOr(tokens);

while (tokens.Current.Type == TokenType.Pipe)

{

if (CheckAssigned(tokens))

{

var y = ParseAssignment(tokens.NextNonIgnorable());

var z = new BinaryExpression.Pipe(x, y);

return new AssignmentExpression(x, z);

}

else

{

var y = ParseOr(tokens);

x = new BinaryExpression.Pipe(x, y);

}

}

return x;

}

private IExpression ParseOr(IEnumerator<IToken> tokens)

{

var x = ParseAnd(tokens);

while (tokens.Current.Type == TokenType.Or)

{

var y = ParseAnd(tokens.NextNonIgnorable());

x = new BinaryExpression.Or(x, y);

}

return x;

}

private IExpression ParseAnd(IEnumerator<IToken> tokens)

{

var x = ParseEquality(tokens);

while (tokens.Current.Type == TokenType.And)

{

var y = ParseEquality(tokens.NextNonIgnorable());

x = new BinaryExpression.And(x, y);

}

return x;

}

private IExpression ParseEquality(IEnumerator<IToken> tokens)

{

var x = ParseRelational(tokens);

while (tokens.Current.IsEither(TokenType.Equal, TokenType.NotEqual))

{

var mode = tokens.Current.Type;

var y = ParseRelational(tokens.NextNonIgnorable());

x = ExpressionCreators.Binary[mode].Invoke(x, y);

}

return x;

}

private IExpression ParseRelational(IEnumerator<IToken> tokens)

{

var x = ParseAdditive(tokens);

while (tokens.Current.IsOneOf(TokenType.Greater, TokenType.GreaterEqual, TokenType.LessEqual, TokenType.Less))

{

var mode = tokens.Current.Type;

var y = ParseAdditive(tokens.NextNonIgnorable());

x = ExpressionCreators.Binary[mode].Invoke(x, y);

}

return x;

}

private IExpression ParseAdditive(IEnumerator<IToken> tokens)

{

var x = ParseMultiplicative(tokens);

while (tokens.Current.IsEither(TokenType.Add, TokenType.Subtract))

{

var mode = tokens.Current.Type;

if (CheckAssigned(tokens))

{

var y = ParseAssignment(tokens.NextNonIgnorable());

var z = ExpressionCreators.Binary[mode].Invoke(x, y);

return new AssignmentExpression(x, z);

}

else

{

var y = ParseMultiplicative(tokens);

x = ExpressionCreators.Binary[mode].Invoke(x, y);

}

}

return x;

}

private IExpression ParseMultiplicative(IEnumerator<IToken> tokens)

{

var x = ParsePower(tokens);

while (tokens.Current.IsOneOf(TokenType.Multiply, TokenType.Modulo, TokenType.LeftDivide, TokenType.RightDivide,

TokenType.Number, TokenType.Identifier, TokenType.Keyword, TokenType.OpenList))

{

var current = tokens.Current;

var implicitMultiply = !current.IsOneOf(TokenType.Multiply, TokenType.Modulo, TokenType.LeftDivide, TokenType.RightDivide);

var mode = implicitMultiply ? TokenType.Multiply : current.Type;

if (!implicitMultiply && CheckAssigned(tokens))

{

var y = ParseAssignment(tokens.NextNonIgnorable());

var z = ExpressionCreators.Binary[mode].Invoke(x, y);

return new AssignmentExpression(x, z);

}

else

{

var y = ParsePower(tokens);

x = ExpressionCreators.Binary[mode].Invoke(x, y);

}

}

return x;

}

private IExpression ParsePower(IEnumerator<IToken> tokens)

{

var atom = ParseUnary(tokens);

if (tokens.Current.Type == TokenType.Power)

{

var expressions = new Stack<IExpression>();

if (!CheckAssigned(tokens))

{

expressions.Push(atom);

do

{

var x = ParseUnary(tokens);

expressions.Push(x);

}

while (tokens.Current.Type == TokenType.Power && tokens.NextNonIgnorable() != null);

do

{

var right = expressions.Pop();

var left = expressions.Pop();

var x = new BinaryExpression.Power(left, right);

expressions.Push(x);

}

while (expressions.Count > 1);

atom = expressions.Pop();

}

else

{

var rest = ParseAssignment(tokens.NextNonIgnorable());

var rhs = new BinaryExpression.Power(atom, rest);

return new AssignmentExpression(atom, rhs);

}

}

return atom;

}

private IExpression ParseUnary(IEnumerator<IToken> tokens)

{

var current = tokens.Current;

var creator = default(Func<TextPosition, IExpression, PreUnaryExpression>);

if (ExpressionCreators.PreUnary.TryGetValue(current.Type, out creator))

{

var expr = ParseUnary(tokens.NextNonIgnorable());

return creator.Invoke(current.Start, expr);

}

return ParsePrimary(tokens);

}

private IExpression ParsePrimary(IEnumerator<IToken> tokens)

{

var left = ParseAtomic(tokens);

do

{

var current = tokens.Current;

var mode = current.Type;

var creator = default(Func<IExpression, TextPosition, PostUnaryExpression>);

if (ExpressionCreators.PostUnary.TryGetValue(mode, out creator))

{

tokens.NextNonIgnorable();

left = creator.Invoke(left, current.End);

}

else if (mode == TokenType.Dot)

{

var identifier = ParseIdentifier(tokens.NextNonIgnorable());

left = new MemberExpression(left, identifier);

}

else if (mode == TokenType.OpenGroup)

{

var arguments = ParseArguments(tokens);

left = new CallExpression(left, arguments);

}

else

{

return left;

}

}

while (true);

}

private IExpression ParseAtomic(IEnumerator<IToken> tokens)

{

switch (tokens.Current.Type)

{

case TokenType.OpenList:

return ParseMatrix(tokens);

case TokenType.OpenGroup:

return ParseArguments(tokens);

case TokenType.Keyword:

return ParseKeywordConstant(tokens);

case TokenType.Identifier:

return ParseVariable(tokens);

case TokenType.Number:

return ParseNumber(tokens);

case TokenType.InterpolatedString:

return ParseInterpolated(tokens);

case TokenType.String:

return ParseString(tokens);

case TokenType.SemiColon:

case TokenType.End:

return new EmptyExpression(tokens.Current.Start);

}

return ParseInvalid(ErrorCode.InvalidSymbol, tokens);

}

private static InvalidExpression ParseInvalid(ErrorCode code, IEnumerator<IToken> tokens)

{

var expr = new InvalidExpression(code, tokens.Current);

tokens.NextNonIgnorable();

return expr;

}

}

We see that the parser always descends down up to the highest-order token. The operators are also taken in an reverse manner, with the least sticky ones being reached first.

Tree Walkers

The visitor pattern is the single most powerful pattern for walking through an AST. It allows us to abstract away the type checking redundancy and to normalize the expected behavior.

The visitor pattern consists of three parts:

- A method declared in an interface to accept visitors

- A walker implementing the visitor interface with methods for all types to visit

- An implementation of the acceptance interface in all types to visit

The visitor interface looks like the following code. Essentially, we need to provide an overload for any type that may be visited.

public interface ITreeWalker

{

void Visit(VarStatement statement);

void Visit(BlockStatement statement);

void Visit(EmptyExpression expression);

void Visit(ConstantExpression expression);

}

The acceptance interface is much simpler. Essentially, it boils down to:

public interface IWalkable

{

void Accept(ITreeWalker visitor);

}

The third part was the implementation of the acceptance interface, e.g., IWalkable, in all types mentioned in the visitor interface.

This looks as trivial as the following code:

public void Accept(ITreeWalker visitor)

{

visitor.Visit(this);

}

Even if the specific type would know the types of its children (usually it does not), it would make sense to let the walker decide if (and when!) to descend. For instance, when the walker sees a BlockStatement it may do the following:

public void Visit(BlockStatement block)

{

foreach (var statement in block.Statements)

{

statement.Accept(this);

}

}

This way, once we are accepted as being a visitor to a node, we may also want to be accepted as a visitor to the sub-nodes. However, this request has to come from the walker. The visited nodes only know which method to "let-in", not how to "guide through". One may say that the visitor pattern helps us to avoid unnecessary casting, which may lead to bugs in the code and a complexity that is unbearable for anyone interested in doing something with the AST.

In order to avoid repetitive statements an abstract base class called BaseTreeWalker has been created, which implements the ITreeWalker interface and makes all methods virtual. They all come with a basic implementation that has one goal: Walk through the AST.

Let's say we want to create a custom tree walker to find any kind of function calls in the code. The corresponding class could look as simple as:

class FunctionCallTreeWalker : BaseTreeWalker

{

private readonly List<CallExpression> _calls;

public FunctionCallTreeWalker()

{

_calls = new List<CallExpression>();

}

public List<CallExpression> AllCalls

{

get { return _calls; }

}

public override void Visit(CallExpression expression)

{

_calls.Add(expression);

base.Visit(expression);

}

}

Everything we have to do is to intercept the CallExpression visits. We still use the base implementation to gather any function calls within the arguments and / or the function itself (how about f()(2, 3) or g(2)(3)(5)()?).

The idea of using so-called tree walkers to collect information stored within the AST is also used for closely related topics, e.g., validation. Every node (i.e., an element of the tree) knows how to validate itself, however, it does not know how to validate the whole tree. Therefore we use a tree walker to visit each node and trigger the self-validation. Walking the tree and validation are therefore used in conjunction to perform the full validation, however, they are disjoint and do not know or require each other.

The tree walker is also used to generate the operations used within the virtual machine.

Virtual Machine

MAGES comes with a small high-level virtual machine (VM). Currently, the following set of instructions is used:

ArgOperation (loads one of the arguments)ArgsOperation (sets the function-scope args variable)CondOperation (evaluates the condition a ? b : c)ConstOperation (loads a constant)DecOperation (decrement the variable)DefOperation (defines a local variable)GetcOperation (calls a getter function)GetpOperation (loads an object property)GetsOperation (loads a local variable)IncOperation (increments the variable)InitMatOperation (initializes a matrix value)InitObjOperation (initializes an object value)JumpOperation (jumps to the given operation index)NewFuncOperation (creates a new function)NewMatOperation (creates a new matrix)NewObjOperation (creates a new object)PopOperation (skips a single operation)PopIfOperation (skips a single operation if true)RngeOperation (creates a new range with explicit stepping)RngiOperation (creates a new range with implicit stepping)RetOperation (returns from the current block)SetcOperation (calls a setter function)SetpOperation (stores an object property)SetsOperation (stores a local variable)

The instructions are then used to cover certain functionality. For instance, calling a function resolves to:

- Place the operations to evaluate the expression of all arguments. (in reverse order)

- Place the operation to load the function to call.

- Place either the

GetcOperation or the SetcOperation to invoke the function.

During execution of the VM the last operation will perform the following steps:

- Create a new

object[] to store all arguments. - Pop a value from the stack to get the function to call.

- Pop values from the stack to fill the

object[]. - Call the function with the given arguments.

This is why the VM operations are called high-level. They do not resolve to the tiniest possible commands, but rather use more advanced .NET commands / multiple intermediate language (IL) instructions.

Looking at the generated instructions, e.g., for a simple addition reveals:

SWM> il("2+3")

const 2

const 3

const [Function]

getc 2

We place the constants (2 and 3) on the stack, as well as the add function. As there is no specific add instruction the operation walker placed the used function on the stack via constant operation. Finally we have the call instruction using two arguments, i.e., three values will be taken from the stack.

For a new matrix the instruction deal mostly with the initialization.

SWM> il("[1,2;3,4;5,6]")

newmat 3 2

const 1

initmat 0 0

const 2

initmat 0 1

const 3

initmat 1 0

const 4

initmat 1 1

const 5

initmat 2 0

const 6

initmat 2 1

Of course, it would be simpler to place the whole matrix on the stack via a constant, however, this is only possible in special cases (such as the one presented above). Usually, a matrix is not full with constants. In the future an optimization may be introduced to only initialize values that are not constants.

What we can see in the code above is that the 3x2 matrix is created with the arguments 3 and 2. Furthermore, for each value at least two instructions are inserted. One to place the constant on the stack and another one to assign the value to the right cell. The latter requires two arguments denoting the 0-based row and column indices.

For a new function the instructions look a bit more complicated. However, we'll see that the essence is still straightforward.

SWM> il("(x, y, z) => x + y + z^2")

newfunc start

arg 0 x

arg 1 y

arg 2 z

args args

gets x

gets y

const [Function]

getc 2

gets z

const 2

const [Function]

getc 2

const [Function]

getc 2

newfunc end

The important part is that a function consists of operations and has therefore somehow to contain these operations. As we deal with a linear chain this is done in the shown manner, i.e., the newfunc operation actually contains these operations. Most instructions shown here belong to the internal part of the function.

Let's confirm that the whole tail starting at gets x belongs to the body:

SWM> il("x + y + z^2")

gets x

gets y

const [Function]

getc 2

gets z

const 2

const [Function]

getc 2

const [Function]

getc 2

So the only instructions actually needed for a new function are the argument initialization, which may be reduced to only the special args argument. The repetition here means that the variable arguments should be accessible via a variable called args.

API and Extensibility

The core classes and functionality lives in the Mages.Core namespace. A very simple console "Hello World!" application may thus look as follows:

using Mages.Core;

using System;

static class Program

{

static void Main(String[] args)

{

var engine = new Engine();

var result = engine.Interpret("21 * 2");

Console.WriteLine("The answer to everything is {0}!", result);

}

}

Of course from this point on MAGES is already nearly a REPL (Read-Evaluate-Print-Loop):

var engine = new Engine();

while (true)

{

Console.Write("Query? ");

var input = Console.ReadLine();

var result = engine.Interpret(input);

Console.WriteLine("Answer: {0}", result);

}

At this point the user is free to start interacting with the MAGES Engine instance. This being said it makes sense now to see how we can interact with the engine in our applications.

Type System

MAGES does not come with its own data types. Instead, it uses existing .NET data types to decrease layers and increase performance. As a positive side-effect the performance is improved with less GC pressure. Also the interaction feels more natural.

var engine = new Engine();

engine.Scope["seven"] = 7.0;

var result = engine.Interpret("seven / 4");

Console.WriteLine(typeof(result).FullName);

The (global) scope is only a .NET dictionary, which allows us to get and set global variables. In the former example seven was a name we introduced. Similarly, results may be stored in this dictionary.

var engine = new Engine();

engine.Interpret("IsSevenPrime = isprime(7)");

Console.WriteLine(engine.Scope["IsSevenPrime"]);

MAGES tries to narrow every .NET data type to one of its data types:

- Number (

System.Double) - Boolean (

System.Boolean) - String (

System.String) - Matrix (

System.Double[,]) - Object (

System.Collections.Generic.IDictionary<System.String, System.Object>) - Function (

Mages.Core.Function, essentially a Delegate mapping Object[] to Object) - Undefined (

null)

Most types will be simply wrapped in a wrapper object that implements the IDictionary<String, Object>. One thing we can easily do is to create new functions in MAGES and use them in .NET applications:

var engine = new Engine();

var euler = engine.Interpret("n => isprime(n^2 + n + 41)");

var testWith1 = (Boolean)euler.Invoke(new Object[] { 1.0 });

var testWith7 = (Boolean)euler.Invoke(new Object[] { 7.0 });

The objects that are given to the function defined in MAGES need to be supplied in MAGES compatible types. So the call wouldn't work with integers:

var isNull = euler.Invoke(new Object[] { 1 });

To circumvent such issues there is much better alternative: Using the Call extension method. This allows us to do the following:

var testWith1 = euler.Call<Boolean>(1);

var testWith7 = euler.Call<Boolean>(7);

There is also an overload without specifying the return type (resulting in an Object instance being returned). The call above returns the default value if the type has not been matched.

The reasoning for including the narrowing in Call instead of the usual Invoke is to allow MAGES internally to directly call the function without the otherwise introduced narrowing overhead.

Overall, the following table reflects the MAGES type system by showing the essential type mapping.

| MAGES | .NET (direct) | .NET (cast) |

| Number | Double | Single |

| | | Decimal |

| | | Byte |

| | | UInt16 |

| | | UInt32 |

| | | UInt64 |

| | | Int16 |

| | | Int32 |

| | | Int64 |

| Boolean | Boolean | |

| String | String | Char |

| Matrix | Double[,] | Double[] |

| | | List |

| Function | Function | Delegate |

| Object | IDictionary | Object |

| Nothing | null | |

Furthermore, within MAGES two interesting functions exist: is and as. Both take a type name (e.g., "Number") and a value as arguments. However, while the former returns a boolean value indicating if the value is of the given type, the latter returns the converted value or undefined.

Exposing an API

Below (at the very bottom) of any scope is another layer that cannot be manipulated by user input: The API space. This layer is used to hold functions, e.g., sin or cos without being in danger of disappearing forever due to scope manipulation from the user.

The API space is accessible via the Globals property of an Engine instance. Like the scope the API layer is instance bound, i.e., two different engine instances can look different here.

var engine = new Engine();

engine.Globals["three"] = 3.0;

At first sight interaction in MAGES looks very similar compared to the interaction with the scope. However, the difference lies in the users disability to overwrite functions in here. The suggestion is to use the scope for observing changes / variables done by the user and the globals for define the API to work with.

As the API will mostly consist of functions (and not of constants), helpers to introduce functions are an important part of MAGES.

var engine = new Engine();

var function = new Function(args => (Double)args.Length);

engine.SetFunction("argsCount", function);

var result = engine.Interpret("argsCount(1, true, [])");

If we use Function directly we are responsible to care about the types being used. We are sure that only MAGES compatible types are entering, however, at the same time we need to make sure to return only MAGES compatible objects.

Potentially, it is better to just use any kind of delegate and pass it in. For instance, the following works as expected.

var engine = new Engine();

var function = new Func<Double, String, Boolean>((n, str) => n == str.Length);

engine.SetFunction("checkLength", function.Method, function.Target);

var result = engine.Interpret("checkLength(2, \"hi\")");

Now, in the former example all used types are MAGES compatible, however, we can even use this with (kind of) arbitrary types:

var engine = new Engine();

var func = new Func<Int32, String, Char>((n, str) => str[n]);

engine.SetFunction("getCharAt", func.Method, func.Target);

var result = engine.Interpret("getCharAt(2, \"hallo\")");

In this example Double (the MAGES compatible type) gets automatically converted to an integer. The result type (a Char) is automatically converted to a String as well.

Similar to functions general objects can be exposed as well. Here MAGES offers the capability of denoting so-called constants, which may be shadowed by the user, but will actually never be overwritten from the user.

var engine = new Engine();

var constant = Math.Sqrt(2.0);

engine.SetConstant("sqrt2", constant);

var result = engine.Interpret("sqrt2^2");

The described way is the preferred alternative to accessing the Globals object directly. The main problem with the Globals object has been indicated previously. Here no safety net is enabled to prevent MAGES incompatible objects from entering the system. Therefore, it is highly recommended to use the wrappers SetFunction and SetConstant to provide functions and constants.

What if a constant is not good enough? What if users should be able to create multiple instances? Here a constructor function is the right answer. In the following example the StringBuilder class from .NET is exposed to MAGES via a constructor function.

var engine = new Engine();

var function = new Func<StringBuilder>(() => new StringBuilder());

engine.SetFunction("createStringBuilder", function);

var result = engine.Interpret("createStringBuilder().append(\"Hello\").append(\" \").appendLine(\"World!\").toString()");

In general such constructor functions are essential combined with features of MAGES such as the automatic wrapping of arbitrary objects. There is, however, an even better way to provide such constructor functions.

Interaction

MAGES makes it easy to expose existing .NET types via the SetStatic extension method. Let's start with a simple example:

var engine = new Engine();

engine.SetStatic<System.Text.StringBuilder>().WithDefaultName();

var result = engine.Interpret("StringBuilder.create().append(\"foo\").appendLine(\"bar\").toString()");

Compared with the code above this seems rather straight forward and trivial. So what exactly is happening here? First, we are exposing the .NET type System.Text.StringBuilder with its default name. In contrast to the previously mentioned extension methods the SetStatic does not expose the result directly. Instead, we need to tell MAGES how to expose it. In this case we go for the standard way, which would be by its original name ("StringBuilder"). Two other ways also exist, which will be discussed later.

By convention the constructors are exposed via the create method. From this point on the code is equivalent to the one above. Again the underlying .NET type (a StringBuilder instance) has been exposed. A legit question would be: Why are the names different?

MAGES comes with the ability to expose .NET types in a API coherent manner. Therefore, every field / property / method / ... name is transformed by a centralized service, an implementation of the INameSelector interface. By default the CamelNameSelector is used, however, we could replace it if we want to. This name selector changes all .NET names from PascalCase to camelCase.

So let's expose something else - how about some kind of array?

var engine = new Engine();

engine.SetStatic<System.Collections.Generic.List<System.Object>>().WithName("Array");

var result = engine.Interpret("list = Array.create(); list.add(\"foo\"); list.add(2 + 5); list.insert(1, true); list.at(2)");

This time we've decided to expose the List<Object> type. However, the default name would be impossible to access; if it would be legit at all. Instead, we've decided to give it a custom name - "Array". We can now use the static Array object to create (wrapped) instances of List<Object>. In this case we name the instance list. Finally everything behaves as we've seen before. There is just one new thing here: The at function does not exist as such on the .NET List<Object>.

MAGES exposes .NET indexers via a convention called the at function. This convention, as with the others, can be changed by providing a custom INameSelector implementation.

Finally, we can use the SetStatic extension method to expose whole collections of functions (or other objects). Let's say we want to expose some functions, e.g.,

static class MyMath

{

public static Double Cot(Double x)

{

return Math.Cos(x) / Math.Sin(x);

}

public static Double Arccot(Double x)

{

return Math.Atan(1.0 / x);

}

}

What we could do is (since the class above is static we cannot use it with generics, but fortunately there is an overload that accepts a Type argument)

var engine = new Engine();

engine.SetStatic(typeof(MyMath)).WithName("mm");

var result = engine.Interpret("mm.arccot(mm.cot(pi))");

This, however, has essentially placed all these functions in a kind of "namespace" (as its a runtime object its not exactly a namespace of course, however, from the code's perspective it could be regarded as such). What if we want to expose all these functions directly, i.e., globally? Here the third option comes into play:

var engine = new Engine();

engine.SetStatic(typeof(MyMath)).Scattered();

var result = engine.Interpret("arccot(cot(1))");

Using Scattered the object is decomposed and inserted into the global API layer.

Performance

One of the main concerns with YAMP was its performance. Even though YAMP did show a decent performance, it lacked in some key areas and did not contain much room for improvement. As YAMP's parsing model is buildt upon using reflection, it was both limited and difficult to maintain. MAGES now tries to introduce a much easier model. We've already seen that the architecture was done with the greatest possible separation of concerns in mind. The coupling has been reduced to the necessary minimum level. This should ensure scalability for features implemented somewhere in the future.

Key Aspects

Even though MAGES uses the standard four layers to evaluate some code it is quite fast - even for small expressions. The layers are:

- Source handling (character stream)

- Tokenization (token stream)

- Syntax model (abstract syntax tree)

- Validation and code generation (operation stream)

Once the operations are generated a lot of the overhead is gone. In YAMP the interpretation was performed against the AST. This involved a part of the validation. Now in MAGES everything related to the validation is strictly separated from running the code. Once the code has been generated MAGES is guaranteed to be operational.

Overall we go from a 1D character stream to a 1D token stream to a tree representation to a 1D array of operations. The final linearization is important for the evaluation. It also allows, e.g., to cache inspected code to be used over and over again without spending time on the (useless) successive validation incl. AST generation etc.

The tokenizer has been designed with a max. look-ahead of a single character. This reduces the random access in the character stream and requires only a two characters (previous, current) to be stored. The tokenization works with no look-ahead, i.e., the AST has to be generated without knowing what is about to come. Everything has to be transformable to its desired state. This is mostly straightforward, however, for lambda expressions this condition is problematic.

(x, y) => x + y

() => 5

(x) => x^2

x => x^2

The first three expressions are all given by an arguments expression, a function operator, and a function body expression. The last one is given by a variable expression, a function operator, and a function body expression. Therefore, the function operator has to take anything on the left side, however, this anything is restricted to a special subset of an arguments expression (only taking variable expressions as expressions), as well as a variable expression. All other expressions are considered invalid. This is a check that is partially performed by the parser, partially by the resulting FunctionExpression.

Usually, we would be required to run a full validation run before generating the code. However, in order to save a little bit on performance we do it one sweep - and we stop the code generation once we encounter the first error. On this error we throw an exception. This minimal validation is rather a protection mechanism than a real validation. MAGES also comes with a real validation tree walker, i.e., one that notes any detected error and does not stop at the first problem.

Performance Evaluation

The performance of MAGES was tested almost exclusively in comparison to YAMP. This may sound insufficient, but actually since YAMP was compared against many other solutions we can use the basic triangle inequality to infer the performance against these other solutions.

For running the performance evaluations we've chosen the very solid Benchmark.NET solution. It is a fine open-source library that can be obtained via Nuget. It considers all the necessary stuff such as warmups runs, consecutive benchmarking, and different workloads to get the most reliable number. In the end the median and the standard deviation are printed. The median may seem like a strange choice, however, as we potentially have a flat distribution with a spiking tail we could argue that the middle will most likely represent the behavior more accurately than an average number that is intoxicated by some outliers.

Providing a benchmarking solution is as easy as creating a console application that executes code like the following snippet:

static class Program

{

static void Main(String[] arguments)

{

BenchmarkRunner.Run<TrivialBenchmarks>();

}

}

The class then contains special methods that are marked as being benchmarked. The next snippet shows such a class using methods, which are decorated with the BenchmarkAttribute attribute coming from Benchmark.NET.

public class TrivialBenchmarks

{

private static readonly String AddTwoNumbers = "2 + 3";

private static readonly Parser YampParser = new Parser();

private static readonly Engine MagesEngine = new Engine();

[Benchmark]

public Double Yamp_AddTwoNumbers()

{

return YampNumeric(AddTwoNumbers);

}

[Benchmark]

public Double Mages_AddTwoNumbers()

{

return MagesNumeric(AddTwoNumbers);

}

private static Double YampNumeric(String sourceCode)

{

var result = YampParser.Evaluate(sourceCode);

return ((ScalarValue)result).Value;

}

private static Double MagesNumeric(String sourceCode)

{

var result = MagesEngine.Interpret(sourceCode);

return (Double)result;

}

}

Now let's look at the actual performance difference between MAGES and YAMP.

| Parser | Method | Median | StdDev |

| Mages | AddMultiplyDivideAndPowerNumbers | 5.1666 us | 0.0879 us |

| Yamp | AddMultiplyDivideAndPowerNumbers | 8.4325 us | 0.0220 us |

| Mages | AddTwoNumbers | 1.3742 us | 0.0136 us |

| Yamp | AddTwoNumbers | 1.8482 us | 0.0122 us |

| Mages | CallStandardFunctions | 8.3647 us | 0.0685 us |

| Yamp | CallStandardFunctions | 16.3588 us | 0.1172 us |

| Mages | MultiplyTwoVariables | 4.0711 us | 0.0325 us |

| Yamp | MultiplyTwoVariables | 5.3462 us | 0.0322 us |

| Mages | Transpose4x5Matrix | 15.7278 us | 0.2363 us |

| Yamp | Transpose4x5Matrix | 41.2478 us | 0.2637 us |

The smallest relative difference is in a test that includes multiple statements with variable access. The main time is spent on variable resolution, i.e., there is not much to distinguish between the two. Variable access works similar in MAGES. There is no upfront resolution, even though this would increase the performance. The reasoning behind this design choice is in the dynamic freedom. This way one can use caching without fixing the global scope.

The largest difference can be seen in the transpose operation. Even though filling the matrix requires a lot of operations in MAGES, the way that matrices are handled is much faster than it was formerly in YAMP. This direct way is also reflected in method handling. This explains why MAGES outperforms YAMP easily when calling a lot of standard functions.

A novel feature of MAGES is the capability to "compile" interpretation-ready code. This way, the whole process of validating and transforming source code to operations is omitted. The speedup is immense.

The following benchmark considers a simple query of the form

sin(pi / 4) * cos(pi * 0.25) + exp(2) * log(3)

evaluated with a trivial caching mechanism in form of a Dictionary<String, Func<Object>>. We compare MAGES against itself without the caching, as we know that MAGES itself already outperforms YAMP by a factor of two.

| Cached? | Method | Median | StdDev |

| Yes | CallStandardFunctions | 1.1564 us | 0.0123 us |

| No | CallStandardFunctions | 8.3767 us | 0.0543 us |

Caching may (dependent on the expression) be several binary orders faster than directly evaluating queries. This is crucial for repeating user input that originated from script files. It is possible to evaluate the user input in the beginning (or just after it changes) and use the cached result for faster, more energy-conserving results.

Until now we've seen that MAGES is decently faster than YAMP. But what about the performance for extended capabilities, e.g., custom-defined functions or matrix operations. The following table compares some of these operations.

| Parser | Method | Median | StdDev |

| Mages | CreateAndUseFunction | 17.5819 us | 0.0444 us |

| Yamp | CreateAndUseFunction | 24.5831 us | 0.2574 us |

| Mages | MultiplySmallMatrices | 5.1958 us | 0.0929 us |

| Yamp | MultiplySmallMatrices | 13.4905 us | 0.1122 us |

| Mages | MultiplyMediumMatrices | 265.5289 us | 0.7518 us |

| Yamp | MultiplyMediumMatrices | 17,874.4550 us | 130.2489 us |

| Mages | MultiplyLargeMatrices | 4,753.0534 us | 25.9203 us |

| Yamp | MultiplyLargeMatrices | 298,255.1038 us | 1,298.1635 us |

| Mages | ObjectAccess | 8.8594 us | 0.0871 us |

| Yamp | ObjectAccess | 18.7118 us | 0.0976 us |

As we can see MAGES does a great job beating YAMP in every scenario. Especially for matrices MAGES brings a huge performance gain to the table. In the benchmark above even with multiplying two "large" matrices MAGES outperforms the performance of YAMP when multiplying medium matrices. Already for small matrices we see a nice gain by using MAGES.

Another area of improvement is objects. Initially, YAMP did not know about objects, however, with one of the more recent versions support for arbitrary objects has been added. Now we see that MAGES, which comes with object literals and extended support from the beginning, is able to outperform YAMP already in simple scenarios easily.

How about the performance in comparison to other math expression parsers? Of course, MAGES is more than just a parser for mathematical expressions, however, for most users it will be the standard use case. Here, we can get a list of other excellent libraries. Besides YAMP, this list includes:

- dotMath

- Jace

- Arpain

- GuiLabs MathParser

- MuParser

- S#

- MxParser

The task for all of them was to evaluate the given expression:

2^3 * (2 + 3 * sin(1) / 0.3 - sqrt(5))

The following table shows how they are performing.

| Parser | Median | StdDev |

| Jace (Default) | 1.1588 | 0.2792 |

| MAGES (Cached) | 1.3827 | 0.0499 |

| dotMath | 8.9858 | 0.1715 |

| MAGES (Default) | 11.1582 | 0.6506 |

| Jace (Uncached) | 12.7334 | 1.7223 |

| Arpain | 15.1224 | 1.8141 |

| GuiLabs | 16.4246 | 3.0767 |

| YAMP | 30.9068 | 0.5521 |

| muParser | 68.0219 | 15.413 |

| S# | 246.5942 | 32.9897 |

| MxParser | 11,543.41 | 216.0238 |

We can see that MAGES is definitely among the fastest math expression evaluators. While Jace is purely focused on mathematical queries, MAGES goes beyond that area. If we compare MAGES to S#, which has a similar set of goals, we see that here we have a vast performance gap.

One of the differences between Jace and MAGES is that Jace comes with expression caching out of the box. In contrast, MAGES does not hide this layer from the user. Having a cache also comes with some responsibility - otherwise, it is far too easy to create a memory leak. MAGES does not want to take this responsibility away from the user. Instead, MAGES offers everything to make it easy for the user to integrate it with a cache.

FAQ

At this point some questions my already be left unanswered. Hopefully, the following list of hypothetical questions and answers may be helpful.

Why another interpreter?

Why not? The idea here was to have something lightweight, yet extensible and powerful. It should also be able to run on a large variety of platforms.

Why is the REPL separated from the library?

This design makes it easy to include MAGES in any application. I think its a far more flexible design to provide the core functionality in a library, which can then be used in whatever kind of application (web, console, or GUI).

Why are some functions only available in the REPL?

Most functions should be available in form of so-called plugins (i.e., usable beyond the REPL), however, a small subset (e.g., spawn) of functions are regarded experimental and not outsourced to a plugin.

Why is it so much faster than YAMP?

The primary reason is the directness of computation - omitting unnecessary abstractions and copying. Overall, this all has been achieved by a better design.

What are the future plans?

MAGES was never thought as a "big thing". It does not reinvent the wheel and it tries to minimize the cognitive load required to write code. Potentially, an (optional) optimizer will be included in the pipeline. This would simplify constant expressions during code creation thus potentially reducing instructions during runtime.

What can I do when I face a bug?

Report it in the official GitHub repository. Just make sure you come up with a minimal working example to show the bug.

How can I help?

A first step would be to star the official GitHub repository. If you can spend more time then any code contribution (could be as simple as more unit tests, but could also be as elaborate as adding another official plugin or language feature) is highly appreciated.

Using the code

Let's say we want to integrate MAGES in an existing (or fresh) application. The first step we should make is to open the Nuget package manager dialog. There we find MAGES and add it to the current project. The following screenshot illustrates this.

Then we may do something as simple as the following. We expose the API-relevant classes in our application to MAGES. If our design is already quite good there is nothing to do. Otherwise, we bring the functionality to expose into a dedicated class.

Now we look at a sample Windows Forms application where we did exactly this. We change the Form1.cs file to look as follows:

public partial class Form1 : Form

{

private readonly ListsApi _api;

private readonly Engine _mages;

public Form1()

{

InitializeComponent();

_api = new ListsApi(listSource, listTarget);

_mages = new Engine();

_mages.SetConstant("api", _api);

}

}

The following screenshot shows the sample application. It essentially consists of two lists, which are connected via two buttons.

Previously, the two buttons may have contained the logic to handle. Now, we alter the design to work against an API layer. This is a best practice anyway. Hence the event handlers now look as follows:

private void btPush_Click(Object sender, EventArgs e)

{

_api.Push();

}

private void btPull_Click(Object sender, EventArgs e)

{

_api.Pull();

}

The API class looks as simple as given here:

class ListsApi

{

private readonly ListBox _source;

private readonly ListBox _target;

public ListsApi(ListBox source, ListBox target)

{

_source = source;

_target = target;

}

public Boolean CanPush

{

get { return _source.SelectedItem != null; }

}

public Boolean CanPull

{

get { return _target.SelectedItem != null; }

}

public void SelectSource(String name)

{

Select(_source, name);

}

public void SelectTarget(String name)

{

Select(_target, name);

}

public void Push()

{

Transfer(_source, _target);

}

public void Pull()

{

Transfer(_target, _source);

}

private static void Transfer(ListBox source, ListBox target)

{

var item = source.SelectedItem;

if (item != null)

{

source.Items.Remove(item);

target.Items.Add(item);

}

}

private static void Select(ListBox list, String name)

{

foreach (var item in list.Items)

{

if ((String)item == name)

{

list.SelectedItem = item;

break;

}

}

}

}

Essentially, this class only cares about moving a selected element from the source to the target or vice-versa. However, having this API centered approach allows us to expose the functionality quickly to MAGES.

Finally, this also allows us to enter the following snippet in the console textbox added to the main form. If we currently have the element "e" on the left side and the element "d" on the right side, both will swap.

api.selectSource("e");

api.push();

api.selectTarget("d");

api.pull()

Of course we could have also exposed the API without the additional api namespace. However, in this case it makes it easy to see where MAGES is accessing the API exposed by us. The concluding semicolon is optional and can therefore be omitted in the source shown above.

Conclusion

MAGES is a nice tool to introduce quick scripting in any kind of .NET application. It is highly performing, extensible, and works seamlessly with .NET. By embracing the .NET type system any kind of API exposure is done in no time. The interaction between the MAGES language and our custom code feels natural.

History

- v1.0.0 | Initial Release | 26.06.2016

- v1.1.0 | Added Table Of Contents | 27.06.2016

- v1.1.1 | Text Fixes and Improvements | 28.06.2016

- v1.1.2 | Corrected Link to the Sample Project | 29.06.2016

- v1.2.0 | Added FAQ | 30.06.2016

- v1.2.1 | Fixed Some Typos | 02.07.2016

- v1.3.0 | Added Chocolatey Link and Performance Chart | 06.07.2016

- v1.3.1 | Fixed Some Typos | 10.07.2016