Introduction

Most non-trivial applications will use a database to store data. This database can be relational or not, but some data store will be needed.

Most modern database management systems (DBMS) can do much more than just storing data, and as we know: with great power comes great responsibility.

What Should a DBMS Do?

A DBMS has 3 main responsibilities:

- Store correct data, preferably in a durable way (but not always, check out in-memory OLTP). So simple data validation is part of this responsibility.

- Retrieve data correctly and fast.

- Security. Make sure that data can only be modified or retrieved by the right people. This is not the scope of this post.

A DBMS has many possibilities to fulfill these 2 conditions. In this post, I will discuss mostly a general DBMS, using SQL Server as an example. So Oracle fans, please don’t shoot me.

Storing Data

Data is stored in tables. That could conclude this chapter, but let’s see what happens when we insert / update / delete a record in SQL Server. I simplified things, and I probably forgot some actions left and right. Feel free to let me know in the comments section!

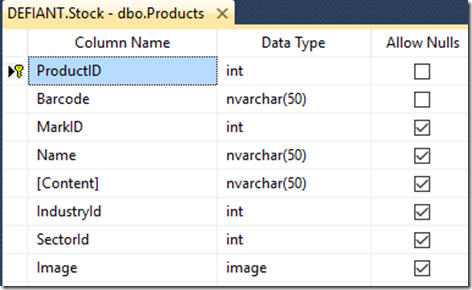

Let’s use this table as an initial example:

Inserting a Record

When a record is inserted in the database, multiple validations are performed:

- Check if all the mandatory fields are filled. In the example, these are all the fields where “Allow Nulls” is not checked.

- Check if all the fields are in a correct format. It will not be possible to store ‘

ABC’ in the MarkID field, because its data type is int. - Check the conditions between fields. Maybe you’re storing a period with a from- and a to-date. Probably you’ll want the from-date to be before the to-date. This can be enforced using check constraints.

- Check unique constraints. A primary key has to be unique, and this must be validated. In the example, the

ProductID is the primary key (as you can see by the key symbol next to it). Other unique constraints need to be validated. You can for example make sure that the first name, last name are unique by creating a unique index over these 2 fields. - Check referential integrity. We saw in last week’s post how to normalize a database, which will create related tables in the database. The relationships (foreign keys) must be checked as well. Creating these relationships will enforce this, and tools (like Entity Framework designer) can use this information to automatically create relations in the model.

- Set fields with default values. Typically, these are fields like:

- ID, which can be an identity field in SQL Server, or come from a sequence in Oracle (and SQL Server too in the latest versions).

- A GUID (unique identifier) that can be generated as a default value.

- A data field (example:

CreationDate) which can be set to the current date (or timestamp) - Specific defaults that have been created for this table.

When all this is done, the row will be inserted. This means that the log file is updated, and that the row is added in the right memory page. When a checkpoint occurs, the page will be written to disk.

After the row is written, the insert triggers are fired (if any). Insert triggers can also perform validations, and rollback the transaction. This is AFTER the row has been written, so it is not the most efficient way of validating data. The trigger execution is part of the same transaction, so if the record is invalid we can rollback in the trigger. This means that the record has been written in de log file already, and this action is now undone.

Usually, the need for triggers indicates some data denormalization. This can be done for performance reasons, or to perform more exotic validations.

Updating a Record

Most of the actions for an insert will be performed for an update as well. Default values will not be set, this only occurs when a record is inserted. Update triggers can be used to set fields like [LastUpdated] for auditing purposes. Referential integrity is verified, and can be handled in several ways.

In the update rule, we see 4 possible actions:

- No Action – When the field is updated and referential integrity is broken, an exception will be thrown and the update will not be performed.

- Cascade – The value of this field will set the value of the foreign key in all the detail records as well.

- Set Null – All the detail records’ foreign key will be set to

null. - Set Default – You can guess once

After the update the update triggers are executed, with the same undo logic.

Deleting a Record

The data page for the row is retrieved and the row is marked as deleted. Referential integrity is checked, and can be handled in the same ways as when updating a record. When the row is deleted, the delete triggers are executed.

Retrieving Data

It is important to retrieve data as fast as possible. Users don’t want to wait for minutes for a web request to return. And often, when a request is slow, it is due to the database. Of course, there can be many other causes, but the database is usually the first thing to look at. Retrieving the data is the simplest operation, but it is very critical because when inserting / updating / deleting records, the DBMS must also retrieve the correct record(s) to work with.

Indexes are very important at this stage, because without indexes, the only mechanism to retrieve data is a full table scan. This will soon become a problem for performance. Indexes are not the scope of this article.

In a well-normalized database, queries can quickly become complex. Most development tools (like Visual Studio, SQL Server Management Studio, …) have query builders that take away the heavy typing. These tools work best when you have also created all the relationships between your tables.

Relationships

SQL Server has a nice designer to maintain your relationships, the Database Diagram Designer. It allows you to create relationships in a graphical way.

When you create relationships, you do yourself some favors:

- The database is automatically documented. When you see the database diagram, you understand immediately how tables are related. In our little example, we see that a

SalesOrderHeader can have many SalesOrderDetails, and must be linked to one customer. - The DBMS can now enforce relational integrity. A

SalesOrderDetail must be linked to a SalesOrderHeader.

What Should the Application Do?

Data Validation (Again)

Now that the database is set up, the application can focus on using the data. We are sure that most data can only be entered in a correct way in the database. This doesn’t mean that no verification must be done at the application level, but if we fail to verify, the DBMS will make sure that no invalid data can be entered. This also applies when data is modified using other tools than the application (for example, by using SQL Server Management Studio or linking tables in Access or Excel and directly modify the data).

Some good reasons to still perform data validation at the application level are:

- Users want to have immediate feedback about the data they enter. It is a pity if a user enters all their data, only to find out that at the last step (saving into the database), there are some things incorrect and they have to start again.

- Referential integrity means that we store the (often meaningless) key to the related table. If you would for example use GUIDs for your primary keys, then users would be required to know – remember – type these GUIDs in the user interface. No user will do this. Combo boxes and other mechanisms are more user friendly. Many tools will generate this automatically if the relations are properly put in the database.

- We preferably enter the data correctly in the database. the DBMS will verify your data, but rejecting the data means that a lot of time is wasted. If you’re alone on the database, this isn’t a problem, but on a loaded system with thousands of users, this will impact the performance for everybody. It is better than to verify the data on the user side as much as possible.

- Not all verifications can be done on the user side. Unique constraints are typically only checked at database level (and therefore require indexes to be created).

CRUD

Entities must be stored in the database. Depending on how your application is set up, entities can correspond to single tables, or be stored in multiple tables. This can be done by simple insert statements (or by your O/RM, which will do the same in the end).

How About Stored Procedures for CRUD?

This used to be a no-brainer. 10 years ago, we would create a procedure for each insert / update / delete, and one or more procedures to read data. Advantages of this approach are:

- Consistency. Every modification in the database is done via a procedure.

- Data validation. More advanced evaluations can be done in the procedure, before issuing the actual DML statement.

- Automatic auditing / logging. In the same stored procedure, we can insert / update / delete records, and then write some entries in a log table. This is typically done in the same transaction.

- Security. For the 2 previous reasons, we may want to block direct DML access to the tables and only allow modifications (and possibly reads) through stored procedures.

As always, there are also disadvantages:

- Many procedures. If we need at least 4 procedures per table, the number of procedures will grow fast. In a moderately big database, this means hundreds of stored procedures to be written and maintained. For this reason, there are tools available that will generate the CRUD procedures for a table. This doesn’t solve the problem of the many procedures, but it will at least reduce typing!

- Code organization. Some code will be repeated in many procedures. So either we copy / paste or we create a new stored procedure for this common code. In SQL Server, is it not possible to create “internal / private” procedures that can only be called from other procedures and “

public” procedures that can be called from the outside. So everything will rely on good naming conventions and discipline. - Tools. Most tools and frameworks (ADO.NET, EF) are capable of calling stored procedures for update statements. But in the procedures, a lot can be going on, and the tool doesn’t know about all the possible side effects. Cached data can be invalid after calling a stored procedure.

For all these reasons, nowadays we usually choose to have the OR/M generate its own DML (data manipulation language) statements and don’t generate all the stored procedures, unless there is a good reason for it. Usually, we create repositories that will be called whenever an application needs data or wants to modify data. In these repositories, we can add some more validation if needed. Of course, this doesn’t prevent the use of applications to directly enter data in the database!

Business Logic

In many applications, business logic is implemented in stored procedures. This is sometimes referred to being a 2 1/2 tier application. This approach makes it possible to change application logic without recompiling / redeploying the application. Sometimes, it can be faster because stored procedures are executed “close to the data”. But (in no specific order)

- Code organization is a problem again, for exactly the same reasons I have already given before.

- SQL is not the best language for programming business logic. Even though the most basic language constructs (loops, selection, …) are available, it usually is easier (and more maintainable) to write the code in an OO language (or for fans of functional languages in F# or Haskell

). Compiler support will be much better.

). Compiler support will be much better. - Your application code depends closely to the DBMS. If your software becomes popular and must run on different DMBSs, you’re out of luck!

- It is hard to debug SQL stored procedures. Since the latest versions of Visual Studio, it is possible to debug stored procedures, but it still complicates things and it is not as powerful as the C# debugger.

- You need a good SQL developer who knows what he is doing on the database. Don’t forget that the database layer is used by many users. So if a procedure is badly written, it may impact the performance of the DBMS, and hence the performance of every application that depends on it.

- By default, database objects (such as procedures) are not versioned. So if someone modifies a stored procedure and now your application doesn’t work anymore, you’ll have a good time finding what has been changed. Some would describe this as “job security”.

- It doesn’t scale! I kept the best for the last. When you discover that stored procedures are the bottleneck of your application, you can’t just put another server on the side to add some more power.

So even though it is possible to use stored procedures to implement business logic, there are some good reasons not to go that way!

So When are Stored Procedures OK Then?

Simple: when you implement functionality that has to do with your database, and which doesn’t implement business logic. For example, just copying client data may qualify.

Having said that, this is simple. I can tell you that if you put a couple DBAs together and you throw this “in the group”, you can expect long and hefty discussions.

Conclusion

The database is a very important part of your application. So it is a good idea to not make it your bottleneck. Try to use the DBMS for guaranteeing that data is stored as correct as possible and retrieved efficiently. Stored procedures (and user functions for that matter) are very useful, but must not be used to implement business logic. With modern OR/M frameworks, the use of stored procedures for CRUD operations is not encouraged anymore.