Introduction

Working with QA can often feel adversarial, but it shouldn't be. The developer, customer, marketing, CTO, CEO, accounting department, and so forth are all stakeholders in delivering a functional, aesthetic, secure, safe, easy-to-use, and accurate product. This article can only scratch the surface of what those six terms:

- functional

- aesthetic

- secure

- safe

- easy to use

- accurate

actually mean. Depending on the product being developed, they will be weighted differently as well. Security may be paramount in a server handling REST requests, where aesthetics are irrelevant because there's no customer-facing UI. Safety may be the priority in a piece of medical equipment or an engine cutoff switch on an airplane. Safety may be irrelevant in a mobile game application (unless you want to make sure the person isn't crossing a street or driving while playing the game). So, depending on the application, you may be addressing some or all of the above concerns, and the QA process should provide the right balance of coverage based on how those concerns (and others I've omitted) are weighted.

Ultimately, the QA process is like a mathematical proof - it consists of formal, repeatable processes that validate and verify the application.

When Reading this Article...

A few minor points:

- "Developer" can be:

  - solo developer

  - consultant team

  - in-house team

  - combination of the above

- "Customer" can be:

  - a person / team playing the role of the customer

  - an in-house customer (accounting department, for example)

  - the actual customer (person or business) for which the product is being written

  - anonymous -- you may be creating a product for the masses

- QA people should be everyone:

  - the developer

  - marketing

  - CTO/CEO/COO/etc.

  - the customer (beta testers, etc.)

- COTS - Commercial Off The Shelf

What is QA?

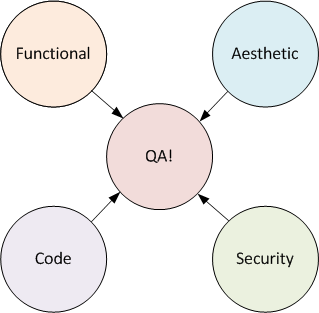

As circular as it may sound, Quality Assurance is the process of testing an application to ensure that it meets a minimum set of requirements, where the requirements establish a baseline of "quality." There are different kinds of QA, but at a minimum, it can be useful to organize them into four categories:

- Functional

- Aesthetic

- Code

- Security

Functional QA

Functional QA is intended to verify that the application meets the behavioral requirements that inspired the development of the product to begin with. Given various inputs and actions, does the application behave in the correct way, by producing the correct outputs and actions? The functional QA is often guided by:

- The developer - this person/team has a unique "white box" perspective and can therefore provide valuable input on how to maximize code path testing to ensure that all behavioral aspects of the program are tested. This includes not just "normal" behaviors, but also error handling and error recovery.

- Customer (or person playing the role of the customer) - the customer wants to know the program does what they need it to do, and that what the program does is accurate, safe, secure, easy to use, etc.

- QA team - Does it meet the requirements? Does it handle problems (network outage, hardware failure, wrong inputs, etc.)? Does it work on various hardware / OS versions? What is the hardware / OS / third party configuration matrix? These are tests that the developer and the customer may not be considering; they evolve out of knowledge of other systems and general experience. They can often be guided by issues that the developer encountered during development, and by issues that the customer is aware of based on their specific domain knowledge.
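The hardware / OS / third party configuration matrix mentioned above can be enumerated mechanically rather than by hand. The following is a minimal sketch in Python; the platform names, the `UNSUPPORTED` set, and the `build_matrix` function are all hypothetical placeholders, not a list taken from any real product.

```python
from itertools import product

# Hypothetical values; substitute the platforms your product actually supports.
OPERATING_SYSTEMS = ["Windows 11", "macOS 14", "Ubuntu 22.04"]
HARDWARE = ["x86_64", "arm64"]
DATABASES = ["PostgreSQL 16", "SQLite 3"]

# (os, hardware) pairs assumed to be unsupported, so QA can skip them.
UNSUPPORTED = {("Windows 11", "arm64")}


def build_matrix():
    """Return every supported (os, hardware, database) combination."""
    return [
        (os_name, hw, db)
        for os_name, hw, db in product(OPERATING_SYSTEMS, HARDWARE, DATABASES)
        if (os_name, hw) not in UNSUPPORTED
    ]
```

Each tuple returned by `build_matrix()` is one row of the matrix that QA can schedule, assign, and mark as pass or fail. Generating the rows from lists makes it harder to forget a combination when a new OS version is added.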

Aesthetic QA

This is an important but often missed step, and its relevance varies depending on the kind of application:

- Customer - Is it intuitive to use? Does it work well with existing processes / workflows? Is it easy to learn?

- Marketing - Does it look good? Does it look better than the competition? Does it sell itself?

- Is the UI consistent in its look and feel (fonts, colors, layout, etc.)?

Nobody wants an application that everyone hates using because it is awkward, creates more work, or is at odds with existing business processes. Marketing wants to make sure that the product meets or exceeds what the competition currently has, and first impressions are often a make-or-break point when the potential customer goes to your website and looks at a screenshot of the application.

Code QA

This is another often missed step. Here, the development team and CTO should be involved:

- Is the code well documented?

- Is the code maintainable?

- Is the application easily extensible?

- Are there exposed back doors?

- Is all sensitive data properly secured / encrypted?

People come and go, and applications must be constantly enhanced as new technologies appear and competitors upgrade their own products. Again, this may be highly relevant to the product you're developing, or barely relevant.

Security QA

The customer and the company need to know:

- Is the data secure?

- Is the application protected from malicious attacks?

- Are the necessary roles implemented?

- Do the roles block functionality that a person with specific permissions should not have access to?

- Do the roles enable functionality that a person with specific permissions should have access to?

These questions (and more) often fall outside of the application's functional QA and are worthy of separate consideration.
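The two role questions above (does a role block what it should, and enable what it should) lend themselves to an exhaustive check of every role/action pair. Here is a minimal sketch, assuming hypothetical roles and actions; `is_allowed` stands in for whatever authorization check the real application exposes, and `EXPECTED` would be written from the requirements document.

```python
# Hypothetical permissions as implemented by the application.
PERMISSIONS = {
    "admin": {"view_report", "edit_record", "delete_record"},
    "clerk": {"view_report", "edit_record"},
    "auditor": {"view_report"},
}

# Hypothetical expected matrix, written independently from the requirements.
EXPECTED = {
    "admin": {"view_report", "edit_record", "delete_record"},
    "clerk": {"view_report", "edit_record"},
    "auditor": {"view_report"},
}

ALL_ACTIONS = {"view_report", "edit_record", "delete_record"}


def is_allowed(role, action):
    """Stand-in for the application's real authorization check."""
    return action in PERMISSIONS.get(role, set())


def check_role_matrix(expected):
    """Check every role/action pair, both allowed and denied.

    Returns a list of (role, action, should_allow, actual) mismatches.
    """
    failures = []
    for role, allowed_actions in expected.items():
        for action in sorted(ALL_ACTIONS):
            should_allow = action in allowed_actions
            actual = is_allowed(role, action)
            if actual != should_allow:
                failures.append((role, action, should_allow, actual))
    return failures
```

An empty list from `check_role_matrix(EXPECTED)` means the implementation agrees with the requirements for every pair. Checking the denied pairs as well as the allowed ones is the point: a role that can do too much is as much a failure as one that can do too little.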

How to go About Doing Quality Assurance

QA is all about inputs and outputs and the behaviors that occur, whether, for example, it's simulating a denial of service attack or verifying that when the user clicks on the Cancel button, the UI returns to the home screen.

Start with Documentation

QA starts with one or (hopefully) more documents:

- Requirements document from customer and marketing (often, marketing will drive additional features)

- Developer's architecture/design documents

- Any internal documents generated during the development process useful for guiding QA process

- Documents from previous QA cycles

- Known bugs (there are always known bugs)

- Known missing features (there are always known missing features)

- Application "arc" - the overall lifecycle of the application:

  - design

  - development

  - maintenance

  - future planned enhancements

  - end of life

- Other existing documentation

Given some or all of these documents, a formal Acceptance Test Plan (ATP) can be created.

Work Together

Creating an ATP is not trivial. It involves working as a team (including the customer if appropriate) in which different stakeholders have different requirements for what ultimately become pass/fail criteria.

As a team, develop specific and formal approaches for testing. This can be complicated, especially when trying to determine quality in code, or creating the necessary test fixtures to simulate security issues, hardware failures, etc. Requirements can conflict -- the user wants a single click to create the wirelist for an entire satellite, but the application requires meticulous, accurate, and verified component, signal, and connector information.

It's an Iterative Process

Ironically, the QA process itself needs to be QA'd. Once in the field, do problems occur that the QA process missed? Why? How can the QA process be improved? Try to anticipate this ahead of time, but realize that not everything can be anticipated ahead of time.

Create More Documentation, Write More Code

Before the QA process formally begins, various pieces must be in place.

Acceptance Test Plan

The ATP is the bible, the rule book, that everyone plays by and agrees to. Everyone contributes to the ATP:

- Developer contributes with their white box knowledge to maximize code coverage testing

- Customer contributes with their domain knowledge

- QA can contribute with their "how we will break it" plan

  - bad inputs

  - unexpected but allowed UI events (black box testing)

  - hardware failures

  - network failures

  - dependency failures (servers, services, etc.)

- Marketing can contribute look and feel requirements, ease of use, help documentation, etc.

- There may be several ATPs:

  - White box ATP for internal state testing

  - Black box ATP for functional / aesthetic testing

  - Code ATP for extensibility / maintainability

  - Customer ATP for requirements testing

Keep it simple but comprehensive. Don't get overwhelmed by the details; decide what level of QA is appropriate for the time, money, and staffing available.

Test Fixtures

The pain point for QA is "how the heck do I test this?" Here:

- The developer and QA coordinate on test fixtures:

  - Test servers

  - Endpoints used for testing

  - Live vs. simulated (mocked) interfaces
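To illustrate the "live vs. simulated" choice, here is a minimal sketch of a simulated interface that can also inject a network failure on demand. Everything in it is hypothetical: the inventory service, its `stock_level` method, and the `can_ship` rule are invented for illustration only.

```python
class LiveInventoryService:
    """Would call the real endpoint; deliberately not implemented in this sketch."""

    def stock_level(self, sku):
        raise NotImplementedError("requires the real test server")


class FakeInventoryService:
    """In-memory stand-in that can also simulate a network outage."""

    def __init__(self, stock, fail=False):
        self._stock = dict(stock)
        self._fail = fail

    def stock_level(self, sku):
        if self._fail:
            raise ConnectionError("simulated network outage")
        return self._stock.get(sku, 0)


def can_ship(service, sku, quantity):
    """Application logic under test: never ship when stock is unknown."""
    try:
        return service.stock_level(sku) >= quantity
    except ConnectionError:
        return False
```

Because `can_ship` only depends on an object with a `stock_level` method, QA can run the same checks against the fake (fast, repeatable, able to fail on command) and later against a real test server.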

Additional Documentation

What other documents exist that can help guide and create the ATP?

- Installation document

- User document

- Help document

White Box Testing

QA often needs to be able to peer into the application in ways the customer doesn't care or need to know about.

- Probes / Test Points

  - Additional applications / instructions on how to verify independently:

    - Internal state (useful in a stateful system where a workflow can be in any number of states)

    - Intermediate outputs (usually exposed information regarding workflow state)

- Logging

  - Developer provides QA with tools to monitor white box logging - the internal guts of what's going on

  - Developer provides QA with tools to monitor black box logging

    - This is "in the field" logging - only non-secure data, more restrictive, logged to a secure server

    - Can often be a customer requirement

    - Can often be useful in figuring out what happened out in the field.

- Error / Exception Reporting

  - What facilities do you provide QA for monitoring code exceptions?

    - Informative message boxes?

    - Email notification?

    - Specific exception logging channel?
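One way to keep white box logging, black box logging, and exception reporting apart is to give each its own logging channel. The sketch below uses Python's standard `logging` module; the logger names, the in-memory streams (standing in for a debug file and a secure log server), and the `process_order` function are assumptions made for illustration.

```python
import io
import logging


def make_logger(name, stream, level):
    """Create an isolated logger that writes only to the given stream."""
    logger = logging.getLogger(name)
    logger.setLevel(level)
    logger.propagate = False
    logger.handlers.clear()
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter("%(name)s %(levelname)s %(message)s"))
    logger.addHandler(handler)
    return logger


# In-memory streams stand in for a debug file and a secure field-log server.
whitebox_stream = io.StringIO()
blackbox_stream = io.StringIO()
exception_stream = io.StringIO()

whitebox = make_logger("qa.whitebox", whitebox_stream, logging.DEBUG)
blackbox = make_logger("qa.blackbox", blackbox_stream, logging.INFO)
exceptions = make_logger("qa.exceptions", exception_stream, logging.ERROR)


def process_order(order_id, account_id, quantity):
    """Hypothetical operation that reports to all three channels."""
    # White box: internal detail, for QA and developers only.
    whitebox.debug("order=%s account=%s qty=%d state=VALIDATING",
                   order_id, account_id, quantity)
    try:
        if quantity <= 0:
            raise ValueError("quantity must be positive")
    except ValueError:
        # Dedicated exception channel; includes the traceback.
        exceptions.exception("order=%s rejected", order_id)
        blackbox.info("order=%s rejected", order_id)
        return False
    # Black box: field logging, with no account details.
    blackbox.info("order=%s accepted", order_id)
    return True
```

The account identifier appears only in the white box stream, which mirrors the idea that field logging carries a more restrictive subset of the data.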

Inputs / Workflows / Outputs

A formalized set of input parameters, workflows, and expected outputs is the cornerstone of the QA process.

- A set of initial input states / values to test against

- The set of expected output states / values for each initial input state/value set

- Reports - are the correct reports created and are they accurate?
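A formalized set of inputs and expected outputs can be written down as a table and run mechanically. The following is a minimal sketch; `order_total_cents` is a hypothetical function under test, and the cases are invented to show the shape of such a table, including an input that is expected to fail.

```python
def order_total_cents(quantity, unit_price_cents, coupon=None):
    """Hypothetical function under test; prices are integer cents."""
    if quantity <= 0:
        raise ValueError("quantity must be positive")
    total = quantity * unit_price_cents
    if coupon == "HALF":
        total //= 2
    return total


# (description, inputs, expected output or expected exception type)
CASES = [
    ("single item", (1, 250), 250),
    ("multiple items", (3, 250), 750),
    ("coupon applied", (2, 250, "HALF"), 250),
    ("unknown coupon ignored", (2, 250, "BOGUS"), 500),
    ("zero quantity rejected", (0, 250), ValueError),
]


def run_cases(func, cases):
    """Run every case and return a list of (description, passed) pairs."""
    results = []
    for description, args, expected in cases:
        try:
            actual = func(*args)
        except Exception as exc:
            # Record the exception type so it can be compared to the table.
            actual = type(exc)
        results.append((description, actual == expected))
    return results
```

Keeping the cases as data rather than as separate test functions means the customer or QA can review and extend the table without reading the test runner.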

Automation Tools

Can the workflows identified above be automated? What tools (both internal and COTS) need to be developed or acquired to aid in test automation?

- Developer and QA work together on internal tools for test automation

  - Test data setup / teardown

  - "Jump to" specific points in the application to test specific behaviors without having to go through a bunch of pre-steps

- QA determines what COTS tools can help with test automation
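The two internal tools named above, test data setup / teardown and a "jump to" hook, might look something like the following sketch. The `Workflow` class, its states, and the `seeded_data` helper are hypothetical, and the helper assumes the seeded keys do not collide with existing data.

```python
from contextlib import contextmanager


class Workflow:
    """Hypothetical stateful workflow with a test-only entry point."""

    STATES = ("DRAFT", "SUBMITTED", "APPROVED", "SHIPPED")

    def __init__(self):
        self.state = "DRAFT"

    def advance(self):
        """Move to the next state in the normal sequence."""
        index = self.STATES.index(self.state)
        if index == len(self.STATES) - 1:
            raise RuntimeError("workflow already complete")
        self.state = self.STATES[index + 1]

    def jump_to(self, state):
        """Test hook: place the workflow directly in a given state."""
        if state not in self.STATES:
            raise ValueError(f"unknown state: {state}")
        self.state = state


@contextmanager
def seeded_data(store, records):
    """Insert records before a test and remove them afterwards, even on failure.

    Assumes the seeded keys do not already exist in the store.
    """
    store.update(records)
    try:
        yield store
    finally:
        for key in records:
            store.pop(key, None)
```

With `jump_to("APPROVED")`, a tester can exercise the shipping step without repeating the draft and submission steps each time, and `seeded_data` leaves the data store as it found it so that tests do not interfere with one another.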

Reporting

How does:

- QA report issues?

- the developer report that issues are fixed?

- the project manager prioritize issues?

- QA re-test and sign off on an issue?

This involves:

- QA has a formal reporting process for bugs, including:

  - COTS tools to track bug fixes

  - Exact steps on how to recreate the issue

- Developer attaches any program-generated logs that:

  - show what the system was doing

  - verify that the steps QA took are actually the correct steps

- Developer/QA documents:

  - additional testing steps that help recreate the problem

  - additional testing steps where the same or similar problem can occur but has been missed in the current ATP

- Customer may have a less formal reporting process, the results of which need to be entered into the formal process

- Project manager has the ability to prioritize issues, move issues to "next release", and track what steps were taken, and how long it took, to resolve an issue.
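In practice a COTS tracker handles this, but the shape of the data is worth seeing. The sketch below models an issue record with reproduction steps, attachments, and a restricted set of status changes; the status names and the `TRANSITIONS` table are a hypothetical workflow, not that of any particular tracking tool.

```python
from dataclasses import dataclass, field

# Hypothetical status workflow: which statuses may follow which.
TRANSITIONS = {
    "REPORTED": {"IN_PROGRESS", "NEXT_RELEASE"},
    "IN_PROGRESS": {"FIXED", "NEXT_RELEASE"},
    "FIXED": {"SIGNED_OFF", "REPORTED"},  # QA re-test passes or fails
    "NEXT_RELEASE": {"IN_PROGRESS"},
    "SIGNED_OFF": set(),
}


@dataclass
class Issue:
    title: str
    steps_to_reproduce: list
    priority: int = 3
    status: str = "REPORTED"
    attachments: list = field(default_factory=list)  # e.g. log file names
    history: list = field(default_factory=list)

    def move_to(self, new_status, who):
        """Change status if the workflow allows it, and record who did it."""
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(f"cannot move from {self.status} to {new_status}")
        self.history.append((self.status, new_status, who))
        self.status = new_status
```

The `history` list is what lets a project manager see which steps were taken to resolve an issue, and the transition table ensures an issue cannot be signed off without passing through a fix and a QA re-test.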

Sign-off

Sign-off involves all the stakeholders:

- Developer

- Customer

- Marketing

- CTO / project manager

- Others

Basic QA Workflow

The steps in the workflow below:

- are not necessarily sequential - they can occur in parallel depending on relationships and involvement with the customer, etc.

- do not need to wait until the application is completely ready. Specific areas of the application can be tested at any point, particularly when milestones are tied to deliverables.

The basic steps are:

- Developer does their own testing

  - In this process, the developer can start creating the "white box" ATP.

- Internal review

  - Before the application is handed over to QA, the developer/team does internal testing.

  - Here is a good place to also do code QA.

  - Any additional guidance to QA can be given here.

  - Any additional aids (as discussed above, test fixtures, simulators, etc) can and should be determined here, if not earlier.

- QA testing

  - QA tests the application.

  - Issues are found and fixed or moved to "next release"

  - Process iterates

- Customer / field testing

  - Customer tests the application.

  - Issues are found and fixed, or workarounds are accepted, or issues are agreed to be moved to "next release".

  - Process iterates.

Limited field testing is often a useful step before releasing the application to everyone. Specific customers that are willing to be guinea pigs for new products, have a fallback system in place in case something goes horribly wrong, and can clearly communicate issues and provide useful feedback -- well, those customers are like gold.

Conclusion

While this article just scratches the surface of a formal QA process, it is intended as an introduction to high level planning and the QA process, especially for teams that have no prior experience working on a "production" application, or for teams looking for ideas on how to improve currently very informal processes. Hopefully this gives you an idea or two!