Introduction

ASP.NET websites and WCF services can be attacked in many ways to slow down the service or even cause a complete outage. An attacker can perform a slowloris attack to exhaust all connections and threads on IIS. They can hit expensive URLs such as download URLs, or exploit an expensive WCF service, to cause high CPU usage and bring down the service. They can open too many parallel connections and stop IIS from accepting more. They can exploit a large file download URL with continuous parallel downloads and exhaust the network bandwidth, causing a complete outage or an expensive bandwidth bill at the end of the month. They can also find a way to insert a large amount of data into the database and exhaust database storage.

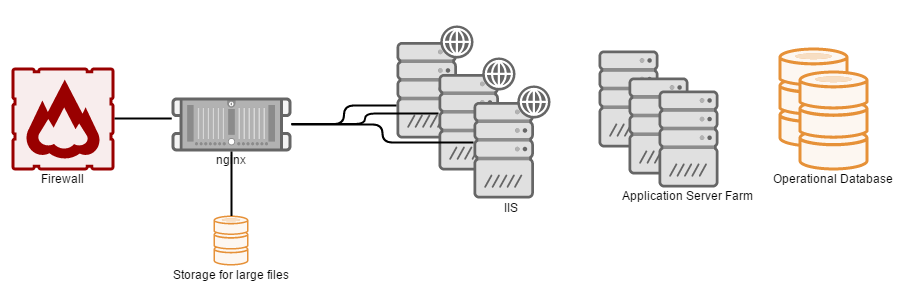

Thus ASP.NET and WCF services, like all other web technology platforms, need more than a standard network firewall. They need a proper Web Application Firewall (WAF), which can inspect exactly what is being done on the application and block malicious transactions specific to the application being protected. Nginx (engine x) can offer many types of defence for ASP.NET and WCF, and it can significantly speed up an ASP.NET website by offloading static files and large file transfers.

Even if you have zero knowledge of Linux, you can still get a decent nginx setup done.

What is nginx

Nginx is a free, open source web server and reverse proxy. It is now the third most used server after Apache and IIS. Besides the free version, there's a commercial version, 'Nginx Plus', which offers more features for load balancing, security and monitoring. The free version has no web console for monitoring (besides command-line tools such as ngxtop), no health check capability on the backend servers besides a simple connection open, no sticky session capability, no TCP checks and so on. The nginx guys have done clever feature planning to move those features to the commercial version, which you will be itching to have once you go live. But that does not mean the free version is useless. You can still make very good use of it. At least it is far better than exposing IIS naked on the internet.

In this article, we will look at the features of the free nginx and how to utilize them to defend ASP.NET and WCF services. Its main feature is to act as a reverse proxy, which offloads backend servers like IIS from serving static files, large downloads, video streaming and so on. It can act as a load balancer and rate limiter, limiting traffic going to the backend webservers.

What Attacks Can We Block?

Using nginx, I will show how to implement the following use cases:

- Block denial of service attacks

  - Block slowloris attacks to prevent complete outage

  - Limit number of open connections per IP, thus reducing DOS attack impact

  - Limit number of HTTP hits made per IP per second/minute, thus reducing DOS attack impact

  - Whitelist, blacklist IPs

  - Limit download bandwidth per IP to prevent bandwidth exhaustion

- ASP.NET and WCF specific protection

  - Protect from large POST on ASP.NET and WCF

  - Define POST size per URL, e.g., 100k on the entire website, except 4MB on a download URL

  - Disable WCF service WSDL/Mex endpoints, so that no one can explore what services are on offer

  - Suppress stack traces from error pages, as stack traces reveal internals of the application that aid attackers

  - Suppress IIS and ASP.NET headers, so that one cannot figure out which version is running and then exploit its vulnerabilities

  - Protect IIS from range attack CVE-2015-1635

- Improve performance and offload IIS

  - Cache ASMX and WCF Ajax proxy on browser and save repeated hits

  - Cache static files on nginx servers, preventing repeated hits going to IIS

  - Offload expensive gzip compression and save IIS CPU

  - Offload large file transfers by storing them on nginx server upon first hit

- Block XSS and injection attacks

- Boost site performance using Google's PageSpeed module for nginx

Deployment

You deploy nginx on a Linux server. A small or moderate Linux VM is enough, as nginx has a very low footprint even when it handles several thousand requests per second. The Linux VM then forwards the connections to the IIS servers.

Installation

There are specific installation instructions available for various Linux distributions. However, I compile it from source code because I need the headers-more-nginx-module, which allows header manipulation. Here are the commands to run as root in order to get nginx and the module downloaded, compiled and installed, ready to use. You should change the nginx URL and folder name based on the latest version of nginx available.

mkdir nginx

cd nginx/

wget http://nginx.org/download/nginx-1.11.2.tar.gz

tar xf nginx-1.11.2.tar.gz

mkdir modules

cd modules/

wget https://github.com/openresty/headers-more-nginx-module/archive/master.zip

unzip master.zip

mv headers-more-nginx-module-master/ headers-more-nginx-module/

cd ..

cd nginx-1.11.2

./configure --prefix=/etc/nginx --sbin-path=/usr/sbin/nginx \
--modules-path=/usr/lib64/nginx/modules --conf-path=/etc/nginx/nginx.conf \
--error-log-path=/var/log/nginx/error.log --http-log-path=/var/log/nginx/access.log \
--pid-path=/var/run/nginx.pid --lock-path=/var/run/nginx.lock \
--http-client-body-temp-path=/var/cache/nginx/client_temp \
--http-proxy-temp-path=/var/cache/nginx/proxy_temp \
--http-fastcgi-temp-path=/var/cache/nginx/fastcgi_temp \
--http-uwsgi-temp-path=/var/cache/nginx/uwsgi_temp \
--http-scgi-temp-path=/var/cache/nginx/scgi_temp \
--user=nginx --group=nginx --with-http_ssl_module --with-http_realip_module \
--with-http_addition_module --with-http_sub_module --with-http_dav_module \
--with-http_flv_module --with-http_mp4_module --with-http_gunzip_module \
--with-http_gzip_static_module --with-http_random_index_module \
--with-http_secure_link_module --with-http_stub_status_module \
--with-http_auth_request_module --with-http_xslt_module=dynamic \
--with-threads --with-stream --with-stream_ssl_module \
--with-http_slice_module --with-mail --with-mail_ssl_module \
--with-file-aio --with-ipv6 \
--with-cc-opt='-O2 -g -pipe -Wall -Wp,-D_FORTIFY_SOURCE=2 -fexceptions -fstack-protector --param=ssp-buffer-size=4 -m64 -mtune=generic' \
--add-module=../modules/headers-more-nginx-module/

make

make install

which nginx

nginx -V

service nginx start

The nginx configuration file is stored at /etc/nginx/nginx.conf.

Let's create a simple configuration file with no protection, just load balancing the backend IIS.

user nginx;

worker_processes 4;

events {

worker_connections 19000;

}

worker_rlimit_nofile 40000;

http {

upstream aspnetservers {

server 10.187.147.99:80 max_fails=3 fail_timeout=30s;

server 10.187.147.100:80 max_fails=3 fail_timeout=30s;

keepalive 32;

}

server {

listen 80;

server_name mynginx.com www.mynginx.com;

location / {

proxy_pass http://aspnetservers;

add_header X-Upstream $upstream_addr;

}

}

}

Key things to remember here:

- http {} - defines common behavior for all HTTP traffic handled by nginx, across all servers, domains and URLs. This is like the global configuration.

- upstream {} - creates a named group of backend IIS servers.

- server {} - defines an individual virtual host, which listens on an IP and port and supports certain host headers. This is similar to a website on IIS.

- location {} - defines URL patterns and how to deal with them. A pattern is either a path prefix or, when preceded by ~ or ~*, a regular expression. It only matches the path part of the URI; the query string is not available for matching.
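To make the last point concrete, here is a small Python sketch (a model, not nginx code) of how a case-insensitive regex location is tested against the path only. The helper name matches_location and the sample URLs are made up for illustration, and URI normalization that nginx performs is not modelled.

```python
import re
from urllib.parse import urlsplit


def matches_location(pattern: str, url: str) -> bool:
    """Mimic an nginx `location ~* pattern` test: a case-insensitive
    regex search against the URI path, with the query string dropped."""
    path = urlsplit(url).path
    return re.search(pattern, path, re.IGNORECASE) is not None


# The query string never takes part in the match.
print(matches_location(r"\.(svc|asmx)$", "/Api/Orders.SVC"))
print(matches_location(r"\.(svc|asmx)$", "/Api/Orders.svc?wsdl"))
print(matches_location(r"\.(svc|asmx)$", "/Api/Orders.aspx?x=.svc"))
```

This is why query-string checks have to be done separately, for example with an if on $args inside the location.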

In the following sections, when I say "put this in http" or "put that in server", you need to understand which scope is meant.

Once you have setup your nginx.conf, you should check this article to make sure you haven't done some configuration that is going to open up vulnerabilities.

Block Denial of Service Attacks

Let's look at various types of denial of service attacks and how to block them.

Slowloris Attacks

Slowloris opens many connections and very slowly sends the request header and body. It puts a delay between each byte it sends and makes IIS think it is a valid client that is just performing slowly. IIS will hold all these connections open and run out of connections. A slowloris attack can be performed using a free, widely available tool.

In order to block a slowloris attack, we need to reject requests that are sent too slowly. Put this inside the http section:

client_body_timeout 5s;

client_header_timeout 5s;

keepalive_timeout 75s;

send_timeout 15s;

Block Too Many Connections Causing Denial of Service

Some forms of DOS attack are performed by opening too many connections and exhausting the available connections at the load balancer or IIS level. Thus we need to implement a maximum open connection limit per IP. While we do that, we need a whitelist mechanism so that we do not impose the limit on clients who do need to open many connections.

Without any connection limit, you can generate a lot of load and blow up server CPU. Here's an example of opening too many connections, doing too many requests and causing very high CPU:

Figure: Sending too many requests

CPU goes very high on IIS.

Let's limit the number of connections that can be opened per IP. It will help reduce the load on the server. Let's put this in the http section:

geo $whitelist {

default 0;

1.2.3.0/24 1;

9.10.11.12/32 1;

127.0.0.1/32 1;

10.0.0.0/8 1;

172.16.0.0/12 1;

192.168.0.0/16 1;

}

map $whitelist $limit {

0 $binary_remote_addr;

1 "";

}

limit_conn_zone $limit zone=connlimit:10m;

limit_conn connlimit 20;

limit_conn_log_level warn;

limit_conn_status 503;
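The geo and map pair above boils down to "whitelisted clients get an empty key, everyone else is keyed by their address", and nginx does not account requests whose key is empty. A minimal Python sketch of that decision, assuming IPv4 clients; the function name limit_key is hypothetical:

```python
import ipaddress

# Same networks as in the geo block above.
WHITELIST = [ipaddress.ip_network(n) for n in (
    "1.2.3.0/24", "9.10.11.12/32", "127.0.0.1/32",
    "10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16",
)]


def limit_key(client_ip: str) -> bytes:
    """Return the key used for limiting: empty for whitelisted clients
    (so no limit applies), otherwise the packed binary address."""
    addr = ipaddress.ip_address(client_ip)
    if any(addr in net for net in WHITELIST):
        return b""
    return addr.packed


print(limit_key("10.5.6.7"))   # whitelisted, empty key
print(limit_key("8.8.8.8"))    # limited, keyed by its 4-byte address
```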

Once we deploy this, clients start getting a lot of 503 responses from nginx.

We can see many requests are now failing, which is good news! But IIS is still getting fried.

When so many requests are getting rejected at nginx, IIS should be consuming a lot less CPU, shouldn't it?

The reason is that, even with the connection limit, over 600 req/sec are reaching IIS, which is enough to blow up the server. We have imposed a limit on the number of open connections per IP, but not on the number of requests an IP can make over those connections. Each IP can send a large number of requests through a small number of open connections. What we really need is a req/sec limit imposed at nginx. Let's do that in the next section.

Blocking High Request/sec and Brute Force Attacks

Most brute force attacks are performed either by sending many parallel requests, or by sending many consecutive requests over a small number of open connections. DOS attacks are also performed on URLs or services which are cheap individually but can be hit very frequently, in order to generate a high number of hits/sec and cause excessive CPU usage. To protect our system from brute force and this form of DOS attack, we need to impose a request/sec limit per IP. Here's how you can do that in nginx:

limit_req_zone $limit zone=one:10m rate=50r/s;

limit_req zone=one burst=10;

limit_req_log_level warn;

limit_req_status 503;

Once we deploy rate limit, we can see IIS CPU has gone down to normal level:

We can also see number of successful requests reaching IIS is below the 50 req/sec limit.

You might wonder: why not put a max request/sec limit per IIS server instead? Because then a single IP that manages to consume that limit would cause an outage for everyone.
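To get a feel for what rate=50r/s with burst=10 does to one IP, here is a simplified Python model of leaky-bucket accounting. It is my own sketch, not nginx source: it only decides pass or reject and ignores the delay nginx applies to requests that fit within the burst. The function name and the millisecond timestamps are assumptions for illustration.

```python
def simulate_limit_req(arrivals_ms, rate_per_sec=50, burst=10):
    """Return a list of booleans, True where a request is let through.

    `excess` counts how far the client is ahead of the allowed rate,
    in thousandths of a request. It drains at `rate_per_sec` and grows
    by one request (1000) on every accepted hit.
    """
    excess = 0
    last = None
    passed = []
    for now in arrivals_ms:
        if last is None:
            candidate = 0
        else:
            candidate = max(excess - rate_per_sec * (now - last), 0) + 1000
        if candidate > burst * 1000:
            passed.append(False)      # over the burst: rejected (503)
            continue
        excess, last = candidate, now
        passed.append(True)
    return passed


flood = simulate_limit_req([0] * 100)             # 100 hits at the same instant
steady = simulate_limit_req(range(0, 2000, 20))   # one hit every 20 ms = 50 r/s
print(sum(flood), "of", len(flood), "flood requests pass")
print(sum(steady), "of", len(steady), "steady requests pass")
```

In this model a client that stays at or below 50 req/sec is never rejected, while an instantaneous flood only gets the first request plus the burst allowance through.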

Whitelist and Blacklist IP

This is very easy: just add "deny <ip>;" statements inside the http scope to block an IP completely. If you put them inside a server block, only that virtual host gets the block. You can also block certain IPs on certain locations:

location / {

deny 192.168.1.1;

allow 192.168.1.0/24;

allow 10.1.1.0/16;

allow 2001:0db8::/32;

deny all;

}

If you want to block everyone and whitelist certain IPs, put in some "allow <ip>;" statements and end with "deny all;". The rules are checked in order and the first match wins.
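nginx checks allow and deny rules in sequence and stops at the first one that matches, so the order of the lines matters. The following Python sketch replays the location block above under that rule; the is_allowed helper is hypothetical and only models the matching order.

```python
import ipaddress

# Same rules, same order, as in the location block above.
RULES = [
    ("deny", "192.168.1.1"),
    ("allow", "192.168.1.0/24"),
    ("allow", "10.1.1.0/16"),
    ("allow", "2001:0db8::/32"),
    ("deny", "all"),
]


def is_allowed(client_ip: str, rules=RULES) -> bool:
    """Walk the rules top to bottom; the first matching rule decides."""
    addr = ipaddress.ip_address(client_ip)
    for action, target in rules:
        if target == "all":
            return action == "allow"
        # strict=False tolerates host bits, e.g. 10.1.1.0/16 -> 10.1.0.0/16
        net = ipaddress.ip_network(target, strict=False)
        if addr.version == net.version and addr in net:
            return action == "allow"
    return True  # nothing matched: access is not restricted


for ip in ("192.168.1.1", "192.168.1.5", "10.1.200.3", "2001:db8::1", "8.8.8.8"):
    print(ip, "allowed" if is_allowed(ip) else "denied")
```

If the "deny 192.168.1.1;" line were moved below "allow 192.168.1.0/24;", it would never take effect, because the allow rule would match first.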

Prevent Bandwidth Exhaustion

Someone can perform a DOS attack by exhausting your monthly bandwidth quota. It is usually done by downloading large files repeatedly. Since the attacker's download bandwidth is usually not limited, one can get a cheap VM, hit a server and exhaust its monthly bandwidth quota quite easily. So, we need to impose a limit on the network transfer rate per IP.

First, put this at http scope:

limit_conn_zone $binary_remote_addr zone=addr:10m;

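Then put this inside the server scope:

location ~* /Download$ {

limit_rate_after 200k;

limit_rate 100k;

limit_conn addr 2;

proxy_pass http://aspnetservers;

}

This puts a limit on any path which ends with /Download. For example, /Home/Index/Download would get this limit. Say you have made a controller that serves a file: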

using System.Configuration;

using System.IO;

using System.Web;

using System.Web.Mvc;

public class HomeController : Controller

{

public ActionResult Index()

{

ViewBag.Title = "Home Page";

return View();

}

public FileResult Download()

{

var path = ConfigurationManager.AppSettings["Video"];

return File(path,

MimeMapping.GetMimeMapping(path), Path.GetFileName(path));

}

}

Nginx would protect such vulnerable Controllers.

Let's understand the nginx configuration a bit more. limit_rate_after says the first 200 KB are sent at full speed; beyond that, the transfer is limited to 100 KB/sec per connection. Moreover, limit_conn addr 2 allows only 2 parallel connections per IP to this URL. If you leave that out, someone can open many parallel connections, download at 100 KB/sec on each, and still break your piggy bank.
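The arithmetic behind these three directives can be sketched in a few lines of Python. This is a back-of-the-envelope model under my own assumptions: the full-speed part is treated as instantaneous, and the function names are made up for illustration.

```python
KB = 1024  # an nginx size with a "k" suffix is in kilobytes


def download_seconds(size_bytes, full_speed_bytes=200 * KB, rate=100 * KB):
    """Time spent in the throttled part of one download."""
    throttled = max(size_bytes - full_speed_bytes, 0)
    return throttled / rate


def max_bytes_per_ip(seconds, rate=100 * KB, connections=2):
    """Upper bound on throttled bytes one IP can pull in `seconds`."""
    return rate * connections * seconds


print(download_seconds(50 * 1024 * KB))            # a 50 MB file, in seconds
print(max_bytes_per_ip(30 * 24 * 3600) / 1024**3)  # GiB per 30 days, per IP
```

With these numbers a single IP is capped at 200 KB/sec in total, which works out to roughly 494 GiB of throttled transfer over a 30-day month. Without limit_conn the cap would scale with the number of connections the attacker opens.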

You should put such limit on static files as well:

location ~* \.(mp4|flv|css|bmp|tif|ttf|docx|woff2|js|pict|tiff|eot|xlsx|jpg|csv|eps|woff|xls|jpeg|doc|ejs|otf|pptx|gif|pdf|swf|svg|ps|ico|pls|midi|svgz|class|png|ppt|mid|webp|jar)$ {

limit_rate_after 200k;

limit_rate 100k;

limit_conn addr 2;

}

Cache Static Files on nginx Servers

Nginx is awesome at caching files and then serving them without sending requests to backend servers. You can use nginx to deliver static files for your website and generate custom expiration and cache headers as you like.

First, you put this at http scope:

proxy_cache_path /var/nginx/cache levels=1:2 keys_zone=STATIC:10m inactive=24h max_size=1g;

Then you put this inside server scope:

location ~* \.(css|bmp|tif|ttf|docx|woff2|js|pict|tiff|eot|xlsx|jpg|csv|eps|woff|xls|jpeg|doc|ejs|otf|pptx|gif|pdf|swf|svg|ps|ico|pls|midi|svgz|class|png|ppt|mid|webp|jar)$ {

proxy_cache STATIC;

expires 2d;

add_header X-Proxy-Cache $upstream_cache_status;

proxy_ignore_headers "Set-Cookie";

proxy_hide_header "Set-Cookie";

proxy_cache_valid 200 302 2d;

proxy_cache_valid 301 1h;

proxy_cache_valid any 1m;

proxy_pass http://aspnetservers;

}

All those proxy_ignore_headers, proxy_hide_header and proxy_cache_valid options are necessary to make caching work properly; otherwise it malfunctions. This is one area of nginx that took me a lot of time to get working. I pieced it together from many articles and Stack Overflow answers addressing various problems, to eventually reach the final combination of parameters that you see here.

You can test it using curl:

> curl -I -X GET http://mynginx.com/loadtest/Content/bootstrap.css

HTTP/1.1 200 OK

Server: nginx

Date: Thu, 28 Jul 2016 15:57:44 GMT

Content-Type: text/css

Content-Length: 120502

Connection: keep-alive

Vary: Accept-Encoding

Last-Modified: Wed, 20 Jul 2016 22:18:33 GMT

ETag: "37e47a7d4e2d11:0"

Expires: Sat, 30 Jul 2016 15:57:44 GMT

Cache-Control: max-age=172800

X-Proxy-Cache: HIT

Accept-Ranges: bytes

The response shows X-Proxy-Cache:HIT meaning it has been served from nginx without hitting IIS. If it was MISS, then it would have gone to IIS. On first hit, you will get MISS, but subsequent hits should be HIT.
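If you would rather script this check than read curl output by eye, a small Python sketch along these lines can help. Both function names are my own, and fetch_cache_status assumes the site is reachable from where the script runs.

```python
import urllib.request


def cache_status(raw_headers: str) -> str:
    """Extract the X-Proxy-Cache value from a raw header block."""
    for line in raw_headers.splitlines():
        name, sep, value = line.partition(":")
        if sep and name.strip().lower() == "x-proxy-cache":
            return value.strip()
    return ""


def fetch_cache_status(url: str) -> str:
    """Issue a GET and return the X-Proxy-Cache header (empty if absent)."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return resp.headers.get("X-Proxy-Cache", "")


sample = "HTTP/1.1 200 OK\nServer: nginx\nX-Proxy-Cache: HIT\nAccept-Ranges: bytes"
print(cache_status(sample))
```

Calling fetch_cache_status twice on the same static URL should show the first request as a MISS and the second as a HIT, provided the cache was empty to begin with.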

Offload Large File Transfers

You can store large files like videos, PDFs and documents on the nginx server's storage and then serve them directly when a certain URL is hit. For example, the config below shows how to serve content from the nginx server:

location ~* /Download$ {

sendfile on;

aio threads;

limit_rate_after 200k;

limit_rate 100k;

limit_conn addr 2;

expires 2d;

root /var/nginx/cache/video;

error_page 404 = @fetch;

}

It looks for the file in the local path that you have defined in root. For example, when you hit a URL /Home/Index/Download, then it reads the file /var/nginx/cache/video/Home/Index/Download. If you have used the mp4 pattern, and then you hit a URL /Home/video.mp4, then it will look for a file in /var/nginx/cache/video/Home/video.mp4.

But what if the file is being hit for the very first time and nginx hasn't cached it in local storage yet? Then HTTP 404 is produced, which then gets redirected to the following location rule:

location @fetch {

internal;

add_header X-Proxy-Cache $upstream_cache_status;

proxy_ignore_headers "Set-Cookie";

proxy_hide_header "Set-Cookie";

proxy_cache_valid 200 302 2d;

proxy_cache_valid 301 1h;

proxy_cache_valid any 1m;

proxy_pass http://aspnetservers;

proxy_store on;

proxy_store_access user:rw group:rw all:r;

proxy_temp_path /var/nginx/cache/video;

root /var/nginx/cache/video;

}

The first part is the same as for caching css, js and other static files. The important part is the proxy_store directives, which tell nginx to permanently save the file received from the backend server under the root path.
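The "serve locally, fall back to the backend on 404, then keep a copy" flow of these two locations can be sketched in Python as follows. This only models the control flow; the serve function and the fake backend are hypothetical, and path sanitising is left out.

```python
import os
import tempfile


def serve(uri, root, fetch_from_backend):
    """Serve `uri` from `root` if present, else fetch it and store a copy."""
    local = os.path.join(root, uri.lstrip("/"))
    if os.path.isfile(local):
        with open(local, "rb") as f:
            return f.read(), "local"
    # Not stored yet: this is the error_page 404 = @fetch branch.
    body = fetch_from_backend(uri)
    os.makedirs(os.path.dirname(local), exist_ok=True)
    with open(local, "wb") as f:          # what proxy_store does
        f.write(body)
    return body, "backend"


calls = []


def fake_backend(uri):
    calls.append(uri)
    return b"video bytes"


with tempfile.TemporaryDirectory() as root:
    first = serve("/Home/Index/Download", root, fake_backend)
    second = serve("/Home/Index/Download", root, fake_backend)

print(first[1], second[1], len(calls))
```

The stored copy never expires on its own, so if the file changes on the backend you have to delete it from the nginx server yourself.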

Offload Expensive gzip Compression

This is controversial. Should you put all gzip compression load on your central nginx server, which is a single point of failure, or should you instead distribute the gzip load over your IIS servers? It depends on where it costs the least. If you find IIS consuming too much CPU serving your CPU hungry application, then you might want to offload it on nginx. Otherwise, leave it on IIS and let nginx be free.

Put this block in http scope for turning on gzip compression in all requests. You can also do it at server or location level:

gzip on;

gzip_disable "msie6";

gzip_http_version 1.1;

gzip_comp_level 5;

gzip_min_length 256;

gzip_proxied any;

gzip_vary on;

gzip_types

application/atom+xml

application/javascript

application/json

application/rss+xml

application/vnd.ms-fontobject

application/x-font-ttf

application/x-web-app-manifest+json

application/xhtml+xml

application/xml

font/opentype

image/svg+xml

image/x-icon

text/css

text/plain

text/x-component;
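As a rough illustration of which responses this block ends up compressing, here is a Python sketch of the decision. It is a simplified model under my own assumptions: it looks only at the MIME type, the length and whether the client accepts gzip, and the function name is made up.

```python
# MIME types from the gzip_types directive above.
GZIP_TYPES = {
    "application/atom+xml", "application/javascript", "application/json",
    "application/rss+xml", "application/vnd.ms-fontobject",
    "application/x-font-ttf", "application/x-web-app-manifest+json",
    "application/xhtml+xml", "application/xml", "font/opentype",
    "image/svg+xml", "image/x-icon", "text/css", "text/plain",
    "text/x-component",
}
GZIP_MIN_LENGTH = 256


def should_gzip(content_type, content_length, accepts_gzip=True):
    """text/html is always eligible; other types must be listed."""
    mime = content_type.split(";")[0].strip().lower()
    return (accepts_gzip
            and content_length >= GZIP_MIN_LENGTH
            and (mime == "text/html" or mime in GZIP_TYPES))


print(should_gzip("text/css", 120502))
print(should_gzip("image/png", 120502))
print(should_gzip("application/json; charset=utf-8", 100))
```

Already-compressed formats such as PNG or MP4 are deliberately absent from the list, since compressing them again costs CPU for little gain.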

.NET Specific Protection

Protect from Large POST on ASP.NET and WCF

First, you should define a low site-wide limit on POST size so that you do not accidentally leave some vulnerable endpoint letting someone post large bodies. You should put this at http scope:

client_max_body_size 100k;

client_body_buffer_size 128k;

client_body_in_single_buffer on;

client_body_temp_path /var/nginx/client_body_temp;

client_header_buffer_size 1k;

large_client_header_buffers 4 4k;

Then on specific URLs, you can define higher limits. For example, say you have implemented an upload controller action:

[HttpPost]

public ActionResult Index(HttpPostedFileBase file)

{

if (file != null && file.ContentLength > 0)

{

var fileName = Path.GetFileName(file.FileName);

var path = Path.Combine(Server.MapPath("~/App_Data/"), fileName);

file.SaveAs(path);

}

return RedirectToAction("Index");

}

You want to allow up to 500KB upload on this URL:

location ~* /Home/Index$ {

client_max_body_size 500k;

proxy_pass http://aspnetservers;

}

Similarly, say you have WCF/ASMX webservices where you want to allow up to 200 KB POST requests:

location ~* \.(svc|asmx)$ {

client_max_body_size 200k;

proxy_pass http://aspnetservers;

}
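Putting the site-wide default and the two overrides together, the effective limit per URL can be modelled like this in Python. It is a sketch under my assumptions: the regex locations are tried in the order listed and the first match wins. The names max_body_size and accepts_post are hypothetical.

```python
import re

K = 1024
DEFAULT_LIMIT = 100 * K                      # client_max_body_size at http scope
LOCATION_LIMITS = [                          # regex locations, in config order
    (re.compile(r"/Home/Index$", re.IGNORECASE), 500 * K),
    (re.compile(r"\.(svc|asmx)$", re.IGNORECASE), 200 * K),
]


def max_body_size(path: str) -> int:
    """Return the body size limit that applies to `path`."""
    for pattern, limit in LOCATION_LIMITS:
        if pattern.search(path):
            return limit
    return DEFAULT_LIMIT


def accepts_post(path: str, content_length: int) -> bool:
    """False means nginx would answer 413 without bothering IIS."""
    return content_length <= max_body_size(path)


print(max_body_size("/Home/Index") // K, "k")
print(max_body_size("/Api/Orders.svc") // K, "k")
print(accepts_post("/Account/Login", 150 * K))
```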

Disable WCF Service WSDL/Mex Endpoints

You should disable WCF service WSDL/Mex endpoints, so that no one can explore what services are on offer. Seeing the definition of a service makes a hacker's life easier, as they can identify what can be exploited. By hiding the definition on the production server, you make their job harder.

You can listen to this great talk "Attacking WCF".

location ~* \.svc\/mex$ {

return 444;

}

location ~* \.(svc|asmx)$ {

client_max_body_size 200k;

if ( $args ~* wsdl ) {

return 444;

}

proxy_pass http://aspnetservers;

}
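To sanity-check which URLs these two locations drop, here is a Python model of the same tests. The is_blocked helper is hypothetical; it mirrors the path regexes and the case-insensitive wsdl check on the query string.

```python
import re
from urllib.parse import urlsplit


def is_blocked(url: str) -> bool:
    """True if the connection would be dropped by the rules above."""
    parts = urlsplit(url)
    if re.search(r"\.svc/mex$", parts.path, re.IGNORECASE):
        return True
    if re.search(r"\.(svc|asmx)$", parts.path, re.IGNORECASE):
        return re.search(r"wsdl", parts.query, re.IGNORECASE) is not None
    return False


for url in ("/Orders.svc/mex", "/Orders.svc?wsdl", "/Orders.svc?singleWsdl",
            "/Orders.asmx?WSDL", "/Orders.svc", "/Orders.svc/GetOrder?id=1"):
    print(url, "blocked" if is_blocked(url) else "passed")
```

Note that the wsdl test matches anywhere in the query string, so a legitimate call to a .svc or .asmx URL whose query string happens to contain "wsdl" would be dropped as well.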

Suppress Stack Trace From Error Pages

You should suppress stack traces from error pages and from error responses of JSON/XML services. Stack traces reveal internals of the application that aid attackers. You want to make an attacker's life harder and waste as much of their time as possible. One easy way to do it is to capture all HTTP 500 responses generated by IIS and return a fake error message instead. Someone trying to find out information about your application by generating errors from every endpoint will waste a lot of time before figuring out that it is all fake.

server {

listen 80;

server_name mynginx.com www.mynginx.com;

error_page 500 502 503 504 = /500.html;

proxy_intercept_errors on;

location /500.html {

return 500 "{\"Message\":\"An error has occurred.\",\"ExceptionMessage\":

\"An exception has occurred\",\"ExceptionType\":\"SomeException\",\"StackTrace\": null}";

}

}

Suppress IIS and ASP.NET Headers

You should suppress IIS and ASP.NET headers, so that one cannot figure out which version is running and then exploit the vulnerabilities. You can define this at http scope:

server_tokens off;

more_clear_headers 'X-Powered-By';

more_clear_headers 'X-AspNet-Version';

more_clear_headers 'X-AspNetMvc-Version';

Before, all your dirty laundry is out:

HTTP/1.1 200 OK

Server: nginx/1.11.2

Date: Thu, 28 Jul 2016 16:58:49 GMT

Content-Type: text/html; charset=utf-8

Content-Length: 3254

Connection: keep-alive

Vary: Accept-Encoding

Cache-Control: private

X-AspNetMvc-Version: 5.2

X-AspNet-Version: 4.0.30319

X-Powered-By: ASP.NET

After:

HTTP/1.1 200 OK

Server: nginx

Date: Mon, 25 Jul 2016 08:54:08 GMT

Content-Type: text/html; charset=utf-8

Content-Length: 3062

Connection: keep-alive

Keep-Alive: timeout=20

Vary: Accept-Encoding

Cache-Control: private

Notice that I have left Server: nginx header intact, but just hidden the version. This is a deterrent. Once an attacker sees you are smart enough to use nginx, they will be put off, because they know they can't break nginx so easily. They will realize that they need much more budget to break your website since nginx will make it quite hard to perform Denial of Service. So, proudly show your nginx to everyone.

Cache ASMX and WCF Ajax Proxy on Browser and Save Repeated Hits

You should cache the ASMX and WCF Ajax proxies in the browser and save repeated hits. This is one of the killer optimization techniques, because if you don't do this, the browser will hit the server on every page load to download the Ajax proxies. This is an awful waste of traffic and one of the main reasons ASP.NET AJAX websites load slowly.

If you don't do this, every time you hit a .svc/js or .asmx/js URL, you get no cache header:

HTTP/1.1 200 OK

Cache-Control: public

Content-Length: 2092

Content-Type: application/x-javascript

Expires: Thu, 28 Jul 2016 17:01:51 GMT

Last-Modified: Thu, 28 Jul 2016 17:01:51 GMT

Server: Microsoft-IIS/7.5

X-AspNet-Version: 4.0.30319

X-Powered-By: ASP.NET

Date: Thu, 28 Jul 2016 17:02:41 GMT

The Expires header with the current date and time kills all caching.

Now put this in nginx config:

location ~* \.(svc|asmx)\/js$ {

expires 1d;

more_clear_headers 'Expires';

proxy_pass http://aspnetservers;

}

Then you get nice cache headers:

Cache-Control:max-age=86400

Connection:keep-alive

Content-Length:2096

Content-Type:application/x-javascript

Date:Wed, 27 Jul 2016 07:59:20 GMT

Last-Modified:Wed, 27 Jul 2016 07:59:20 GMT

Server:nginx

Browser shows it is loading the ajax proxies from cache:
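The relationship between an expires lifetime and the cache headers it produces is simple arithmetic, sketched here in Python. The helper name is mine; in the config above the Expires header is then cleared, so only Cache-Control reaches the browser.

```python
from datetime import datetime, timedelta, timezone
from email.utils import format_datetime


def expires_headers(now: datetime, lifetime: timedelta):
    """Return the (Expires, Cache-Control) values for a given lifetime."""
    expires = format_datetime(now + lifetime, usegmt=True)
    cache_control = f"max-age={int(lifetime.total_seconds())}"
    return expires, cache_control


# The response above was dated Wed, 27 Jul 2016 07:59:20 GMT.
served_at = datetime(2016, 7, 27, 7, 59, 20, tzinfo=timezone.utc)
print(expires_headers(served_at, timedelta(days=1)))
```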

Protect IIS from Range Attack CVE-2015-1635

This is an old one. Most IIS servers are already patched. But you should still put it on nginx to protect any vulnerable IIS:

map $http_range $saferange {

"~\d{10,}" "";

default $http_range;

}

proxy_set_header Range $saferange;

This prevents someone from sending a range header with very large value and causing IIS to go kaboom!
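The map above can be read as "if the Range header contains a run of ten or more digits, blank it out". A Python equivalent of that test, with a hypothetical function name and sample headers of my own choosing:

```python
import re


def safe_range(range_header: str) -> str:
    """Return the header unchanged unless it contains 10+ digits in a row."""
    if re.search(r"\d{10,}", range_header):
        return ""
    return range_header


print(repr(safe_range("bytes=0-1023")))
print(repr(safe_range("bytes=0-18446744073709551615")))
```

One side effect worth knowing: ten digits means any offset from 1,000,000,000 bytes upward, so legitimate range requests into the later part of files bigger than about 1 GB will also lose their Range header and receive the whole file.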

Block XSS and Injection Attacks

Nginx has a powerful module, Naxsi, which offers XSS and injection attack defence. You can read all about it on the Naxsi website.

Boost Site Performance using Google's PageSpeed Module for nginx

Google has a PageSpeed module for nginx, which does a lot of automatic optimization. It has a long list of features that one can utilize to make significant performance improvement. Example and demos are here.

Conclusion

Unless you are using the commercial NGINX Plus, I would use the free open source nginx only as a caching proxy for static files, especially for delivering large files. For everything else, I would use HAProxy because it has a much better monitoring console, health checks, load balancing controls, sticky sessions, content body inspection, TCP checks and so on. On the other hand, nginx has a powerful Web Application Firewall module, Naxsi, which blocks XSS and many types of injection attacks. So, to maximize performance, monitoring, protection and availability, you need to use a combination of nginx and HAProxy.

The complete nginx.conf

user nginx;

worker_processes 4;

error_log /var/log/nginx/error.log warn;

pid /var/run/nginx.pid;

events {

worker_connections 19000;

}

worker_rlimit_nofile 40000;

http {

include /etc/nginx/mime.types;

default_type application/octet-stream;

log_format full '$remote_addr - $remote_user [$time_local] '

'"$request" $status $body_bytes_sent '

'"$http_referer" "$http_user_agent" '

'$request_time '

'$upstream_addr '

'$upstream_status '

'$upstream_connect_time '

'$upstream_header_time '

'$upstream_response_time';

access_log /var/log/nginx/access.log full;

include /etc/nginx/conf.d/*.conf;

include /etc/nginx/blacklist.conf;

ssl_protocols TLSv1 TLSv1.1 TLSv1.2;

client_body_timeout 5s;

client_header_timeout 5s;

keepalive_timeout 75s;

send_timeout 15s;

client_max_body_size 100k;

client_body_buffer_size 128k;

client_body_in_single_buffer on;

client_body_temp_path /var/nginx/client_body_temp;

client_header_buffer_size 1k;

large_client_header_buffers 4 4k;

map $http_range $saferange {

"~\d{10,}" "";

default $http_range;

}

proxy_set_header Range $saferange;

gzip on;

gzip_disable "msie6";

gzip_http_version 1.1;

gzip_comp_level 5;

gzip_min_length 256;

gzip_proxied any;

gzip_vary on;

gzip_types

application/atom+xml

application/javascript

application/json

application/rss+xml

application/vnd.ms-fontobject

application/x-font-ttf

application/x-web-app-manifest+json

application/xhtml+xml

application/xml

font/opentype

image/svg+xml

image/x-icon

text/css

text/plain

text/x-component;

output_buffers 1 32k;

postpone_output 1460;

sendfile on;

sendfile_max_chunk 512k;

tcp_nopush on;

tcp_nodelay on;

proxy_cache_path /var/nginx/cache levels=1:2 keys_zone=STATIC:10m inactive=24h max_size=1g;

proxy_redirect off;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_connect_timeout 5;

proxy_send_timeout 90;

proxy_read_timeout 90;

proxy_buffering on;

proxy_buffer_size 4k;

proxy_buffers 4 32k;

proxy_busy_buffers_size 64k;

proxy_temp_file_write_size 64k;

proxy_temp_path /var/nginx/proxy_temp;

proxy_http_version 1.1;

proxy_next_upstream error http_502 http_503 http_504;

proxy_next_upstream_timeout 30s;

proxy_next_upstream_tries 1;

server_tokens off;

more_clear_headers 'X-Powered-By';

more_clear_headers 'X-AspNet-Version';

more_clear_headers 'X-AspNetMvc-Version';

geo $whitelist {

default 0;

1.2.3.0/24 1;

9.10.11.12/32 1;

127.0.0.1/32 1;

10.0.0.0/8 1;

192.168.0.0/16 1;

}

map $whitelist $limit {

0 $binary_remote_addr;

1 "";

}

limit_conn_zone $limit zone=connlimit:10m;

limit_conn connlimit 20;

limit_conn_log_level warn;

limit_conn_status 503;

limit_req_zone $limit zone=one:10m rate=50r/s;

limit_req zone=one burst=10;

limit_req_log_level warn;

limit_req_status 503;

limit_conn_zone $binary_remote_addr zone=addr:10m;

upstream aspnetservers {

server 10.187.147.99:80 max_fails=3 fail_timeout=30s;

keepalive 32;

}

server_names_hash_bucket_size 128;

server {

listen 80 default_server;

server_name "";

return 444;

}

server {

listen 80;

server_name mynginx.com www.mynginx.com;

error_page 500 502 503 504 = /500.html;

proxy_intercept_errors on;

location / {

proxy_pass http://aspnetservers;

}

location ~* /Home/Index$ {

client_max_body_size 500k;

proxy_pass http://aspnetservers;

}

location ~* \.(svc|asmx)\/js$ {

expires 1d;

more_clear_headers 'Expires';

proxy_pass http://aspnetservers;

}

location ~* \.svc\/mex$ {

return 444;

}

location ~* \.(svc|asmx)$ {

client_max_body_size 200k;

if ( $args ~* wsdl ) {

return 444;

}

proxy_pass http://aspnetservers;

}

location /500.html {

return 500 "{\"Message\":\"An error has occurred.\",\"ExceptionMessage\":

\"An exception has occurred\",\"ExceptionType\":\"SomeException\",\"StackTrace\": null}";

}

location ~* \.(css|bmp|tif|ttf|docx|woff2|js|pict|tiff|eot|xlsx|jpg|csv|eps|woff|xls|jpeg|doc|ejs|otf|pptx|gif|pdf|swf|svg|ps|ico|pls|midi|svgz|class|png|ppt|mid|webp|jar)$ {

proxy_cache STATIC;

expires 2d;

add_header X-Proxy-Cache $upstream_cache_status;

proxy_ignore_headers "Set-Cookie";

proxy_hide_header "Set-Cookie";

proxy_cache_valid 200 302 2d;

proxy_cache_valid 301 1h;

proxy_cache_valid any 1m;

proxy_pass http://aspnetservers;

}

location ~* /Download$ {

sendfile on;

aio threads;

limit_rate_after 200k;

limit_rate 100k;

limit_conn addr 2;

expires 2d;

root /var/nginx/cache/video;

error_page 404 = @fetch;

}

location @fetch {

internal;

add_header X-Proxy-Cache $upstream_cache_status;

proxy_ignore_headers "Set-Cookie";

proxy_hide_header "Set-Cookie";

proxy_cache_valid 200 302 2d;

proxy_cache_valid 301 1h;

proxy_cache_valid any 1m;

proxy_pass http://aspnetservers;

proxy_store on;

proxy_store_access user:rw group:rw all:r;

proxy_temp_path /var/nginx/cache/video;

root /var/nginx/cache/video;

}

location /status {

stub_status on;

access_log off;

}

}

}