Introduction

Artificial Neural Networks (ANNs) have been used in many fields for a variety of applications. Despite their unique advantages, such as their non-parametric nature, their ability to form arbitrary decision boundaries, and their easy adaptation to different types of data, they have some inherent limitations.

These limitations stem from the problem of structure, which involves three factors: the number of hidden layers, the size of these layers, and the level of connectivity between layers.

These factors can affect both the classification accuracy and the estimates produced by the network. Large networks behave like memory banks: they almost memorize the input-output pairs, so for input vectors within the range of the training set the network returns values almost identical to the actual data, but outside that range its outputs tend to differ greatly from the real values.

Conversely, small networks perform poorly at classification but tend to estimate better outside the range of the training set.

In this article, backpropagation ANNs are used with supervised training. We focus on determining the best network structure rather than on modifying the structure of existing networks, which could be the subject of another article.

Background

The main problem is finding the right structure to balance the size and the number of connections between the layers of the network so that it can classify and estimate at the same time with the least possible error.

In this project, we propose a generator of artificial neural networks trained with a dataset loaded from a CSV file. The dataset is divided into two parts: a training set and a monitoring (verification) set. Each network is trained on the training set for a number of iterations, and the networks are then ranked by a score determined by the mean squared error and the average magnitude of the differences between each trained network's outputs and the monitoring vectors. A cut is then made, keeping the best-performing half of the networks. The process is repeated until a single winning network remains, which is likely to have the best structure for the problem.
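The selection loop described above can be sketched as follows. This is a minimal sketch, not the article's implementation: the `trainAndScore` delegate is a hypothetical stand-in for training one network for a given number of epochs and returning its score (lower is better), the default of 100 initial epochs is arbitrary, and doubling the epochs each round is an assumed form of the exponential growth in training that the article describes.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class StructureTournament
{
    // Hypothetical sketch of the halving selection. trainAndScore(structure, epochs)
    // stands in for training one ANN and returning its score (lower is better).
    public static int[] SelectBest(
        IReadOnlyList<int[]> structures,
        Func<int[], int, double> trainAndScore,
        int initialEpochs = 100)
    {
        if (structures.Count == 0)
            throw new ArgumentException("At least one structure is required.", nameof(structures));

        var survivors = structures.ToList();
        int epochs = initialEpochs;

        while (survivors.Count > 1)
        {
            // Networks are independent, so they can be trained in parallel.
            var ranked = survivors
                .AsParallel()
                .Select(s => (Structure: s, Score: trainAndScore(s, epochs)))
                .OrderBy(r => r.Score)
                .ToList();

            // Keep the best half; rounding up lets an odd count still shrink.
            survivors = ranked.Take((ranked.Count + 1) / 2).Select(r => r.Structure).ToList();

            // ASSUMPTION: exponential growth of training modeled as doubling.
            epochs *= 2;
        }
        return survivors[0];
    }
}
```

Because the candidates are trained concurrently through PLINQ, whatever `trainAndScore` does must be thread-safe.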

In each iteration, the number of training epochs increases exponentially. Networks are trained in parallel, taking advantage of the fact that each network can be trained independently of the others. All layers use the sigmoid transfer function, so all inputs and outputs are normalized; as a result, the outputs and the mean squared error values are on a standardized scale.
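Because the sigmoid produces values between 0 and 1, each input and output column has to be rescaled into that range before training and mapped back afterwards. The article does not say which scaling it uses; the sketch below assumes plain min-max scaling, and `MinMaxScaler` is a hypothetical name.

```csharp
using System.Linq;

public static class MinMaxScaler
{
    // Rescales a column into [0, 1], the output range of the sigmoid function.
    public static (double[] Scaled, double Min, double Max) Normalize(double[] values)
    {
        double min = values.Min();
        double max = values.Max();
        double range = max - min;
        // A constant column is mapped to 0 to avoid dividing by zero.
        double[] scaled = values.Select(v => range == 0 ? 0.0 : (v - min) / range).ToArray();
        return (scaled, min, max);
    }

    // Maps a network output in [0, 1] back to the column's original units.
    public static double Denormalize(double y, double min, double max) => min + y * (max - min);
}
```

Keep the `Min` and `Max` of each output column so that network estimates can be converted back to the original units. Values outside the training range map outside [0, 1], which a sigmoid output cannot reach.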

This article also deals only with time-independent problems, in which each training sample is independent of the others. Problems such as automatic control are excluded from this solution. For those problems, the network must be configured with input vectors that represent the object's state at step n, the control vectors, and the time elapsed between state n and state n+1; the output vector must be the object's state at step n+1.
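Although such problems are outside the scope of this article, the sample layout just described can be sketched as follows. `ControlSamples.Build` is a hypothetical helper; it assumes states, control vectors and timestamps are stored as parallel lists indexed by step n.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class ControlSamples
{
    // Builds (input, output) pairs for a time-dependent control problem:
    // input = state(n) ++ control(n) ++ [t(n+1) - t(n)], output = state(n+1).
    public static List<(double[] Input, double[] Output)> Build(
        IReadOnlyList<double[]> states,
        IReadOnlyList<double[]> controls,
        IReadOnlyList<double> times)
    {
        if (states.Count != controls.Count || states.Count != times.Count)
            throw new ArgumentException("states, controls and times must have the same length");

        var samples = new List<(double[] Input, double[] Output)>();
        for (int n = 0; n + 1 < states.Count; n++)
        {
            double elapsed = times[n + 1] - times[n];
            double[] input = states[n].Concat(controls[n]).Append(elapsed).ToArray();
            samples.Add((input, states[n + 1]));
        }
        return samples;
    }
}
```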

Using the Code

The BrainsProcessorBase Class

This class defines and implements methods and properties shared by the three types of ANN processors: Standalone, Master, and Slave.

Creating the ANNs

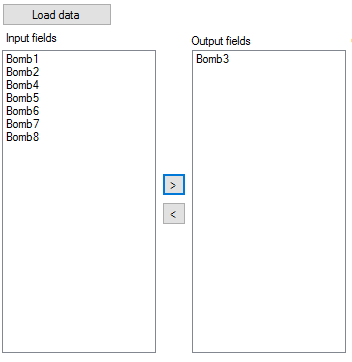

The first step in creating the ANNs is to load the data to work with and select the output parameters. In this article, we use data from a water supply system with eight pumps installed at different locations and elevations, each with different characteristics. We only use the daily volume of water supplied. The idea is to determine the influence of the system on each pump and to generate ANNs capable of estimating the supply of one pump from the data of all the remaining pumps. For a complete sample, we need the supplied-water data of every pump for that day.
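Turning the daily records into training samples for one pump could look like the sketch below. It assumes the CSV has already been parsed into one `double[]` per day with one column per pump, and that a missing reading is stored as `double.NaN`; `PumpSamples.Build` is a hypothetical helper, not part of the article's code.

```csharp
using System.Collections.Generic;
using System.Linq;

public static class PumpSamples
{
    // Each row holds one day's supplied volume for every pump (NaN = missing).
    // Inputs are all pumps except `target`; the output is the target pump.
    public static List<(double[] Input, double Output)> Build(IEnumerable<double[]> days, int target)
    {
        var samples = new List<(double[] Input, double Output)>();
        foreach (var day in days)
        {
            // Skip incomplete days: a sample needs every pump's value.
            if (day.Any(double.IsNaN)) continue;

            double[] input = day.Where((value, column) => column != target).ToArray();
            samples.Add((input, day[target]));
        }
        return samples;
    }
}
```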

Next, we select the number and sizes of the hidden layers, for example:

This generates structures with one, two, and three hidden layers, such as 10-10-10 or 15-13-6. The method that builds these combinations, named Combox, looks like this:

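```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Windows.Forms;

// Method of the form that hosts listBox4. Each ListBox entry is a "min-max"
// range of neuron counts for one hidden layer, e.g. "10-15".
public List<int[]> Combox(ListBox listBox4)
{
    // Structures built so far, encoded as strings such as "_10_13".
    var l = new List<string>();

    // Parse every "min-max" entry into int[] { min, max }.
    var data = listBox4.Items.Cast<object>()
        .Select(i => i.ToString().Split('-').Select(int.Parse).ToArray())
        .ToList();

    bool firstRange = true;
    foreach (var item in data)
    {
        var lx = new List<string>();

        for (int i = item[0]; i <= item[1]; i++)
        {
            string a = "_" + i;

            if (firstRange)
            {
                // First range: one-layer structures.
                l.Add(a);
            }
            else
            {
                // Later ranges: append size i to every structure built so far.
                foreach (var itemx in l)
                    lx.Add(itemx + a);
            }
        }

        // Keep the existing (shorter) structures and add the extended ones.
        l.AddRange(lx);
        firstRange = false;
    }

    // Remove duplicates and decode "_10_13" back into int[] { 10, 13 }.
    return l.Distinct()
        .Select(i => i.Split(new[] { "_" }, StringSplitOptions.RemoveEmptyEntries)
                      .Select(int.Parse).ToArray())
        .ToList();
}
```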
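To experiment with the combination logic without a Windows Forms `ListBox`, the following sketch takes the range strings directly. `LayerCombinations.CombineRanges` is a hypothetical name, not part of the article's code; it is meant to mirror what `Combox` returns, including the fact that each later range is also appended to shorter structures, so the ranges 1-1, 2-2 and 3-3 produce the structures 1, 1-2, 1-3 and 1-2-3.

```csharp
using System.Collections.Generic;
using System.Linq;

public static class LayerCombinations
{
    // Hypothetical WinForms-free variant of Combox: takes "min-max" strings,
    // one per hidden layer, and returns the same structures in the same order.
    public static List<int[]> CombineRanges(IEnumerable<string> ranges)
    {
        var structures = new List<int[]>();
        bool firstRange = true;

        foreach (var range in ranges)
        {
            int[] bounds = range.Split('-').Select(int.Parse).ToArray();
            var extended = new List<int[]>();

            for (int size = bounds[0]; size <= bounds[1]; size++)
            {
                if (firstRange)
                    structures.Add(new[] { size });              // one-layer structures
                else
                    foreach (var s in structures)                // append to all previous
                        extended.Add(s.Append(size).ToArray());
            }

            structures.AddRange(extended);
            firstRange = false;
        }

        // Same de-duplication as Distinct() on Combox's encoded strings,
        // keeping the first occurrence of each structure.
        return structures
            .GroupBy(s => string.Join("-", s))
            .Select(g => g.First())
            .ToList();
    }
}
```

For example, `CombineRanges(new[] { "1-2", "3-4" })` returns the structures 1, 2, 1-3, 2-3, 1-4 and 2-4, in that order.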

Standalone Mode

In this mode, the program generates all the structures, then trains and tests each of them. In each iteration, it keeps the best half according to the selection criterion: the product of the mean squared error and the sum of the absolute differences between the computed results and the verification sample.
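The selection criterion above can be written as a small function. This is a sketch under assumptions: it takes one output value per verification sample (as in the pump example), and the class name `SelectionScore` is hypothetical. The Background section describes the second factor as an average rather than a sum; since every network is evaluated on the same verification set, the two differ only by the constant factor n and produce the same ranking.

```csharp
using System;

public static class SelectionScore
{
    // Score = MSE * sum of |computed - expected|; lower is better.
    public static double Compute(double[] computed, double[] expected)
    {
        if (computed.Length == 0 || computed.Length != expected.Length)
            throw new ArgumentException("Both vectors must be non-empty and the same length.");

        double squared = 0, absolute = 0;
        for (int k = 0; k < computed.Length; k++)
        {
            double diff = computed[k] - expected[k];
            squared += diff * diff;
            absolute += Math.Abs(diff);
        }

        double mse = squared / computed.Length;
        return mse * absolute;
    }
}
```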

We use data from this polynomial function, with the parameters shown in the following image:

The results of the iterations are displayed as follows:

Cluster Master Mode

In this mode, the program can be configured as the master of the cluster or as a slave serving a master instance. The process is configured on each computer to be included in the cluster, and the operating mode is selected. Slave instances cannot design ANN architectures; they only receive groups of ANNs, the training set, and the verification set from the master instance. They run n training epochs and send the results back to the master instance, which, once all ANNs have been trained and evaluated, selects the best half of the group, redistributes the remaining ANNs, increases the number of training epochs, and repeats the process until one ANN is the winner.

The cluster parameters of the master instance can be configured as follows:

Cluster Slave Mode

In this mode, the instance connects to a master instance and waits for instructions. It receives the training and verification sets and a group of ANNs, trains all of the ANNs, and sends the results back to the master instance.
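A slave's job can be sketched as a pure function from a work package to a list of results. The message shapes (`Sample`, `WorkPackage`, `WorkResult`) and the `TrainAndScore` delegate are hypothetical; the article does not show its wire format or training API, and networking, serialization and the trained weights themselves are left out.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical message shapes; the article does not show its wire format.
public record Sample(double[] Input, double[] Output);
public record WorkPackage(
    IReadOnlyList<int[]> Structures,
    IReadOnlyList<Sample> TrainingSet,
    IReadOnlyList<Sample> VerificationSet,
    int Epochs);
public record WorkResult(int[] Structure, double Score);

// Stand-in for "train this structure for n epochs and score it on the verification set".
public delegate double TrainAndScore(
    int[] structure, IReadOnlyList<Sample> training, IReadOnlyList<Sample> verification, int epochs);

public static class SlaveWorker
{
    // Trains every ANN in the package in parallel and returns one result per
    // structure, in the order the master sent them.
    public static List<WorkResult> Process(WorkPackage package, TrainAndScore trainAndScore) =>
        package.Structures
            .AsParallel()
            .AsOrdered()
            .Select(s => new WorkResult(
                s, trainAndScore(s, package.TrainingSet, package.VerificationSet, package.Epochs)))
            .ToList();
}
```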

The Distribution Process

At the start of the training process, the master instance queries every registered slave instance for its number of available cores and the maximum speed of its CPU. It then assigns each slave a number of ANNs, i, based on:

Slave instances for which i is less than one are assigned a single ANN per iteration; every other slave instance is assigned floor(i) ANNs. The master instance trains all the remaining ANNs itself.
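The assignment rule can be sketched as below. The article's formula for i is not reproduced here, so this sketch ASSUMES i is the total number of ANNs scaled by each machine's share of cores × maximum CPU speed, with the master's own capacity included in the total; the `Worker` record and `Distribution.Assign` are hypothetical names. It also caps each share so slaves are never assigned more ANNs than remain, a safeguard the article does not mention.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical description of a machine as reported to the master.
public record Worker(string Name, int Cores, double MaxSpeedGHz);

public static class Distribution
{
    // Returns how many ANNs each slave trains; the master keeps the rest.
    // ASSUMPTION: i = total * (cores * speed) / sum of (cores * speed) over
    // the master and all slaves.
    public static (Dictionary<string, int> PerSlave, int ForMaster) Assign(
        int totalAnns, Worker master, IReadOnlyList<Worker> slaves)
    {
        double totalCapacity = master.Cores * master.MaxSpeedGHz
            + slaves.Sum(s => s.Cores * s.MaxSpeedGHz);
        if (totalCapacity <= 0)
            throw new ArgumentException("Total capacity must be positive.");

        var perSlave = new Dictionary<string, int>();
        int remaining = totalAnns;
        foreach (var slave in slaves)
        {
            double i = totalAnns * slave.Cores * slave.MaxSpeedGHz / totalCapacity;
            // Rule from the article: i < 1 gets one ANN, otherwise floor(i).
            int share = i < 1 ? 1 : (int)Math.Floor(i);
            // ASSUMPTION: never hand out more ANNs than are left.
            share = Math.Min(share, remaining);
            perSlave[slave.Name] = share;
            remaining -= share;
        }
        return (perSlave, remaining);
    }
}
```

With 10 ANNs, a master with 4 cores at 2.0 GHz, slave A with 8 cores at 3.0 GHz and slave B with 1 core at 1.0 GHz, slave A gets floor(7.27) = 7, slave B gets 1 (its i is about 0.30), and the master keeps the remaining 2.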
