What’s the Problem with LINQ?

As Joe Duffy outlines in The ‘premature optimization is evil’ myth, LINQ introduces inefficiencies in the form of hidden allocations:

To take an example of a technology that I am quite supportive of, but that makes writing inefficient code very easy, let’s look at LINQ-to-Objects. Quick, how many inefficiencies are introduced by this code?

    int[] Scale(int[] inputs, int lo, int hi, int c) {
        var results = from x in inputs
                      where (x >= lo) && (x <= hi)
                      select (x * c);
        return results.ToArray();
    }

Good question! Who knows? Probably only Jon Skeet can tell just by looking at the code. To fully understand the problem, we need to look at what the compiler is doing for us behind the scenes. The code above ends up looking something like this:

    private int[] Scale(int[] inputs, int lo, int hi, int c)
    {
        <>c__DisplayClass0_0 CS<>8__locals0;
        CS<>8__locals0 = new <>c__DisplayClass0_0();
        CS<>8__locals0.lo = lo;
        CS<>8__locals0.hi = hi;
        CS<>8__locals0.c = c;
        return inputs
            .Where<int>(new Func<int, bool>(CS<>8__locals0.<Scale>b__0))
            .Select<int, int>(new Func<int, int>(CS<>8__locals0.<Scale>b__1))
            .ToArray<int>();
    }

    [CompilerGenerated]
    private sealed class <>c__DisplayClass0_0
    {
        public int c;
        public int hi;
        public int lo;

        internal bool <Scale>b__0(int x)
        {
            return ((x >= this.lo) && (x <= this.hi));
        }

        internal int <Scale>b__1(int x)
        {
            return (x * this.c);
        }
    }

As you can see, we have an extra class allocated (the closure that captures lo, hi and c) and two Func delegates to perform the actual logic. But this doesn’t even account for the overhead of the ToArray() call, using iterators and calling LINQ methods via dynamic dispatch. As an aside, if you are interested in finding out more about closures, it’s worth reading Jon Skeet’s excellent blog post “The Beauty of Closures”.

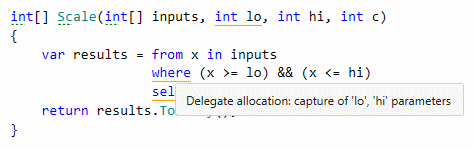

So there’s a lot going on behind the scenes, but it is actually possible to be shown these hidden allocations directly in Visual Studio. If you install the excellent Heap Allocation Viewer plug in for Resharper, you will get the following tool-tip right in the IDE:

As useful as it is though, I wouldn’t recommend turning this on all the time as seeing all those red lines under your code tends to make you a bit paranoid!!

Now, before we look at some ways you can reduce the impact of LINQ, it’s worth pointing out that LINQ itself does some pretty neat tricks (HT to Oren Novotny for pointing this out to me). For instance, the common pattern of having a Where(..) followed by a Select(..) is optimised so that only a single iterator is used, not two as you would expect. Likewise, two Select(..) statements in a row are combined, so that only one iterator is needed.
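To make the idea of a combined iterator concrete, here is a minimal sketch of a single iterator that both filters and projects. The WhereSelect helper is hypothetical and written purely for illustration; it is not the code LINQ uses internally, just the general shape of the optimisation.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class FusedIteratorSketch
{
    // Hypothetical helper: one iterator that filters and projects in a single pass,
    // instead of chaining a Where iterator into a separate Select iterator.
    public static IEnumerable<TResult> WhereSelect<TSource, TResult>(
        IEnumerable<TSource> source,
        Func<TSource, bool> predicate,
        Func<TSource, TResult> selector)
    {
        foreach (var item in source)
        {
            if (predicate(item))
                yield return selector(item);
        }
    }

    public static void Main()
    {
        int[] inputs = { 1, 5, 10, 20, 25, 30 };

        var fused = WhereSelect(inputs, x => x % 10 == 0, x => x + 5).ToArray();
        var linq = inputs.Where(x => x % 10 == 0).Select(x => x + 5).ToArray();

        Console.WriteLine(string.Join(", ", fused));  // 15, 25, 35
        Console.WriteLine(fused.SequenceEqual(linq)); // True
    }
}
```

Both versions produce the same sequence; the difference is only in how many iterator objects sit between the source and the consumer.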

A Note on Micro-optimizations

Whenever I write a post like this, I inevitably get comments complaining that it’s a “premature optimization” or something similar. So this time, I just want to add the following caveat:

I am not in any way advocating that LINQ is a bad thing. I think it’s a fantastic feature of the C# language!

Also:

Please do not re-write any of your code based purely on the results of some micro-benchmarks!

As I explain in one of my talks, you should always profile first and then benchmark. If you do it the other way round, there is a temptation to optimize where it’s not needed.

Having said all that, the C# Compiler (Roslyn) coding guidelines do actually state the following:

Avoid allocations in compiler hot paths:

- Avoid LINQ.
- Avoid using foreach over collections that do not have a struct enumerator.
- Consider using an object pool. There are many usages of object pools in the compiler to see an example.

Which is slightly ironic considering this advice comes from the same people who conceived and designed LINQ in the first place! But as outlined in the excellent talk “Essential Truths Everyone Should Know about Performance in a Large Managed Codebase”, they found LINQ has a noticeable cost.
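The struct enumerator guideline is easy to miss, so here is a small sketch of what it refers to. List<int> exposes a value-type enumerator, so a foreach over the concrete type needs no heap allocation, whereas the same list seen through IEnumerable<int> typically hands back a boxed enumerator. The method names are made up for this example.

```csharp
using System;
using System.Collections.Generic;

public static class StructEnumeratorSketch
{
    public static int SumList(List<int> items)
    {
        int total = 0;
        // foreach binds to List<int>.GetEnumerator(), which returns the
        // struct List<int>.Enumerator, so no enumerator object is allocated.
        foreach (var item in items)
            total += item;
        return total;
    }

    public static int SumInterface(IEnumerable<int> items)
    {
        int total = 0;
        // foreach binds to IEnumerable<int>.GetEnumerator(), which returns an
        // IEnumerator<int>; for a List<int> the struct enumerator is typically boxed.
        foreach (var item in items)
            total += item;
        return total;
    }

    public static void Main()
    {
        var items = new List<int> { 1, 2, 3, 4 };
        Console.WriteLine(typeof(List<int>.Enumerator).IsValueType); // True
        Console.WriteLine(SumList(items));      // 10
        Console.WriteLine(SumInterface(items)); // 10
    }
}
```

The results are identical; only the allocation behaviour differs, which is why the guideline is limited to hot paths.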

Note: Hot paths are another way of talking about the critical 3% from the famous Donald Knuth quote:

We should forget about small efficiencies, say about 97% of the time: premature optimization is the root of all evil. Yet we should not pass up our opportunities in that critical 3%.

RoslynLinqRewrite and LinqOptimizer

Now clearly, we could manually re-write any LINQ statement into an iterative version if we were concerned about performance, but wouldn’t it be much nicer if there were tools that could do the hard work for us? Well, it turns out there are!
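For reference, a manual rewrite of the Scale method from earlier might look something like the sketch below. This is just one possible hand-written version (a two-pass loop that sizes the result array exactly), not the output of either tool.

```csharp
using System;

public static class ManualScale
{
    public static int[] Scale(int[] inputs, int lo, int hi, int c)
    {
        // First pass: count the matches so the result array can be sized exactly.
        int count = 0;
        for (int i = 0; i < inputs.Length; i++)
        {
            if (inputs[i] >= lo && inputs[i] <= hi)
                count++;
        }

        // Second pass: fill the result array. The only allocation is the array itself;
        // there is no closure class, no delegate and no iterator.
        var results = new int[count];
        int next = 0;
        for (int i = 0; i < inputs.Length; i++)
        {
            int x = inputs[i];
            if (x >= lo && x <= hi)
                results[next++] = x * c;
        }
        return results;
    }

    public static void Main()
    {
        var scaled = Scale(new[] { 1, 5, 10, 15, 20 }, 5, 15, 2);
        Console.WriteLine(string.Join(", ", scaled)); // 10, 20, 30
    }
}
```

It is noticeably longer than the three-line query, which is exactly the trade-off the tools below try to remove.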

First up is RoslynLinqRewrite, as per the project page:

This tool compiles C# code by first rewriting the syntax trees of LINQ expressions using plain procedural code, minimizing allocations and dynamic dispatch.

Also available is the Nessos LinqOptimizer which is:

An automatic query optimizer-compiler for Sequential and Parallel LINQ. LinqOptimizer compiles declarative LINQ queries into fast loop-based imperative code. The compiled code has fewer virtual calls and heap allocations, better data locality and speedups of up to 15x (Check the Performance page).

At a high level, the main differences between them are:

RoslynLinqRewrite

- works at compile time (but prevents incremental compilation of your project)
- no code changes, except if you want to opt out via [NoLinqRewrite]

LinqOptimizer

- works at run-time
- forces you to add AsQueryExpr().Run() to LINQ methods
- optimises Parallel LINQ

In the rest of the post, we will look at the tools in more detail and analyze their performance.

Comparison of LINQ Support

Obviously, before choosing either tool, you want to be sure that it’s actually going to optimize the LINQ statements you have in your code base. However, neither tool supports the whole range of available LINQ Query Expressions, as the table below illustrates:

| Method | RoslynLinqRewrite | LinqOptimizer | Both? |
| --- | --- | --- | --- |
| Select | ✓ | ✓ | Yes |
| Where | ✓ | ✓ | Yes |
| Reverse | ✓ | ✗ | |
| Cast | ✓ | ✗ | |
| OfType | ✓ | ✗ | |
| First/FirstOrDefault | ✓ | ✗ | |
| Single/SingleOrDefault | ✓ | ✗ | |
| Last/LastOrDefault | ✓ | ✗ | |
| ToList | ✓ | ✓ | Yes |
| ToArray | ✓ | ✓ | Yes |
| ToDictionary | ✓ | ✗ | |
| Count | ✓ | ✓ | Yes |
| LongCount | ✓ | ✗ | |
| Any | ✓ | ✗ | |
| All | ✓ | ✗ | |
| ElementAt/ElementAtOrDefault | ✓ | ✗ | |
| Contains | ✓ | ✗ | |
| ForEach | ✓ | ✓ | Yes |
| Aggregate | ✗ | ✓ | |
| Sum | ✗ | ✓ | |
| SelectMany | ✗ | ✓ | |
| Take/TakeWhile | ✗ | ✓ | |
| Skip/SkipWhile | ✗ | ✓ | |
| GroupBy | ✗ | ✓ | |
| OrderBy/OrderByDescending | ✗ | ✓ | |
| ThenBy/ThenByDescending | ✗ | ✓ | |
| Total | 22 | 18 | 6 |

Finally, we get to the main point of this blog post: how do the different tools perform, and do they achieve their stated goals of optimizing LINQ queries and reducing allocations?

Let’s start with a very common scenario, using LINQ to filter and map a sequence of numbers, i.e., in C#:

    var results = items.Where(i => i % 10 == 0)
                       .Select(i => i + 5);

We will compare the LINQ code above with the 2 optimised versions, plus an iterative form that will serve as our baseline. Here are the results:

(Full benchmark code)

The first thing that jumps out is that the LinqOptimizer version is allocating a lot of memory compared to the others. To see why this is happening, we need to look at the code it generates, which looks something like this:

    IEnumerable<int> LinqOptimizer(int[] input)
    {
        var collector = new Nessos.LinqOptimizer.Core.ArrayCollector<int>();
        for (int counter = 0; counter < input.Length; counter++)
        {
            var i = input[counter];
            if (i % 10 == 0)
            {
                var result = i + 5;
                collector.Add(result);
            }
        }
        return collector;
    }

The issue is that, by default, ArrayCollector allocates an int[1024] as its backing storage, hence the excessive allocations!
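To see why a fixed initial buffer hurts for small results, consider the following sketch. FixedBufferCollector is a hypothetical stand-in written for this example and is not the actual Nessos ArrayCollector; the only detail taken from above is the default capacity of 1024.

```csharp
using System;

// Hypothetical stand-in for a collector with a fixed initial buffer;
// NOT the real Nessos.LinqOptimizer.Core.ArrayCollector implementation.
public sealed class FixedBufferCollector
{
    private int[] _buffer;
    private int _count;

    public FixedBufferCollector(int initialCapacity = 1024)
    {
        // The whole buffer is allocated up front, however few items are added later.
        _buffer = new int[initialCapacity];
    }

    public int Count => _count;
    public int Capacity => _buffer.Length;

    public void Add(int value)
    {
        if (_count == _buffer.Length)
            Array.Resize(ref _buffer, _buffer.Length * 2);
        _buffer[_count++] = value;
    }
}

public static class CollectorDemo
{
    public static void Main()
    {
        var collector = new FixedBufferCollector();
        for (int i = 0; i < 100; i++)
        {
            if (i % 10 == 0)
                collector.Add(i + 5);
        }

        // 10 results, but the buffer still holds 1024 ints (about 4 KB of element storage).
        Console.WriteLine($"{collector.Count} items, capacity {collector.Capacity}");
    }
}
```

For a query that only yields a handful of items, almost all of that up-front buffer is wasted, and the cost is paid on every call.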

By contrast, RoslynLinqRewrite optimizes the code like so:

    IEnumerable<int> RoslynLinqRewriteWhereSelect_ProceduralLinq1(int[] _linqitems)
    {
        if (_linqitems == null)
            throw new System.ArgumentNullException();
        for (int _index = 0; _index < _linqitems.Length; _index++)
        {
            var _linqitem = _linqitems[_index];
            if (_linqitem % 10 == 0)
            {
                var _linqitem1 = _linqitem + 5;
                yield return _linqitem1;
            }
        }
    }

Which is much more sensible! By using the yield keyword, it gets the compiler to do the hard work and so doesn’t have to allocate a temporary list to store the results in. This means that it is streaming the values, in the same way the original LINQ code does.
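The streaming behaviour is easy to observe with a small experiment. The sketch below uses a made-up FilterAndMap iterator with the same shape as the generated code, plus a Visited counter (added purely for illustration) that records how many input elements have been examined.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class StreamingSketch
{
    // Illustration only: counts how many input elements the iterator has examined.
    public static int Visited;

    public static IEnumerable<int> FilterAndMap(int[] items)
    {
        for (int index = 0; index < items.Length; index++)
        {
            Visited++;
            var item = items[index];
            if (item % 10 == 0)
                yield return item + 5;
        }
    }

    public static void Main()
    {
        int[] items = { 10, 11, 20, 21, 30 };

        var query = FilterAndMap(items);
        Console.WriteLine(Visited); // 0: nothing runs until enumeration starts

        int first = query.First();
        Console.WriteLine(first);   // 15
        Console.WriteLine(Visited); // 1: enumeration stopped after the first match
    }
}
```

A collector-based version would have walked the whole input and stored every result before the caller saw the first one.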

Lastly, we’ll look at one more example, this time using a Count() expression, i.e.,

    items.Where(i => i % 10 == 0)
         .Count();

Here, we can clearly see that both tools significantly reduce the allocations compared to the original LINQ code:

(Full benchmark code)
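As a rough guide to what an allocation-free version of this query looks like, here is a hand-written loop that computes the same count. It is a sketch of the general approach, not the exact code either tool emits, and the method name is invented for the example.

```csharp
using System;
using System.Linq;

public static class CountSketch
{
    // A plain loop: no delegate, no iterator, no intermediate collection.
    public static int CountMultiplesOfTen(int[] items)
    {
        int count = 0;
        for (int i = 0; i < items.Length; i++)
        {
            if (items[i] % 10 == 0)
                count++;
        }
        return count;
    }

    public static void Main()
    {
        int[] items = Enumerable.Range(0, 100).ToArray();
        Console.WriteLine(CountMultiplesOfTen(items));            // 10
        Console.WriteLine(items.Where(i => i % 10 == 0).Count()); // 10
    }
}
```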

Future Options

However, even though using RoslynLinqRewrite or LinqOptimizer is pretty painless, we still have to install a third-party library into our project.

Wouldn’t it be even nicer if the .NET compiler, JITter and/or runtime did all the optimizations for us?

Well, it’s certainly possible, as Joe Duffy explains in his QCon New York talk, and work has already started, so maybe we won’t have to wait too long!



The post Optimizing LINQ first appeared on my blog Performance is a Feature!