In this article, R is used to explore the complexity of time series generated by a simple process.

Introduction

The objective of this article, in addition to making some math philosophical reflections about complexity and randomness, is to give a brief revision of some useful techniques to analyze complex time series, using the open source free statistical program R.

If you prefer a Spanish version of this article, you can visit my blog.

Background

There are some very simple math models on which, varying only an unique parameter, we can obtain time series which present a wide game of dynamics, from stationary to chaotic, passing through some degrees of periodicity.

A typical example of such models is the logistic equation, a very simple iterated function whose mathematical expression is as follows:

Xn+1 = UXn(1-Xn)

The range of values of this equation is the (0, 1) open interval, while the U parameter takes values from the (0, 4) open interval. Starting at values near 0 for the U parameter, the generated time series is completely stationary, taking a single value, but, when U reaches the value of 3, a critical point, suddenly becomes a periodic one, taking two different values alternatively.

As the U parameter value raises, up to approximately 3.5699, the number of values (i.e., the period) goes doubling and, when this limit value is reached, again a critical point is reached and the dynamics of the time series becomes chaotic.

The degree of chaoticity raises with the U parameter (except for some exceptional points of periodic islands), up to a value of U = 4, point at which the time series diverges to infinity.

But we can go one step beyond the chaos and try to reach the randomness, using the same deterministic processes, as you will see at the end of the article.

Another simple system that we can use to study these kind of processes is the triangular application, expressed this way:

Xn+1 = U(1 – 2|0.5 - Xn|)

In this equation, the U parameter takes values in the (0, 1) open interval.

Using the Code

The sample code consists of some R functions contained in the source file beyond-chaos.R

#########################################################################

#

# Logistic equation

# u: parameter

# length: number of terms in the series

# initial: initial value for the series

#

#########################################################################

fLog<-function(u,length,initial) {

c<-1:(length+300);

c[1] <- initial;

for (i in 2:(length+300)) {

c[i]<-u*c[i-1]*(1-c[i-1]);

}

return(c[-(1:300)]);

}

#########################################################################

#

# triangular application

# u: parameter

# length: number of terms in the series

# initial: initial value for the series

#

#########################################################################

fTriangular<-function(u,length,initial) {

c<-1:(length+300);

c[1] <- initial;

for (i in 2:(length+300)) {

c[i]<-u*(1-2*abs(0.5-c[i-1]));

}

return(c[-(1:300)]);

}

#########################################################################

#

# Normal random time series promediating chaotic series

# u1: parameter for the seeds series

# u2: parameter for the promediated series

# seed: initial value for the seed series

# length: number of terms in the returned series

# count: number of series to promediate

# fr: function to promediate (fLog or fTriangular)

#

#########################################################################

randFunc<-function(u1,u2,seed,length,count,fr) {

v<-fr(u1,count,seed);

x<-rep(0,length);

for(i in 1:count) {

x = x+fr(u2,length,v[i]);

}

return(x/count);

}

#########################################################################

#

# Logistic helper for web diagram

# u: parameter

# x: value of Xn

#

#########################################################################

fWebLog<-function(u,x) {

return (u*x*(1-x));

}

#########################################################################

#

# Triangular helper for web diagram

# u: parameter

# x: value of Xn

#

#########################################################################

fWebTriangular<-function(u,x) {

return (u*(1-2*abs(0.5-x)));

}

#########################################################################

#

# Draws a web diagram

# p: parameter

# steps: number of terms of the time series to draw

# fw: function to use (fWebLog or fWebTriangular)

#

#########################################################################

webDiagram<-function(p, steps=500, fw) {

xn<-seq(0,1,length.out=1000);

xn1<-sapply(xn,fw,u=p);

plot(xn,xn1,type='l',col="red");

lines(xn,xn,lty=4);

x0<-runif(1);

xn<-x0;

xn1<-0;

for (i in 1:steps) {

xf<-fw(p, x0);

xn<-c(xn,x0);

xn1<-c(xn1,xf);

xn<-c(xn,xf);

xn1<-c(xn1,xf);

x0<-xf;

}

lines(xn,xn1);

}

#########################################################################

#

# draws a Feyenbaun diagram of bifurcation

# start: first value of the parameter

# end: final value of the parameter

# step: increment in the parameter

# fw: function to use (fLog or fTrangular)

#

#########################################################################

feigenbaum<-function(start,end,step,fw) {

pu<-seq(start,end,by=step);

orbit<-sapply(pu,fw,initial=0.1,length=300);

r<-sort(rep(pu,300));

plot(r,orbit,pch=".");

}

#########################################################################

#

# draws a recurrencePlot for the RP property of the result of crqa function

# rp: RP property of the object (recurrence plot)

#

#########################################################################

recurrencePlot<-function(rp) {

x<-rep(1:(dim(rp)[1]),dim(rp)[2])[as.vector(t(rp))==T];

y<-as.vector(sapply(1:(dim(rp)[2]),rep,dim(rp)[1]))[as.vector(t(rp))==T];

plot(x,y,pch=".",pty="s",asp=1);

}

#########################################################################

#

# Helper to build a dataframe with delayed time series to train

# recurrent neural networks

# data: original time series

# lags: number of delayed versions of the original time series

#

#########################################################################

lagTS<-function(ts,lags) {

y<-as.zoo(ts);

for (l in 1:lags) {

y<-cbind(y,Lag(y,k=l));

}

return(na.omit(y));

}

Once extracted from the zip file, simply load it with the R command source:

source("beyond-chaos.R")

Exploring Chaos

The stationary and periodic zone of the dynamics of these systems don't have none interesting property to examine, so, take into account only that they are possible states of the system for some values of the U parameter, and let's go to take a more detailed look at the chaotic zone.

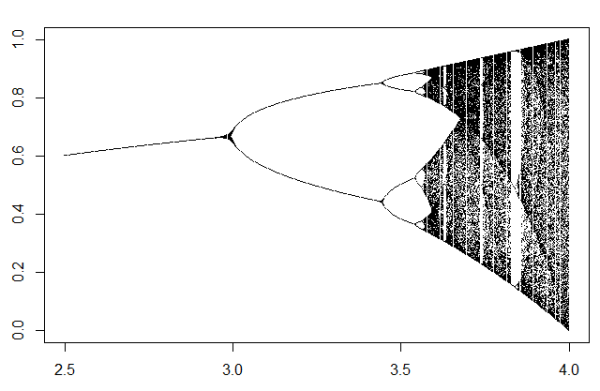

In order to have an idea of the behavior of the system along with the variation of the parameter, we can draw a Feigenbaum diagram, where you can clearly see the bifurcations of the values of the series and the growth of the set of values until the chaotic zone.

To do this, use the feigenbaum function (adapted from this article in R-bloggers). The parameters are the start and end of the interval of values of the parameter U, the step to increment the parameter and the function you want to use to generate the data.

This function can be one of two, fLog, to draw a bifurcation diagram for the logistic equation, or fTriangular, to draw the corresponding diagram for the triangular application. These two functions calculate 300 extra terms at the start of the series, which are discarded in order to eliminate transient dynamics states.

For example, for the logistic equation:

feigenbaum(2.5,4,0.003,fLog)

The degree of complexity of the dynamics can be checked by using a graphic tool called web diagram. This kind of diagram is built in this way: first, we draw the curve of the function in the full interval of values, in this case between 0 and 1, then, we draw the line y = x. We take any one value in the x axis and we draw a perpendicular line from here to the curve of the function. From here, a new horizontal line to the y = x line, and then, from here, again a vertical up to the curve. The process is repeated certain number of times.

To draw this diagram, you can use the webDiagram function. The parameters are the U parameter of the given function, the number of steps to draw, and a function to get the values. You can use the fWebLog and fWebTriangular functions to draw web diagrams for the logistic or the triangular functions.

This is, for example, the web diagram of a periodic series from the logistic equation:

webDiagram(3.4,fw=fWebLog)

And this is another for the chaotic zone of the triangular application:

webDiagram(0.99,fw=fWebTriangular)

If you want to know more on web diagrams, you can visit my blog.

The first interesting property of the complex systems is their sensitivity to initial conditions. As those systems are deterministic ones, if you generate some time series, starting always with exactly the same initial value, you will obtain all times exactly the same series. But if you vary, even only a little, the initial value, then you will obtain completely different time series.

For example, look what happens if you draw two time series with the logistic equation starting with an initial value of 0.1 and another one of 0.1001:

plot(fTriangular(0.95,100,0.1),type="l")

lines(fTriangular(0.95,100,0.1001),col="red")

In this case, I have used the triangular application, with the fTriangular function, with an U parameter of 0.95 and generating 100 terms of the series. You can obtain symilar results using the fLog function with an U parameter of 3.98, by example, to get a chaotic series.

This sensitivity to initial conditions makes the states of the natural complex systems virtually irreproducible, and limits a lot the possibilities of prediction, except for a very short time.

But the deterministic nature of this processes can be checked, by example, by using neural networks to try to fit the function that generates the time series. For example, I will use recurrent Elman neural networks, with the RSNNS R package.

The procedure I have followed to fit the time series with the neural network is to train it with a data set formed with some columns with the same time series delayed one term on each column. This way, on each row, we have the current term of the series and the n previous terms. To do this, I use the lagTS function, which accepts the time series and the number of previous terms to build, and return a dataframe, whose first column contains the series to predict. (More about recurrent neural networks here, in my blog).

require(RSNNS)

require(quantmod)

tsl<-fLog(3.98,1000,0.2)

y<-lagTS(as.ts(tsl),10)

train<-1:900

inputs<-y[,2:11]

outputs<-y[,1]

fit<-elman(inputs[train],outputs[train],size=c(3,2),

learnFuncParams=c(0.1),maxit=1000)

pred<-predict(fit,inputs[-train])

I use the first 900 rows to train the network, an then the trained network to make a prediction with the 90 remaining values. This is the 90 terms series to predict:

plot(as.vector(outputs[-train]),type="l")

And this is the series that the neural network predicts, overlapped over the original, in red:

lines(pred,col="red")

Seems that the neural network can make a perfect prediction on a chaotic system, but this is not the case. If you try to predict further than the next term of the series, the result quickly loses accuracy. But the important thing to remark is that, due to the deterministic character of the process, the network is able to approximate very well the original function. Let's look at what happens with the more disordered series you can have, the random ones.

You can use a function like rnorm to generate a random series with normal distribution, by example, but these functions, usually, are pseudo-random number generators, and they use also deterministic processes to obtain the values. Instead of this, I will get a truly random time series from this website, which uses atmospheric noise to generate the series. (Really, you will get almost the same results using a good pseudo random number generator, but this way is more strict.)

I have obtained a sample of atmospheric white noise with uniform distribution. In a wav sound file. So I will use the audio package to read it:

require(audio)

wv<-load.wave("soundfile.wav")

And then, I repeat the previous procedure, but first I normalize the data to values between 0 and 1, for the network to work better.

tsr<-(wv[1:1000] - min(wv[1:1000]))/(max(wv[1:1000])-min(wv[1:1000]))

y<-lagTS(as.ts(tsr),10)

inputs<-y[,2:11]

outputs<-y[,1]

fit<-elman(inputs[train],outputs[train],size=c(3,2),

learnFuncParams=c(0.1),maxit=1000)

pred<-predict(fit,inputs[-train])

plot(as.vector(outputs[-train]),type="l")

lines(pred,col="red")

Not so easy, not? Could we turn those chaotic systems into random ones?, How?

From Chaos to Randomness

Seems that we can't raise infinitely the complexity of those systems, since when the maximum value for the U parameter is reached, the system diverges to infinity.

Nothing to do by using only one series, but what if you use more than one? If you remember the limit central theorem, if you add some equally distributed variables, the final distribution tends to have normal distribution (i.e., random). You may use their sensitivity to initial conditions to produce a number of completely different time series using the same U parameter and then promediate them.

To do that, I have written the randFunc function, whose parameters are the following: u1 is the U parameter for a time series that I will use to generate the initial values, u2 is the U parameter of the N time series generated, seed is the initial value for the initial values, length the length of the final time series, count the number of series to promediate and, finally, fr is the function to use (fLog or fTriangular).

You can think that we need to promediate a lot of series to obtain a random one. Let's try it with only five:

r5<-randFunc(3.9,3.99,0.2,1000,5,fLog)

Now, we make some normality tests:

shapiro.test(r5)

W = 0.99781, p-value = 0.2119

qqnorm(r5)

qqline(r5)

Seems that's all OK, not? But, besides, I will try to find some traces of determinism in the generated series using a more sophisticated tool, the recurrence plot. I will use the crqa package to do this.

The recurrence plot is not a trivial stuff, so, if you want to know more about them, you can visit my blog. In order to have an idea of what I'm searching, let's look first at a recurrence plot for a deterministic system, using the logistic equation:

Require(crqa)

tsl<-as.ts(fLog(3.98,198,0.2))

rqa<-crqa(tsl,tsl,delay=1,embed=1,rescale=0,radius=0.03,

normalize=0,mindiagline=2,minvertline=2,side="both")

The returned rqa object has some properties with some values used to make recurrence analysis. One of them is RP, which holds the recurrence plot itself. I don't know a direct way to draw it, so, I have written the recurrencePlot function to do it. The call is as follows:

recurrencePlot(rqa$RP)

The key in this graph is that the diagonal lines, in addition of squared recognizable structures, are characteristic of the deterministic systems. There is a property of the rqa object called determinism rate or DET, its value for this series is:

rqa$DET

68.86727

Compare that with the recurrence plot of the random atmospheric noise:

tsl<-as.ts(wv[1:200])

rqa<-crqa(tsl,tsl,delay=1,embed=1,rescale=0,radius=0.05,

normalize=0,mindiagline=2,minvertline=2,side="both")

recurrencePlot(rqa$RP)

As you can see, the deterministic structures have disappeared, and the points are mainly isolated, instead of forming diagonal lines. The DET RQA measure is also much lower than in the previous case:

rqa$DET

9.7621

And what about the supposed random series obtained from the logistic equation? This is the corresponding recurrence plot. As you can see, there is no noticeable difference with that of the truly random time series:

tsl<-as.ts(r5[1:200])

rqa<-crqa(tsl,tsl,delay=1,embed=1,rescale=0,radius=0.01,

normalize=0,mindiagline=2,minvertline=2,side="both")

recurrencePlot(rqa$RP)

The DET measure is also as low as that of the random series:

<span style="line-height: 115%; font-family: "Courier New"; font-size: 11pt"><font color="#000000">rqa$DET

8.093995</font></span>

One of the conclusions that we can get of this is that the randomness of the data, or even their noise, can be only an illusion, due to a measurement procedure with not enough resolution to separate all the variables involved.

Taking that into account, together with the principle of parsimony, is there really randomness in nature? Or it is enough with the complexity to explain it, and we must consider randomness as the result of an insufficiency of our measuring instruments?

Thanks all for reading!!!