In the cloud era, everyone is fighting to speed up static resource loading. In recent years, this alone has driven the gradual adoption of CDNs, especially in rapidly expanding markets like China. I work at a company whose core business is image-sharing communities, so we rely heavily on image CDNs. This article uses two case studies to highlight must-have skills for server engineers working with CDNs. It covers CDN background and basic principles, distributed image storage, procedures and considerations for batch adding and switching CDNs, and CDN access fault analysis.

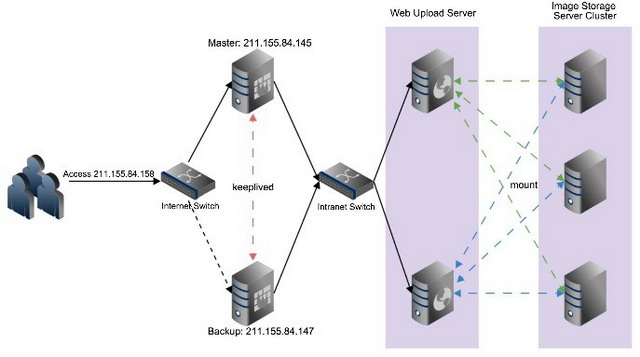

Everything in this article is based on my own experience.

Background and principles of CDN and distributed image storage

Before we start, let's have a quick look at the basic CDN image storage architecture:

The main principles of the above architecture can be illustrated in the diagram below:

In this architecture we aim to switch the image access traffic for a certain domain name (a.mengkang.net) to the CDN. Here’s a rundown of the procedure:

- First, we need to collect statistics on the original domain name's access logs to find the image addresses with high access frequencies (around 200,000 addresses for example). Then, we will hand these addresses over to the CDN provider.

- The CDN provider will then warm up its cache by crawling the resources at those 200,000 addresses.

- After the warm-up crawl is complete, we can change the domain name of a portion of the image addresses from a.mengkang.net to b.mengkang.net. Then, we need to point the b.mengkang.net CNAME record at the CDN server's domain name, such as b.mengkang.ccgslb.com.cn.

- Using wget, we will test access to the images under the b.mengkang.net domain name to see whether the CDN caches them.

- If the cache test is successful, we will switch some of the traffic from a.mengkang.net to b.mengkang.net. The O&M staff will monitor the back-to-source traffic situation and adjust the allocation of traffic accordingly.
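The first and last steps of the procedure above lend themselves to small scripts. The following is a minimal Python sketch, not our production tooling. It assumes the access logs use the common/combined log format, and the helper names `top_image_paths` and `pick_domain` are hypothetical. Hashing the path is one possible way to move a fixed share of traffic, chosen here so that a given image always maps to the same domain.

```python
import hashlib
import re
from collections import Counter

# Assumption: access logs use the common/combined format, where the request
# line appears in quotes, e.g. "GET /path/to/img.jpg HTTP/1.1".
REQUEST_RE = re.compile(r'"(?:GET|HEAD) (\S+) HTTP/[\d.]+"')
IMAGE_SUFFIXES = (".jpg", ".jpeg", ".png", ".gif", ".webp")


def top_image_paths(log_lines, limit=200_000):
    """Return the most frequently requested image paths, hottest first."""
    counts = Counter()
    for line in log_lines:
        match = REQUEST_RE.search(line)
        if not match:
            continue
        path = match.group(1).split("?", 1)[0]  # ignore query strings
        if path.lower().endswith(IMAGE_SUFFIXES):
            counts[path] += 1
    return [path for path, _ in counts.most_common(limit)]


def pick_domain(path, cdn_percent, origin="a.mengkang.net", cdn="b.mengkang.net"):
    """Deterministically send roughly cdn_percent% of image paths to the CDN domain."""
    digest = hashlib.sha256(path.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # stable bucket in the range 0..99
    return cdn if bucket < cdn_percent else origin
```

The list returned by `top_image_paths` is what would be handed to the CDN provider for warm-up, and raising `cdn_percent` step by step mirrors the gradual traffic switch while the O&M staff watch back-to-source traffic.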

Locating CDN resource access faults

Case Study 1

Problem

Recently we had an unusual issue with large images. Access to the same image address sometimes succeeded and sometimes failed, and when an image could not be accessed, requests for some image addresses were redirected to a game site's homepage.

We contacted the CDN provider's customer support and were told that the carrier's DNS had been hijacked and that there was no problem with the CDN service (they seemed very passive). Here’s how we solved the problem.

Solution

Let's use the following image as an example: http://f4.topit.me/4/2d/d1/1133196716aead12d4s.jpg

- First, we confirmed that our origin site resources could be accessed and that there was no problem with CDN back-to-source.

We used the wget command with the domain name bound to the origin host (here, we assume the origin site IP is 111.1.23.214). This allowed us to bypass the CDN and access our origin site directly.

This confirmed that the image could be accessed normally.
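For readers who prefer a scriptable check, the same origin-bypass idea can be sketched in Python with the standard library. This is an illustration rather than the exact command we ran: the function name `fetch_headers_from` is hypothetical, and the idea is simply to connect to a chosen IP while sending the real domain in the `Host` header.

```python
import http.client


def fetch_headers_from(ip, host, path, port=80, timeout=10):
    """Request `path` from a specific server IP while presenting `host`.

    Connecting to the IP directly skips DNS (and therefore the CDN CNAME);
    the explicit Host header lets the server pick the right virtual host.
    Redirects are not followed, so a 302 is reported as a 302.
    """
    conn = http.client.HTTPConnection(ip, port, timeout=timeout)
    try:
        conn.request("GET", path, headers={"Host": host})
        response = conn.getresponse()
        # Only the status line and headers are needed; the body is not read.
        return response.status, dict(response.getheaders())
    finally:
        conn.close()
```

With the values assumed in this article, the call would be `fetch_headers_from("111.1.23.214", "f4.topit.me", "/4/2d/d1/1133196716aead12d4s.jpg")`; a 200 status from the origin IP suggests the origin itself is healthy.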

- Then, we used wget -S to print out detailed HTTP header information.

From this request, we can clearly see that it first connected to 123.150.50.14:80 and then received a 302 redirect. The header information clearly states: Powered-By-ChinaCache: HIT from CHN-TJ-7-3V2.6. This means that the problem lies with the CDN itself. The site it redirected to is also a ChinaCache customer. Now that we had located the problem, the CDN provider could no longer deny responsibility and started fixing it.
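The reasoning in this step can be written down as a small check. The sketch below is only an illustration: `describe_response` is a hypothetical helper, the `Powered-By-ChinaCache` header name comes from the output described above, and `Location` is the standard HTTP redirect header. It expects the status code and headers of a response obtained without following redirects.

```python
REDIRECT_STATUSES = (301, 302, 303, 307, 308)


def describe_response(status, headers):
    """Summarise who answered a request and whether it was a redirect."""
    # HTTP header names are case-insensitive, so normalise before lookup.
    lowered = {name.lower(): value for name, value in headers.items()}
    cache_info = lowered.get("powered-by-chinacache")
    return {
        # The CDN node stamps this header on responses it serves.
        "served_by_cdn": cache_info is not None,
        # "HIT ..." means the node answered from its own cache,
        # without going back to the origin site.
        "cache_hit": cache_info is not None and cache_info.upper().startswith("HIT"),
        "redirect_to": lowered.get("location") if status in REDIRECT_STATUSES else None,
    }
```

A response that is both a cache hit and a redirect, as in this case, points at content stored on the CDN node rather than at the origin site or the carrier's DNS.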

Case Study 2

Problem

When accessing a certain webpage, the images referenced in its CSS could not be loaded. However, the same images could be accessed by opening their addresses directly. Using wget --referer, we found that the problem was an incorrect anti-leeching setting.

I reported this to customer service, and they told me that they did not impose any restrictions and that the problem was with our origin site. So we had to dig for evidence.

Solution

This clearly shows the domain name resolution process. The CDN DNS uses a predefined policy to return the optimal IP, 111.202.7.252, and the request to that IP then returned 403. Only after I provided screenshots showing the two situations did the CDN customer service staff start fixing the problem.
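The "two situations" are the same request sent with and without a Referer header. A minimal Python sketch of that comparison follows; it is an illustrative equivalent of the wget --referer test, not the command we ran, and the helper names (`build_request`, `status_of`, `referer_verdict`) are hypothetical. Note that `urllib.request.urlopen` follows redirects and resolves the domain through normal DNS.

```python
import urllib.error
import urllib.request


def build_request(url, referer=None):
    """Build a GET request, optionally carrying a Referer header."""
    request = urllib.request.Request(url)
    if referer:
        request.add_header("Referer", referer)
    return request


def status_of(request, timeout=10):
    """Return the HTTP status code, treating 4xx/5xx as results, not errors."""
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code


def referer_verdict(status_plain, status_with_referer):
    """Interpret the status codes of requests sent without and with a Referer."""
    if status_plain == 200 and status_with_referer == 403:
        return "blocked only with Referer: likely an anti-leeching rule"
    if status_plain == status_with_referer:
        return "Referer makes no difference"
    return "inconclusive"
```

Running `status_of` on two requests for the same image URL, one built with the page address as `referer` and one without, and passing both codes to `referer_verdict`, gives the kind of side-by-side evidence that finally convinced the provider.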

Conclusion

The above problems forced our development engineers to take on too many operations and maintenance responsibilities. Recently we switched to Alibaba Cloud OSS for storage, and now we no longer have to worry about these problems. There is no more back-to-source because we store images directly on the cloud! It's that simple!