How to mount a surveillance system that detects intruders in your home, photographs them, and notifies you on your cell phone, so that you can call the police if necessary and give them the photos to help identify the burglars quickly and improve your chances of recovering the stolen items.

Introduction

In this article, I will show a DIY project to mount a surveillance system that detects intruders in your home, takes photos of them, and notifies you on your cell phone. From the phone you can call the police if necessary and give them the photos, which helps to identify the burglars quickly and improves your chances of recovering the stolen items.

Of course, besides the software, you have to provide some hardware. I built this system at home using relatively cheap materials, except for the cameras, which are the most expensive part of the setup. But you can do a lot of things with a camera, so it can be a good and fun investment.

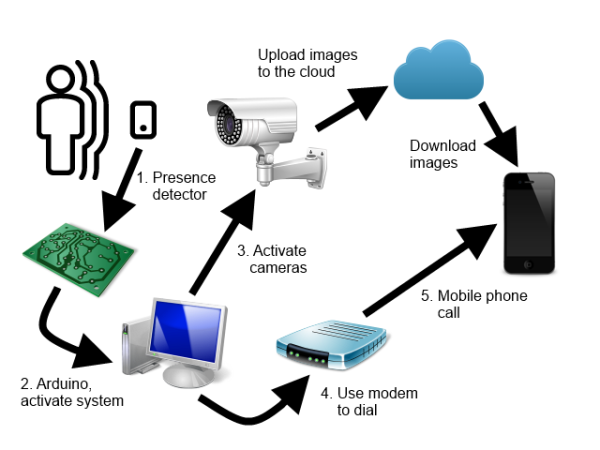

Basically, this is the system schema, with all the participating elements:

Although the schema shows some concrete subsystems, I have designed the solution so that each of those elements can be developed independently, by implementing a common interface and using dependency injection to link it with the application. The different subsystems, or protocols, are the following:

- Camera protocol: defines the communication with the video cameras

- Storage protocol: defines the transfer of files, images, and control commands and responses

- Trigger protocol: fires up the surveillance system

- Alarm protocol: communicates the incidents remotely to the user

The solution is implemented using Visual Studio 2015 and version 4.5 of the .NET Framework.

You can find a longer version of this article here in Spanish. Because of the 10MB file size limit of this site, I had to delete a lot of files from the source code: all the NuGet packages, the obj directories and all the binaries. Although you can restore the packages from Visual Studio, you may still be unable to recompile the code. In that case, you can download the complete set of project files from my website, at the previous link.

The Hardware

Let's review the hardware I used to build my own system. As the application is extensible in many ways, you can use a different hardware selection of your own.

First, the cameras. I have two IP cameras, each with a different protocol. The cheaper one is a Conceptronic Wi-Fi camera, which costs about 50€ and uses the NetWave CGI protocol. The other is a professional, high-performance camera with a much higher price: an Axis camera, which uses the VAPIX CGI protocol.

To call the mobile phone, I bought a simple USB AT modem for about 17€.

As a trigger I used, of course, an Arduino board (about 20€), a presence detector switch (about 10€) and a relay. As the presence switch works at 220V, it is a bad idea to connect it directly to the Arduino board. So, I connected the detector to a 12V power source, and the power source to the relay, which acts as another switch that closes a circuit between the Arduino 5V pin and an input pin. This completely isolates the Arduino board (and thus the computer) from the 220V mains.

You can easily build a relay circuit like this. Simply connect the 12V power source to the relay coil and put a diode from the ground wire to the 12V wire. On the Arduino side, use an input pin (PI) as the trigger pin, an output pin (PO) to hold the input pin at 0V while the circuit is open, and the 5V pin to activate the input pin when the relay closes.

This is the Arduino code. I used pin 28 as input and pin 24 as output because on the Arduino Mega board they are near the 5V pin, but you can use whichever pins you want, of course.

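    int pin1 = 28;  // input (trigger) pin, driven to 5V when the relay closes
    int pin0 = 24;  // output pin, holds the input at 0V while the relay is open

    void setup() {
      pinMode(pin0, OUTPUT);
      digitalWrite(pin0, LOW);
      pinMode(pin1, INPUT);
      digitalWrite(pin1, LOW);  // make sure the internal pull-up is disabled
      Serial.begin(9600);       // must match the baud rate configured in the application
    }

    void loop() {
      int val = digitalRead(pin1);
      if (val == HIGH) {
        Serial.write(1);        // send a single byte with value 1 to report a detection
      }
      delay(1000);              // poll once per second
    }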

Finally, although this is not really hardware, I will mention the storage protocol I used. I chose Dropbox as the easiest and cheapest way to upload the photos to the cloud, and I also use it as the channel between the mobile clients and the control center, exchanging text files with data in JSON format.

The Control Center

The central control application is implemented in the ThiefWatcher project. It is a desktop MDI Windows application with basically two window types. One of them is the control panel, where you can set up all the protocols except those of the cameras.

The top pane is for the trigger protocol. Here, you can select which protocol to use and provide a connection string with the corresponding settings (which can vary from one protocol to another), a start date / time at which the system must enter surveillance mode (if you don't provide it, the system starts immediately) and an end date / time to stop the surveillance. You can also configure the number of photos to take when an intruder is detected and the number of seconds between photos (an integer).

Below this pane is the one for the notification (alarm) protocol. To the right of the dropdown list that selects the protocol there is a Test button, which lets you test the protocol without having to run a simulation. You must also provide a connection string with the parameter settings, and an optional message in case the protocol allows data transfer.

The bottom pane is for the storage protocol. You have a connection string to set up the parameters, if any, and a container name to store the data, which can be a local folder, an FTP folder, an Azure blob container name, etc.

The command buttons are, from left to right: Start Simulacrum, which starts or stops the system as if an intruder had been detected, so that you can test the cameras, the storage protocol and the communication with the clients. In this mode, the start and end dates are not taken into account. Next, the Start button starts or stops the real surveillance mode. In this mode no image is shown in the camera forms (it is assumed that no one is present). Finally, the Save button writes the changes to the configuration file.

In the code usage section, I will describe the connection string parameters of all the protocols I have implemented.

Regarding the camera protocol, each camera is configured in its own camera window, which you can open with the File / New Camera... menu option. First, select the correct camera protocol for the camera you want to add; then provide the connection data: camera URL, user name and password. After that, the camera window is shown.

The first toolbar button from the left changes the access settings, and the second shows the camera setup dialog box, which is implemented in the corresponding protocol. Next, there is a button to start the camera and another to stop it, so you can watch the image while configuring the camera. The camera ID is mandatory and must be unique, as you will use it to select the camera from the clients. The last two buttons save the camera to the configuration file or remove it.

All those settings are stored in the application's App.config file: the connection strings in the connectionStrings section and the other protocol settings in the appSettings section. There are also two custom sections that store the protocol list and the different cameras and their settings.

The camerasSection is like this:

    <camerasSection>
      <cameras>
        <cameraData id="CAMNW"
                    protocolName="NetWave IP camera"
                    connectionStringName="CAMNW" />
        <cameraData id="VAPIX"
                    protocolName="VAPIX IP Camera"
                    connectionStringName="VAPIX" />
      </cameras>
    </camerasSection>

Each camera is a cameraData element, with an id attribute, a protocolName attribute with the name of the corresponding protocol, and a connectionStringName attribute for the connection data: url, userName and password, stored in a connection string in the connectionStrings section.

There is also a protocolsSection, containing the list of installed protocols:

    <protocolsSection>
      <protocols>
        <protocolData name="Arduino Simple Trigger"
                      class="trigger"
                      type="ArduinoSimpleTriggerProtocol.ArduinoTrigger, ArduinoSimpleTriggerProtocol, Version=1.0.0.0, Culture=neutral, PublicKeyToken=null" />
        <protocolData name="Lync Notifications"
                      class="alarm"
                      type="LyncProtocol.LyncAlarmChannel, LyncProtocol, Version=1.0.0.0, Culture=neutral, PublicKeyToken=null" />
        <protocolData name="AT Modem Notifications"
                      class="alarm"
                      type="ATModemProtocol.ATModemAlarmChannel, ATModemProtocol, Version=1.0.0.0, Culture=neutral, PublicKeyToken=null" />
        <protocolData name="Azure Blob Storage"
                      class="storage"
                      type="AzureBlobProtocol.AzureBlobManager, AzureBlobProtocol, Version=1.0.0.0, Culture=neutral, PublicKeyToken=null" />
        <protocolData name="NetWave IP camera"
                      class="camera"
                      type="NetWaveProtocol.NetWaveCamera, NetWaveProtocol, Version=1.0.0.0, Culture=neutral, PublicKeyToken=null" />
        <protocolData name="VAPIX IP Camera"
                      class="camera"
                      type="VAPIXProtocol.VAPIXCamera, VAPIXProtocol, Version=1.0.0.0, Culture=neutral, PublicKeyToken=null" />
        <protocolData name="DropBox Storage"
                      class="storage"
                      type="DropBoxProtocol.DropBoxStorage, DropBoxProtocol, Version=1.0.0.0, Culture=neutral, PublicKeyToken=null" />
      </protocols>
    </protocolsSection>

Each protocol has a name, a class that identifies its usage (trigger, alarm, storage or camera) and a type with the assembly-qualified name of the class that implements the protocol.
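To illustrate how a type string like the ones in the protocolsSection could be turned into a live object, here is a minimal sketch based on reflection. It is not the code used by ThiefWatcher: IDemoProtocol, DemoProtocol and ProtocolLoader are hypothetical names introduced only for this example, and it assumes that every protocol class has a public parameterless constructor.

```csharp
using System;

// Hypothetical stand-in for one of the protocol interfaces in WatcherCommons.
public interface IDemoProtocol
{
    string ConnectionString { get; set; }
}

// Hypothetical implementation, present only to make the sketch self-contained.
public class DemoProtocol : IDemoProtocol
{
    public string ConnectionString { get; set; }
}

public static class ProtocolLoader
{
    // Resolves an assembly-qualified type name (like the "type" attribute of
    // a protocolData element) and creates an instance through the
    // parameterless constructor (assumed to exist).
    public static T Create<T>(string typeName) where T : class
    {
        Type type = Type.GetType(typeName, throwOnError: true);
        if (!typeof(T).IsAssignableFrom(type))
        {
            throw new InvalidOperationException(
                type.FullName + " does not implement " + typeof(T).Name);
        }
        return (T)Activator.CreateInstance(type);
    }
}
```

For example, ProtocolLoader.Create<IDemoProtocol>(typeof(DemoProtocol).AssemblyQualifiedName) returns a new DemoProtocol. Checking the interface before the cast gives a clearer error message when a protocol is registered under the wrong class.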

You can add new protocols to this section using the File / Install Protocol/s... menu option, selecting the class library with the protocol or protocols implementation.

The Clients

The application has to notify you of a possible intrusion wherever you are, so I have implemented the clients as mobile apps. The easiest way to quickly get versions of an app for almost all platforms is to use Xamarin, so this is the approach I chose.

The TWClientApp PCL (portable class library) project contains almost all the client-side code. The platform-specific projects contain only the code to save files, which stores the pictures taken by the cameras in the phone memory so that you can give them to the police as soon as possible.

This is my first mobile app project, so it isn't very sophisticated. Here, I haven't used dependency injection. Instead, I have implemented only the Dropbox storage protocol, so if you want to use another one, you have to change the code in the PCL library. This protocol has the advantage that you can use the regular Dropbox client to get the photos without the ThiefWatcher client (although you lose the ability to control the application).

When you start the client app, you have to press the Connect button to send an identification message to the main application.

Then, a list of cameras is sent to the client. You can select one of them by pressing the corresponding button.

You can watch the current image of the camera. Normally, you can't expect a real video stream, as uploading each image can be very slow. The control center captures the frames in real time, but Dropbox takes up to two seconds to upload each one.

You can use the buttons to start / stop the camera, take a photo, or end the alarm mode (there is no need to stop the cameras before ending the alarm mode).

The photos appear in a list at the bottom, and you can save them to the phone or delete them.

I couldn't test the iOS version, as I don't have a Mac, but the Windows Phone and Android apps work fine.

Using the Code

The different protocol interfaces are defined in the WatcherCommons project, in the Interfaces namespace. The camera protocol is IWatcherCamera, defined as follows:

    public class FrameEventArgs : EventArgs
    {
        public FrameEventArgs(Bitmap bmp)
        {
            Image = bmp;
        }

        public Bitmap Image { get; private set; }
    }

    public delegate void NewFrameEventHandler(object sender, FrameEventArgs e);

    public interface IWatcherCamera
    {
        event NewFrameEventHandler OnNewFrame;
        Size FrameSize { get; }
        string ConnectionString { get; set; }
        string UserName { get; set; }
        string Password { get; set; }
        string Uri { get; set; }
        int MaxFPS { get; set; }
        bool Status { get; }
        ICameraSetupManager SetupManager { get; }
        void Initialize();
        void ShowCameraConfiguration(Form parent);
        void Start();
        void Close();
    }

- OnNewFrame: the event fired when an image is ready to be sent to the application. The image is passed as a Bitmap in the Image property of the FrameEventArgs parameter.

- FrameSize: the current width and height of the camera image.

- ConnectionString: a semicolon-separated string that defines the camera access parameters. In the protocols I have implemented, the parameters are url, userName and password, like this: url=http://192.168.1.20;userName=root;password=root.

- UserName, Password and Uri: the same values as in the connection string.

- MaxFPS: sets the capture rate.

- Status: true if the camera is running.

- SetupManager: interface with the camera settings dialog box. It is used to fire an event in the application when the user changes the camera image size, so that the camera form can be resized properly.

- Initialize: resets the internal state as needed.

- ShowCameraConfiguration: shows the camera configuration dialog box. It must be non-modal, so that you can watch the changes if the camera is showing images at that moment.

- Start: starts capturing images. Capturing runs in a separate thread, so take this into account when you interact with the camera in the new frame event.

- Close: stops capturing.
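The connection strings used by the protocols are plain key=value pairs separated by semicolons. As a minimal sketch of how such a string could be split into its parameters (ConnectionStringParser is a hypothetical helper, not a class of the project), consider:

```csharp
using System;
using System.Collections.Generic;

public static class ConnectionStringParser
{
    // Splits "key=value;key=value" into a case-insensitive dictionary.
    // Only the first '=' of each pair is treated as the separator, so a
    // value such as a URL with a query string is kept whole.
    public static Dictionary<string, string> Parse(string connectionString)
    {
        var result = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);
        if (string.IsNullOrWhiteSpace(connectionString))
        {
            return result;
        }
        string[] pairs = connectionString.Split(
            new[] { ';' }, StringSplitOptions.RemoveEmptyEntries);
        foreach (string pair in pairs)
        {
            int eq = pair.IndexOf('=');
            if (eq <= 0)
            {
                continue; // skip fragments that have no key
            }
            string key = pair.Substring(0, eq).Trim();
            string value = pair.Substring(eq + 1).Trim();
            result[key] = value;
        }
        return result;
    }
}
```

With the example string above, Parse returns three entries: url, userName and password. Note that this simple format cannot represent a value that itself contains a semicolon, for instance in a password.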

The NetWave protocol is implemented in the NetWaveProtocol project, and the VAPIX protocol in the VAPIXProtocol project.

The trigger protocol, ITrigger, is as follows:

    public interface ITrigger
    {
        event EventHandler OnTriggerFired;
        string ConnectionString { get; set; }
        void Initialize();
        void Start();
        void Stop();
    }

- OnTriggerFired: fired when the trigger condition is detected.

- ConnectionString: string with the configuration parameters. In the protocol I have implemented, in the ArduinoSimpleTriggerProtocol project, they are port and baudrate, like this: port=COM4;baudrate=9600. Remember to set the same baud rate in the Arduino code.

- Initialize: resets the internal state as needed.

- Start: starts listening for a trigger condition. This is done in a separate thread.

- Stop: stops listening.
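To give an idea of what a trigger implementation involves, the following sketch implements ITrigger on top of an arbitrary Stream, so it can be tried without any hardware. It is not the code of the ArduinoSimpleTriggerProtocol project: StreamTrigger is a hypothetical class, and the only assumption taken from the Arduino code is that a byte with value 1 means that presence was detected.

```csharp
using System;
using System.IO;
using System.Threading;

// Copy of the ITrigger interface, repeated so that the sketch compiles on its own.
public interface ITrigger
{
    event EventHandler OnTriggerFired;
    string ConnectionString { get; set; }
    void Initialize();
    void Start();
    void Stop();
}

// Hypothetical trigger that listens on any Stream instead of a COM port.
public class StreamTrigger : ITrigger
{
    private readonly Stream _input;
    private Thread _worker;
    private volatile bool _running;

    public StreamTrigger(Stream input)
    {
        _input = input;
    }

    public event EventHandler OnTriggerFired;

    public string ConnectionString { get; set; }

    public void Initialize()
    {
        _running = false;
    }

    public void Start()
    {
        _running = true;
        _worker = new Thread(Listen) { IsBackground = true };
        _worker.Start();
    }

    public void Stop()
    {
        _running = false;
        if (_worker != null)
        {
            // Bounded wait: a blocking read on a real port may not return at once.
            _worker.Join(1000);
        }
    }

    private void Listen()
    {
        while (_running)
        {
            int b = _input.ReadByte(); // -1 means end of stream
            if (b < 0)
            {
                break;
            }
            if (b == 1)
            {
                EventHandler handler = OnTriggerFired;
                if (handler != null)
                {
                    handler(this, EventArgs.Empty); // raised on the worker thread
                }
            }
        }
    }
}
```

Because the event is raised from the worker thread, a subscriber that touches Windows Forms controls has to marshal the call to the UI thread, for example with Control.Invoke.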

The notification protocol, IAlarmChannel, is simple too:

    public interface IAlarmChannel
    {
        string ConnectionString { get; set; }
        string MessageText { get; set; }
        void Initialice();
        void SendAlarm();
    }

- ConnectionString: string with the configuration parameters.

- MessageText: message to send, if the protocol allows it.

- Initialice: resets the internal state.

- SendAlarm: sends a notification to the clients.

I have implemented two notification protocols. The first, in the ATModemProtocol project, dials one or more phone numbers using an AT modem and has the following configuration parameters:

- port: the COM port where the modem is connected

- baudrate: the port baud rate

- initdelay: delay in milliseconds to wait before dialing

- number: comma-separated list of phone numbers

- ringduration: time in milliseconds before hanging up
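As an illustration of how the number parameter might translate into modem commands, here is a small sketch. It is an assumption, not the code of the ATModemProtocol project: DialPlan is a hypothetical helper, and it presumes a GSM modem that accepts the standard ATD<number>; form for voice calls and ATH to hang up.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class DialPlan
{
    // Standard AT command to hang up the current call.
    public const string HangUpCommand = "ATH";

    // Turns the comma-separated "number" parameter into one dial command
    // per phone number. The trailing ';' requests a voice call on GSM
    // modems (assumption: the modem follows the standard command set).
    public static List<string> BuildDialCommands(string numbers)
    {
        return (numbers ?? string.Empty)
            .Split(new[] { ',' }, StringSplitOptions.RemoveEmptyEntries)
            .Select(n => n.Trim())
            .Where(n => n.Length > 0)
            .Select(n => "ATD" + n + ";")
            .ToList();
    }
}
```

A caller would write each command to the serial port followed by a carriage return, wait for ringduration milliseconds and then send HangUpCommand before dialing the next number.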

The other protocol uses Skype or Lync to notify the user. It is implemented in the LyncProtocol project. The connection string is a semicolon-separated list of Skype or Lync user addresses. You must have the Lync client installed on the main computer and on the clients.

The last one is the storage protocol. The data used by this protocol is defined in the Data namespace of the WatcherCommons class library. There are two different classes. ControlCommand is for the camera commands:

    [DataContract]
    public class ControlCommand
    {
        public const int cmdGetCameraList = 1;
        public const int cmdStopAlarm = 2;

        public ControlCommand()
        {
        }

        public static ControlCommand FromJSON(Stream s)
        {
            s.Position = 0;
            StreamReader rdr = new StreamReader(s);
            string str = rdr.ReadToEnd();
            return JsonConvert.DeserializeObject<ControlCommand>(str);
        }

        public static void ToJSON(Stream s, ControlCommand cc)
        {
            s.Position = 0;
            string js = JsonConvert.SerializeObject(cc);
            StreamWriter wr = new StreamWriter(s);
            wr.Write(js);
            wr.Flush();
        }

        [DataMember]
        public int Command { get; set; }

        [DataMember]
        public string ClientID { get; set; }
    }

The commands are sent and received in JSON format. There are two different commands, passed in the Command member: one to register with the application and get the list of cameras, and another to stop the alarm and reset the application to surveillance mode.

The ClientID member uniquely identifies each client.

CameraInfo is used to exchange requests and responses regarding the cameras, also in JSON format:

    [DataContract]
    public class CameraInfo
    {
        public CameraInfo()
        {
        }

        public static List<CameraInfo> FromJSON(Stream s)
        {
            s.Position = 0;
            StreamReader rdr = new StreamReader(s);
            return JsonConvert.DeserializeObject<List<CameraInfo>>(rdr.ReadToEnd());
        }

        public static void ToJSON(Stream s, List<CameraInfo> ci)
        {
            s.Position = 0;
            string js = JsonConvert.SerializeObject(ci);
            StreamWriter wr = new StreamWriter(s);
            wr.Write(js);
            wr.Flush();
        }

        [DataMember]
        public string ID { get; set; }

        [DataMember]
        public bool Active { get; set; }

        [DataMember]
        public bool Photo { get; set; }

        [DataMember]
        public int Width { get; set; }

        [DataMember]
        public int Height { get; set; }

        [DataMember]
        public string ClientID { get; set; }
    }

- ID: the camera identifier

- Active: the camera state

- Photo: used to ask the camera to take a photo

- Width and Height: camera image size

- ClientID: client unique identifier

When you request a list of cameras, you receive a response with an array of CameraInfo objects, one for each camera.

The interface to implement the protocol is IStorageManager:

    public interface IStorageManager
    {
        string ConnsecionString { get; set; }
        string ContainerPath { get; set; }
        void UploadFile(string filename, Stream s);
        void DownloadFile(string filename, Stream s);
        void DeleteFile(string filename);
        bool ExistsFile(string filename);
        IEnumerable<string> ListFiles(string model);
        IEnumerable<ControlCommand> GetCommands();
        IEnumerable<List<CameraInfo>> GetRequests();
        void SendResponse(List<CameraInfo> resp);
    }

- ConnsecionString: string with the configuration parameters

- ContainerPath: identifies a folder, blob container name, etc.

- UploadFile: sends a file, provided in a Stream object

- DownloadFile: gets a file into the provided Stream object

- DeleteFile: deletes a file

- ExistsFile: tests whether a file exists

- ListFiles: enumerates the files in the folder whose names begin with the model parameter

- GetCommands: enumerates the commands sent by the clients

- GetRequests: enumerates the camera requests sent by the clients

- SendResponse: sends a response to a command or camera request

I have implemented two storage protocols. The DropBoxProtocol project implements the protocol to use with Dropbox. On the server side, this is nothing more than reading and writing files in the Dropbox folder. There is no need for a connection string, as the folder is configured separately.
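Since the server side only reads and writes files in a synchronized folder, the file-related members of the storage interface map almost directly onto System.IO. The sketch below shows one possible shape of that mapping. FolderStorage is a hypothetical class, not the DropBoxStorage class of the project, and it leaves out the command and request members.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;

// Hypothetical folder-backed storage covering only the file members.
public class FolderStorage
{
    // Folder kept in sync by the Dropbox desktop client (assumed to exist).
    public string ContainerPath { get; set; }

    private string FullPath(string filename)
    {
        return Path.Combine(ContainerPath, filename);
    }

    public void UploadFile(string filename, Stream s)
    {
        s.Position = 0;
        using (FileStream file = File.Create(FullPath(filename)))
        {
            s.CopyTo(file);
        }
    }

    public void DownloadFile(string filename, Stream s)
    {
        using (FileStream file = File.OpenRead(FullPath(filename)))
        {
            file.CopyTo(s);
        }
    }

    public void DeleteFile(string filename)
    {
        File.Delete(FullPath(filename));
    }

    public bool ExistsFile(string filename)
    {
        return File.Exists(FullPath(filename));
    }

    // Returns the names of the files whose name starts with the given prefix.
    public IEnumerable<string> ListFiles(string model)
    {
        return Directory.EnumerateFiles(ContainerPath, model + "*")
                        .Select(f => Path.GetFileName(f));
    }
}
```

A real implementation would also have to cope with files that the Dropbox client is still synchronizing, for instance by retrying when a file is locked.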

This is the protocol implemented in the clients. There it is slightly different, and the interface is defined in the TWClientApp project:

    public interface IStorageManager
    {
        Task DownloadFile(string filename, Stream s);
        Task DeleteFile(string filename);
        Task<bool> ExistsFile(string filename);
        Task<List<string>> ListFiles(string model);
        Task SendCommand(ControlCommand cmd);
        Task SendRequest(List<CameraInfo> req);
        Task<List<CameraInfo>> GetResponse(string id);
    }

It is an asynchronous interface, and it has fewer members than the server-side one. The implementation is not as easy as on the server, because we have to use the Dropbox API. The implementation is in the DropBoxStorage class and, in the _accessKey constant, you must set the security key needed to establish the connection successfully (don't forget to do so before compiling the code for the first time, as there is no default value).

private const string _accessKey = "";
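An asynchronous interface like the one above fits well with a simple polling loop on the client: after sending a command, the app can check periodically whether a file has appeared. The following sketch shows that idea in isolation. ResponsePoller is a hypothetical helper and the polling strategy is an assumption, not necessarily what the CameraPage class does; the existsFile delegate stands in for a method such as ExistsFile.

```csharp
using System;
using System.Threading.Tasks;

public static class ResponsePoller
{
    // Calls existsFile(filename) repeatedly until it returns true or the
    // timeout expires. Returns true if the file showed up in time.
    public static async Task<bool> WaitForFileAsync(
        Func<string, Task<bool>> existsFile,
        string filename,
        TimeSpan pollInterval,
        TimeSpan timeout)
    {
        DateTime deadline = DateTime.UtcNow + timeout;
        while (true)
        {
            if (await existsFile(filename))
            {
                return true;
            }
            if (DateTime.UtcNow >= deadline)
            {
                return false;
            }
            await Task.Delay(pollInterval);
        }
    }
}
```

With a storage object at hand, a call could look like await ResponsePoller.WaitForFileAsync(storage.ExistsFile, fileName, TimeSpan.FromSeconds(1), TimeSpan.FromSeconds(30)). Because each Dropbox upload can take a couple of seconds, a poll interval shorter than about one second is unlikely to help.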

Almost all the code of the client app is in the CameraPage class of the TWClientApp project. Data is exchanged by means of files, each with a special name that identifies it. These are the different file name patterns:

- The camera writes only one frame file at a time. When the client has read the frame, it deletes the file and the server can write another one. The file is a jpg picture named <CAMERA ID>_FRAME_<CLIENT ID>.jpg.

- The names of the photos are similar, and there may be more than one photo. The name pattern is <CAMERA ID>_PHOTO_yyyyMMddHHmmss.jpg.

- The client can send commands to the server, one at a time, in JSON text format, in a file named cmd_<CLIENT ID>.json.

- When the server gets a command file, it deletes the file, so that the client can send another command, and executes the command. Then it writes a response file named resp_<CLIENT ID>.json.

- Finally, the client can send camera requests, for example to take a photo or to start or stop a camera, in JSON format in a file named req_<CLIENT ID>.json. The server reads the file, deletes it and passes the request to the camera for processing. Then the server writes a response file with the camera state, as in the case of commands.
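The naming conventions in the list above can be captured in a few small functions, which keeps the patterns in a single place on both the server and the client. This is only a sketch: ExchangeFileNames and its method names are hypothetical and do not come from the project.

```csharp
using System;
using System.Globalization;

public static class ExchangeFileNames
{
    // <CAMERA ID>_FRAME_<CLIENT ID>.jpg
    public static string Frame(string cameraId, string clientId)
    {
        return cameraId + "_FRAME_" + clientId + ".jpg";
    }

    // <CAMERA ID>_PHOTO_yyyyMMddHHmmss.jpg
    public static string Photo(string cameraId, DateTime takenAt)
    {
        string stamp = takenAt.ToString("yyyyMMddHHmmss", CultureInfo.InvariantCulture);
        return cameraId + "_PHOTO_" + stamp + ".jpg";
    }

    // cmd_<CLIENT ID>.json
    public static string Command(string clientId)
    {
        return "cmd_" + clientId + ".json";
    }

    // resp_<CLIENT ID>.json
    public static string Response(string clientId)
    {
        return "resp_" + clientId + ".json";
    }

    // req_<CLIENT ID>.json
    public static string Request(string clientId)
    {
        return "req_" + clientId + ".json";
    }
}
```

For example, ExchangeFileNames.Photo("CAMNW", new DateTime(2017, 3, 10, 14, 5, 9)) yields CAMNW_PHOTO_20170310140509.jpg. Using the invariant culture keeps the timestamp digits the same on every device, whatever its regional settings.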

The NetWave camera protocol configuration dialog box is very simple. You can read more about this protocol in my blog.

The VAPIX protocol is more complicated, as it is a protocol for professional cameras. Instead of a complicated dialog box with lots of controls, I have implemented a tree view with all the configuration parameters (there are a lot of them), where you can select any of them and change its value.

And that's all. Enjoy the solution, and thanks for reading!

History

- 10th March, 2017: Initial version