A while ago I worked on a project that used this tech stack:

- Akka HTTP : (actually we used Spray.IO, but it is practically the same thing for the purposes of this article). For those that don't know, Akka HTTP is a lightweight, Akka-based toolkit that can expose a REST interface for communicating with an actor system.

- Cassandra database : Apache Cassandra is a free and open-source distributed database management system designed to handle large amounts of data across many commodity servers, providing high availability with no single point of failure. Cassandra offers robust support for clusters spanning multiple datacenters, with asynchronous masterless replication allowing low latency operations for all clients. It runs as a multi-node cluster.

This was a pain to test, and we were always stepping on each other's toes, as we were all sharing a single database. What we really needed was our own Cassandra cluster each, but as you can imagine, spinning up a 5-node cluster of VMs just to satisfy each developer's own testing needs was a bit much. So we ended up with some dedicated test environments, each running 5 Cassandra nodes. These were still a PITA, to be honest.

This got me thinking: perhaps I could use Docker to help me out here. Perhaps I could run Cassandra in a Docker container; hell, perhaps I could even run my own code that uses Cassandra in a Docker container, and just point my UI at the Akka HTTP REST server running in Docker. Mmmmm.

I started to dig around, and of course this is entirely possible (otherwise I would not be writing this article, now would I?).

This is certainly not a new topic here on CodeProject; there are numerous Docker articles, but I never found one that talked about Cassandra, so I thought, why not write another one?

The code can be located here : https://github.com/sachabarber/DockerExamples

This section will outline some of the fundamental ideas behind Docker.

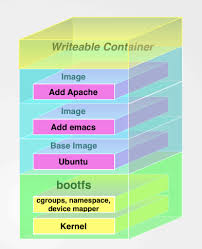

Perhaps the most important idea to get your head around with Docker is that of images and image layers, and how these relate to containers. Docker works on the idea of layered images, where each image is a new layer on top of the one beneath.

For example, consider the layering diagram. There are 3 images in play here: an Ubuntu base image, a layer that adds Emacs, and a layer that adds Apache.

Each of these layers will build upon the one below. What do I mean by build upon? Quite simply, each layer adds, removes or alters files in the image's file system. It is by altering the file system in this way that we are able to build up very complex images. This example would end up with a container (which could itself be used to create a new image) that is capable of running Apache + Emacs on Ubuntu.
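To make the layering idea concrete, here is a toy model in Scala (the language used throughout this article). It is a sketch of the concept only, not how Docker's storage drivers are actually implemented; the names LayerModel, Layer, lookup and mergedView, and the file paths in the note below, are made up for illustration. Each layer records the files it adds or changes and the paths it deletes, and reading a path walks the layers from the top down.

```scala
// Toy model of a layered file system (illustrative only, not Docker's real code).
object LayerModel {

  // One layer: files it adds or changes, plus paths it deletes ("whiteouts").
  final case class Layer(name: String,
                         files: Map[String, String] = Map.empty,
                         deleted: Set[String] = Set.empty)

  // Layers are ordered bottom (base image) to top (most recent layer).
  // Reading a path walks from the top down; the first layer that mentions it wins.
  def lookup(layers: Seq[Layer], path: String): Option[String] =
    layers.reverseIterator.collectFirst {
      case l if l.deleted.contains(path) => None
      case l if l.files.contains(path)   => Some(l.files(path))
    }.flatten

  // The merged view a container sees: apply each layer's deletions, then its files.
  def mergedView(layers: Seq[Layer]): Map[String, String] =
    layers.foldLeft(Map.empty[String, String]) { (acc, l) =>
      (acc -- l.deleted) ++ l.files
    }
}
```

For example, with a stack of ubuntu, emacs and apache layers where the hypothetical apache layer deletes /etc/motd, lookup returns None for that path even though the Ubuntu layer still holds the file. That is also why deleting a file in a later layer does not make an image any smaller.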

Ok, so that's cool, but where do we get these images?

Docker has a vibrant community behind it, and it also has a cloud repository (Docker Hub) of base images that Docker or the community have created.

For example, say I wanted to use Cassandra: I would simply go to https://hub.docker.com/ and search for cassandra, which would give me a page like this.


In this case there is an official image, but sometimes you will only find community-maintained ones.

In some cases, where an official image is available, you may get good documentation telling you things like:

- What ports are exposed

- What environment variables are / could be used

- How to link containers

Occasionally a bit of software you use will want to use a certain directory for some purpose or another, most typically config, or logging. It would be nice to expose this outside of a container, such that it could map to some physical location on the host, or even possibly be shared between containers.

Luckily Docker has your back here and allows you to create a mapping from container path to host path. You can read more about this at this link : data-volumes

Here is what the official Docker docs have to say about volumes

A data volume is a specially-designated directory within one or more containers that bypasses the Union File System. Data volumes provide several useful features for persistent or shared data:

- Volumes are initialized when a container is created. If the container’s base image contains data at the specified mount point, that existing data is copied into the new volume upon volume initialization. (Note that this does not apply when mounting a host directory.)

- Data volumes can be shared and reused among containers.

- Changes to a data volume are made directly.

- Changes to a data volume will not be included when you update an image.

- Data volumes persist even if the container itself is deleted.

- Data volumes are designed to persist data, independent of the container’s life cycle. Docker therefore never automatically deletes volumes when you remove a container, nor will it “garbage collect” volumes that are no longer referenced by a container host.

An example of mounting a host directory into a container as a data volume looks like this:

```shell
$ docker run -d -P --name web -v /src/webapp:/webapp training/webapp python app.py
```

This command mounts the host directory, /src/webapp, into the container at /webapp. If the path /webapp already exists inside the container’s image, the /src/webapp mount overlays but does not remove the pre-existing content. Once the mount is removed, the content is accessible again.

This is consistent with the expected behavior of the mount command. The container-dir must always be an absolute path such as /src/docs. The host-dir can either be an absolute path or a name value. If you supply an absolute path for the host-dir, Docker bind-mounts to the path you specify. If you supply a name, Docker creates a named volume by that name.
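The rules quoted above (the container side must be absolute; an absolute host side means a bind mount, a plain name means a named volume) can be sketched as a small parser. VolumeSpec and its case classes are hypothetical names, and the sketch only understands Unix-style HOST:CONTAINER specs, optionally followed by an access mode such as :rw, which it ignores.

```scala
// Hypothetical parser for "-v HOST:CONTAINER[:MODE]" following the rules quoted above.
object VolumeSpec {

  sealed trait Mount
  final case class BindMount(hostPath: String, containerPath: String) extends Mount
  final case class NamedVolume(name: String, containerPath: String) extends Mount

  def parse(spec: String): Either[String, Mount] =
    spec.split(":", -1).toList match {
      // An optional third field (e.g. "ro" or "rw") is the access mode; ignored here.
      case host :: container :: rest if rest.size <= 1 =>
        if (!container.startsWith("/")) Left(s"container path must be absolute: $container")
        else if (host.startsWith("/")) Right(BindMount(host, container)) // absolute host path
        else if (host.nonEmpty) Right(NamedVolume(host, container))      // a name, not a path
        else Left("host part is empty")
      case _ => Left(s"expected HOST:CONTAINER[:MODE], got: $spec")
    }
}
```

The db_data:/var/lib/mysql form, used in the MySQL example later in this article, is the named-volume case.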

Some software may also be set up to read its configuration from environment variables. Luckily Docker supports this both on the Docker command line and in the richer Docker Compose file syntax, which we will see more of later.

This is an example of setting an environment variable from the Docker command line (we will see a Docker Compose file example later). The -e CASSANDRA_BROADCAST_ADDRESS=10.42.42.42 sets an environment variable that will be passed to the container created from the image.

```shell
$ docker run --name some-cassandra -d -e CASSANDRA_BROADCAST_ADDRESS=10.42.42.42 -p 7000:7000 cassandra:tag
```
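From the point of view of the code running inside the container, a variable passed with -e is just an ordinary environment variable. The Cassandra image reads CASSANDRA_BROADCAST_ADDRESS in its own startup script; if your own JVM service wanted similar settings, a minimal sketch might look like this. ContainerEnv, setting, intSetting and the HTTP_PORT name used in the test values are hypothetical.

```scala
import scala.util.Try

// Hypothetical helpers for reading settings passed with `docker run -e NAME=value`
// (or a Compose `environment:` section), which appear as plain environment variables.
object ContainerEnv {

  // Returns the trimmed value of `name`, or `default` when it is missing or blank.
  def setting(name: String, default: String, env: Map[String, String] = sys.env): String =
    env.get(name).map(_.trim).filter(_.nonEmpty).getOrElse(default)

  // Same idea for numeric settings such as ports; falls back on unparseable input.
  def intSetting(name: String, default: Int, env: Map[String, String] = sys.env): Int =
    env.get(name).flatMap(v => Try(v.trim.toInt).toOption).getOrElse(default)
}
```

Taking the environment as a parameter, with sys.env as the default, means the lookup can be tested without actually setting process environment variables.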

Docker also has the idea of linking containers together. But why would you want that? Well, let's imagine we have a web site that runs in a Docker container and talks to a MySQL database that also runs in a Docker container. We could either start the MySQL database container first, grab its IP address, and mangle the deployment of the web site somehow to let it know about the IP address of the database container, or we could use Docker linking.

This is an example of linking containers from the Docker command line (again, we will see a Docker Compose file example later). The --link some-cassandra:cassandra links the new container to an existing container called "some-cassandra", which the new container can then reach using the alias "cassandra".

```shell
$ docker run --name some-cassandra2 -d --link some-cassandra:cassandra cassandra:tag
```

In a nutshell, Docker linking allows you to express dependencies from one container to another. This may be done on the command line or via docker-compose; this article will discuss the docker-compose mechanism.
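Linking gives the new container two ways to find the old one. The alias (cassandra above) becomes a hostname inside the new container, and legacy links on the default bridge network also inject environment variables named after the alias and port, such as CASSANDRA_PORT_9042_TCP_ADDR. The sketch below, built around the hypothetical helper LinkedService.address, prefers those variables and falls back to the alias hostname; it assumes a simple alias like cassandra, and the IP address in the test values is made up.

```scala
import scala.util.Try

// Hypothetical helper: resolve a linked service's host and port inside a container.
object LinkedService {

  // Legacy `--link name:alias` injects ALIAS_PORT_<port>_TCP_ADDR / _TCP_PORT
  // (alias upper-cased) and also adds `alias` as a hostname. Prefer the variables,
  // otherwise fall back to the alias itself. Assumes a simple alias such as "cassandra".
  def address(alias: String, port: Int, env: Map[String, String] = sys.env): (String, Int) = {
    val prefix = s"${alias.toUpperCase}_PORT_${port}_TCP"
    val host = env.getOrElse(s"${prefix}_ADDR", alias)
    val resolvedPort = env.get(s"${prefix}_PORT")
      .flatMap(p => Try(p.toInt).toOption)
      .getOrElse(port)
    (host, resolvedPort)
  }
}
```

Either way you end up with a host/port pair; with Docker Compose the service name itself becomes the hostname, which is usually all you need.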

Another use of linking is running interactive commands against an existing container, say some sort of SQL command line. For example, Apache Cassandra comes with a command line utility called cqlsh (the CQL shell), which you can use to run CQL commands against the database. Here is an example of running it against a container that is already running Apache Cassandra:

```shell
$ docker run -it --link some-cassandra:cassandra --rm cassandra cqlsh cassandra
```

where some-cassandra is the name of your original Cassandra Server container

It would be a rare bit of software that did not need to expose a port or IP address to accept input from the user. Luckily this is entirely possible with Docker. In fact Docker offers very rich networking capabilities, which I'm sorry to say are outside the scope of this article; we will only look at how to expose ports from a container to the host. For a deeper walk-through I urge you to read more on this subject (it is a big subject) : https://docs.docker.com/engine/userguide/networking/

Docker can build images automatically by reading the instructions from a Dockerfile. A Dockerfile is a text document that contains all the commands a user could call on the command line to assemble an image. Using docker build users can create an automated build that executes several command-line instructions in succession.

Whilst the Docker command line does allow you to do pretty much anything with containers and images, sometimes what you are looking for is a more self-contained description of your software stack. Something like:

- I have a web site

- It has 2 databases that it depends on

- I want the logs to be exposed here and here

- I need these ports open

And you would like to bring all of this up in one go. That sounds ideal. Fortunately Docker allows this with something called Docker Compose which allows us to describe ALL our requirements in one file, and bring it all up in one go. We will be seeing 3 examples of this in the rest of the article

Ok so I now know that there is a place where I can grab images, well that's grand, but how do I get MY software to run with one of these images?

We will examine this question using several examples, as shown below.

I have decided to split the examples into 3, ranging from simple, to medium, to complex. However, the principles we use for all of them are the same; we just build up the example a bit more each time.

But before we get into the examples, there are a couple of common points which they all share.

They all use Akka HTTP, which, as I stated above, is an Akka-based REST toolkit. A bare-bones Akka HTTP application may look something like this (this one has a single default GET route):

```scala
package SAS

import akka.actor.ActorSystem
import akka.event.Logging
import akka.http.scaladsl.Http
import akka.http.scaladsl.server.Directives._
import akka.stream.ActorMaterializer
import com.typesafe.config.ConfigFactory

object DockerAkkaHttpMicroService {

  // Akka infrastructure needed by Akka HTTP
  implicit val system = ActorSystem()
  implicit val executor = system.dispatcher
  implicit val materializer = ActorMaterializer()

  def main(args: Array[String]): Unit = {
    // Loads application.conf, which supplies http.address and http.port
    val config = ConfigFactory.load()
    val logger = Logging(system, getClass)

    // A single route: GET / returns a plain text greeting
    val routes =
      get {
        pathSingleSlash {
          complete {
            "Hello, World from Code!"
          }
        }
      }

    // Inside a container, http.address should be 0.0.0.0 rather than localhost,
    // otherwise the published port cannot reach the service
    Http().bindAndHandle(routes, config.getString("http.address"), config.getInt("http.port"))
  }
}
```

In fact this is the entire codebase for the 1st example below. If you open the source code for example 1 in a Scala/SBT-capable editor, run it, and then use something like Postman to test the route, you would see something like this


So that is what we expect a running Akka HTTP application to do: respond with a valid response to a REST call.
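Postman is handy, but a tiny scripted check can be useful too, for example to confirm that a freshly started container answers on its published port. The sketch below uses only the JDK's HttpURLConnection. So that it runs without Docker, it also starts a hypothetical stand-in server (via the JDK's com.sun.net.httpserver package) that returns the same text as the bare-bones route; SmokeTest, get and startFakeService are illustrative names.

```scala
import java.net.{HttpURLConnection, InetSocketAddress, URI}
import java.nio.charset.StandardCharsets
import com.sun.net.httpserver.{HttpExchange, HttpHandler, HttpServer}
import scala.io.Source

object SmokeTest {

  // Sends GET to `url` and returns (status code, body), using only the JDK.
  // getInputStream throws an IOException for 4xx/5xx responses.
  def get(url: String): (Int, String) = {
    val conn = URI.create(url).toURL.openConnection().asInstanceOf[HttpURLConnection]
    conn.setRequestMethod("GET")
    conn.setConnectTimeout(5000)
    conn.setReadTimeout(5000)
    try {
      val status = conn.getResponseCode
      val body = Source.fromInputStream(conn.getInputStream, "UTF-8")
      try { (status, body.mkString) } finally { body.close() }
    } finally {
      conn.disconnect()
    }
  }

  // Hypothetical stand-in for the Akka HTTP service so the sketch runs without
  // Docker: answers every path with the same text as the bare-bones route.
  def startFakeService(): HttpServer = {
    val server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0)
    server.createContext("/", new HttpHandler {
      override def handle(exchange: HttpExchange): Unit = {
        val bytes = "Hello, World from Code!".getBytes(StandardCharsets.UTF_8)
        exchange.sendResponseHeaders(200, bytes.length.toLong)
        val out = exchange.getResponseBody
        try out.write(bytes) finally out.close()
      }
    })
    server.start()
    server
  }
}
```

Against the container from example 1, the call would be SmokeTest.get("http://localhost:8080/"), assuming port 8080 is published to the host.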

I am using a Scala/SBT stack, so I use SBT to build the code that goes with this article.

I also create a fat jar, which means the end result, once all the dependencies have been added in, is a single .jar file (this is done with the sbt-assembly plugin). The SBT file for example 1 looks like this; the others are similar, just with different dependencies:

```scala
name := "SimpleAkkaHttpService"

version := "1.0"

scalaVersion := "2.11.7"

scalacOptions := Seq("-unchecked", "-deprecation", "-encoding", "utf8")

// Setting from the sbt-assembly plugin: the file name of the fat jar
assemblyJarName in assembly := "SimpleAkkaHttpService.jar"

libraryDependencies ++= {
  val akkaStreamVersion = "1.0"
  Seq(
    "com.typesafe.akka" %% "akka-actor" % "2.3.12",
    "com.typesafe.akka" % "akka-stream-experimental_2.11" % akkaStreamVersion,
    "com.typesafe.akka" % "akka-http-core-experimental_2.11" % akkaStreamVersion,
    "com.typesafe.akka" % "akka-http-experimental_2.11" % akkaStreamVersion
  )
}

// Settings from the sbt-revolver plugin
Revolver.settings
```

Each of the examples will be expected to run MY OWN code in a container.

So how does that happen exactly? I know we talked about layers and being able to build on top of layers of other images. Yes, spot on, it's exactly that: we use a base image and then add files on top of it.

In my case the Dockerfile contains the instructions for building a new image for MY OWN container (i.e. my own software), by adding a new layer on top of an existing image.

In my case the base image needs several things: a JVM to run the fat jar, plus Scala and SBT.

Again, DockerHub has your back here. Let's have a look at the Dockerfile for example 1.

```dockerfile
FROM hseeberger/scala-sbt
MAINTAINER sacha barber <sacha.barber@gmail.com>
ENV REFRESHED_AT 2017-07-02
ADD . /root
WORKDIR /root
EXPOSE 8080
ENTRYPOINT ["java", "-jar", "SimpleAkkaHttpService.jar"]
```

What is this saying?

Well, when you break it down it's actually quite digestible; all of these are standard Dockerfile instructions:

- FROM names the base image (Scala + SBT), in this case this one from DockerHub: https://hub.docker.com/r/hseeberger/scala-sbt/

- MAINTAINER names the author of the image (me)

- ENV sets the REFRESHED_AT environment variable to the date the image was last refreshed

- ADD copies the files from the current directory into /root in the image

- WORKDIR sets the working directory to /root

- EXPOSE documents that the container listens on port 8080 (the port our Akka HTTP app binds to); on its own it does not publish the port to the host, which is what the ports mapping in docker-compose.yml does

- ENTRYPOINT sets the command that runs when the container starts, in this case java running our fat jar

All of the examples have a VERY similar Dockerfile, so I will not cover it again; I just wanted to go through one in a bit of detail.

We also make use of another bit of the docker toolkit, namely another command line tool called "docker-compose". docker-compose is meant for assembling more and more elaborate interconnected setups that you wish to containerise.

By convention the docker-compose file is called "docker-compose.yml", which is the file name docker-compose looks for by default.

Here is the complete docker-compose.yml file for the 1st example

```yaml
webservice:
  build: .
  ports:
    - "8080:8080"
```

So what is this saying?

As before, if we take it step by step it is quite easy to understand. It names the Akka HTTP REST service "webservice", uses the Dockerfile in the current directory to build the image and run the service inside a container, and publishes port 8080 of the container on port 8080 of the host.
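The short ports syntax in a Compose file is HOST:CONTAINER, so "8080:8080" publishes container port 8080 on host port 8080; if only the container port is given, Docker picks an ephemeral host port. Here is a sketch of that parsing rule, with hypothetical names (PortMapping, Mapping, parse), that deliberately ignores the longer forms (IP prefix, port ranges, /udp suffix).

```scala
import scala.util.Try

object PortMapping {

  // hostPort is None when only the container port is given, in which case
  // Docker chooses an ephemeral port on the host.
  final case class Mapping(hostPort: Option[Int], containerPort: Int)

  // Accepts only whole numbers in the valid TCP port range.
  private def port(s: String): Option[Int] =
    Try(s.trim.toInt).toOption.filter(p => p >= 1 && p <= 65535)

  // Handles "CONTAINER" and "HOST:CONTAINER"; every other form returns None.
  def parse(spec: String): Option[Mapping] = spec.split(":") match {
    case Array(c)    => port(c).map(cp => Mapping(None, cp))
    case Array(h, c) => for (hp <- port(h); cp <- port(c)) yield Mapping(Some(hp), cp)
    case _           => None
  }
}
```

So "9090:8080" would make the same service reachable on http://localhost:9090 while it still listens on 8080 inside the container.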

If we run this command in a directory that contains the 3 files SimpleAkkaHttpService.jar, Dockerfile and docker-compose.yml

```shell
docker-compose up --build
```

We can then try the web site again to see if it works.


Ha it works.

I know what you are all thinking: that I just copied the image from above. Well, I did, but trust me, this does work.

Full instructions are in the README.md in the Git repo anyway.

Believe it or not with these few bits of knowledge we are able to build pretty elaborate setups, as we will see in the 3 examples below.

This is as above; we just used the 1st example to pave the way for the other examples. Next we will build upon what we have and add a dependency on MySQL.

This example builds upon what we have just done, the main difference being that this time we will be running an Akka HTTP REST API that talks to a SINGLE MySQL server and obtains some of the metadata that is available within that MySQL server. This introduces a few new Docker Compose concepts, namely:

- volumes

- environment variables

- linking

- multiple services starting in unison

Here is the actual code we are going to try to run.

```scala
package SAS

import akka.actor.ActorSystem
import akka.event.Logging
import akka.http.scaladsl.Http
import akka.http.scaladsl.server.Directives._
import akka.stream.ActorMaterializer
import com.typesafe.config.ConfigFactory
import java.sql.{ResultSet, Connection, DriverManager}

object DockerAkkaHttpMicroServiceUsingMySql {

  implicit val system = ActorSystem()
  implicit val executor = system.dispatcher
  implicit val materializer = ActorMaterializer()

  def main(args: Array[String]): Unit = {
    val config = ConfigFactory.load()
    val logger = Logging(system, getClass)

    def getSomeMySqlData(): String = {
      // "db" is the MySQL service name from docker-compose.yml
      val url = "jdbc:mysql://db:3306/mysql"
      val driver = "com.mysql.jdbc.Driver"
      val username = "root"
      val password = "sacha"
      var connection: Connection = null
      var returnVal = ""
      try {
        Class.forName(driver)
        connection = DriverManager.getConnection(url, username, password)
        val statement = connection.createStatement
        val rs = statement.executeQuery(
          "SELECT table_name, table_schema FROM INFORMATION_SCHEMA.TABLES WHERE TABLE_TYPE = 'BASE TABLE';")
        // Report up to 10 tables
        realize(rs).take(10).foreach { v =>
          val valuesList = v.values.toList
          val tableName = valuesList(0)
          val tableSchema = valuesList(1)
          returnVal = returnVal + s"tableName= $tableName, tableSchema= $tableSchema\r\n"
        }
      } catch {
        case e: Exception => e.printStackTrace()
      } finally {
        // connection is still null if getConnection failed, so guard the close
        if (connection != null) connection.close()
      }
      returnVal
    }

    // Turns the next row into a column-name -> value map, or None when no rows are left
    def buildMap(queryResult: ResultSet,
                 colNames: Seq[String]): Option[Map[String, Object]] =
      if (queryResult.next())
        Some(colNames.map(n => n -> queryResult.getObject(n)).toMap)
      else
        None

    // Reads every remaining row of the result set into a Vector of maps
    def realize(queryResult: ResultSet): Vector[Map[String, Object]] = {
      val md = queryResult.getMetaData
      val colNames = (1 to md.getColumnCount) map md.getColumnName
      Iterator.continually(buildMap(queryResult, colNames))
        .takeWhile(!_.isEmpty).map(_.get).toVector
    }

    val routes =
      get {
        pathSingleSlash {
          complete {
            getSomeMySqlData()
          }
        }
      }

    Http().bindAndHandle(routes,
      config.getString("http.address"),
      config.getInt("http.port"))
  }
}
```
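The buildMap/realize pair above turns a JDBC ResultSet into a Vector of maps by calling Iterator.continually until buildMap returns None. Here is the same idiom on a tiny hypothetical Cursor trait, so it can be run without a database; the Cursor trait and the fromSeq helper are made up to mimic ResultSet.next().

```scala
object CursorToVector {

  // Minimal stand-in for a JDBC ResultSet: next() moves to the next row and
  // says whether one exists; current returns that row.
  trait Cursor[A] {
    def next(): Boolean
    def current: A
  }

  def fromSeq[A](rows: Seq[A]): Cursor[A] = new Cursor[A] {
    private var index = -1
    def next(): Boolean = { index += 1; index < rows.length }
    def current: A = rows(index)
  }

  // Same shape as buildMap + realize: keep producing Options until the first
  // None, i.e. until next() reports that the rows have run out.
  def realize[A](cursor: Cursor[A]): Vector[A] =
    Iterator.continually(if (cursor.next()) Some(cursor.current) else None)
      .takeWhile(_.isDefined)
      .map(_.get)
      .toVector
}
```

One thing worth noticing: realize reads every row into the Vector before take(10) is applied in getSomeMySqlData. For a large result set you could call take(10) on the iterator before toVector instead.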

Notice that the connection to the MySQL database uses this line:

```scala
val url = "jdbc:mysql://db:3306/mysql"
```

Where does that come from? Well, if we examine the Docker Compose file, we can understand this. Here is the Docker Compose file for this example:

```yaml
version: '2'
services:
  db:
    image: mysql:latest
    volumes:
      - db_data:/var/lib/mysql
    restart: always
    environment:
      MYSQL_ROOT_PASSWORD: sacha
      MYSQL_USER: sacha
      MYSQL_PASSWORD: sacha
    ports:
      - "3306:3306"
  webservice:
    build: .
    ports:
      - "8080:8080"
    depends_on:
      - db
volumes:
  db_data:
```

There are several things to talk about here, namely

Volumes

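The "db" service (which uses the mysql image, again from DockerHub) mounts the named volume db_data at /var/lib/mysql, where MySQL stores its data files. Because db_data is declared under the top-level volumes key, the data outlives the container.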

Environment variables

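The "db" service also requires some environment variables to be set: the mysql image needs a root password setting (here MYSQL_ROOT_PASSWORD), and MYSQL_USER/MYSQL_PASSWORD create an additional user. We already saw how to set environment variables from the command line; the file above shows how to set them in a docker-compose.yml file. Note that the Scala code connects as root using the same password, sacha.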

Linking

As we already saw, this time we want to run not only the Akka HTTP REST API but also the MySQL database, each in its own container. We obviously want to connect to the MySQL database from the Akka HTTP code. We saw this in the Akka HTTP code base:

```scala
val url = "jdbc:mysql://db:3306/mysql"
```

Once the containers are up, the database is available under the service name "db" on the port it exposes, 3306 in this case, because Compose puts both services on a shared network where each service name resolves as a hostname. So we simply use that in our connection string. The depends_on entry in the docker-compose.yml file makes Compose start "db" before the Akka HTTP REST service. Be aware, though, that depends_on only controls start order: it does not wait until MySQL is actually ready to accept connections, so an early request may fail while MySQL is still initialising.
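Because depends_on does not wait for readiness, a common remedy is to retry the first connection for a while. Here is a minimal sketch of a generic retry with exponential backoff; Retry and withRetry are hypothetical names, not part of any library used in this article.

```scala
import scala.annotation.tailrec
import scala.util.{Failure, Success, Try}

object Retry {

  // Runs `attempt` up to `maxAttempts` times, sleeping between failures and
  // doubling the delay each time. Returns the first Success, or the last Failure.
  @tailrec
  def withRetry[A](maxAttempts: Int, delayMs: Long)(attempt: => A): Try[A] = {
    require(maxAttempts >= 1, "maxAttempts must be at least 1")
    Try(attempt) match {
      case success @ Success(_)                     => success
      case failure @ Failure(_) if maxAttempts == 1 => failure
      case Failure(_) =>
        Thread.sleep(delayMs)
        withRetry(maxAttempts - 1, delayMs * 2)(attempt)
    }
  }
}
```

Inside getSomeMySqlData the call could look like Retry.withRetry(5, 500)(DriverManager.getConnection(url, username, password)), which yields a Try[Connection] and waits at most 0.5 + 1 + 2 + 4 = 7.5 seconds in total between attempts before giving up.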

Multiple services starting in unison

This is the first time we have seen more than one service starting up. This time we have 2

- db : The MySQL service

- webservice : The Akka Http service

Each with their own setup for the container requirements they need.

As before we need to ensure that the Dockerfile/docker-compose.yaml and the SimpleAkkaHttpServiceWithMySql.jar (as specified in the Dockerfile and build.sbt files) are in the same directory.

Then issue this command

```shell
docker-compose up --build
```

If all goes well we should see something like this


Another thing we can now do is open another command line and run a command such as docker ps to examine the running containers.


Now that we are happy it's all up and running, we can just use Postman to test the REST API.

See how it grabs this metadata from the running MySQL database. Pretty cool, huh? We are now able to set up fairly complex software requirements; the topics I have just covered should go a good way towards easing your development woes. It has certainly worked out well for me.

The last example I wanted to cover in some ways just builds on what we have above in example 2. There is only one more new concept to cover, but it's a nice one. Before we go into that, I just wanted to let you know why I chose Apache Cassandra as the final example.

Apache Cassandra is a multi-node distributed database, where nodes may come and go from what is known as the "Cassandra ring". The ring is really a distributed hash map: each node owns a range of token (hash) values, and each row is stored on the node(s) whose range its partition key hashes into. Cassandra has one more interesting feature in that it uses a GOSSIP protocol so that every node learns about the state of every other node. Whether a write counts as really persisted then depends on how many replicas acknowledge it, which is set by the consistency level of the request (for example ONE or QUORUM).
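The "distributed hash map" idea can be sketched as a token ring. This is a simplified model, not Cassandra's code: real clusters hash keys with the Murmur3 partitioner over a 64-bit token range and usually give each node many virtual nodes. Here each node owns a single Int token, the first replica goes to the first node whose token is at or after the key's hash (wrapping round), and further replicas go to the next nodes clockwise, similar in spirit to SimpleStrategy. TokenRing and replicas are hypothetical names, and String.hashCode stands in for the partitioner.

```scala
import scala.collection.immutable.TreeMap

object TokenRing {

  // Returns up to `replicationFactor` distinct nodes for `key`, walking the ring
  // clockwise from the first token at or after hash(key).
  def replicas(ring: TreeMap[Int, String],
               key: String,
               replicationFactor: Int,
               hash: String => Int = _.hashCode): List[String] = {
    require(ring.nonEmpty, "the ring has no nodes")
    val h = hash(key)
    // TreeMap iterates in token order, so this split gives the clockwise walk
    val (atOrAfter, before) = ring.toList.partition { case (token, _) => token >= h }
    (atOrAfter ++ before).map(_._2).distinct.take(replicationFactor)
  }
}
```

Adding or removing a node only moves the keys in the token range next to it, which is what lets nodes come and go without reshuffling the whole data set.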

This image may help


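Some tables/queries that you design may ONLY work if you have enough nodes: for example, a QUORUM request against a keyspace with a replication factor of 3 needs at least 2 of its 3 replicas to be up. Replication factors are usually odd, so that a majority can be reached with the fewest nodes.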
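For a single datacenter, Cassandra's QUORUM consistency level needs floor(RF / 2) + 1 replicas to respond, where RF is the keyspace's replication factor (the keyspace created in the code below uses 3). A tiny sketch, where Quorum, quorum and tolerableFailures are hypothetical names, makes the arithmetic visible:

```scala
object Quorum {

  // QUORUM means a majority of the replicas: floor(rf / 2) + 1.
  def quorum(replicationFactor: Int): Int = {
    require(replicationFactor >= 1, "replication factor must be at least 1")
    replicationFactor / 2 + 1
  }

  // How many replicas of a row can be down while QUORUM requests still succeed.
  def tolerableFailures(replicationFactor: Int): Int =
    replicationFactor - quorum(replicationFactor)
}
```

Going from RF 3 to RF 4 raises the quorum from 2 to 3 but still tolerates only one replica being down, while RF 5 tolerates two.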

As before here is the code that we are trying to run for this example

```scala
package SAS

import akka.actor.ActorSystem
import akka.event.Logging
import akka.http.scaladsl.Http
import akka.http.scaladsl.server.Directives._
import akka.stream.ActorMaterializer
import com.datastax.driver.core.{Session, ResultSet, Cluster}
import com.typesafe.config.ConfigFactory
import scala.collection.JavaConversions._

object DockerAkkaHttpMicroServiceUsingCassandra {

  implicit val system = ActorSystem()
  implicit val executor = system.dispatcher
  implicit val materializer = ActorMaterializer()

  def main(args: Array[String]): Unit = {
    val config = ConfigFactory.load()
    val logger = Logging(system, getClass)

    def getSomeCassandraData(): String = {
      var cluster: Cluster = null
      var returnVal = "getSomeCassandraData\r\n"
      try {
        // "cassandra-1" is the service name from docker-compose.yml;
        // 9042 is Cassandra's native CQL port
        cluster = Cluster.builder()
          .addContactPoint("cassandra-1")
          .withPort(9042)
          .build()
        val session = cluster.connect()
        returnVal = returnVal + "Before keyspace\r\n"
        createKeySpace(session)
        returnVal = returnVal + "After keyspace\r\n"
        createTable(session)
        returnVal = returnVal + "After table\r\n"
        seedData(session)
        returnVal = returnVal + "After seeding\r\n"
        returnVal = returnVal + "\r\n"

        val rs = session.execute("select category, points, lastname from dockercassandra.cyclist_category")
        val iter = rs.iterator()
        while (iter.hasNext()) {
          // Start fetching the next page in the background before this one runs out
          if (rs.getAvailableWithoutFetching() == 100 && !rs.isFullyFetched())
            rs.fetchMoreResults()
          val row = iter.next()
          returnVal = returnVal + s"category= ${row.getString("category")}, " +
            s"points= ${row.getInt("points")}, lastname= ${row.getString("lastname")}\r\n"
        }
      } catch {
        case e: Exception =>
          e.printStackTrace()
          // printStackTrace() returns Unit, so report the message instead
          returnVal = returnVal + s"Error: ${e.getMessage}\r\n"
      } finally {
        if (cluster != null) cluster.close()
      }
      returnVal
    }

    // Unquoted CQL names are case-insensitive, so this keyspace is
    // referred to as dockercassandra below
    def createKeySpace(session: Session): Unit = {
      session.execute(
        """CREATE KEYSPACE IF NOT EXISTS DockerCassandra
          |WITH replication = {
          |  'class':'SimpleStrategy',
          |  'replication_factor' : 3
          |};""".stripMargin)
    }

    def createTable(session: Session): Unit = {
      session.execute(
        """CREATE TABLE IF NOT EXISTS dockercassandra.cyclist_category (
          |  category text,
          |  points int,
          |  lastname text,
          |  PRIMARY KEY (category, points))
          |WITH CLUSTERING ORDER BY (points DESC);""".stripMargin)
    }

    // Starts from an empty table each time, then inserts 4 sample rows
    def seedData(session: Session): Unit = {
      session.execute("TRUNCATE dockercassandra.cyclist_category;")
      session.execute("INSERT INTO dockercassandra.cyclist_category (category, points, lastname) VALUES ('cat1', 15, 'barber');")
      session.execute("INSERT INTO dockercassandra.cyclist_category (category, points, lastname) VALUES ('cat2', 25, 'smith');")
      session.execute("INSERT INTO dockercassandra.cyclist_category (category, points, lastname) VALUES ('cat3', 45, 'evans');")
      session.execute("INSERT INTO dockercassandra.cyclist_category (category, points, lastname) VALUES ('cat4', 65, 'harth');")
    }

    val routes =
      get {
        pathSingleSlash {
          complete {
            getSomeCassandraData()
          }
        }
      }

    Http().bindAndHandle(routes,
      config.getString("http.address"),
      config.getInt("http.port"))
  }
}
```

As before, thanks to what we now know about linking, we can use the "cassandra-1" service name as the contact point, along with Cassandra's native CQL port, 9042.

However, with such a potentially large database with many nodes, how would we even express that in a Docker Compose file? Surely we don't need to declare 5, 7 or 9 separate Cassandra services in the Docker Compose file, do we?

No, actually we do not; Docker has a nice helpful trick here. Let's look at the Docker Compose file first though, here it is:

```yaml
version: '2'
services:
  cassandra-1:
    image: cassandra:latest
    command: /bin/bash -c "echo ' -- Pausing to let system catch up ...' && sleep 10 && /docker-entrypoint.sh cassandra -f"
    expose:
      - 7000
      - 7001
      - 7199
      - 9042
      - 9160
    # volumes: # uncomment if you desire mounts, also uncomment cluster.sh
    #   - ./data/cassandra-1:/var/lib/cassandra:rw
  cassandra-N:
    image: cassandra:latest
    command: /bin/bash -c "echo ' -- Pausing to let system catch up ...' && sleep 30 && /docker-entrypoint.sh cassandra -f"
    environment:
      - CASSANDRA_SEEDS=cassandra-1
    links:
      - cassandra-1:cassandra-1
    expose:
      - 7000
      - 7001
      - 7199
      - 9042
      - 9160
    # volumes: # uncomment if you desire mounts, also uncomment cluster.sh
    #   - ./data/cassandra-2:/var/lib/cassandra:rw
  webservice:
    build: .
    ports:
      - "8080:8080"
    depends_on:
      - cassandra-1
```

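It is basically more of what we have already seen. The only difference is that we now have a new service, "cassandra-N", which links through to the main "cassandra-1" container and sets the Cassandra-specific environment variable CASSANDRA_SEEDS=cassandra-1, so that each new node contacts cassandra-1 as its seed node and joins the same ring. The different sleep times in the two commands give the seed node a head start before the other nodes try to join it. Note also that expose only makes these ports reachable by other containers; unlike ports, it does not publish them on the host.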

As before we need to ensure that the Dockerfile/docker-compose.yaml and the SimpleAkkaHttpServiceWithCassandra.jar (as specified in the Dockerfile and build.sbt files) are in the same directory.

Then issue this command

```shell
docker-compose up --build
```

It would all work out fine, as shown below


As can be seen when we run our old friend Postman:


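This is all good and works fine, but what if we want more nodes in our ring? Well, it turns out Docker Compose lets us do this too. We simply use this magic command instead (notice the "scale" part), which gives us a 5-node ring: cassandra-1 plus 4 cassandra-N nodes.

```shell
docker-compose scale webservice=1 cassandra-1=1 cassandra-N=4
```

In newer releases of Docker Compose the scale command is deprecated in favour of docker-compose up --scale cassandra-N=4.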


As always if you like what you see here, a vote/comment is most welcome