Validation is an important part of form design. It provides guidance for our users, and it encodes the business rules that govern the data our systems capture. Because it's such a key part of form creation, we need to test it carefully. A lot of the time, this testing is very manual. Does this sound familiar? You build a form. You add your validation logic. You fire up a browser. You fill in the form. Everything looks OK. No, wait. That rule doesn't work. Back to the code. Add a fix. Refresh the browser. Fill the whole form in again. Rinse, repeat.

That's a pretty long feedback cycle. Browser-based testing is important, but we don't need to do it throughout development. So what else can we do? Well, if we're using Fluent Validation, we can write unit tests. We can move all our thinking about logic and validation rules into our test code. If we capture all the rules and edge cases as tests, the code will almost write itself! Well, not quite. But by the time all our tests pass, we'll have a set of working validators, and we'll only need to check them in a browser once, at the end. Ready? Let's do this.

Simplify Testing With Templates

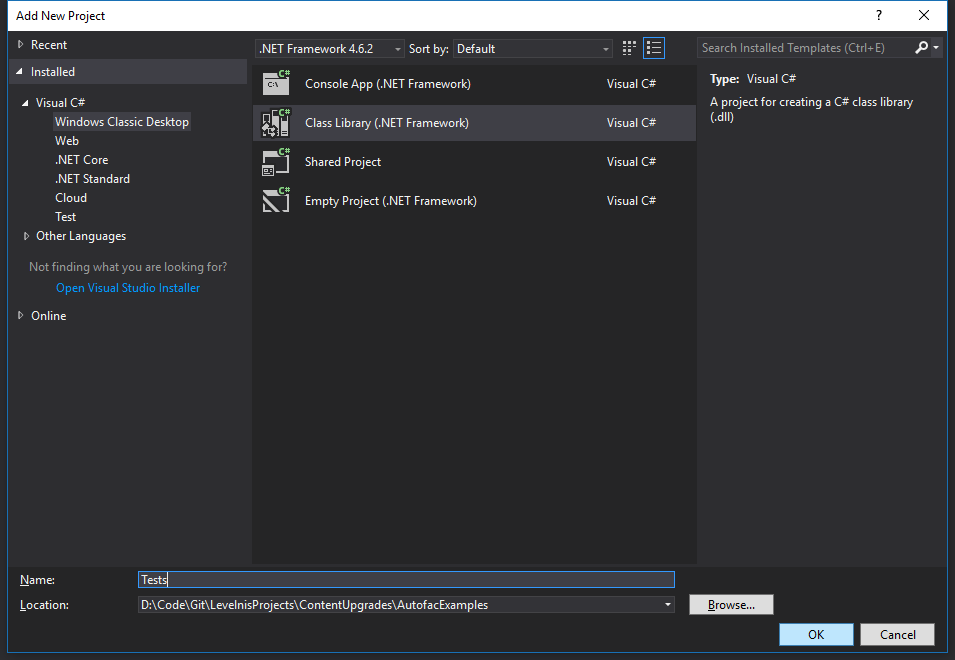

The first thing we need to do is create a separate unit test project. This allows us to mirror the namespaces within the code that we’re testing. We can organise our tests in a way that makes it easy to find them. We’re building on the code from the last article. If you need to get up and running, you can grab it from here: How To Master Complex Scenarios Using Fluent Validation.

Add a new class library project called Tests. Next, go into the project properties and adjust the Assembly Name and Default Namespace. I set mine to Levelnis.Learning.AutofacExamples.Tests to keep this project in sync with the others. Now we need to add NUnit so we can write tests. Open up the NuGet package manager and install NUnit into the Tests project.

We’re now ready to write some tests. To make it easier to create tests, we'll use a couple of templates. The first one is the base template that I use for all my unit tests. I first introduced this in an earlier article. I won’t explain it again, but you can read about it here: Unit Testing Controllers Using NUnit and NSubstitute.

namespace Levelnis.Learning.AutofacExamples.Tests
{
    using NUnit.Framework;

    [TestFixture]
    public abstract class TestTemplate<TContext>
    {
        // The object under test, created fresh before every test.
        protected virtual TContext Sut { get; private set; }

        [SetUp]
        public virtual void MainSetup()
        {
            Sut = EstablishContext();
        }

        [TearDown]
        public void Teardown()
        {
            TestCleanup();
        }

        protected abstract TContext EstablishContext();

        protected abstract void TestCleanup();
    }
}

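Next, we build on that with a ValidatorTestTemplate. It takes two type parameters, the validator and the model it validates, and passes the validator type through to TestTemplate as TContext. The validator type is constrained to inherit from AbstractValidator<TEntity> and to have a parameterless constructor, so the template can create it with new TValidator(). We've seen AbstractValidator before: it's the base type for Fluent Validation validators.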

namespace Levelnis.Learning.AutofacExamples.Tests
{
    using System.Collections.Generic;
    using System.Linq;
    using FluentValidation;
    using FluentValidation.Results;

    public abstract class ValidatorTestTemplate<TValidator, TEntity> : TestTemplate<TValidator>
        where TValidator : AbstractValidator<TEntity>, new()
    {
        protected TEntity Subject;
        protected ValidationResult Result;
        protected int ExpectedErrorCount;

        protected override TValidator EstablishContext()
        {
            return new TValidator();
        }

        // Pairs each property name (key) with the error message expected for it (value).
        protected static IDictionary<string, string> CreateErrors(IList<string> keys, IList<string> values)
        {
            var errors = new Dictionary<string, string>();
            for (var i = 0; i < keys.Count; i++)
            {
                errors.Add(keys[i], values[i]);
            }

            return errors;
        }

        // index identifies the test case in any failure message.
        protected void PerformValidationTest(TEntity subject, bool isValid, int index,
            int expectedErrorCount, IDictionary<string, string> errors)
        {
            Subject = subject;
            if (isValid)
            {
                ExpectedErrorCount = 0;
                ValidatorShouldBeValid(errors);
            }
            else
            {
                ExpectedErrorCount = expectedErrorCount;
                ValidatorShouldBeInvalid(index, errors);
            }
        }

        private void ValidatorShouldBeValid(ICollection<KeyValuePair<string, string>> errors)
        {
            Result = Sut.Validate(Subject);
            Result.IsValid.ShouldBeTrue();
            Result.Errors.Count.ShouldEqual(0);
            errors.ShouldBeNull();
            ExpectedErrorCount.ShouldEqual(0);
        }

        private void ValidatorShouldBeInvalid(int index, ICollection<KeyValuePair<string, string>> errors)
        {
            Result = Sut.Validate(Subject);
            Result.IsValid.ShouldBeFalse("Incorrect value for IsValid at index {0}", index);
            errors.Count.ShouldEqual(ExpectedErrorCount, "Incorrect error count at index {0}", index);
            Result.Errors.Count.ShouldEqual(ExpectedErrorCount, "Incorrect error count at index {0}", index);
            ExpectedErrorCount.ShouldBeGreaterThan(0, "Incorrect error count at index {0}", index);

            // Each expected property must have exactly one failure, carrying the expected message.
            foreach (var error in errors)
            {
                var failure = Result.Errors.Single(e => e.PropertyName == error.Key);
                failure.ErrorMessage.ShouldEqual(error.Value, "Incorrect error message at index {0}", index);
            }
        }
    }
}

I've written some extension methods to make the test code easier to read. There are a few libraries around that do this as well, but it's trivial to roll your own. Here they are:

namespace Levelnis.Learning.AutofacExamples.Tests
{
    using System;
    using NUnit.Framework;

    public static class AssertionExtensions
    {
        public static void ShouldBeFalse(this bool condition, string message, params object[] args)
        {
            Assert.That(condition, Is.False, message, args);
        }

        public static void ShouldBeGreaterThan(this IComparable arg1, IComparable arg2,
            string message, params object[] args)
        {
            Assert.That(arg1, Is.GreaterThan(arg2), message, args);
        }

        public static void ShouldBeNull(this object value)
        {
            Assert.That(value, Is.Null);
        }

        public static void ShouldBeTrue(this bool condition)
        {
            Assert.That(condition, Is.True);
        }

        public static void ShouldEqual<T>(this T source, T target)
        {
            Assert.That(source, Is.EqualTo(target));
        }

        public static void ShouldEqual<T>(this T source, T target, string message, params object[] args)
        {
            Assert.That(source, Is.EqualTo(target), message, args);
        }
    }
}

There are other methods you could add too (ShouldBeLessThan, ShouldBeInstanceOfType, ShouldBeNaN, ShouldContain to name but a few). I've included the full set in the download accompanying this article.
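If you want to add some of those extra methods yourself, here's a minimal sketch of how they might look, written against NUnit 3's constraint API in the same style as AssertionExtensions. These are my guesses, not the versions in the download, and the class name MoreAssertionExtensions is hypothetical; if you already have the download's versions, skip these to avoid ambiguous calls.

```csharp
namespace Levelnis.Learning.AutofacExamples.Tests
{
    using System;
    using System.Collections;
    using NUnit.Framework;

    // Hypothetical helpers in the same style as AssertionExtensions.
    public static class MoreAssertionExtensions
    {
        public static void ShouldBeLessThan(this IComparable arg1, IComparable arg2,
            string message, params object[] args)
        {
            Assert.That(arg1, Is.LessThan(arg2), message, args);
        }

        // Passes if any item in the collection equals the expected value.
        public static void ShouldContain(this IEnumerable collection, object expected)
        {
            Assert.That(collection, Has.Member(expected));
        }

        // Passes if the value is a T or derives from T.
        public static void ShouldBeInstanceOfType<T>(this object value)
        {
            Assert.That(value, Is.InstanceOf<T>());
        }

        public static void ShouldBeNaN(this double value)
        {
            Assert.That(value, Is.NaN);
        }
    }
}
```

They read the same way as the others, for example `new[] { 1, 2, 3 }.ShouldContain(2);` or `1.ShouldBeLessThan(2, "Incorrect value at index {0}", 1);`.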


So what's going on in the ValidatorTestTemplate? The key methods are CreateErrors and PerformValidationTest. We tell PerformValidationTest whether the subject should be valid or not, and it runs the appropriate checks. If our test is supposed to be valid but isn't, the test fails. Likewise, if it's supposed to be invalid but is actually valid, the test also fails. If we're testing an invalid scenario, we use CreateErrors to build a dictionary of the validation errors we expect to see, keyed by property name.
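For example, here's a sketch of a single hand-written test in which two rules fail at once. It assumes the RegisterFluentViewModel and RegisterFluentViewModelValidator shown below, and the five-argument PerformValidationTest(subject, isValid, index, expectedErrorCount, errors) call that the tests later in this article use. The fixture name, the test name and the "not-a-url" value are hypothetical.

```csharp
namespace Levelnis.Learning.AutofacExamples.Tests.Web.Validators
{
    using AutofacExamples.Web.CommandQuery.ViewModels;
    using AutofacExamples.Web.Validators;
    using NUnit.Framework;

    // Hypothetical fixture: one invalid scenario where Username and BlogUrl both fail.
    public class RegisterFluentViewModelValidatorCombinationTests :
        ValidatorTestTemplate<RegisterFluentViewModelValidator, RegisterFluentViewModel>
    {
        protected override void TestCleanup()
        {
        }

        [Test]
        public void GivenAValidator_WhenUsernameMissingAndUrlInvalid_ThenBothErrorsShouldBeReported()
        {
            var model = new RegisterFluentViewModel
            {
                Username = null,            // fails NotEmpty
                Email = "dave@test.com",
                EmailAgain = "dave@test.com",
                Password = "Easy123",
                PasswordAgain = "Easy123",
                BlogUrl = "not-a-url",      // passes NotEmpty, fails the URL check
                NoComment = true,
                OtherComments = "Here we have at least fifty characters of comments"
            };

            // Keys are property names; values are the exact messages from the validator.
            var errors = CreateErrors(
                new[] { "Username", "BlogUrl" },
                new[] { "Please enter your username", "Invalid URL. Please re-enter" });

            // Arguments: subject, isValid, index, expectedErrorCount, errors.
            PerformValidationTest(model, false, 1, 2, errors);
        }
    }
}
```

The expected count of 2 holds because each failing property has only one failing rule here, so the template's Single lookup finds exactly one failure per key.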

Test Quickly With TestCase and TestCaseSource

In a typical validator, there are many rules to implement. For completeness, we need a test for each rule. We may also want to test combinations of rules. What happens if all the rules fail? How about just the first two? That's a lot of tests! If only there were a way to make this easier. You know what's coming: of course there is.

As you've seen, we're using NUnit as our test framework. It has a great pair of features called TestCase and TestCaseSource. They're attributes that we apply to a test method, which lets us parameterise the method and pass inputs into it. We can create all our scenarios as test cases and use one test method to test the entire lot. Each test case shows up as a separate test in the unit test runner. I'll also show you a trick so you can tell exactly which test cases have failed. It means you can add new scenarios and test those edge cases with minimal effort. Let's see it in action.
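Before applying this to the validator, here's a minimal sketch of TestCase on its own. It parameterises one test over the same Uri.TryCreate(..., UriKind.Absolute, ...) check that the validator's BeAValidUrl method uses, so each URL shows up as its own test in the runner. The fixture and method names are hypothetical.

```csharp
namespace Levelnis.Learning.AutofacExamples.Tests
{
    using System;
    using NUnit.Framework;

    [TestFixture]
    public class UrlCheckTests
    {
        // Each TestCase attribute becomes a separate test in the runner; its
        // arguments are passed to the method's parameters in order.
        [TestCase("http://blogger.com/dave", true)]
        [TestCase("https://example.com", true)]
        [TestCase("blogger.com/dave", false)]
        [TestCase("", false)]
        public void AbsoluteUrlCheck_ShouldMatchExpectedResult(string input, bool expected)
        {
            Uri result;
            var isValid = Uri.TryCreate(input, UriKind.Absolute, out result);

            Assert.That(isValid, Is.EqualTo(expected));
        }
    }
}
```

Adding another scenario is a one-line change: another TestCase attribute.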

First of all, we need a validator. We'll use the one from the previous article as a base. Here it is:

namespace Levelnis.Learning.AutofacExamples.Web.Validators
{
    using System;
    using CommandQuery.ViewModels;
    using FluentValidation;
    using FluentValidation.Results;

    public class RegisterFluentViewModelValidator : AbstractValidator<RegisterFluentViewModel>
    {
        public RegisterFluentViewModelValidator()
        {
            RuleFor(m => m.Username)
                .NotEmpty().WithMessage("Please enter your username");

            RuleFor(m => m.Email)
                .NotEmpty().WithMessage("Please enter your email address")
                .EmailAddress().WithMessage("Please enter a valid email address")
                .Equal(m => m.EmailAgain)
                .WithMessage("Your email addresses don't match. Please check and re-enter.");

            RuleFor(m => m.EmailAgain)
                .NotEmpty().WithMessage("Please enter your email address again")
                .EmailAddress().WithMessage("Please enter a valid email address again")
                .Equal(m => m.Email)
                .WithMessage("Your email addresses don't match. Please check and re-enter.");

            RuleFor(m => m.Password)
                .NotEmpty().WithMessage("Please enter your password")
                .Equal(m => m.PasswordAgain)
                .WithMessage("Your passwords don't match. Please check and re-enter.")
                .Length(6, 20).WithMessage("Your password must be between 6 and 20 characters long.");

            RuleFor(m => m.PasswordAgain)
                .NotEmpty().WithMessage("Please enter your password again")
                .Equal(m => m.Password)
                .WithMessage("Your passwords don't match. Please check and re-enter.");

            RuleFor(m => m.BlogUrl)
                .NotEmpty().WithMessage("Please enter a URL")
                .Must(BeAValidUrl).WithMessage("Invalid URL. Please re-enter");

            RuleFor(m => m.Comments)
                .Must(BeValidComments)
                .WithMessage("Please enter at least 50 characters of comments. If you have nothing to say, please check the checkbox.");

            // Custom(...) is the older FluentValidation API for model-level rules;
            // later versions replace it with RuleFor(...).Custom(...).
            Custom(OtherCommentsMustBeValid);
        }

        private static ValidationFailure OtherCommentsMustBeValid(
            RegisterFluentViewModel model, ValidationContext<RegisterFluentViewModel> context)
        {
            if (model.NoOtherComment) return null;

            return model.OtherComments != null && model.OtherComments.Length >= 50
                ? null
                : new ValidationFailure("OtherComments",
                    "Please enter at least 50 characters of comments. If you have nothing to say, please check the checkbox.");
        }

        private static bool BeValidComments(RegisterFluentViewModel model, string comments)
        {
            if (model.NoComment) return true;

            return comments != null && comments.Length >= 50;
        }

        private static bool BeAValidUrl(string arg)
        {
            Uri result;
            return Uri.TryCreate(arg, UriKind.Absolute, out result);
        }
    }
}

Now let's see the tests in action. I'll show you both TestCase and TestCaseSource so you can see the difference. We'll build the tests up slowly, so you can see things take shape. Let's begin at the beginning. The first validation rule is that the username must not be empty. Let's create a new test fixture and test that rule.

Remember how we're trying to keep our test code in sync with our production code? Our validator lives in Web/Validators, so we'll create the same folder structure in our test project. I can't stress enough how important this is. It isn't a big issue when we've got 1 or 2 tests. But what about when we've got 500? 5,000? 20,000? It can easily become a maintenance nightmare, so start with good habits. A mirrored structure also helps us spot areas where we're missing tests.

Add a Web folder to your Tests project and a Validators folder inside that, mirroring the Web project's namespace structure. Now add a new class called RegisterFluentViewModelValidatorTests. It inherits from ValidatorTestTemplate, which needs the validator and model types as generic arguments. I find it makes for cleaner tests if I split the valid and invalid cases into separate test methods, so let's do that.

namespace Levelnis.Learning.AutofacExamples.Tests.Web.Validators
{
    using AutofacExamples.Web.CommandQuery.ViewModels;
    using AutofacExamples.Web.Validators;
    using NUnit.Framework;

    public class RegisterFluentViewModelValidatorTests :
        ValidatorTestTemplate<RegisterFluentViewModelValidator, RegisterFluentViewModel>
    {
        private const string UsernameKey = "Username";
        private const string UsernameError = "Please enter your username";

        protected override void TestCleanup()
        {
        }

        [Test]
        public void GivenARegisterFluentViewModelValidator_WhenValid_ThenTheValidatorShouldPassAllRules()
        {
            var model = new RegisterFluentViewModel
            {
                Username = "dave",
                Email = "dave@test.com",
                EmailAgain = "dave@test.com",
                Password = "Easy123",
                PasswordAgain = "Easy123",
                BlogUrl = "http://blogger.com/dave",
                NoComment = true,
                OtherComments = "Here we have at least fifty characters of comments"
            };

            // Arguments: subject, isValid, index, expectedErrorCount, errors (null when valid).
            PerformValidationTest(model, true, 1, 0, null);
        }

        [Test]
        [TestCase(1, null, "dave@test.com", "dave@test.com", "Easy123", "Easy123",
            "http://blogger.com/dave", "", true,
            "Here we have at least fifty characters of comments",
            UsernameKey, UsernameError)]
        public void GivenARegisterFluentViewModelValidator_WhenInvalid_ThenTheValidatorShouldFailWithExpectedErrors(
            int index, string username, string email, string emailAgain,
            string password, string passwordAgain, string blogUrl,
            string comments, bool noComment, string otherComments,
            string errorKey, string errorMessage)
        {
            var model = new RegisterFluentViewModel
            {
                Username = username,
                Email = email,
                EmailAgain = emailAgain,
                Password = password,
                PasswordAgain = passwordAgain,
                BlogUrl = blogUrl,
                Comments = comments,
                NoComment = noComment,
                OtherComments = otherComments
            };

            var errors = CreateErrors(new[] { errorKey }, new[] { errorMessage });
            PerformValidationTest(model, false, index, 1, errors);
        }
    }
}

If you've done any unit testing before, the first thing you'll notice is the parameters on the test method. Their order must match the order of the arguments in the TestCase attribute above. You won't get a compile error if that's wrong; you'll just get strange test behaviour, so be careful to get that right.

The next thing to notice in the invalid test is the trick I mentioned earlier. I pass an index in with each test case, which lets me identify each one in the failure messages I generate. Notice that the template includes the index in each of its assertion messages.
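As an aside, if you're on NUnit 3 or later, TestCaseData offers a built-in alternative to the index trick: SetName replaces the generated test name, so a failing case is identified by a description. This is a different technique from the one this article uses, shown as a sketch on a hypothetical cut-down SignUp model and SignUpValidator rather than the real RegisterFluentViewModel; it also uses nameof, which needs C# 6.

```csharp
namespace Levelnis.Learning.AutofacExamples.Tests
{
    using System.Collections.Generic;
    using System.Linq;
    using FluentValidation;
    using NUnit.Framework;

    // Hypothetical cut-down model and validator so the example stands alone.
    public class SignUp
    {
        public string Username { get; set; }
    }

    public class SignUpValidator : AbstractValidator<SignUp>
    {
        public SignUpValidator()
        {
            RuleFor(m => m.Username).NotEmpty().WithMessage("Please enter your username");
        }
    }

    [TestFixture]
    public class SignUpValidatorTests
    {
        // SetName gives each case a descriptive name in the test runner,
        // in place of the name NUnit would generate from the arguments.
        private static IEnumerable<TestCaseData> InvalidUsernames()
        {
            yield return new TestCaseData((string)null).SetName("Username is null");
            yield return new TestCaseData("").SetName("Username is empty");
            yield return new TestCaseData("   ").SetName("Username is whitespace");
        }

        [TestCaseSource(nameof(InvalidUsernames))]
        public void InvalidUsername_ShouldReportTheUsernameError(string username)
        {
            var result = new SignUpValidator().Validate(new SignUp { Username = username });

            Assert.That(result.IsValid, Is.False);
            Assert.That(result.Errors.Single().ErrorMessage, Is.EqualTo("Please enter your username"));
        }
    }
}
```

The trade-off is that names live with the data rather than in the assertion messages, so the template's messages no longer need an index.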

The other thing to notice is the expected errors argument. If I'm expecting everything to be valid, I pass in null. If I'm expecting failures, I pass in a dictionary of keys and values: each key is the name of the property being validated, and each value is the error message I expect for it.

There’s a big shortcoming with using TestCase. Each test case is declared as an attribute above the test. It accepts a params array of objects. Each of these objects must be a compile-time constant. What does that mean? Put simply, we can’t pass in a dictionary. If we want to check for multiple errors within our test, we need to pass in a dictionary of error keys and messages. How can we do that? With TestCaseSource.
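To make the compile-time constant restriction concrete, here's a minimal sketch (the fixture and method names are hypothetical). Literals and const fields such as UsernameKey are accepted as TestCase arguments; a dictionary isn't, because it's only created at run time.

```csharp
namespace Levelnis.Learning.AutofacExamples.Tests
{
    using NUnit.Framework;

    [TestFixture]
    public class AttributeArgumentExamples
    {
        private const string UsernameKey = "Username";

        // Compiles: literals and const fields are compile-time constants.
        [TestCase(1, UsernameKey, "Please enter your username")]
        public void ConstantArguments_AreAccepted(int index, string key, string message)
        {
            Assert.That(index, Is.EqualTo(1));
            Assert.That(key, Is.EqualTo("Username"));
            Assert.That(message, Is.EqualTo("Please enter your username"));
        }

        // Would not compile (error CS0182): a Dictionary is created at run time,
        // so it can't be used as an attribute argument.
        // [TestCase(1, new Dictionary<string, string> { { "Username", "Please enter your username" } })]
        // public void DictionaryArgument_IsRejected(int index, IDictionary<string, string> errors) { }
    }
}
```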

TestCaseSource to the Rescue

TestCaseSource is similar to TestCase, except that you create all your test cases together, in an array of object arrays, and point the attribute at that array by name. Because the array is built at run time, you can pass any values you like into the test method. Let's see it in action with the same test as before:

namespace Levelnis.Learning.AutofacExamples.Tests.Web.Validators
{
    using System.Collections.Generic;
    using AutofacExamples.Web.CommandQuery.ViewModels;
    using AutofacExamples.Web.Validators;
    using NUnit.Framework;

    public class RegisterFluentViewModelValidatorTests :
        ValidatorTestTemplate<RegisterFluentViewModelValidator, RegisterFluentViewModel>
    {
        private const string UsernameKey = "Username";
        private const string UsernameError = "Please enter your username";

        // Each inner array holds the arguments for one test case, in parameter order.
        private static readonly object[] validatorInputs =
        {
            new object[]
            {
                1, null, "dave@test.com", "dave@test.com", "Easy123", "Easy123",
                "http://blogger.com/dave", "", true,
                "Here we have at least fifty characters of comments",
                CreateErrors(new[] { UsernameKey }, new[] { UsernameError })
            }
        };

        protected override void TestCleanup()
        {
        }

        [Test, TestCaseSource("validatorInputs")]
        public void GivenARegisterFluentViewModelValidator_WhenInvalid_ThenTheValidatorShouldFailWithExpectedErrors(
            int index, string username, string email, string emailAgain,
            string password, string passwordAgain, string blogUrl, string comments,
            bool noComment, string otherComments, IDictionary<string, string> errors)
        {
            var model = new RegisterFluentViewModel
            {
                Username = username,
                Email = email,
                EmailAgain = emailAgain,
                Password = password,
                PasswordAgain = passwordAgain,
                BlogUrl = blogUrl,
                Comments = comments,
                NoComment = noComment,
                OtherComments = otherComments
            };

            PerformValidationTest(model, false, index, errors.Count, errors);
        }
    }
}

We can easily add test cases by adding another object array to our validatorInputs source. Here are a couple more examples:

// These constants sit alongside UsernameKey and UsernameError in the test class;
// their values match the messages in RegisterFluentViewModelValidator.
private const string EmailKey = "Email";
private const string MissingEmailError = "Please enter your email address";
private const string InvalidEmailError = "Please enter a valid email address";

private static readonly object[] validatorInputs =
{
    new object[]
    {
        1, null, "dave@test.com", "dave@test.com", "Easy123", "Easy123",
        "http://blogger.com/dave", "", true,
        "Here we have at least fifty characters of comments",
        CreateErrors(new[] { UsernameKey }, new[] { UsernameError })
    },
    new object[]
    {
        2, "dave123", null, "dave@test.com", "Easy123", "Easy123",
        "http://blogger.com/dave", "", true,
        "Here we have at least fifty characters of comments",
        CreateErrors(new[] { EmailKey }, new[] { MissingEmailError })
    },
    new object[]
    {
        3, "dave123", "INVALID_EMAIL", "dave@test.com", "Easy123", "Easy123",
        "http://blogger.com/dave", "", true,
        "Here we have at least fifty characters of comments",
        CreateErrors(new[] { EmailKey }, new[] { InvalidEmailError })
    }
};

Hang on a second though, there are a couple of problems here! Our 2 new tests fail: each reports 2 errors instead of 1. Why is that? Well, if we look back at the validator rules, we've got quite a few relating to email. The problem is that we check that Email and EmailAgain match, but we define that rule twice, once on each property. In reality, we only want to make that check once. We can remove the Equal rule from EmailAgain, which will make our tests pass. Here's how that rule looks now:

RuleFor(m => m.EmailAgain)
    .NotEmpty().WithMessage("Please enter your email address again")
    .EmailAddress().WithMessage("Please enter a valid email address again");
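One assumption is worth making explicit. The "2 errors instead of 1" counts above are what you'd see if each property stops validating at its first failure, for example with a cascade mode of StopOnFirstFailure configured somewhere this article doesn't show. With FluentValidation's default cascade mode, Continue, every rule in a chain runs, so a null Email would fail both NotEmpty and Equal and could still report two errors after this fix. If that's what you see, here's a sketch that sets the cascade mode on the Email rule. It uses a hypothetical cut-down EmailPair model, and it assumes a FluentValidation version that still has CascadeMode.StopOnFirstFailure; newer versions replace it with CascadeMode.Stop.

```csharp
namespace Levelnis.Learning.AutofacExamples.Tests
{
    using FluentValidation;

    // Hypothetical cut-down model with just the two email fields.
    public class EmailPair
    {
        public string Email { get; set; }
        public string EmailAgain { get; set; }
    }

    public class EmailPairValidator : AbstractValidator<EmailPair>
    {
        public EmailPairValidator()
        {
            // Once one rule on Email fails, the rest of this chain is skipped,
            // so Email reports at most one error.
            RuleFor(m => m.Email)
                .Cascade(CascadeMode.StopOnFirstFailure)
                .NotEmpty().WithMessage("Please enter your email address")
                .EmailAddress().WithMessage("Please enter a valid email address")
                .Equal(m => m.EmailAgain)
                .WithMessage("Your email addresses don't match. Please check and re-enter.");

            // The match check lives only on Email, as in the fix above.
            RuleFor(m => m.EmailAgain)
                .NotEmpty().WithMessage("Please enter your email address again")
                .EmailAddress().WithMessage("Please enter a valid email address again");
        }
    }
}
```

With this in place, a null Email paired with a valid EmailAgain produces a single "Please enter your email address" error.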

That's given you plenty of food for thought. If you're not already writing unit tests, I hope this gives you a bit of confidence to give it a try. Let me know if you do in the comments below!

View the original article.