Introduction

This article is a practical guide on using Intel® Threading Building Blocks (TBB) and OpenMP libraries for C++ based on the example of delivering parallel scalable code that implements Burrows-Wheeler Transformation (BWT) algorithm.

The following article introduces the basic ideas of delivering a parallel code implementing BWT encoding/decoding algorithm that can be perfectly scaled under Amdahl’s law, and, thus, can perform faster by leveraging the modern multi-core Intel® CPUs such as Intel® Core™ i7 and Intel® Xeon™ E5 processors family as well as the newest Intel® Xeon™ Phi co-processors.

Particularly, we’ll discuss about such aspects of parallel programming as tight loop parallelization using Intel TBB’s library function templates, multitasking to concurrently execute the fragments of sequential code that cannot be run in parallel. As well, we’ll provide the explanation of using the concept of nested parallelism to optimize the performance of nested loops, specifically how to collapse the execution of one or more nested loops into a single recursive parallel process. Finally, we’ll discuss about using OpenMP’s parallel directive construct the using of which allows to workshare the entire encoding/decoding process between multiple hardware threads, that, in turn, allows to optimize the process of encoding/decoding of typically large amount of data.

In this article…

At the very beginning of this article, we’ll take a closer look at the algorithm itself and its computational complexity. Further, we’ll discuss about the fragments of sequential code in C++ that perform the actual encoding/decoding and provide the detailed analysis of those fragments of code to determine the basic performance optimization hotspots. During the discussion, we’ll explain how to use the specific OpenMP’s parallel directive constructs and Intel® TBB library function templates to transform the sequential code into parallel, and, thus, to drastically improve the performance of the encoding/decoding process.

The topics that will be discussed further in this article include:

- A single tight loop parallelization using Intel® TBB’s template functions as well as how to properly “collapse” two or more nested parallel loops to provide the multidimensional data processing performance speed-up;

- Performing the execution of two or more independent sequential tasks in parallel with Intel® TBB’s task group functions used to accelerate the performance of the fragments of sequential code that cannot be perfectly parallelized;

- Threads synchronization to avoid the race condition and data dependency issues that might be incurred by the parallel code being created. We’ll discuss about such synchronization aspects as using threads isolation to provide synchronization of the collapsed nested loop parallel execution. During the discussion, we’ll compare the either threads synchronization provided by the either Intel® TBB or OpenMP library;

Besides the aspects of using Intel® TBB’s template functions to implement parallel loops, in this article, we’ll also find out how to use specific OpenMP library’s parallel directive constructs to perfectly workshare the entire process of encoding/decoding of the large datasets among all CPU’s threads based on the partition-merge algorithm. The following algorithm is basically used to distribute iterations of a single loop between multiple CPU’s threads running in it parallel, which, in turn, provides the significant performance acceleration gain compared to the sequential execution of the same loop, that performs the actual encoding/decoding.

Finally, at the end of this article, we’ll provide a performance analysis and assessment of the following parallel code using the either Intel® VTune™ Amplifier XE tool. We’ll compare the performance of the parallel code execution while encoding/decoding datasets of different sizes.

Background

Burrows-Wheeler Transformation (BWT)

Burrows-Wheeler transformation (BWT) is a data encoding/decoding algorithm used as an essential part of many data compression algorithms such as bzip2. The following algorithm was initially proposed by Michael Burrows and David Wheeler in 1994. The main idea of the following algorithm is that we actually perform a block-sorting of a certain string of characters to produce an output easy to compress by using, for example, run-length encoding (RLE).

Encoding

The process of string encoding using BWT-algorithm is mainly based on performing a lexicographical permutation of characters from the original input string. Normally, the following process is very simple and can be formulated as follows:

- Create a resulting set that consists of n strings, in which strings are all possible rotations of the original input string;

- Sort the resulting set of strings lexicographically;

- Create an output string from the characters located at the final position of each string in the resulting set;

To perform the actual encoding using BWT transformation algorithm, we initially must create a resulting set of strings. Each string in the resulting set can be obtained as the result of performing rotation of the original input string right by k – characters (k < n), where k – the position of a new permuted string in the given resulting set.

The string rotation itself is a circular shift operation during which we actually retrieve a character at the last position in the given input string and assign it to the temporary variable: (t <- string[n]). After that, we perform a simple linear shift of characters by moving each character to the next position in the given string:

foreach (k <- 0 downto (n-1)) {string[k + 1] <- string[k];}

Since the rest of other characters were successfully moved by one position right, we simply assign the last character from the temporary variable at the first position in the given string: (string[0] <- t). To rotate an input string by k positions right, we must iteratively repeat the following process discussed above k – times, until we’ve obtained a permuted string in which all characters are rotated by k - positions relative to their original location in the input string.

However, there’s one important algorithm-level optimization of the string rotation that allows to drastically improve the performance of the encoding process. To obtain a rotated string we normally no longer need to perform a complex rotation of the original input string discussed above. Either, it’s not necessary to iteratively repeat the following process the number of times to find a specific rotation of the original input string.

Instead, to rotate the input string by k positions right, we need first to copy k last characters from the original input string starting at the position that exactly corresponds to the number of positions by which the original string should be rotated. After that we’ll need to assign those characters being copied at the beginning of a newly created string starting at the position of the first character:

foreach (i <- k to n) {new_string[i – k] <- string[i];}

After that, we’ll simply need to copy the first k characters from the original input string to a newly created starting at the position k, that exactly corresponds to the number of positions by which the original string must be rotated.

foreach (j <- 0 to k) {new_string[j + k] <- string[j];}

The second essential phase of the BWT-algorithm is sorting the resulting set of strings in the lexicographical order, that can be done by using the most of existing sorting algorithms such as famous quicksort and merge-sort. By sorting the resulting set of strings in the lexicographical order we actually obtain the permuted sequence of characters of the original input string.

Finally, since we’ve obtained the ordered resulting set of strings, we normally need to retrieve a character at the last position of each string in the given resulting set and append it to the output that finally will contain the encoded string.

The example below illustrates the BWT encoding process, during which we’re obtaining the resulting set of permuted strings by rotating the original input string right by the number of characters that exactly corresponds to the position of the current permuted string in the following resulting set (we will use the null-terminating character ‘\0’ to indicate the final position in the string to be encoded):

Input String -> BROWSE\0 0

----------------------------------

\0BROWSE 1

E\0BROWS 2

SE\0BROW 3

WSE\0BRO 4

OWSE\0BR 5

ROWSE\0B 6

The null-terminating character will further be used by decoding algorithm to locate a string in the given resulting set that contains the following character in its final position. The following string will exactly match the decoded original input string.

The second essential phase of the BWT-algorithm is sorting the resulting set of strings in the lexicographical order, which can be done by using the most of existing sorting algorithms such as famous quicksort and mergesort. By sorting the resulting set of strings in the lexicographical order we actually obtain the permuted sequence of characters of the original input string:

Input String -> BROWSE\0 0 -> \0BROWSE 1

---------------------------------------------------------

\0BROWSE 1 BROWSE\0 0

E\0BROWS 2 E\0BROWS 2

SE\0BROW 3 OWSE\0BR 5

WSE\0BRO 4 ROWSE\0B 6

OWSE\0BR 5 SE\0BROW 3

ROWSE\0B 6 WSE\0BRO 4

Finally, since we’ve obtained the resulting set of strings ordered during the previous step of encoding, we normally need to retrieve a character at the last position of each string in the given resulting set and append it to the output that will contain the encoded string as it’s shown in the example below:

Input String -> \0BROWSE 0 -> \0BROWSE

------------------------------------------------

BROWSE\0 1 BROWSE\0

E\0BROWS 2 E\0BROWS

OWSE\0BR 3 OWSE\0BR

ROWSE\0B 4 ROWSE\0B

SE\0BROW 5 SE\0BROW

WSE\0BRO 6 WSE\0BRO

---------------------------------------------

Output -> E\0SRBWO

The following fragment of code in C++ listed below implements the BWT encoding algorithm:

void bwt_encode(char* string, size_t length, char* output)

{

BWT* bwt = (BWT*)malloc(sizeof(BWT) * length);

bwt[0].string = (char*)malloc(length);

memset((void*)bwt[0].string, 0x00, length);

memcpy((void*)bwt[0].string, (const void*)string, length);

for (size_t row = 1; row < length; row++)

{

bwt[row].string = (char*)malloc(length);

memset((void*)bwt[row].string, 0x00, length);

for (size_t index = length - row; index < length; index++)

bwt[row].string[index - (length - row)] = string[index];

for (size_t index = 0; index < length - row; index++)

bwt[row].string[index + row] = string[index];

}

qsort((void*)bwt, length, sizeof(BWT), cmp_proc);

for (size_t row = 0; row < length; row++)

output[row] = bwt[row].string[length - 1];

output[length] = '\0';

for (size_t index = 0; index < length; index++)

free(bwt[index].string);

free(bwt);

}

The computational complexity of the full encoding algorithm can be estimated as and is proportional to p = O(n^2 x log2(n)). In this case, the estimation of the overall complexity of BWT encoding does not include the actual computational complexity of sorting. The complexity of sorting algorithm used is an overhead which value can be simplified for either encoding or decoding process.

Decoding

To decode the encoded original input string, we’ll use the inverse BWT-algorithm that has the following steps and can be formulated as follows:

- For the string to be decoded, containing n – characters, create a resulting set of n empty strings;

- Retrieve each character of the following string and insert it before the first character in each string of the given resulting set;

- Sort the resulting set of strings lexicographically;

- Repeat steps (2,3) n – times, where n – the number of characters in the string to be decoded;

- Perform the linear search to locate a string in the current resulting set, containing the null-terminated character at its final position;

- Copy the characters of the string that has been obtained at the previous step to the output string;

As we’ve already noticed, the BWT decoding algorithm is somewhat different. The first what we need to do is to create a resulting set of n empty strings:

foreach (k <- 0 to n) { strings[k] <- {0};}

After that, we need to retrieve the characters of the string to be decoded and insert each character at the position Before the first character of each string in the current resulting set:

foreach (k <- 0 to n) { strings[k][0] <- output[k];}

In the other words, we’ll simply assign the entire string to be decoded before the first column of the given resulting set. Then, we, obviously, must sort our resulting set in lexicographical order. We normally iteratively repeat the following process n – times for each position of character in the string to be decoded. Finally, we must perform the trivial linear search to find a string containing the null-terminated character at its final position. Obviously, that, the following string is the decoded original input string the characters of which must be copied to the output string.

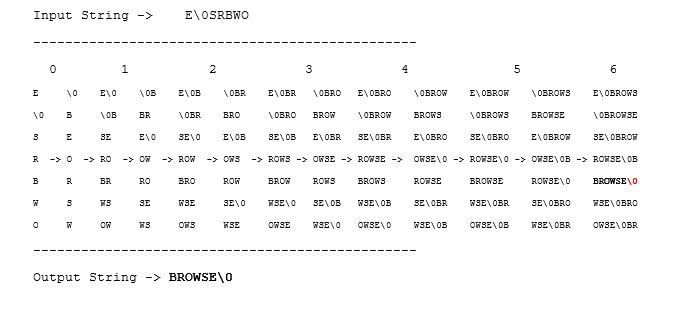

The example below illustrates the process of creating the resulting set of strings based on using the inverse BWT algorithm:

The code fragment in C++ below illustrates the implementation of the BWT decoding algorithm:

void bwt_decode(char* string, size_t length, char* output)

{

BWT* bwt = (BWT*)malloc(sizeof(BWT) * length);

for (size_t row = 0; row < length; row++)

{

bwt[row].string = (char*)malloc(length);

memset((void*)bwt[row].string, 0x00, length);

}

for (size_t index = 0; index < length; index++)

{

for (size_t row = 0; row < length; row++)

for (size_t col = 0; col < length - 1; col++)

{

size_t rev_first = length - col - 2;

size_t rev_second = length - col - 1;

bwt[row].string[rev_second] = bwt[row].string[rev_first];

}

for (size_t row = 0; row < length; row++)

bwt[row].string[0] = string[row];

qsort((void*)bwt, length, sizeof(BWT), cmp_proc);

}

for (size_t index = 0; index < length; index++)

if (bwt[index].string[length - 1] == '\0')

memcpy((void*)output, (const void*)bwt[index].string, length);

output[length] = '\0';

for (size_t index = 0; index < length; index++)

free(bwt[index].string);

free(bwt);

}

Similar to the encoding algorithm discussed above, BWT decoding has the number of algorithm-level optimizations such as using suffix arrays or arrays of pointers to each permuted string in the resulting set. An array of suffixes created by the encoder is passed along with the encoded string to the decoder. This, in turn, allows to simplify the process of decoding since it’s not necessary to perform the complex inverse transformation in the decoder. However, the using of suffix arrays makes the data less compressible when BWT transformation is performed as a part of a data compression algorithm.

The computational complexity of the BWT decoding algorithm is higher than the complexity of the encoding process and can be originally estimated as p = O(N^3 + N^2 + 2N). Obviously, that, the BWT decoding algorithm has a very high computational complexity compared to the process of encoding.

Performance Optimization

Before we begin the discussion how to optimize the performance of BWT transformation algorithm using those mentioned above multithreaded libraries, let’s first take closer look at the fragments of sequential code listed above to determine the basic performance optimization hotspots in the fragments of code that implement the either encoding and decoding process.

Tight Loop Parallelization

As we’ve already noticed, the very first optimization hotspot of the code that implements the encoding algorithm is the two sequential loops that perform the actual rotation of the input string during each iteration of the main loop. According to the nature of string rotation algorithm, the parallel execution of the following loops does not normally incur the data flow dependency or race condition issues, which makes it possible to perfectly parallelize the execution of those loops by performing the either tight loop parallelization. Also, it’s possible to execute the fragments of code that implement those subsequent loops in parallel as the two independent tasks.

To execute the iterations of the following loops in parallel we’ll basically use the Intel® TBB library’s parallel_for template function. This function provides the basic mechanism to implement the tight loop parallelization, during which, particular iterations of the sequential loop are executed concurrently by a team of threads:

tbb::parallel_for(tbb::blocked_range<size_t>(length - row, length),

[&](const tbb::blocked_range<size_t> &r) {

for (size_t index = r.begin(); index != r.end(); index++)

bwt[row].string[index - (length - row)] = string[index];

});

tbb::parallel_for(tbb::blocked_range<size_t>(0, length - row),

[&](const tbb::blocked_range<size_t> &r) {

for (size_t index = r.begin(); index != r.end(); index++)

bwt[row].string[index + row] = string[index];

});

The parallel_for template function accepts the object of the blocked_range template class as the first argument. The object of this class is basically used to define the range that can be split recursively during the parallel loop execution. In the other words, by using this template class we basically define the ranges of values assigned to the loop counter variable during each loop iteration. Those ranges are typically declared by assigning those values to the first and second argument of the blocked_range constructor. The second argument of the following template function is the lambda expression that defines the code being executed in parallel. The argument of the following lambda expression is the reference to the same blocked_range class object, that is used to access the lower and upper bound values of the loop counter variable index, defined by the following blocked range. Further, we’ll use the following object to access the begin() and end() methods of the blocked range class to retrieve those lower and upper bound values inside the code of the lambda expression.

Obviously, that, the code defined in the lambda expression implements the loop, iterations of which are executed concurrently for each value of the index variable, by a team of independent threads. During each iteration, we’re executing the code that copies of a particular character from the original input string to a specific position in the newly created string.

According to the best practices of parallel programming using Intel®TBB library, we normally use the parallel_for template function whenever we need to concurrently execute the iterations of specific loops in parallel performing tight loop parallelization.

Parallel Tasks Execution

To optimize the performance of those subsequent loops discussed above, we’ll use the Intel®TBB’s task_group class, the methods of which are used to spawn the execution of those subsequent loops as two independent parallel tasks. To do this we normally need to declare task_group class object. Further, we’ll invoke the method run, that accepts the only one argument – a reference to an object of the task_handle<Func> template class. According to the Intel®TBB® library template functions intrinsic we’ll use C++ lambda expressions wherever we need to define the fragment of code, the parallel execution of which is performed by using those library template functions. In this case, the lambda expression will enclose the fragments of code that call the parallel_for template function to perform the parallel execution of each particular loop that actually performs the original string rotation.

To spawn each parallel task, we perform an asynchronous call the method run. In this particular case, the following method is called twice for each fragment of code that invokes the specific parallel loop execution. After calling this method we need provide a synchronization of those parallel tasks being executed. For that purpose, we need to call wait method, that normally suspends the running of code until each parallel task has completed its execution:

tbb::task_group g;

g.run([&] {

tbb::parallel_for(tbb::blocked_range<size_t>(length - row, length),

[&](const tbb::blocked_range<size_t> &r) {

for (size_t index = r.begin(); index != r.end(); index++)

bwt[row].string[index - (length - row)] = string[index];

});

});

g.run([&] {

tbb::parallel_for(tbb::blocked_range<size_t>(0, length - row),

[&](const tbb::blocked_range<size_t> &r) {

for (size_t index = r.begin(); index != r.end(); index++)

bwt[row].string[index + row] = string[index];

});

});

g.wait();

Nested Parallelism

While performing parallel optimization of the code listed above, we’ll basically deal with parallelization of several nested loops. In this particular case, the parallel loops that perform the actual string rotation are executed within each iteration of the main loop. Obviously, that, the main loop is another important optimization hotspot of the code being discussed. In this case, we’ll use the concept of nested-parallelism since we need to parallelize more than one nested loop.

According to the nature of the BWT encoding algorithm the following process does not normally incur any data flow dependency or race condition issues since each rotation of the original input string does not depend on the result of string rotation performed during the previous loop iterations. To execute the main loop in parallel we’ll use the same Intel®TBB’s parallel_for template function similar to parallelizing those nested loops that perform the actual string rotation:

tbb::parallel_for(tbb::blocked_range<size_t>(1, length),

[&](const tbb::blocked_range<size_t> &r) {

for (size_t row = r.begin(); row != r.end(); row++)

{

bwt[row].string = (char*)::VirtualAllocEx(hProcHandle, NULL, \

length, MEM_COMMIT, PAGE_READWRITE);

::ZeroMemory((LPVOID)bwt[row].string, length);

tbb::task_group g;

g.run([&] {

tbb::parallel_for(tbb::blocked_range<size_t>(length - row, length),

[&](const tbb::blocked_range<size_t> &r) {

for (size_t index = r.begin(); index != r.end(); index++)

bwt[row].string[index - (length - row)] = string[index];

});

});

g.run([&] {

tbb::parallel_for(tbb::blocked_range<size_t>(0, length - row),

[&](const tbb::blocked_range<size_t> &r) {

for (size_t index = r.begin(); index != r.end(); index++)

bwt[row].string[index + row] = string[index];

});

});

g.wait();

}

});

As we can see from the parallel code above, in this case, to parallelize those nested loops, we basically use a special case of nested parallelism in which those loops are not perfectly nested, which makes it impossible to collapse the execution of those nested loops into a single recursive parallel process. During each iteration of the main loop executed in parallel in its own thread, we’re performing a subsequent call to the same parallel_for function that spawns another team of threads that execute the iterations of the loops nested inside the main loop.

Collapsing Nested Loops

At this point, let’s take a look at the code that implements the BWT decoding algorithm. The optimization hotspot that we’ll discuss about is the parallelization of the two loops that are perfectly nested. In this case, we normally execute the first loop to iterate through the resulting set of strings. During each iteration of the following loop we iterate through the current string, moving each character by a single position right. By performing the insertion, we actually process the two-dimensional data since the resulting set of strings is actually a matrix of characters.

To optimize the performance of those two nested loops, we actually need to collapse the parallel execution of those loops into a single parallel loop the iterations of which are concurrently executed. For that purpose, we’ll use the same parallel_for template function with the object of blocked_range2d class passed as an argument. The using of the following class allows to recursively split the iterations of those nested loops into a single parallel process executed by a two-dimensional grid of threads. The constructor of the blocked_range2d class has six arguments. The first and second arguments are the lower and upper boundaries of the range of values assigned to the loop counter variable during each iteration of the first loop. The third argument normally specifies a grain size. The grain size is the amount of iterations performed by a single thread in a grid. The using of grain size value is quite experimental. In this particular case, we assigned the grain size parameter to the value which is half of the total number of iterations performed by the first loop. It means that the entire amount of iterations will be shared between two concurrent threads. Similarly, the next three arguments of the tbb::blocked_range2d constructor are the range, the values of which will be assigned to the loop counter variable of the second loop as well as the grain size value. In this case, the grain size parameter is set to the number of iterations of the second nested loop, which means that each particular thread in a grid will execute just one iteration of the second loop:

tbb::task_group_context root(tbb::task_group_context::isolated);

tbb::parallel_for(tbb::blocked_range2d<size_t>(0, length, length / 2, 0, length - 1, length),

[&](const tbb::blocked_range2d<size_t> &r) {

for (size_t row = r.rows().begin(); row != r.rows().end(); row++)

for (size_t col = r.cols().begin(); col != r.cols().end(); col++)

{

size_t rev_first = length - col - 2;

size_t rev_second = length - col - 1;

bwt[row].string[rev_second] = bwt[row].string[rev_first];

}

}, root);

Similarly to the code that performs the BWT encoding, the code that performs the actual decoding implements the main loop, during each iteration of which we perform the inverse BWT transformation to obtain the decoded string. In this particular case, the main loop that performs the actual inverse transformation cannot be perfectly parallelized since the parallel execution of each iteration of the following loop incurs the data flow dependency issue. During each iteration of the following loop we’re actually inserting a new column consisting characters of the encoded string before the position of the first column in the resulting set. According to the nature of the inverse BWT algorithm, the new columns must be inserted in a specific order to obtain the decoded string at the end of the code execution. That’s why we simply cannot not parallelize the following loop execution:

for (size_t index = 0; index < length; index++)

{

tbb::task_group_context root(tbb::task_group_context::isolated);

tbb::parallel_for(tbb::blocked_range2d<size_t>(0, length, length / 2, 0, length - 1, length),

[&](const tbb::blocked_range2d<size_t> &r) {

for (size_t row = r.rows().begin(); row != r.rows().end(); row++)

for (size_t col = r.cols().begin(); col != r.cols().end(); col++)

{

size_t rev_first = length - col - 2;

size_t rev_second = length - col - 1;

bwt[row].string[rev_second] = bwt[row].string[rev_first];

}

}, root);

tbb::parallel_for(tbb::blocked_range<size_t>(0, length),

[&](const tbb::blocked_range<size_t> &r) {

for (size_t row = r.begin(); row != r.end(); row++)

bwt[row].string[0] = string[row];

});

tbb::parallel_sort(bwt, bwt + length, [](const BWT& bwt1, const BWT& bwt2)

{ return (strcmp(bwt1.string, bwt2.string) < 0) ? 1 : 0; });

}

Threads Synchronization

Unlike the other parallel libraries and packages such as OpenMP, collapsing nested loops into a single parallel process does not always ensure that there’s a proper synchronization between particular threads in a team that concurrently perform an execution of each iteration of the single collapsed loop. In this case, the threads synchronization mechanism is actually needed to provide the synchronization between threads that execute the iterations of the first loop and the other threads specifically executing the iterations of the second loop nested inside that first loop. This sophisticated synchronization mechanism is called “parallel tasks isolation”. By using those parallel tasks isolation we actually provide a synchronization between each row in the two-dimensional grid of threads. While executing two or more collapsed nested loops that perform multidimensional data processing, Intel TBB synchronization mechanism actually creates a tree of concurrent parallel tasks, the actual synchronization between which is a very complicated process producing the sufficient overhead during the parallel code execution:

To relax the process of threads synchronization, in this particular case, the Intel TBB synchronization mechanism performs a so-called “parallel tasks cancellation”, which means that the cancellation of the “parent” parallel task actually cancels the execution of all “child” tasks in a tree. If the “parent” task is currently cancelled, then it causes the unexpected termination of those child tasks spawned inside the current parent task being cancelled. This, in turn, normally leads to the situation in which tasks run in parallel are not properly executed. According to the latest Intel TBB guidelines, the creation of the isolated task_group_context root protects the second nested loop from downwards propagation of cancellation from the first loop while being executed. To provide each parallel task isolation, we simply need to declare the task_group_context class object and pass the task_group_context::isolated enum value to its constructor. Then, we have to pass the following object as a third parameter of the parallel_for function. This normally would provide the isolation of each concurrent thread executing a specific iteration of the second nested loop:

tbb::task_group_context root(tbb::task_group_context::isolated);

tbb::parallel_for(tbb::blocked_range2d<size_t>(0, length, length / 2, 0, length - 1, length),

[&](const tbb::blocked_range2d<size_t> &r) {

for (size_t row = r.rows().begin(); row != r.rows().end(); row++)

for (size_t col = r.cols().begin(); col != r.cols().end(); col++)

{

size_t rev_first = length - col - 2;

size_t rev_second = length - col - 1;

bwt[row].string[rev_second] = bwt[row].string[rev_first];

}

}, root);

Parallel Lexicographical Sort

After creating the current resulting set of all possible rotations of the original string, we normally perform the permutation of the encoded characters which is typically done by sorting the resulting set of strings in the lexicographical order. As you might already have noticed from the sequential code that implements the encoding algorithm, that we particularly use the C standard library function qsort to sort the resulting set of strings. Normally, there’s the number of functions from different libraries that allow to perform the actual sorting including qsort and std::sort STL library function as well as the Intel®TBB’s parallel_sort template function. Unlike the qsort and std::sort, the following function normally performs parallel merge-sort, which, in turn, allows to drastically increase the performance of the process of sorting. That’s why, to provide a better performance and at the same time exactly conform to the usage of the Intel®TBB library, we’ll use the following function to perform sorting of the current resulting set of strings lexicographically:

tbb::parallel_sort(bwt, bwt + length, [](const BWT& bwt1, const BWT& bwt2)

{ return (strcmp(bwt1.string, bwt2.string) < 0) ? 1 : 0; });

Using OpenMP To Workshare the Process of Encoding/Decoding

In the previous sections of this article, we’ve discussed about using various Intel®TBB library functions to improve the performance of code that implements BWT algorithm. The process of encoding of typically large datasets causes a poor scalability of the parallel code being delivered by using Intel®TBB library. That’s actually why, it’s recommended to split up the entire buffer being encoded into small chunks and workshare the execution of code that processes those small chunks between multiple concurrent threads executed in parallel. To do this, we’ll use the #pragma omp parallel directive construct of the OpenMP library for that purpose.

According to the concept of using the following parallel directive construct, the region of parallel code enclosed by the #pragma omp parallel is executed concurrently by multiple threads in a team. To workshare the entire process of encoding/decoding, we’ll actually use the partitioning algorithm to divide the entire string buffer into series of chunks. As the resulting of using partitioning algorithm we’ll obtain the array of encoded chunks at the end of the following code execution. Before performing the actual encoding we initially have to allocate the array of objects to store the encoded string and its size as follows:

BWT* bwt = (BWT*)::VirtualAllocEx(hProcHandle, \

NULL, sizeof(BWT) * 8, MEM_COMMIT, PAGE_READWRITE);

After that we need to read the contents of the plain-text file into the buffer previously allocated:

load_from_file(argv[3], input);

According to the partitioning algorithm, for each thread we have to obtain the current thread-id and the number of threads that will execute the fragment of parallel code performing the actual encoding/decoding. After that we’ll have to compute the size and either starting and final positions of the current chunk from the entire string buffer. At this point, we’ll also need to compute the alignment of the chunk encoded/decoded by the last thread in a team.

int thread_id = omp_get_thread_num();

int n_threads = omp_get_num_threads();

size_t size = (size_t)ceil(length / (float)n_threads);

size_t start = thread_id * size;

size_t end = (thread_id + 1) * size;

end = ((length % n_threads) > 0 && thread_id == n_threads - 1) ? length : end;

size = ((length % n_threads) > 0 && thread_id == n_threads - 1) ? (end - start) : size;

Then, we’ll have to allocate a string buffer to which the current chunk will be copied from the entire string buffer, as well as the buffer for the encoded chunk:

char* chunk = (char*)::VirtualAllocEx(hProcHandle, NULL, \

(size + 1), MEM_COMMIT, PAGE_READWRITE);

::ZeroMemory((LPVOID)chunk, (size + 1));

::CopyMemory((LPVOID)chunk, (CONST VOID*)&input[start], size);

char* encoded = (char*)::VirtualAllocEx(hProcHandle, NULL, \

(size + 1), MEM_COMMIT, PAGE_READWRITE);

::ZeroMemory((LPVOID)encoded, (size + 1));

To perform the actual encoding of the current chunk of string data, we call the parallel::tbb_bwt_encode_chunk(chunk, (size + 1), encoded) function.

After we’ve obtained the encoded chunk we need to copy its contents into a string buffer of the current object:

bwt[thread_id].string = (char*)::VirtualAllocEx(hProcHandle, NULL, \

(size + 1), MEM_COMMIT, PAGE_READWRITE);

bwt[thread_id].length = size + 1;

::CopyMemory((LPVOID)bwt[thread_id].string, (CONST VOID*)encoded, (size + 1));

As we’ve already discussed, at the end of the following code execution we’ll obtain the array of objects containing each encoded chunk. After that we write the following array into a binary output file:

save_to_file(argv[4], output, filelen);

During the decoding process, we need to allocate buffer for the array of chunk objects and read encoded chunks from the output binary file and for each particular chunk perform the actual decoding:

BWT* bwt = (BWT*)::VirtualAllocEx(hProcHandle, \

NULL, sizeof(BWT) * 8, MEM_COMMIT, PAGE_READWRITE);

bwt_load_from_file(argv[3], bwt);

Normally, the decoding process is slightly different compared to the process during which the input file is encoded. At this point, we normally retrieve each chunk from the array of objects read from the binary output file.

Before decoding each chunk, we allocate buffer to store an encoded string:

char* chunk = (char*)::VirtualAllocEx(hProcHandle, NULL, \

bwt[thread_id].length, MEM_COMMIT, PAGE_READWRITE);

::ZeroMemory((LPVOID)chunk, bwt[thread_id].length);

Then, we copy the encoded string from the string buffer of a specific chunk object to the temporary buffer previously allocated:

::CopyMemory((LPVOID)chunk, (CONST VOID*)bwt[thread_id].string, bwt[thread_id].length);

After that we call parallel::tbb_bwt_decode_chunk(chunk, bwt[thread_id].length, bwt[thread_id].string) function that performs the actual decoding. As the result of performing the actual decoding by using this function, we obtain the decoded string and copy it to the string buffer of the current object. The array of objects, at the end of the decoding process, we’ll contain strings, each one is obtained during the decoding process. We iteratively concatenate each string from the array to obtain the entire decoded string, that further will be written to file in plain-text format:

save_to_file(argv[4], output, filelen);

The complete fragments of code that implement worksharing of encoding/decoding process listed below:

Encoding:

#pragma omp parallel

{

int thread_id = omp_get_thread_num();

int n_threads = omp_get_num_threads();

size_t size = (size_t)ceil(length / (float)n_threads);

size_t start = thread_id * size;

size_t end = (thread_id + 1) * size;

end = ((length % n_threads) > 0 && thread_id == n_threads - 1) ? length : end;

size = ((length % n_threads) > 0 && thread_id == n_threads - 1) ? (end - start) : size;

HANDLE hProcHandle = ::GetCurrentProcess();

char* chunk = (char*)::VirtualAllocEx(hProcHandle, NULL, \

(size + 1), MEM_COMMIT, PAGE_READWRITE);

::ZeroMemory((LPVOID)chunk, (size + 1));

::CopyMemory((LPVOID)chunk, (CONST VOID*)&input[start], size);

char* encoded = (char*)::VirtualAllocEx(hProcHandle, NULL, \

(size + 1), MEM_COMMIT, PAGE_READWRITE);

::ZeroMemory((LPVOID)encoded, (size + 1));

parallel::tbb_bwt_encode_chunk(chunk, (size + 1), encoded);

bwt[thread_id].string = (char*)::VirtualAllocEx(hProcHandle, NULL, \

(size + 1), MEM_COMMIT, PAGE_READWRITE);

bwt[thread_id].length = size + 1;

::CopyMemory((LPVOID)bwt[thread_id].string, (CONST VOID*)encoded, (size + 1));

if (chunk != NULL) ::VirtualFreeEx(hProcHandle, chunk, \

(size + 1), MEM_RELEASE);

if (encoded != NULL) ::VirtualFreeEx(hProcHandle, encoded, \

(size + 1), MEM_RELEASE);

}

Decoding:

#pragma omp parallel

{

int thread_id = omp_get_thread_num();

int n_threads = omp_get_num_threads();

HANDLE hProcHandle = ::GetCurrentProcess();

char* chunk = (char*)::VirtualAllocEx(hProcHandle, NULL, \

bwt[thread_id].length, MEM_COMMIT, PAGE_READWRITE);

::ZeroMemory((LPVOID)chunk, bwt[thread_id].length);

::CopyMemory((LPVOID)chunk, (CONST VOID*)bwt[thread_id].string, bwt[thread_id].length);

parallel::tbb_bwt_decode_chunk(chunk, bwt[thread_id].length, bwt[thread_id].string);

if (chunk != NULL) ::VirtualFreeEx(hProcHandle, chunk, \

bwt[thread_id].length, MEM_RELEASE);

}

for (size_t index = 0; index < 8; index++)

strncat(output, bwt[index].string, bwt[index].length);

if (output != NULL && strlen(output) > 0)

length = strlen(output);

How to Compile and Run This Code

To compile the following code, use the following command line:

Debug x64:

icl /GS /W3 /Zc:wchar_t /ZI /Od /Fd".\vc140.pdb" /Qopenmp /Qstd=c++11 /Zc:forScope /RTC1 /MDd /Fa /EHs /Fo /Qprof-dir .\ /Fp"bwt.pch" bwt.cpp /link /OUT:"bwt.exe" /MANIFEST /NXCOMPAT /PDB:"bwt.pdb" /DYNAMICBASE "kernel32.lib" "user32.lib" "gdi32.lib" "winspool.lib" "comdlg32.lib" "advapi32.lib" "shell32.lib" "ole32.lib" "oleaut32.lib" "uuid.lib" "odbc32.lib" "odbccp32.lib" "tbb.lib" "tbb_debug.lib" /DEBUG /MACHINE:X64 /INCREMENTAL /SUBSYSTEM:CONSOLE

Release x64:

icl /GS /W3 /Gy /Zc:wchar_t /Zi /O2 /Zc:forScope /Oi /MD /Fa /EHs /Fo /Qopenmp /Qprof-dir .\ /Fp"bwt.pch" bwt.cpp /link /OUT:"bwt.exe" /MANIFEST /NXCOMPAT /PDB:"bwt.pdb" /DYNAMICBASE "kernel32.lib" "user32.lib" "gdi32.lib" "winspool.lib" "comdlg32.lib" "advapi32.lib" "shell32.lib" "ole32.lib" "oleaut32.lib" "uuid.lib" "odbc32.lib" "odbccp32.lib" "tbb.lib" "tbb_debug.lib" /MACHINE:X64 /OPT:REF /INCREMENTAL:NO /SUBSYSTEM:CONSOLE

To encode/decode a text file using the following program use the following command line:

Encoding:

bwt.exe -e -s sample.txt sample.dat - for serial code execution

bwt.exe -e -p sample.txt sample.dat - for parallel code execution

Decoding:

bwt.exe -d -s sample.dat output.txt - for serial code execution

bwt.exe -d -p sample.dat output.txt - for parallel code execution

Performance Assessment

In this section, we’ll discuss about the performance assessment of parallel code that performs Burrows-Wheeler Transformation (BWT). At this point, we’ll use Intel VTune Amplifier XE to measure the actual performance speed-up of the following parallel code being optimized by using Intel TBB and OpenMP libraries. Particularly, we’ll analyze the results provided by the following performance measurement tool such as an ideal execution CPU time, a spin overhead time spent for parallel tasks scheduling. Also, we’ll analyze the parallel execution time compared to the potential gain of the OpenMP regions execution.

The very first aspect, which we’ll make an emphasize on, is the CPU ideal execution time, which is as high as 86.3% of the overall CPU execution time. Normally, the using of those multithreaded parallel libraries and packages, especially OpenMP library, provides a capability of the following parallel code can be executed x3 times faster rather than the old sequential code that performs the complex Burrows-Wheeler Transformation (BWT). Delivering the hybrid parallel code that uses the either Intel® TBB or OpenMP library to increase the performance normally provides the lowest overhead time, which is basically spent for threads scheduling and other purposes such as threads synchronization. However, the spin time of the following parallel code execution, during which CPU is busy with executing the other application threads, can be somewhat higher than normal. Sometimes it’s rather preferable to allow some spin time to make sure that the execution of all threads is properly synchronized.

The parallel region time of this code is normally very high about 99.9% compared to the serial execution time, which means that the following code is perfectly parallelized. However, some of the parallel regions have a certain amount of potential gain, especially those regions that have been paralellized using OpenMP library. The overall potential gain for this code is about 20.6%. While running the following code in parallel we typically oversubscribe for the actual number of hardware threads since the OpenMP parallel regions are executed on top of the code parallelized using Intel TBB library:

As we can see from the statistics figure above, the TBB scheduler internals function is the hotspot that creates a significant CPU workload during the code execution. However, the overall CPU workload created by the following parallel code is normal or ideal, rather than poor:

At this point, let's take a closer look to see how CPU's logical cores are simultaneously utilized during the following code execution:

From the diagram above, we can see that only 4 of 8 logical cores are efficiently utilized. This actually means that the following code did not create the sufficient workload on the CPU since the execution of multiple Intel TBB parallel regions at the bottom of OpenMP normally does not entirely workshare parallel tasks between multiple CPU's cores. Typically Intel TBB threads scheduling mechanism does not support static scheduling, instead it handles the parallel tasks scheduling dynamically. In this case the average CPU usage and target CPU utilization are equal and can be estimated as 30 secs of the time elapsed since the beginning of the parallel code execution.

Finally, the overall duration of the following parallel code single instance execution on CPU with 8 logical cores reaches 3.355 secs, which is a pretty good score compared to the execution of the sequential code used to accomplish the same task:

Using the code

The code that implements parallel scalable Burrows-Wheeler Transformation algorithm is listed below:

#include <tbb/tbb.h>

#include <tbb/parallel_for.h>

#include <tbb/parallel_sort.h>

#include <tbb/blocked_range2d.h>

#include <time.h>

#include <omp.h>

typedef struct tagBWT

{

char* string;

size_t length;

}BWT;

int cmp_proc(const void * a, const void * b)

{

return strcmp((*(BWT*)a).string, (*(BWT*)b).string);

}

namespace serial

{

void c_bwt_encode_chunk(char* string, size_t length, char* output)

{

HANDLE hProcHandle = ::GetCurrentProcess();

BWT* bwt = (BWT*)::VirtualAllocEx(hProcHandle, NULL, \

sizeof(BWT) * length, MEM_COMMIT, PAGE_READWRITE);

bwt[0].string = (char*)::VirtualAllocEx(hProcHandle, NULL, \

length, MEM_COMMIT, PAGE_READWRITE);

::ZeroMemory((LPVOID)bwt[0].string, length);

::CopyMemory((LPVOID)bwt[0].string, string, length);

for (size_t row = 1; row < length; row++)

{

bwt[row].string = (char*)::VirtualAllocEx(hProcHandle, NULL, \

length, MEM_COMMIT, PAGE_READWRITE);

::ZeroMemory((LPVOID)bwt[row].string, length);

for (size_t index = length - row; index < length; index++)

bwt[row].string[index - (length - row)] = string[index];

for (size_t index = 0; index < length - row; index++)

bwt[row].string[index + row] = string[index];

}

qsort((void*)bwt, length, sizeof(BWT), cmp_proc);

for (size_t row = 0; row < length; row++)

output[row] = bwt[row].string[length - 1];

output[length] = '\0';

for (size_t index = 0; index < length; index++)

if (bwt[index].string != NULL)

::VirtualFreeEx(hProcHandle, bwt[index].string, length, MEM_RELEASE);

if (bwt != NULL)

::VirtualFreeEx(hProcHandle, bwt, sizeof(BWT) * length, MEM_RELEASE);

}

void c_bwt_decode_chunk(char* string, size_t length, char* output)

{

HANDLE hProcHandle = ::GetCurrentProcess();

BWT* bwt = (BWT*)::VirtualAllocEx(hProcHandle, NULL, \

sizeof(BWT) * length, MEM_COMMIT, PAGE_READWRITE);

for (size_t row = 0; row < length; row++)

{

bwt[row].string = (char*)::VirtualAllocEx(hProcHandle, NULL, \

length, MEM_COMMIT, PAGE_READWRITE);

::ZeroMemory((LPVOID)bwt[row].string, length);

}

for (size_t index = 0; index < length; index++)

{

for (size_t row = 0; row < length; row++)

for (size_t col = 0; col < length - 1; col++)

{

size_t rev_first = length - col - 2;

size_t rev_second = length - col - 1;

bwt[row].string[rev_second] = bwt[row].string[rev_first];

}

for (size_t row = 0; row < length; row++)

bwt[row].string[0] = string[row];

qsort((void*)bwt, length, sizeof(BWT), cmp_proc);

}

for (size_t index = 0; index < length; index++)

if (bwt[index].string[length - 1] == '\0')

::CopyMemory((LPVOID)output, (CONST VOID*)bwt[index].string, length);

output[length] = '\0';

for (size_t index = 0; index < length; index++)

if (bwt[index].string != NULL)

::VirtualFreeEx(hProcHandle, bwt[index].string, length, MEM_RELEASE);

if (bwt != NULL)

::VirtualFreeEx(hProcHandle, bwt, sizeof(BWT) * length, MEM_RELEASE);

}

void c_bwt_encode(char* input, size_t length, BWT*& bwt)

{

int n_threads = omp_get_num_threads();

for (int chunk_id = 0; chunk_id < n_threads; chunk_id++)

{

size_t size = (size_t)ceil(length / (float)n_threads);

size_t start = chunk_id * size;

size_t end = (chunk_id + 1) * size;

end = ((length % n_threads) > 0 && chunk_id == n_threads - 1) ? length : end;

size = ((length % n_threads) > 0 && chunk_id == n_threads - 1) ? (end - start) : size;

HANDLE hProcHandle = ::GetCurrentProcess();

char* chunk = (char*)::VirtualAllocEx(hProcHandle, NULL, \

(size + 1), MEM_COMMIT, PAGE_READWRITE);

::ZeroMemory((LPVOID)chunk, (size + 1));

::CopyMemory((LPVOID)chunk, (CONST VOID*)&input[start], size);

char* encoded = (char*)::VirtualAllocEx(hProcHandle, NULL, \

(size + 1), MEM_COMMIT, PAGE_READWRITE);

::ZeroMemory((LPVOID)encoded, (size + 1));

serial::c_bwt_encode_chunk(chunk, (size + 1), encoded);

bwt[chunk_id].string = (char*)::VirtualAllocEx(hProcHandle, NULL, \

(size + 1), MEM_COMMIT, PAGE_READWRITE);

bwt[chunk_id].length = size + 1;

::CopyMemory((LPVOID)bwt[chunk_id].string, (CONST VOID*)encoded, (size + 1));

if (chunk != NULL) ::VirtualFreeEx(hProcHandle, chunk, \

(size + 1), MEM_RELEASE);

if (encoded != NULL) ::VirtualFreeEx(hProcHandle, encoded, \

(size + 1), MEM_RELEASE);

}

}

void c_bwt_decode(const BWT* bwt, char* output, size_t& length)

{

int n_threads = omp_get_num_threads();

for (int chunk_id = 0; chunk_id < n_threads; chunk_id++)

{

HANDLE hProcHandle = ::GetCurrentProcess();

char* chunk = (char*)::VirtualAllocEx(hProcHandle, NULL, \

bwt[chunk_id].length, MEM_COMMIT, PAGE_READWRITE);

::ZeroMemory((LPVOID)chunk, bwt[chunk_id].length);

::CopyMemory((LPVOID)chunk, (CONST VOID*)bwt[chunk_id].string, bwt[chunk_id].length);

serial::c_bwt_decode_chunk(chunk, bwt[chunk_id].length, bwt[chunk_id].string);

if (chunk != NULL) ::VirtualFreeEx(hProcHandle, chunk, \

bwt[chunk_id].length, MEM_RELEASE);

}

for (size_t index = 0; index < n_threads; index++)

strncat(output, bwt[index].string, bwt[index].length);

if (output != NULL && strlen(output) > 0)

length = strlen(output);

}

}

namespace parallel

{

void tbb_bwt_encode_chunk(char* string, size_t length, char* output)

{

HANDLE hProcHandle = ::GetCurrentProcess();

BWT* bwt = (BWT*)::VirtualAllocEx(hProcHandle, NULL, \

sizeof(BWT) * length, MEM_COMMIT, PAGE_READWRITE);

bwt[0].string = (char*)::VirtualAllocEx(hProcHandle, NULL, \

length, MEM_COMMIT, PAGE_READWRITE);

::CopyMemory((LPVOID)bwt[0].string, (CONST VOID*)string, length);

tbb::parallel_for(tbb::blocked_range<size_t>(1, length),

[&](const tbb::blocked_range<size_t> &r) {

for (size_t row = r.begin(); row != r.end(); row++)

{

bwt[row].string = (char*)::VirtualAllocEx(hProcHandle, NULL, \

length, MEM_COMMIT, PAGE_READWRITE);

::ZeroMemory((LPVOID)bwt[row].string, length);

tbb::task_group g;

g.run([&] {

tbb::parallel_for(tbb::blocked_range<size_t>(length - row, length),

[&](const tbb::blocked_range<size_t> &r) {

for (size_t index = r.begin(); index != r.end(); index++)

bwt[row].string[index - (length - row)] = string[index];

});

});

g.run([&] {

tbb::parallel_for(tbb::blocked_range<size_t>(0, length - row),

[&](const tbb::blocked_range<size_t> &r) {

for (size_t index = r.begin(); index != r.end(); index++)

bwt[row].string[index + row] = string[index];

});

});

g.wait();

}

});

tbb::parallel_sort(bwt, bwt + length, [](const BWT& bwt1, const BWT& bwt2)

{ return (strcmp(bwt1.string, bwt2.string) < 0) ? 1 : 0; });

tbb::parallel_for(tbb::blocked_range<size_t>(0, length),

[&](const tbb::blocked_range<size_t> &r) {

for (size_t row = r.begin(); row != r.end(); row++)

output[row] = bwt[row].string[length - 1];

});

tbb::parallel_for(static_cast<size_t>(0), length, [&](size_t row) {

if (bwt[row].string != NULL) {

::VirtualFreeEx(hProcHandle, bwt[row].string, \

length, MEM_RELEASE);

bwt[row].string = NULL;

}});

if (bwt != NULL) ::VirtualFreeEx(hProcHandle, bwt, \

sizeof(BWT) * length, MEM_RELEASE);

}

void tbb_bwt_decode_chunk(char* string, size_t length, char* output)

{

HANDLE hProcHandle = ::GetCurrentProcess();

BWT* bwt = (BWT*)::VirtualAllocEx(hProcHandle, NULL, \

sizeof(BWT) * length, MEM_COMMIT, PAGE_READWRITE);

tbb::parallel_for(static_cast<size_t>(0), length, [&](size_t row) {

bwt[row].string = (char*)::VirtualAllocEx(hProcHandle, NULL, \

length, MEM_COMMIT, PAGE_READWRITE);

::ZeroMemory((LPVOID)bwt[row].string, length);

});

for (size_t index = 0; index < length; index++)

{

tbb::task_group_context root(tbb::task_group_context::isolated);

tbb::parallel_for(tbb::blocked_range2d<size_t>(0, length, length / 2, 0, length - 1, length),

[&](const tbb::blocked_range2d<size_t> &r) {

for (size_t row = r.rows().begin(); row != r.rows().end(); row++)

for (size_t col = r.cols().begin(); col != r.cols().end(); col++)

{

size_t rev_first = length - col - 2;

size_t rev_second = length - col - 1;

bwt[row].string[rev_second] = bwt[row].string[rev_first];

}

}, root);

tbb::parallel_for(tbb::blocked_range<size_t>(0, length),

[&](const tbb::blocked_range<size_t> &r) {

for (size_t row = r.begin(); row != r.end(); row++)

bwt[row].string[0] = string[row];

});

tbb::parallel_sort(bwt, bwt + length, [](const BWT& bwt1, const BWT& bwt2)

{ return (strcmp(bwt1.string, bwt2.string) < 0) ? 1 : 0; });

}

tbb::parallel_for(tbb::blocked_range<size_t>(0, length),

[&](const tbb::blocked_range<size_t> &r) {

for (size_t index = r.begin(); index != r.end(); index++)

if (bwt[index].string[length - 1] == '\0')

::CopyMemory((LPVOID)output, (CONST VOID*)bwt[index].string, length);

});

output[length] = '\0';

tbb::parallel_for(static_cast<size_t>(0), length, [&](size_t row) {

if (bwt[row].string != NULL) {

::VirtualFreeEx(hProcHandle, bwt[row].string, \

length, MEM_RELEASE);

bwt[row].string = NULL;

}

});

if (bwt != NULL) ::VirtualFreeEx(hProcHandle, bwt, \

sizeof(BWT) * length, MEM_RELEASE);

}

void omp_bwt_encode(char* input, size_t length, BWT*& bwt)

{

#pragma omp parallel

{

int thread_id = omp_get_thread_num();

int n_threads = omp_get_num_threads();

size_t size = (size_t)ceil(length / (float)n_threads);

size_t start = thread_id * size;

size_t end = (thread_id + 1) * size;

end = ((length % n_threads) > 0 && thread_id == n_threads - 1) ? length : end;

size = ((length % n_threads) > 0 && thread_id == n_threads - 1) ? (end - start) : size;

HANDLE hProcHandle = ::GetCurrentProcess();

char* chunk = (char*)::VirtualAllocEx(hProcHandle, NULL, \

(size + 1), MEM_COMMIT, PAGE_READWRITE);

::ZeroMemory((LPVOID)chunk, (size + 1));

::CopyMemory((LPVOID)chunk, (CONST VOID*)&input[start], size);

char* encoded = (char*)::VirtualAllocEx(hProcHandle, NULL, \

(size + 1), MEM_COMMIT, PAGE_READWRITE);

::ZeroMemory((LPVOID)encoded, (size + 1));

parallel::tbb_bwt_encode_chunk(chunk, (size + 1), encoded);

bwt[thread_id].string = (char*)::VirtualAllocEx(hProcHandle, NULL, \

(size + 1), MEM_COMMIT, PAGE_READWRITE);

bwt[thread_id].length = size + 1;

::CopyMemory((LPVOID)bwt[thread_id].string, (CONST VOID*)encoded, (size + 1));

if (chunk != NULL) ::VirtualFreeEx(hProcHandle, chunk, \

(size + 1), MEM_RELEASE);

if (encoded != NULL) ::VirtualFreeEx(hProcHandle, encoded, \

(size + 1), MEM_RELEASE);

}

}

void omp_bwt_decode(const BWT* bwt, char* output, size_t& length)

{

#pragma omp parallel

{

int thread_id = omp_get_thread_num();

int n_threads = omp_get_num_threads();

HANDLE hProcHandle = ::GetCurrentProcess();

char* chunk = (char*)::VirtualAllocEx(hProcHandle, NULL, \

bwt[thread_id].length, MEM_COMMIT, PAGE_READWRITE);

::ZeroMemory((LPVOID)chunk, bwt[thread_id].length);

::CopyMemory((LPVOID)chunk, (CONST VOID*)bwt[thread_id].string, bwt[thread_id].length);

parallel::tbb_bwt_decode_chunk(chunk, bwt[thread_id].length, bwt[thread_id].string);

if (chunk != NULL) ::VirtualFreeEx(hProcHandle, chunk, \

bwt[thread_id].length, MEM_RELEASE);

}

for (size_t index = 0; index < 8; index++)

strncat(output, bwt[index].string, bwt[index].length);

if (output != NULL && strlen(output) > 0)

length = strlen(output);

}

}

DWORD g_BytesTransferred = 0;

VOID CALLBACK FileIOCompletionRoutine(

__in DWORD dwErrorCode,

__in DWORD dwNumberOfBytesTransfered,

__in LPOVERLAPPED lpOverlapped

);

VOID CALLBACK FileIOCompletionRoutine(

__in DWORD dwErrorCode,

__in DWORD dwNumberOfBytesTransfered,

__in LPOVERLAPPED lpOverlapped)

{

g_BytesTransferred = dwNumberOfBytesTransfered;

}

size_t load_from_file(const char* filename, void*& buffer)

{

LARGE_INTEGER lpFileSize = { 0 };

HANDLE hProcHandle = ::GetCurrentProcess();

HANDLE hFile = ::CreateFile(filename, GENERIC_READ, FILE_SHARE_READ, NULL, \

OPEN_EXISTING, FILE_ATTRIBUTE_NORMAL | FILE_FLAG_OVERLAPPED, NULL);

if (hFile == INVALID_HANDLE_VALUE)

{

fprintf(stdout, "Unable to open file %s...\n", filename);

return 0;

}

::GetFileSizeEx(hFile, &lpFileSize);

buffer = ::VirtualAllocEx(hProcHandle, \

NULL, lpFileSize.LowPart, MEM_COMMIT, PAGE_READWRITE);

OVERLAPPED overlapped = { 0 };

if (!::ReadFileEx(hFile, buffer, \

lpFileSize.LowPart, &overlapped, FileIOCompletionRoutine))

{

fprintf(stdout, "Unable to read file %s...\n", filename);

return 0;

}

::CloseHandle(hFile);

return lpFileSize.LowPart;

}

size_t save_to_file(const char* filename, LPVOID buffer, size_t length)

{

DWORD dwBytesWritten = 0;

HANDLE hProcHandle = ::GetCurrentProcess();

HANDLE hFile = ::CreateFile(filename, GENERIC_WRITE, NULL, NULL, \

CREATE_ALWAYS, FILE_ATTRIBUTE_NORMAL, NULL);

if (hFile == INVALID_HANDLE_VALUE)

{

fprintf(stdout, "Unable to create file %s...\n", filename);

return 0;

}

OVERLAPPED overlapped = { 0 };

if (!::WriteFileEx(hFile, buffer, \

length, &overlapped, FileIOCompletionRoutine))

{

fprintf(stdout, "Unable to write to file %s...\n", filename);

return 0;

}

if (g_BytesTransferred > 0 && g_BytesTransferred <= length - 1)

length = g_BytesTransferred;

if (buffer != NULL)

::VirtualFreeEx(hProcHandle, buffer, length, MEM_RELEASE);

::CloseHandle(hFile);

return length;

}

size_t bwt_load_from_file(const char* filename, BWT*& bwt)

{

size_t length = 0L;

LARGE_INTEGER lpFileSize = { 0 };

HANDLE hProcHandle = ::GetCurrentProcess();

HANDLE hFile = ::CreateFile(filename, GENERIC_READ, FILE_SHARE_READ, NULL, \

OPEN_EXISTING, FILE_ATTRIBUTE_NORMAL, NULL);

if (hFile == INVALID_HANDLE_VALUE)

{

fprintf(stdout, "Unable to open file %s...\n", filename);

return 0;

}

for (size_t index = 0; index < 8; index++)

{

DWORD dwBytesRead = 0L;

OVERLAPPED overlapped = { 0 };

if (!::ReadFile(hFile, (void*)&bwt[index].length, \

sizeof(size_t), &dwBytesRead, NULL))

{

fprintf(stdout, "Unable to read file %s...\n", filename);

return 0;

}

length += bwt[index].length;

bwt[index].string = (char*)::VirtualAllocEx(hProcHandle, \

NULL, bwt[index].length, MEM_COMMIT, PAGE_READWRITE);

if (!::ReadFile(hFile, (void*)bwt[index].string, \

bwt[index].length, &dwBytesRead, NULL))

{

fprintf(stdout, "Unable to read file %s...\n", filename);

return 0;

}

}

::CloseHandle(hFile);

return length;

}

size_t bwt_save_to_file(const char* filename, const BWT* bwt, size_t length)

{

HANDLE hProcHandle = ::GetCurrentProcess();

HANDLE hFile = ::CreateFile(filename, GENERIC_WRITE, NULL, NULL, \

CREATE_ALWAYS, FILE_ATTRIBUTE_NORMAL, NULL);

if (hFile == INVALID_HANDLE_VALUE)

{

fprintf(stdout, "Unable to create file %s...\n", filename);

return 0;

}

for (size_t index = 0; index < length; index++)

{

DWORD dwBytesWritten = 0L;

OVERLAPPED overlapped = { 0 };

if (!::WriteFile(hFile, (void*)&bwt[index].length, \

sizeof(size_t), &dwBytesWritten, NULL))

{

fprintf(stdout, "Unable to write to file %s...\n", filename);

return 0;

}

if (!::WriteFile(hFile, (void*)bwt[index].string, \

bwt[index].length, &dwBytesWritten, NULL))

{

fprintf(stdout, "Unable to write to file %s...\n", filename);

return 0;

}

}

::CloseHandle(hFile);

return length;

}

int main(int argc, char* argv[])

{

clock_t time_start, time_end;

char logo[1024] = "Burrows-Wheeler Parallel Tranformation using Intel(R) TBB for C++ "\

"(BWTP v.1.00.16384) by Arthur V. Ratz\n""\n Usage: bwt [options] [mode] file1 file2\n"\

"\nOptions:\n\n -e\tencode *.txt file\n -d\tdecode *.txt file\n"

"\nModes:\n\n -s\tserial execution\n -p\tparallel execution";

if (argc < 5)

{

fprintf(stdout, "%s\n", logo);

return 0;

}

size_t filelen = 0;

void* input = NULL;

HANDLE hProcHandle = ::GetCurrentProcess();

if (!strcmp(argv[1], "-e"))

{

if ((filelen = load_from_file(argv[3], input)) > 0)

{

fprintf(stdout, "Encoding data...\n");

char* string = reinterpret_cast<char*>(input);

BWT* bwt = (BWT*)::VirtualAllocEx(hProcHandle, \

NULL, sizeof(BWT) * 8, MEM_COMMIT, PAGE_READWRITE);

time_start = clock();

if (!strcmp(argv[2], "-p"))

parallel::omp_bwt_encode(string, filelen, bwt);

else if (!strcmp(argv[2], "-s"))

serial::c_bwt_encode(string, filelen, bwt);

time_end = clock();

if (filelen > 0)

bwt_save_to_file(argv[4], bwt, 8);

for (int index = 0; index < 8; index++)

if (bwt[index].string != NULL) {

::VirtualFreeEx(hProcHandle, bwt[index].string, \

bwt[index].length, MEM_RELEASE);

bwt[index].string = NULL;

}

if (bwt != NULL) ::VirtualFreeEx(hProcHandle, bwt, \

sizeof(BWT) * 8, MEM_RELEASE);

if (input != NULL) ::VirtualFreeEx(hProcHandle, input, \

filelen, MEM_RELEASE);

}

fprintf(stdout, "Execution Time: %6.4f secs\n", (time_end - time_start) / (float)CLOCKS_PER_SEC);

}

else if (!strcmp(argv[1], "-d"))

{

BWT* bwt = (BWT*)::VirtualAllocEx(hProcHandle, \

NULL, sizeof(BWT) * 8, MEM_COMMIT, PAGE_READWRITE);

if ((filelen = bwt_load_from_file(argv[3], bwt)) > 0)

{

fprintf(stdout, "Decoding data...\n");

char* output = (char*)::VirtualAllocEx(hProcHandle, \

NULL, filelen, MEM_COMMIT, PAGE_READWRITE);

time_start = clock();

if (!strcmp(argv[2], "-p"))

parallel::omp_bwt_decode(bwt, output, filelen);

else if (!strcmp(argv[2], "-s"))

serial::c_bwt_decode(bwt, output, filelen);

time_end = clock();

if (filelen > 0)

save_to_file(argv[4], output, filelen);

if (output != NULL) ::VirtualFreeEx(hProcHandle, output, \

filelen, MEM_RELEASE);

}

fprintf(stdout, "Execution Time: %6.4f secs\n", (time_end - time_start) / (float)CLOCKS_PER_SEC);

for (int index = 0; index < 8; index++)

if (bwt[index].string != NULL) {

::VirtualFreeEx(hProcHandle, bwt[index].string, \

bwt[index].length, MEM_RELEASE);

bwt[index].string = NULL;

}

if (bwt != NULL) ::VirtualFreeEx(hProcHandle, bwt, \

sizeof(BWT) * 8, MEM_RELEASE);

}

return 0;

}

Points of Interest

In this article, we’ve discussed how to improve the performance of the code that performs the sequential encoding/decoding of files containing the data in the plain-text (*.txt) format. we’ve taken a closer look at the algorithm itself and its computational complexity.

Particularly, we’ve discussed about the fragments of sequential code in C++ that perform the actual encoding/decoding and provided the detailed analysis of the following code to determine the basic performance optimization hotspots. During the discussion, we’ve explained how to use the specific OpenMP’s parallel directive constructs and Intel® TBB library function templates to transform the sequential code into parallel, and, thus, to drastically improve the performance of the encoding/decoding process.

Besides the aspects of using Intel® TBB’s template functions to implement parallel loops, in this article, we’ve also found out how to use specific OpenMP library’s parallel directive constructs to perfectly workshare the entire process of encoding/decoding of the large datasets among all CPU’s threads based on the partitioning algorithm.

Moreover, just for the experiment, to provide a better performance and scalability of the parallel code introduced in this article it would be "nice" :) if someone of the parallel programming enthusiasts would offload the execution of the parallel regions of the following code to the Intel® Xeon™ Phi co-processor, the "many-integrated cores" architecture of which provides the outstanding performance of the typically slow sequential code performing the complex data transformation. Optionally, the same parallel programming concept can be used to modernize the following parallel code to be executed using Nvidia CUDA GPUs.

History

- May 1, 2017 - The first revision of this article has been published

- May 2, 2017 - The second revision of this article has been published