This article describes the design, implementation, and usage of invoking Windows Workflow Foundation (WF4) from an Azure Function.

Contents

- Push Model

- Message Mediator (Pipeline) using VETER pattern

- Message encapsulation into the declarative stateless workflow mediator

- Serverless requirements

- Business Integrator

- Support for metadata driven architecture

- Contract and Model First using untyped messaging

- Agile deployment

- Azure Platform

- WF4 Technology

- WCF Technology

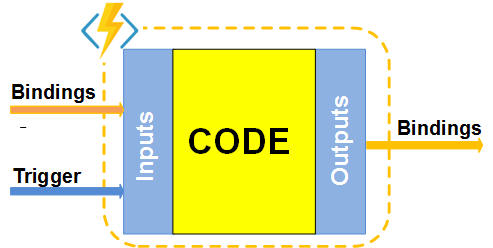

Microsoft Azure Functions is a solution for running small pieces of code (known as functions) on a serverless cloud platform, i.e. function as a service (FaaS). Basically, the cloud provider gives you a "function boilerplate" with Inputs for processing your business logic and Outputs for delivering the results. All Inputs and Outputs are bound declaratively, based on the requirements. Functions in the Serverless architecture model are processed in a Push manner: event-driven Triggers and/or a REST API Gateway push (forward) messages to a specific function. The Triggers and the API Gateway are parts of the Serverless Architecture and are fully transparent to the Functions; in other words, the user focuses on the body of the Function and its Input/Output bindings.

The Serverless Architecture is based on the Push Model, where the event is pushed to the Function. This model scales very well and achieves high throughput for event streams. Note that the consumer of the serverless FaaS platform doesn't need to handle scalability, restarting, recycling, triggering, hosting, etc. of the application. All of this is fully transparent and handled by the cloud provider, such as Microsoft Azure.

That's a great feature and the next evolution of cloud computing.

So, the following screen snippet shows the Azure Function integration boilerplate:

Integrating a Function into the Serverless model is based on selecting the trigger type (the place where the event happens) and the Input/Output bindings (the way the Function gets its In/Out data, if required). Each function has its own definition stored in a JSON-formatted text file, function.json. More details about the Azure Functions can be found here.

As you can see, this boilerplate (signature) is very similar to a method invoked by a runtime processor, where the data/references are passed into the method (function) for mediation and returned to the caller via the outputs (or the return value). Note that the Function is a stateless message mediation primitive with an agile capability; in other words, the function says: "Give me inputs and you will get outputs".

From the point of view of the Function code, the code executes in a "serverless host environment", which means it is loaded (hosted) and executed somewhere on the cloud PaaS and its processing is agnostic to the environment. The Function body can be written imperatively, in code, or declaratively, in XAML.

So, based on the above description, the following screen shows a Function with XAML:

The above picture shows that the Function mediator is encapsulated in a XAML definition, i.e. XML-formatted text. This resource can be stored locally in the function folder or centralized in the Repository Storage, for example, a Storage Container. This concept has been described in my article Azure Virtual Bridge and successfully validated in production. The implementation of the XAML invoker is incorporated into the WCF proxy stack as a custom Workflow Channel. This design idea and concept was created a long time ago and it shows the correct road map (see my older articles in References).

The following screen snippet shows a concept of the API Gateway in the Serverless architecture, where untyped messages are routed into the custom Workflow Channel for projecting a XAML definition:

In the above example, the WCF Routing Service is hosted in the Web Role, where the workflow XAML projector is hosted as well. In other words, the workflow projector (stateless XAML mediator) can run anywhere the boilerplate is hosted.

Deployment of the above "API REST/Soap Gateway" is very simple. It consists only of the web.config file and one assembly, WorkflowChannel.dll. Note that this deployment package can be deployed on Azure or on an on-premises IIS server. In this scenario, the Function calls the entry point the same way as any other REST/Soap service.

In this article, I would like to show you another way of invoking a WorkflowChannel: "hosting" a XAML mediator in the Function itself, in other words, calling a WCF client proxy with the custom channel within the Function body.

So, the following screen snippet shows a Workflow Channel in the Azure Function boilerplate and basically, it is hosted in the Serverless framework which is fully transparent to the XAML:

Using a custom WCF Workflow Channel in the Function code allows us to keep the XAML definition in the Repository Storage. The Function inputs are passed to the WCF client proxy synchronously and the result is returned to the Outputs. This is a standard message exchange pattern for the WCF stack, the same as calling a REST service, etc. The plumbing of the WCF Workflow Channel is done programmatically and is fully reusable for any kind of XAML mediator.

From the Workflow Foundation point of view, the XAML mediators are stateless, non-persisted workflows running in memory. State can flow in the message by value or by reference. The XAML mediators support all principles of the Serverless architecture. I recommend reading my older article WF4 Custom activities for message mediation, where I describe in detail message mediators declared in XAML.

The message flows through the Function via a message pipeline from the Inputs to the Outputs. This message pipeline can be formalized into a well-known pattern, VETER.

The following screen snippet shows a logical pattern for stateless Function message mediation:

The incoming message from the Trigger first goes through Validation, then it can be Enriched using other inputs, followed by Transformation, where the payload can be transformed using message primitives and activities. After that, the message can be Enriched again (e.g., by adding some headers) and finally it is Routed to the Outputs.
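The VETER stages can be pictured as a chain of message-in, message-out steps. The following is only a minimal C# sketch of that idea, not the XAML mediator used in this article: the `Envelope` type, the stage bodies, and the header names are assumptions made up for illustration.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical message type for illustration; the article's mediators work on WCF Message objects.
public sealed class Envelope
{
    public Dictionary<string, string> Headers { get; } = new Dictionary<string, string>();
    public string Body { get; set; }
}

public static class VeterSketch
{
    // Runs the stages in order; each stage receives the output of the previous one.
    public static Envelope Run(Envelope input, params Func<Envelope, Envelope>[] stages)
    {
        var current = input;
        foreach (var stage in stages)
        {
            current = stage(current);
        }
        return current;
    }

    // V: reject messages that cannot be mediated.
    public static Envelope Validate(Envelope m)
    {
        if (m == null || string.IsNullOrWhiteSpace(m.Body))
        {
            throw new ArgumentException("The message has no payload.");
        }
        return m;
    }

    // E: add context before the transformation (assumed header name and value).
    public static Envelope EnrichInput(Envelope m)
    {
        m.Headers["source"] = "iothub";
        return m;
    }

    // T: transform the payload; here just a trivial normalization.
    public static Envelope Transform(Envelope m)
    {
        m.Body = m.Body.Trim().ToUpperInvariant();
        return m;
    }

    // E: add headers to the transformed message.
    public static Envelope EnrichOutput(Envelope m)
    {
        m.Headers["content-type"] = "text/plain";
        return m;
    }

    // R: choose the output; here the decision is only recorded as a header (assumed name).
    public static Envelope Route(Envelope m)
    {
        m.Headers["route"] = "outeventhub";
        return m;
    }
}
```

A call such as `VeterSketch.Run(new Envelope { Body = " hello " }, VeterSketch.Validate, VeterSketch.EnrichInput, VeterSketch.Transform, VeterSketch.EnrichOutput, VeterSketch.Route)` passes the message through all five stages. Because every stage has the same shape, a stage can be added, removed, or replaced without touching the others, which is the property the declarative XAML version relies on as well.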

Having the above VETER pattern defined in XAML and "hosted" by the Azure Function boilerplate enables us to encapsulate the business parts into resources that can be handled by business people instead of developers. The business model can be decomposed into small business functions (mediators): resources (XAML, XSLT, JSON, etc.) that are logically centralized in the Repository, physically decentralized into the Functions, and projected at runtime in the Serverless Framework. At design time, we can create those resources (metadata) with suitable tools, based on other metadata deployed for runtime projecting. So, this concept fully supports a metadata-driven architecture.

To better understand the above model, the following screen snippet shows the VETER Message Mediator "hosted" by an Azure Function:

As you can see, there is a Design Tool for creating a VETER (XAML) definition in the Blob Storage Repository and a WCF Workflow Channel for pulling this resource for runtime projecting within the Azure Function. Note that the VETER definition is a Dynamic Activity type, which can be compiled at design time into a custom activity type, with its assembly stored in the assembly container storage. In this scenario, the Repository holds only runtime resources such as XSLT, if required.

The following screen snippet shows an example of the Azure Function (AFN) Workflow in the IoT data stream pipeline:

The Function integration with IoT Hub Stream is described by metadata stored in the function.json file, see the following code snippet:

{

"scriptFile": "..\\bin\\Microservices.dll",

"entryPoint": "RKiss.AFNLib.Microservices.EventToEvent",

"bindings": [

{

"type": "eventHubTrigger",

"name": "ed",

"direction": "in",

"path": "ba2017-iotdemo",

"connection": "iothub",

"consumerGroup": "$Default"

},

{

"type": "eventHub",

"name": "outputEventHubMessage",

"path": "outeventhub",

"connection": "bridge",

"direction": "out"

}

],

"disabled": false

}

As you can see, the above bindings have two definitions, one for the input and one for the output. The entry point is declared within the assembly Microservices.dll. This means that no code has to be compiled at runtime during the function cold start (except the XAML mediator).

Note that the above function metadata is the same for any kind of event mediation, because the business job has been delegated to the XAML definition, where the event can be mediated declaratively using pre-built primitives, XSLT, etc.

That's great!

OK, let's describe the concept and design. I am assuming you have some knowledge of the Azure Functions, WCF4 and WF4 technologies.

The concept of the Azure Workflow Channel is based on the already existing WCF Custom Workflow Channel described in detail here. Note that the Serverless Framework for Azure Functions doesn't allow using a custom web.config, where the client proxy could be configured. In other words, we have to create the WCF client proxy with a custom binding for the WorkflowChannel programmatically.
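Configuring a client in code instead of web.config follows the usual WCF pattern: build a `CustomBinding` from binding elements and hand it to a `ChannelFactory`. The sketch below shows only that general shape using standard WCF classes. `HttpTransportBindingElement` is a stand-in (an assumption) for the article's Workflow Channel transport element, whose API is not shown here, and the `ProxySketch` class and its `address` parameter are hypothetical.

```csharp
using System;
using System.ServiceModel;
using System.ServiceModel.Channels;
using System.Text;

public static class ProxySketch
{
    // Builds the binding in code; this takes the place of a <customBinding> section in web.config.
    public static CustomBinding CreateBinding()
    {
        return new CustomBinding(
            // Untyped messages: no SOAP envelope and no addressing headers.
            new TextMessageEncodingBindingElement(MessageVersion.None, Encoding.UTF8),
            // Stand-in transport (assumption); a custom transport element would be placed here instead.
            new HttpTransportBindingElement());
    }

    // Creates a request/reply channel factory for untyped messages.
    public static ChannelFactory<IRequestChannel> CreateFactory(string address)
    {
        return new ChannelFactory<IRequestChannel>(CreateBinding(), new EndpointAddress(address));
    }
}
```

A caller would then create a channel with `CreateFactory(address).CreateChannel()`, open it, and exchange untyped `Message` objects through `IRequestChannel.Request`. With the stand-in HTTP transport, the address has to be an http URL.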

The following code snippet shows an example of a C# HTTP Trigger Function that invokes a XAML workflow:

As you can see, the implementation of the above Function is very straightforward. Basically, all the horsepower is hidden in the Mediator method, which is located in the external assembly Microservices.dll stored in the custom bin folder under wwwroot. As you will see later on, the Mediator is actually an HTTP wrapper around the WCF Custom Channel proxy, adapted for Azure Function usage.

The following screen snippets show the rest of the files for this function such as function.json and project.json (optional):

As you can see, the bindings and dependencies are standardly declared, so there is nothing special related to the custom method Mediator.

The following picture shows the position of the Mediator in the C# Function trigger by Http. The business logic is declared in the workflow definition:

Of course, the Mediator can be used as a precompiled Http Trigger Function, see the following declaration:

That's great! Only one file, function.json, is necessary for a message mediator. Note that the present release of the Azure Function Apps allows using NuGet packages only for C# Functions, therefore there is no project.json file.

To minimize coding in the Azure Function, the following class has been created:

All methods represent precompiled C# Azure Functions, see their signatures:

namespace RKiss.AFNLib

{

public class Microservices

{

public static async Task<HttpResponseMessage>

Mediator(HttpRequestMessage req, TraceWriter log, ExecutionContext executionContext)

public static async Task<BrokeredMessage>

BMsgToBMsg(BrokeredMessage bmsg, TraceWriter log, ExecutionContext executionContext)

public static async Task<EventData>

EventToEvent(EventData ed, TraceWriter log, ExecutionContext executionContext)

public static async Task<EventData>

EventToStream(EventData ed, TraceWriter log,

ExecutionContext executionContext, Stream outputMediator)

public static async Task<EventData>

EventToAppendBlob(EventData ed, TraceWriter log,

ExecutionContext executionContext, ICloudBlob appendBlob)

}

}

The main method is the Mediator for the HTTP Trigger, as shown in the following screen snippet:

The other methods (precompiled Functions) are basically wrappers for specific message type such as BrokeredMessage, EventData, etc.

Data Stream Pipeline

This example shows the integration of a precompiled Function between Event Hubs, with a blob output for archiving events in an AppendBlob:

Each event from the IoT Hub is forwarded to the Workflow Channel for mediation. The result of the mediation (VETER pattern) is returned for the next Event Hub and the Blob storage. Basically, there are two declarations for this plumbing: the integration in function.json and the workflow definition (XAML). Once the integration is done (as a generic template), we can concentrate on the event mediation process.

For the data stream pipeline, the class Microservices has a built-in EventData wrapper around the Mediator method, see the EventToEvent method. This method allows us to integrate a XAML mediator with the Event Hubs. Additional output can be produced in the C# Function as post-mediator code for a specific output. The class Microservices includes two such implementations, see the methods EventToStream and EventToAppendBlob.

Note that Azure Functions currently do not support the appendBlob type, so my workaround is to bind to the ICloudBlob interface (direction inout). The helper method DataToAppendBlob is responsible for handling this interface as a CloudAppendBlob object.

C# Function for Data Stream Pipeline

This example shows a generic C# boilerplate around the EventToEvent method:

function.json

{

"bindings": [

{

"type": "eventHubTrigger",

"name": "ed",

"direction": "in",

"path": "ba2017-iotdemo",

"connection": "iothub",

"consumerGroup": "$Default"

},

{

"type": "blob",

"name": "outputMediator",

"path": "archive/demo/transactions/{rand-guid}",

"connection": "mySTORAGE",

"direction": "out"

},

{

"type": "eventHub",

"name": "$return",

"path": "eventhubdemo",

"connection": "ehdemo",

"direction": "out"

}

],

"disabled": false

}

run.csx

#r "Microsoft.ServiceBus"

#r "..\\bin\\Microservices.dll"

using System;

using Microsoft.ServiceBus.Messaging;

using RKiss.AFNLib;

public static async Task<EventData> Run(EventData ed, TraceWriter log,

ExecutionContext executionContext, Stream outputMediator)

{

log.Info($"[{executionContext.InvocationId}]EventHub trigger function EventToBlob: {ed.SequenceNumber}");

var response = await RKiss.AFNLib.Microservices.EventToEvent

(ed, log, executionContext);

var responseClone = response.Clone();

var byteArray = response.GetBytes();

await outputMediator.WriteAsync(byteArray, 0, byteArray.Length);

return responseClone;

}

As you can see, the above C# function body is very straightforward. Based on our needs, we can incorporate a light pre-mediator and/or post-mediator. For example, if we need to invoke a specific XAML VETER mediator based on the input event, then the pre-mediator is the correct place to do it, by overwriting the property ed.Properties["afn-mediator-name"] with the name of the workflow definition that should handle this message mediation.
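Such a pre-mediator can be as small as one method that inspects the event properties and writes the chosen workflow name back. The following is a minimal sketch under stated assumptions: the `deviceType` property and the selection rule are hypothetical, and the two workflow names are simply the example names used elsewhere in this article.

```csharp
using System;
using System.Collections.Generic;

public static class PreMediatorSketch
{
    // Property name read by the mediator, as described in the article.
    public const string MediatorNameKey = "afn-mediator-name";

    // Chooses a workflow definition for the event and stores its name in the event properties.
    // "deviceType" is a hypothetical property used only to illustrate the selection.
    public static void SelectMediator(IDictionary<string, object> properties)
    {
        object deviceType;
        properties.TryGetValue("deviceType", out deviceType);

        var name = string.Equals(deviceType as string, "test", StringComparison.OrdinalIgnoreCase)
            ? "demo/test/2000"
            : "template/loopback/1000";

        // Overwrite any existing value so the selected workflow handles this event.
        properties[MediatorNameKey] = name;
    }
}
```

In the run.csx above, this would be called as `PreMediatorSketch.SelectMediator(ed.Properties);` just before the call to EventToEvent, since `EventData.Properties` is an `IDictionary<string, object>`.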

I hope the above examples give you a picture of the Azure Workflow Function, where the body is a workflow invoker implemented as a custom WCF Channel.

OK, it's show time. Let's describe in detail how the Azure Workflow Function is configured and made ready to use. I am assuming you have some knowledge of the Azure Functions and Workflow Foundation (WF4) technologies.

Using the Azure Workflow Function requires a few steps, such as adding the assembly of the pre-compiled functions and the app settings for the repository of the workflow definitions and custom assemblies. The logical picture of this plumbing is shown in the following screen snippet:

Let's go step by step to build a working Azure Workflow Function that can invoke any workflow definition located in the configured repository.

Step 1. Download Solution/Binaries

In this step, we need to download (from this article) either the full source solution or just the binaries.

Visual Studio 2017 Solution

Binaries Package

The above solution has three projects, such as:

- Microservices project, which is a library of the pre-compiled Azure Workflow Functions. Note that the WorkflowChannel assembly is a part of this project.

- WorkflowActivities project, which is a custom assembly for workflow activities and class extensions.

- ActivityLibraryTemplates project, which is a project of the XAML workflow samples. We need to store these XAML files in the Blob Storage container microservices.

Step 2. WorkflowDefinitionStorage (Blob Storage)

The goal of this step is to create two containers in your Blob Storage account: microservices and assemblies. Copy all XAML files from Step 1 into the container microservices. Use the blob naming and content-type shown in the following picture:

Copy the assembly WorkflowActivities.dll to the container assemblies. Note that the name of the blob doesn't have the DLL extension.

Now, we have only three samples in the microservices container, but you can create more for your testing and work; there is no limit.

Step 3. Loopback Workflow (For Test Purpose)

This step shows a sample of our test. It is very simple and straightforward logic, where the input payload is looped back to the output json payload. The following screen snippet shows this Loopback workflow:

Note that a content-type header with the value application/json has been added to the _outMessage response message. As you can see, the above picture shows a sequence for adding this header to the message using built-in primitives such as InvokeMethod activities. Using a custom class extension, we can simplify the addition of the message header, see more details later.

Arguments Required for Each Workflow

The WorkflowChannel communicates with a dynamic workflow activity via "hardcoded arguments". The following arguments must be declared in each workflow, otherwise an error is thrown.

Note that the _metadata argument is a collection of the name/value pairs of the blob (XAML) file, which can be used as local variables.

Step 4. Function Apps

The following code snippet shows all the variables that can be configured in the app settings for the main precompiled function, Mediator.

#region name of the host function

string functionName = executionContext.FunctionName;

#endregion

log.Info($"[{executionContext.InvocationId}]HTTP trigger function Mediator hosted by {functionName}.");

#region storage for workflow definitions

string storageAccount = string.Empty;

if (ConfigurationManager.AppSettings.AllKeys.Contains

($"WorkflowDefinationStorage_{functionName}"))

storageAccount = ConfigurationManager.AppSettings

[$"WorkflowDefinationStorage_{functionName}"];

else if (ConfigurationManager.AppSettings.AllKeys.Contains

("WorkflowDefinationStorage"))

storageAccount = ConfigurationManager.AppSettings["WorkflowDefinationStorage"];

else

return req.CreateErrorResponse(HttpStatusCode.BadRequest,

"Missing a Workflow Definition storage account");

#endregion

#region resolving name of the workflow definition

string xaml = string.Empty;

if (ConfigurationManager.AppSettings.AllKeys.Contains

($"WorkflowDefinationName_{functionName}"))

xaml = ConfigurationManager.AppSettings[$"WorkflowDefinationName_{functionName}"];

if (string.IsNullOrEmpty(xaml))

{

xaml = req.GetQueryNameValuePairs()

.FirstOrDefault(q => string.Compare(q.Key, "xaml", true) == 0)

.Value;

if (string.IsNullOrEmpty(xaml))

{

if (req.Headers.Contains("afn-mediator-name"))

xaml = req.Headers.GetValues

("afn-mediator-name").First().Trim().Replace("$", "/");

else if (ConfigurationManager.AppSettings.AllKeys.Contains

("WorkflowDefinationName"))

xaml = ConfigurationManager.AppSettings["WorkflowDefinationName"];

else

return req.CreateErrorResponse(HttpStatusCode.BadRequest,

"Missing name of the microservice");

}

}

log.Info($"xaml={xaml}");

#endregion

The above configuration logic in the Mediator Function is based on a postfix in the variable name. Thanks to the ExecutionContext feature of the Azure Function, we can get the name of the executing function, which is perfect for the postfix of the variable in the settings. The postfix indicates that the config setting applies to a specific function; if no such setting exists, the variable without the postfix is used as the default.

The above logic also resolves the name of the XAML workflow which can be configured in the app settings, in the url query string or in the header.
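The lookup order can be condensed into two small helpers. This is a simplified sketch, not the code from Microservices.dll: the class and method names are hypothetical, the setting keys are copied from the snippet above (including their spelling), and the empty-value handling is slightly more forgiving than in the original.

```csharp
using System;
using System.Collections.Specialized;

public static class SettingsSketch
{
    // A function-specific key ("Key_functionName") wins; otherwise fall back to the plain key.
    // Returns null when neither key exists.
    public static string ResolveSetting(NameValueCollection settings, string key, string functionName)
    {
        return settings[key + "_" + functionName] ?? settings[key];
    }

    // Precedence: function-specific setting, query string, header, default setting.
    public static string ResolveWorkflowName(
        NameValueCollection settings, string functionName, string queryValue, string headerValue)
    {
        var name = settings["WorkflowDefinationName_" + functionName];

        if (string.IsNullOrEmpty(name))
        {
            name = queryValue;
        }

        if (string.IsNullOrEmpty(name) && headerValue != null)
        {
            // The header may carry '$' in place of '/', as in the Mediator snippet.
            name = headerValue.Trim().Replace("$", "/");
        }

        if (string.IsNullOrEmpty(name))
        {
            name = settings["WorkflowDefinationName"];
        }

        // Null or empty here corresponds to the "Missing name of the microservice" error.
        return name;
    }
}
```

Since `ConfigurationManager.AppSettings` is a `NameValueCollection`, it can be passed in directly, while a test can pass a hand-built collection instead.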

Finally, we have a Function step.

First of all, we need to add some configuration settings for WorkflowChannel, where our XAML blobs are located, so add the following keys into the app settings:

WorkflowDefinationStorage DefaultEndpointsProtocol=https;

AccountName=*****;AccountKey=*******;EndpointSuffix=core.windows.net

WorkflowDefinationName template/loopback/1000

For app settings valid only for a specific function, the name of the key has a postfix with the function name, as shown in the following:

WorkflowDefinationStorage_mediator DefaultEndpointsProtocol=https;

AccountName=*****;AccountKey=*******;EndpointSuffix=core.windows.net

OK, now we have to create a bin folder for custom assemblies in the wwwroot folder valid for all functions. Copy two assemblies such as Microservices.dll and WorkflowChannel.dll into the bin folder as is shown in the following screen snippet:

custom assemblies

Now, we are ready to create an Azure Function. Go to the portal and create the HttpTrigger C# function with the name mediator.

function.json:

{

"scriptFile": "..\\bin\\Microservices.dll",

"entryPoint": "RKiss.AFNLib.Microservices.Mediator",

"bindings": [

{

"authLevel": "function",

"name": "req",

"type": "httpTrigger",

"direction": "in",

"methods": [

"get",

"post"

]

},

{

"name": "$return",

"type": "http",

"direction": "out"

}

],

"disabled": false

}

Note that this is a pre-compiled function, so delete the run.csx file.

When done, you should have a Function as shown in the following picture:

Step 5. Test

The Azure Workflow HttpTrigger Function can be tested with any REST client, e.g., Postman, Advanced REST Client, etc. In the portal, use Get function URL to obtain the function address with an authorization code. Adding xaml=template/loopback/1000 to the URL query string addresses a workflow definition in the Blob Storage. Another way to address a XAML workflow is to use a header named afn-mediator-name.
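The same call can be made from code. The sketch below uses `HttpClient`; the class and method names are hypothetical, and the function URL, key, and payload are placeholders that the caller has to supply.

```csharp
using System;
using System.Net.Http;
using System.Text;
using System.Threading.Tasks;

public static class MediatorClientSketch
{
    // Builds the function URL. The key and the workflow name are escaped
    // because both may contain characters such as '/' or '='.
    public static Uri BuildUri(string functionUrl, string code, string xaml)
    {
        return new Uri(functionUrl
            + "?code=" + Uri.EscapeDataString(code)
            + "&xaml=" + Uri.EscapeDataString(xaml));
    }

    // Posts a JSON payload to the function and returns the response body as text.
    public static async Task<string> PostJsonAsync(HttpClient client, Uri uri, string json)
    {
        using (var content = new StringContent(json, Encoding.UTF8, "application/json"))
        using (var response = await client.PostAsync(uri, content))
        {
            response.EnsureSuccessStatusCode();
            return await response.Content.ReadAsStringAsync();
        }
    }
}
```

With the loopback workflow from Step 3, the returned text should be the posted payload. To address the workflow by header instead, leave out the xaml query parameter and add an afn-mediator-name header to the request.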

Note that the name of the workflow definition has the following format and must match the name of the blob file stored in the container microservices, see Step 2.

{topic}/{name}/{version}

examples:

template/loopback/1000

demo/test/2000

The following screen snippet shows a REST API call for Azure Workflow Function with a name mediator:

As you can see in the above response, the payload is the looped-back input, exactly as declared in the workflow (Step 3).

Note: You can use the following uri addresses for testing purposes. They are valid until August 15th, 2017.

https://rk20170721-fnc.azurewebsites.net/api/mediator?code=06nMbUuvjZugxEQiCTer/4wfBDUUGCccuklLKQRt4sEqwapWMDuaoQ==&xaml=template/loopback/1000

https://rk20170721-fnc.azurewebsites.net/api/mediator?code=06nMbUuvjZugxEQiCTer/4wfBDUUGCccuklLKQRt4sEqwapWMDuaoQ==&xaml=template/test/1000

Step 6. Using a Custom Assembly Within the Workflow

In this step, I am going to demonstrate using a custom assembly within the Workflow. As you saw in the Solution (Step 1), there is a WorkflowActivities project. This project contains one custom activity such as SendHttpRequest used in the sample Test$1000.xaml and one class extension to simplify a XAML expression. The assembly WorkflowActivities.dll is stored in the container assemblies, see the Step 2.

The following code snippet shows this class extension for adding a header to the message:

public static class MessageExtension

{

public static Message AddHeader(this Message message, XName name, string value)

{

if (message.Version == MessageVersion.None)

{

if (message.Properties.TryGetValue

(HttpResponseMessageProperty.Name, out dynamic hrmp) == false)

{

hrmp = new HttpResponseMessageProperty();

message.Properties.Add(HttpResponseMessageProperty.Name, hrmp);

}

hrmp.Headers.Add(name.LocalName, value);

}

else

{

var mh = new MessageHeader<string>(value);

message.Headers.Add(mh.GetUntypedHeader(name.LocalName, name.NamespaceName));

}

return message;

}

}

Note that the extension method returns the same type it extends (this in/out). This simple pattern allows chaining the method multiple times in the same XAML expression, see the following pictures:

As you can see, the above Loopback workflow looks much better than the previous one in Step 3.

The following screen snippet shows the usage of the custom activity SendHttpRequest for calling another Azure Function:

SendHttpRequest is an async activity for a generic REST call and its usage is very straightforward; we just need to populate its properties:

This article described the usage of an Azure Function for hosting a stateless workflow declared in a XAML resource stored in the Blob Repository. The concept is based on a custom WCF channel for Workflow. Using this plumbing technique, we can easily host the stateless workflow in many different processes, in the cloud, on-premises, etc. More details can be found in my earlier articles listed in the References below. Azure Functions are part of the cloud serverless architecture, and using them for hosting a workflow invoker gives you a new dimension for your business model decomposition. I hope you will find it useful.

- 21st July, 2017: Initial version