This is a comprehensive article that explains big data from beginning to end.

Introduction

I wanted to start surfing the net about big data, but I could not find any complete article which explained the process from beginning to end. Each article just described part of this huge concept. I felt a lack of a comprehensive article and decided to write this essay.

Nowadays, we encounter the phenomena of growing volume of data. Our desire to keep all of this data for long term and process and analyze them with high velocity has been caused to build a good solution for that. Finding a suitable substitute for traditional databases which can store any type of structured and unstructured data is the most challenging thing for data scientists.

I have selected a complete scenario from the first step until the result, which is hard to find throughout the internet. I selected MapReduce which is a processing data by hadoop and scala inside intellij idea, while all of this story will happen under the ubuntu Linux as operating system.

What is Big Data?

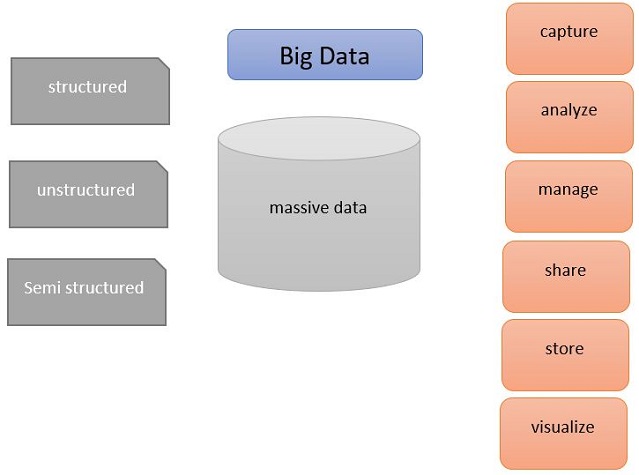

Big data is huge volume of massive data which is structured, unstructured or semi structured and it is difficult to store and manage with traditional databases. Its volume is variable from Terabyte to petabytes. Relational databases such as SQL Server are not suitable for storing unstructured data. As you see in the below picture, big data is set of all kinds of structured or unstructured data which has fundamental requirements such as storing, management, share, analyze.

What is Hadoop?

Indeed, we expect two issues from all of databases. Firstly, we need to store data, secondly, we want to process stored data properly in a fast and accurate way. Because of arbitrary shape and large volume of big data, it is impossible to store them in traditional databases. We need to think about new one which can handle either storing or processing of big data.

Hadoop is as a revolutionary database for big data, which has the capacity to save any shape of data and process them cluster of nodes. It also dramatically reduces the cost of data maintenance. With the aid of hadoop, we can store any sort of data for example all of user click for long period. So it makes easy historical analysis. Hadoop has distributed storage and also distributed process system such as Map Reduce.

It is obvious two expectation duties from databases in the below pictures. First store on the left and beginning step, then processing on the right side. Hadoop is an innovative database which is different from traditional and relational databases. Hadoop runs in bunch of clusters in multiple machines which each cluster includes too many numerous nodes.

What is Hadoop Ecosystem?

As I mentioned above, hadoop is proper for either storing unstructured databases or processing them. There is abstract definition for hadoop eco system. Saving data is on the left side with two different storage possibilities as HDFS and HBase. HBase is top of HDFS and both has been written by Java.

HDFS:

- Hadoop Distributed File System allows store large data in distributed flat files.

- HDFS is good for sequential access to data.

- There is no random real-time read/write access to data. It is more proper for offline batch processing.

HBase:

- Store data I key/value pairs in columnar fashion.

- HBase has possibility to read/write on real time.

Hadoop was written in Java but also you can implement by R, Python, Ruby.

Spark is open source cluster computing which is implemented in Scala and is suitable to support job iteration on distributed computation. Spark has high performance.

Hive, pig, Sqoop and mahout are data access which make query to database possible. Hive and pig are SQL-like; mahout is for machine learning.

MapReduce and Yarn are both working to process data. MapReduce is built to process data in distributed systems. MapReduce can handle all tasks such as job and task trackers, monitoring and executing as parallel. Yarn, Yet Another Resource Negotiator is MapReduce 2.0 which has better resource management and scheduling.

Sqoop is a connector to import and export data from/to external databases. It makes easy and fast transfer data in parallel way.

How Hadoop Works?

Hadoop follows master/slaves architecture model. For HDFS, the name node in master monitors and keeps tracking all of slaves which are bunch of storage cluster.

There are two different types of jobs which do all of magic for MapReduce processing. Map job which sends query to for processing various nodes in cluster. This job will be broken down to smaller tasks. Then Reduce job collect all of output which each node has produced and combine them to one single value as final result.

This architecture makes Hadoop an inexpensive solution which is very quick and reliable. Divide big job into smaller ones and put each task in different node. This story might remind you about the multithreading process. In multithreading, all concurrent processes are shared with the aid of locks and semaphores, but data accessing in MapReduce is under the control of HDFS.

Get Ready for Hands On Experience

Until now, we upgraded and touched upon the abstract meaning of hadoop and big data. Now it is time to dig into it and make a practical project. We need to prepare some hardware which will meet our requirements.

Hardware

- RAM: 8 GB

- Hard Free Space: 50 GB

Software

- Operating System: Windows 10 - 64-bit

- Oracle Virtual Machine VirtualBox

- Linux-ubuntu 17.04

- Jdk-8

- Ssh

- Hadoop

- Hadoop jar

- Intellij idea

- Maven

- Writing Scala code or wordcount example

1. Operating System: Windows 10 - 64-bit

If you still do not have Win 10 and want to upgrade your OS, let's follow the below link to get it right now:

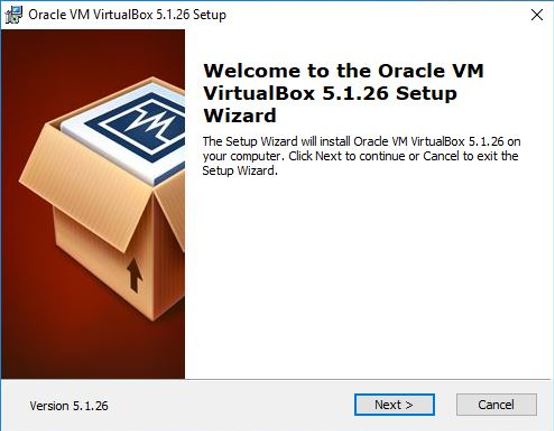

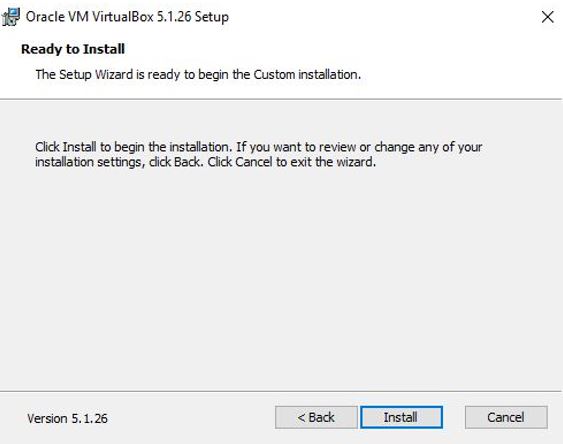

2. Oracle Virtual Machine VirtualBox

Please go to this link and click on Windows hosts and get lastest virtual box. Then follow the below instructions:

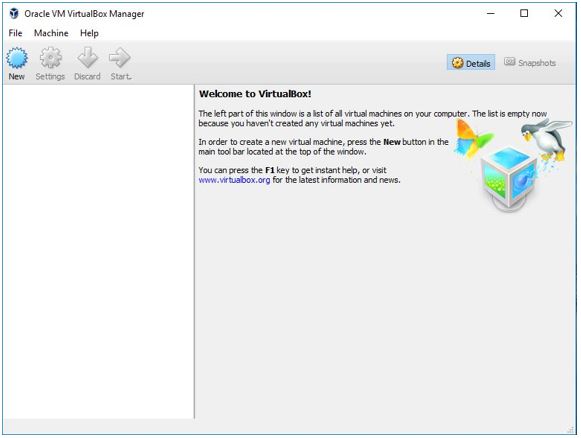

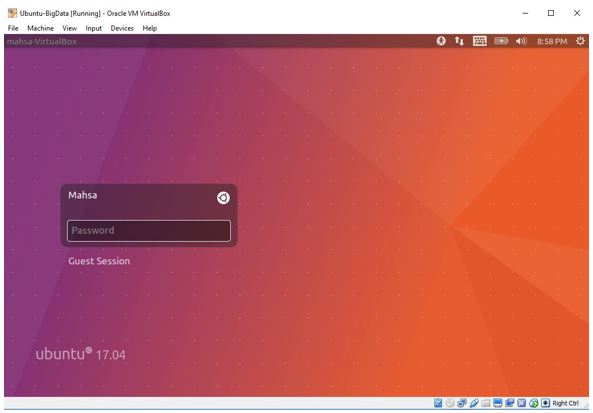

3. Linux-ubuntu 17.04

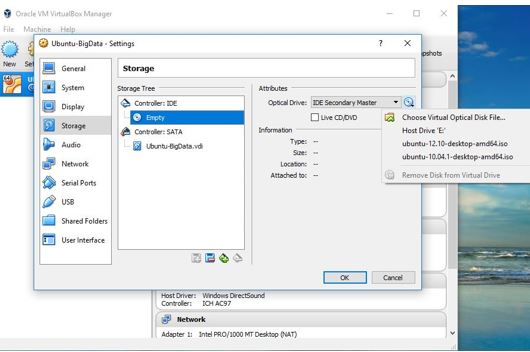

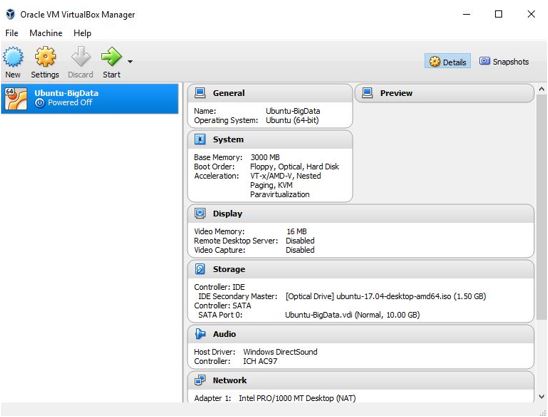

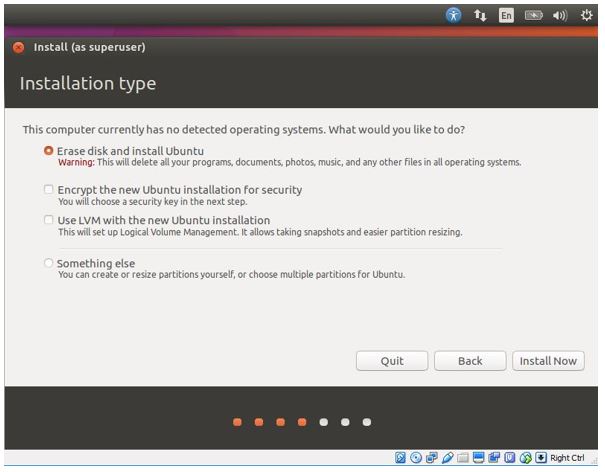

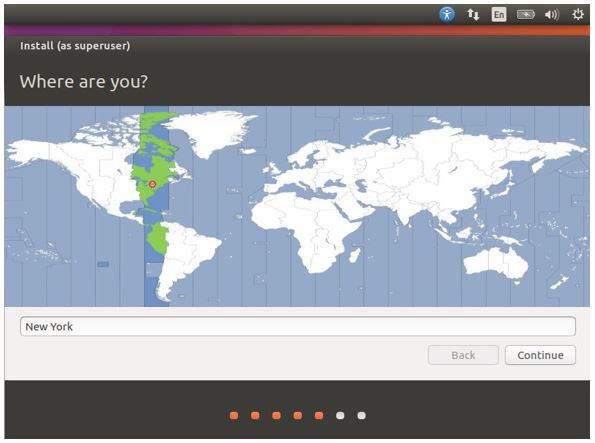

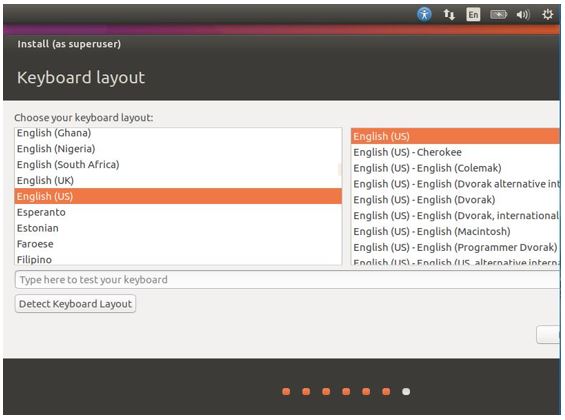

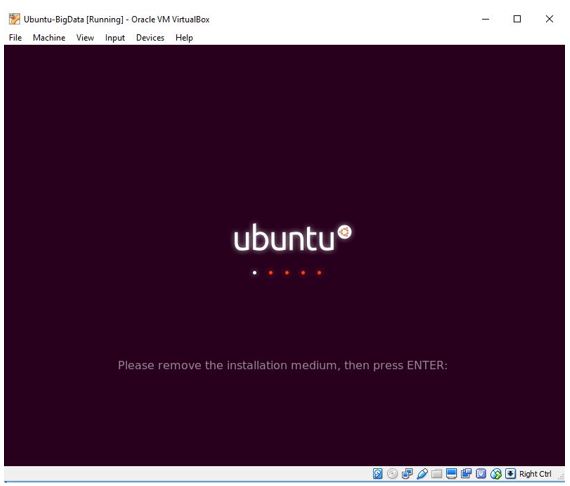

Please go to this link and click on 64-bit and get lastest ubuntu. Then follow the below instructions:

Click on "New" to create new vm for new OS which is Linux/Ubuntu 64-bit.

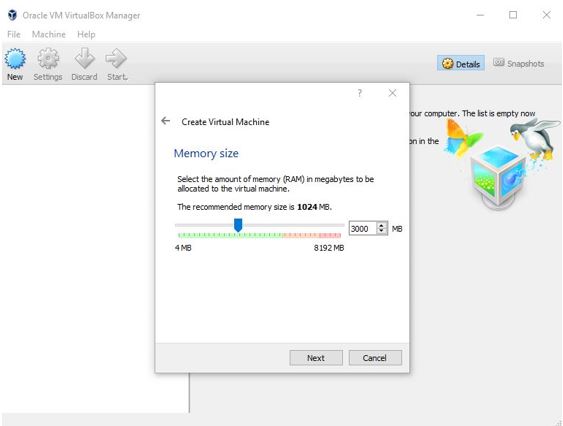

In the below picture, you should assign memory to new OS.

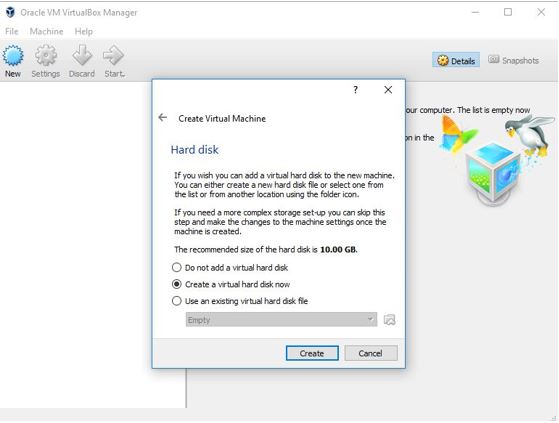

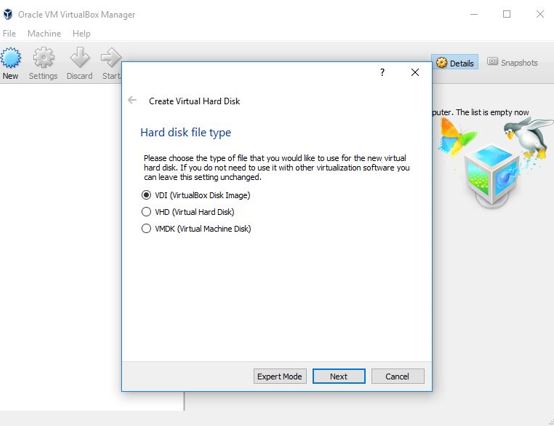

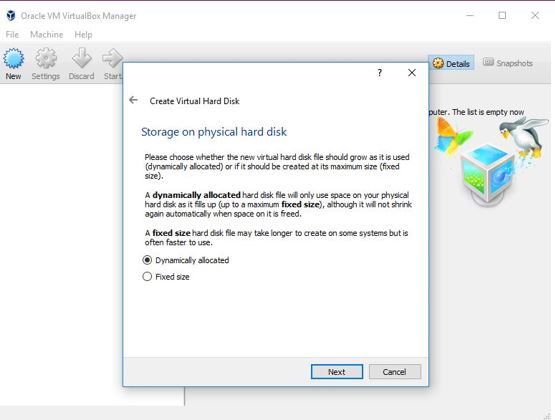

In the below picture, you should assign hard disk to new OS.

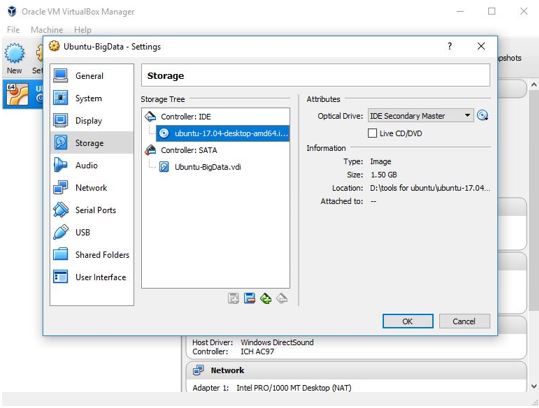

Now, it is time to select your ubuntu 17 which is .iso type. Please select "ubuntu-17.04-desktop-amd64".

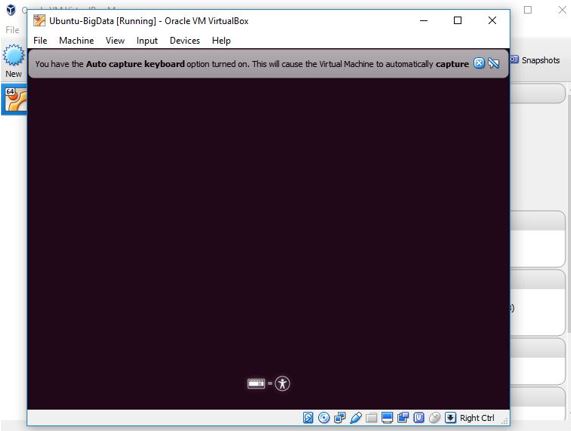

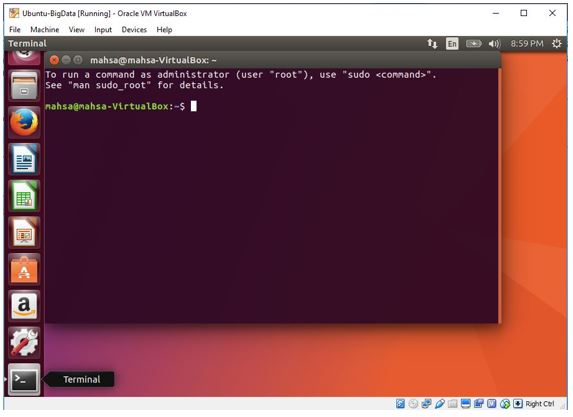

Now, double click on new vm and start to install.

Select your language and click on "Install Ubuntu".

In the below picture, you specify who you are, so it is first user who can use ubuntu.

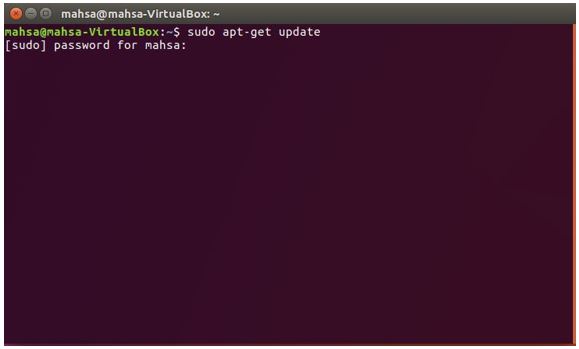

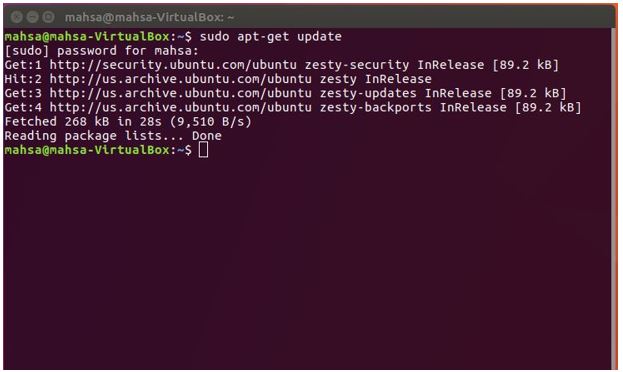

sudo apt-get install update

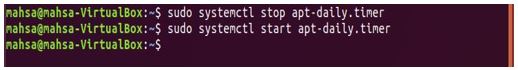

If you see below error, let's try to use one of two solutions:

First Solution:

sudo rm /var/lib/apt/lists/lock

sudo rm /var/cache/apt/archives/lock

sudo rm /var/lib/dpkg/lock

Second Solution:

sudo systemctl stop apt-daily.timer

sudo systemctl start apt-daily.timer

Then again:

sudo apt-get install update

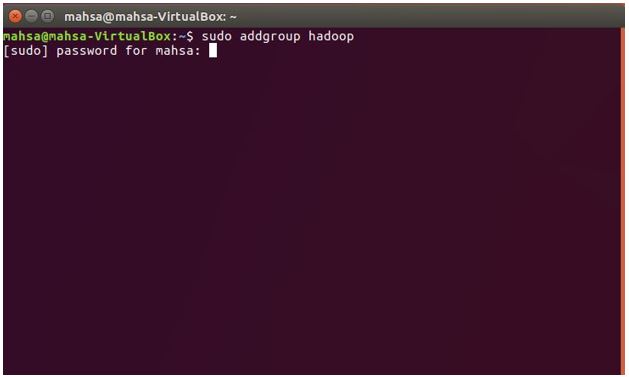

Let's create a new user and group for hadoop.

sudo addgroup hadoop

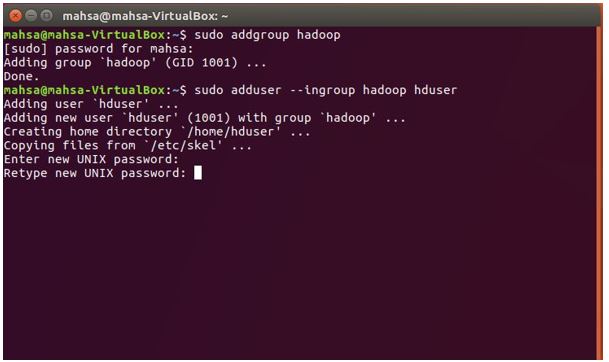

Add user to group:

sudo adduser --ingroup hadoop hduser

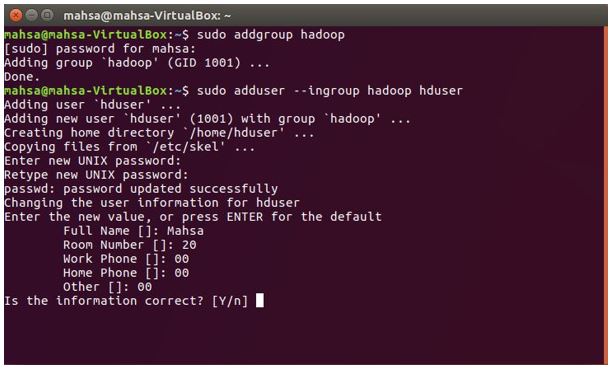

Let's enter your specification, ,then press "y" to save it.

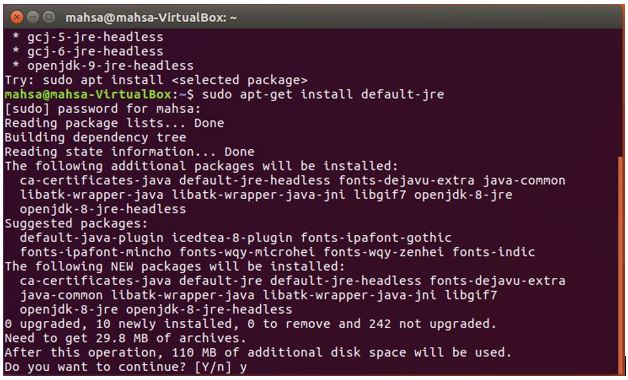

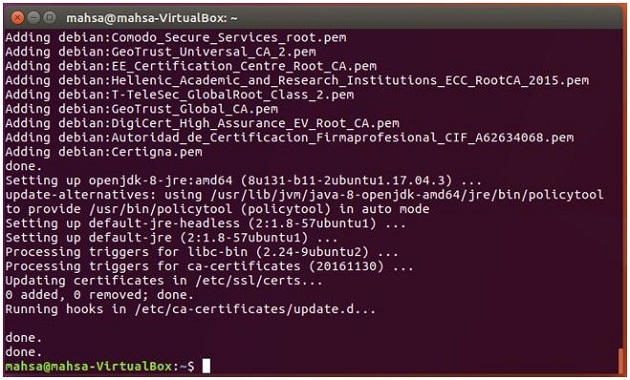

4. Installig JDK

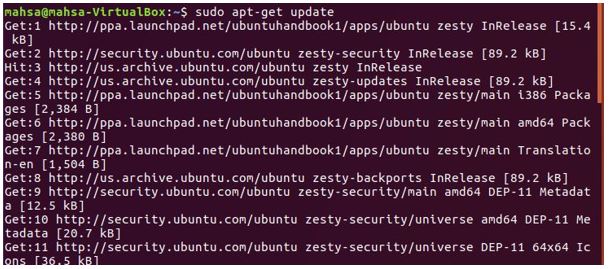

sudo apt-get update

java -version

sudo apt-get install default-jre

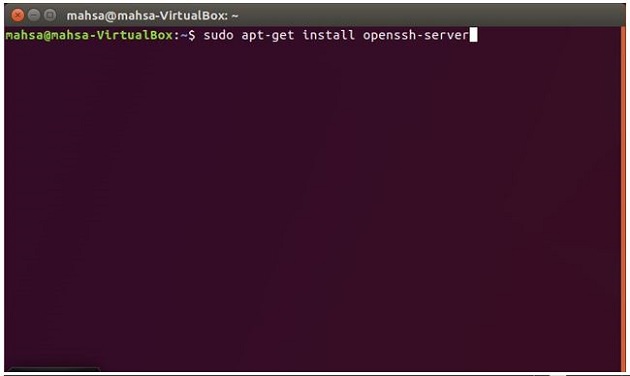

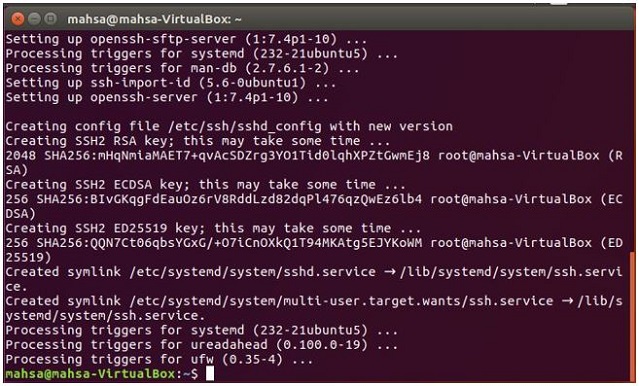

5. Installig open-ssh Protocol

OpenSSH provides secure and encrypted communication on the network by using SSH protocol. The question is that why we use openssh. It is because hadoop needs to get password in order to allow to reach its nodes.

sudo apt-get install openssh-server

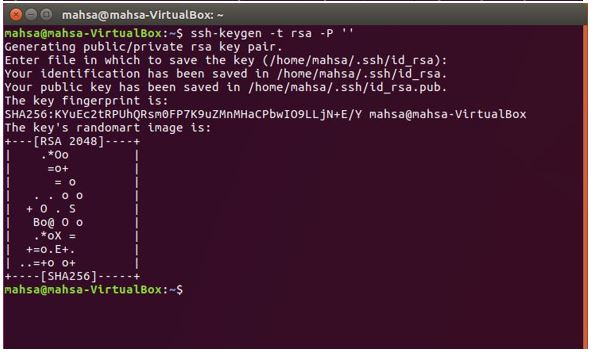

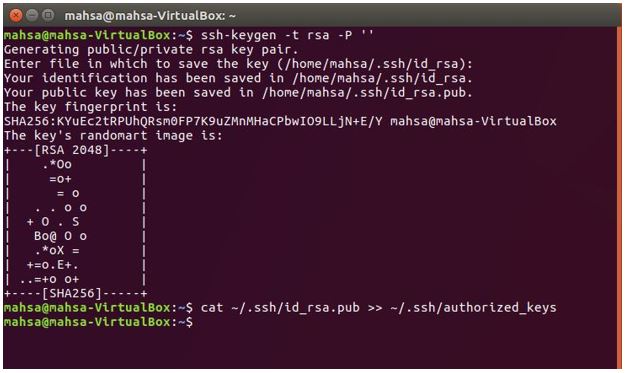

ssh-keygen -t rsa -P ''

After running the above code, if you are asked to enter file name, leave it blank and press enter.v

Then try to run:

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

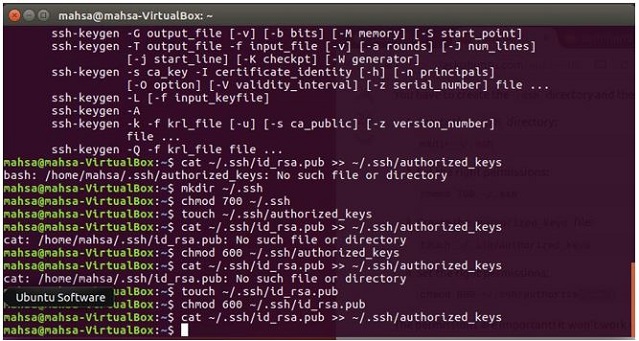

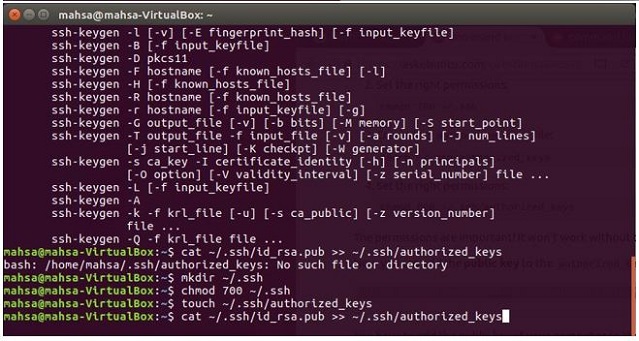

If you have "No File Directory" error, let's follow the below instructions:

After running "cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys" -> error: "No such file or directory"

- mkdir ~/.ssh

- chhmod 700 ~/.ssh

- touch ~/.ssh/authorized_keys

- chmod 600 ~/.ssh/authorized_keys

- touch ~/.ssh/id_rsa.pub

- chmod 600 ~/.ssh/id_rsa.pub

6- Installing Hadoop

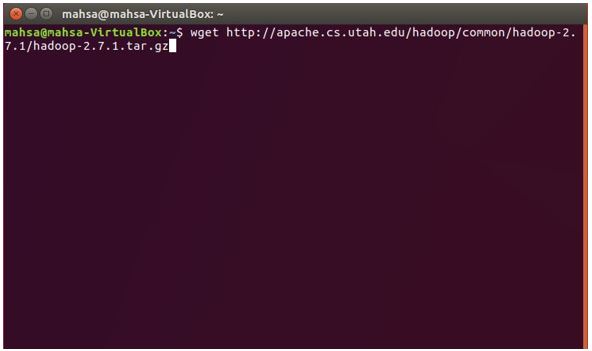

Firstly, you should download hadoop via the code below:

wget http://apache.cs.utah.edu/hadoop/common/hadoop-2.7.1/hadoop-2.7.1.tar.gz

To extract hadoop file:

tar xzf hadoop-2.7.1.tar.gz

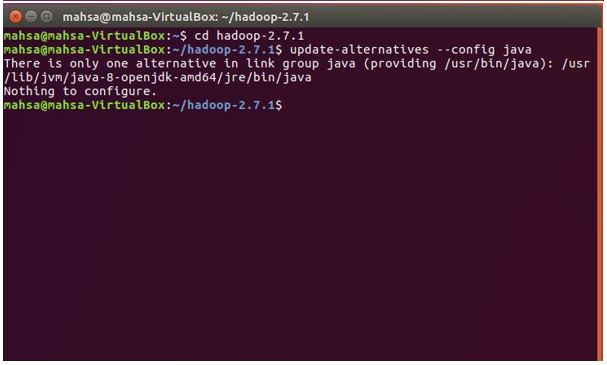

To find Java path:

update-alternatives --config java

Whatever after /jre is your Java path.

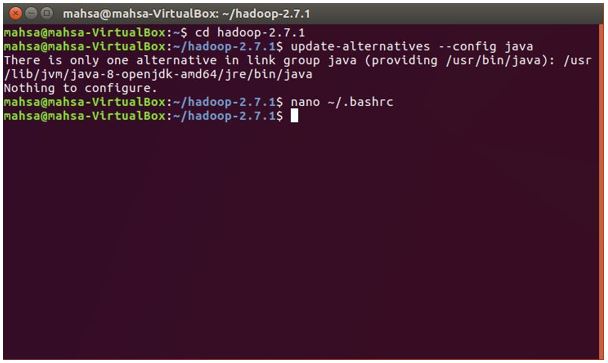

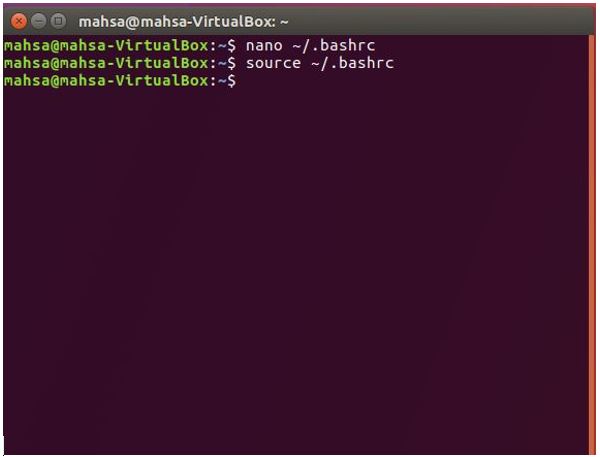

1. Edit ~/.bashrc

nano ~/.bashrc

The above code line will open addop-ev.sh, and after scrolling to end of file, find java-home and enter as hard code Java path which you have found with "update-altetrnatives --config java".

Then at the end, enter the below lines:

#HADOOP VARIABLES START

export JAVA_HOME=/usr/lib/jvm/java-8-openjdk-amd64

export HADOOP_INSTALL=/home/mahsa/hadoop-2.7.1

export PATH=$PATH:$HADOOP_INSTALL/bin

export PATH=$PATH:$HADOOP_INSTALL/sbin

export HADOOP_MAPRED_HOME=$HADOOP_INSTALL

export HADOOP_COMMON_HOME=$HADOOP_INSTALL

export HADOOP_HDFS_HOME=$HADOOP_INSTALL

export YARN_HOME=$HADOOP_INSTALL

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_INSTALL/lib/native

export HADOOP_OPTS="-Djava.library.path= $HADOOP_INSTALL/lib/native"

#HADOOP VARIABLES END

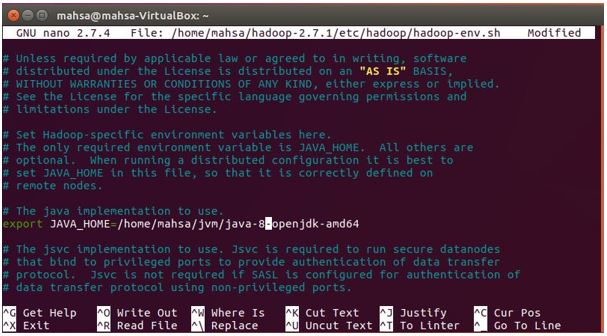

Also change java home by hard code such as:

For closing and saving file, press "ctl+X" and when it asks to save press "y" and when it asks for changing the file name just press "Enter" and do not change file name.

In order to confirm your above operations, let's execute:

source ~/.bashrc

2. Edit hadoop-env.sh

sudo nano /home/mahsa/hadoop-2.7.1/etc/hadoop/hadoop-env.sh

Write at the end of file:

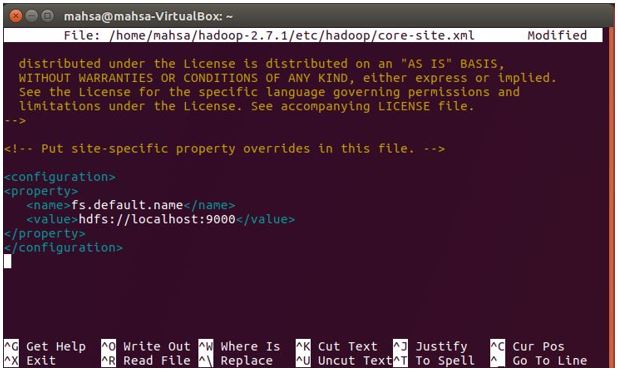

<property>

<name>fs.default.name</name>

<value>hdfs://localhost:9000</value>

</property>

3. Edit yarn-site.xml

sudo nano /home/mahsa/hadoop-2.7.1/etc/hadoop/yarn-site.xml

Write at the end of file:

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

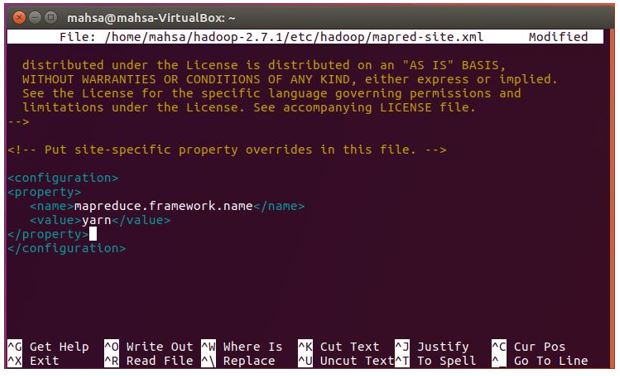

4. Edit mapred-site.xml

cp /home/mahsa/hadoop-2.7.1/etc/hadoop/mapred-site.xml.template

/home/mahsa/hadoop-2.7.1/etc/hadoop/mapred-site.xml

sudo nano /home/mahsa/hadoop-2.7.1/etc/hadoop/mapred-site.xml

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

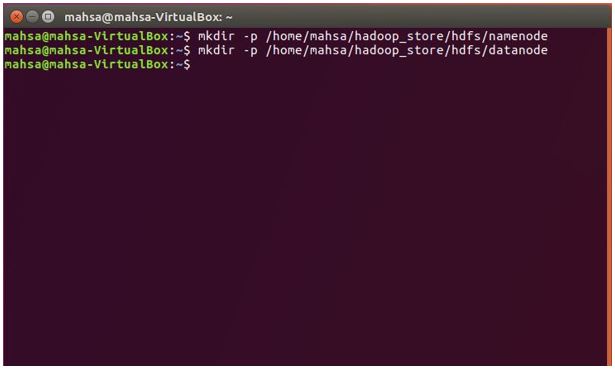

5. Edit hdfs

mkdir -p /home/mahsa/hadoop_store/hdfs/namenode

mkdir -p /home/mahsa/hadoop_store/hdfs/datanode

sudo nano /home/mahsa/hadoop-2.7.1/etc/hadoop/hdfs-site.xml

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/home/mahsa/hadoop_store/hdfs/namenode</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/home/mahsa/hadoop_store/hdfs/datanode</value>

</property>

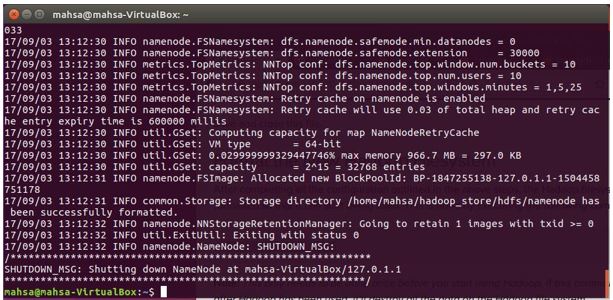

6. Format

hdfs namenode -format

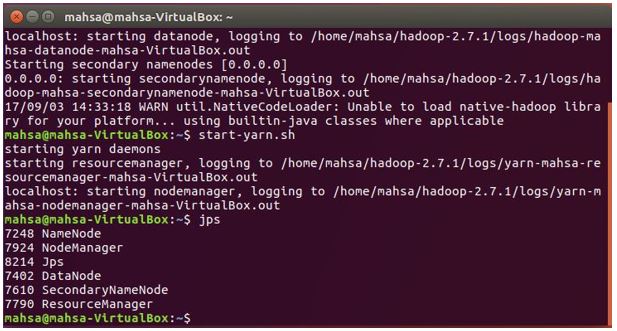

start-dfs.sh

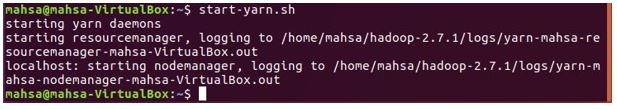

start-yarn.sh

By running "jps", you can be sure that hadoop has been installed properly.

jps

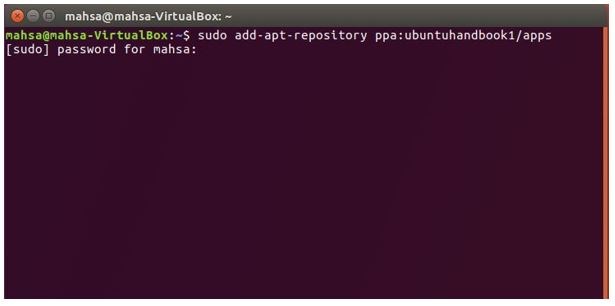

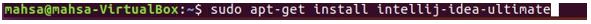

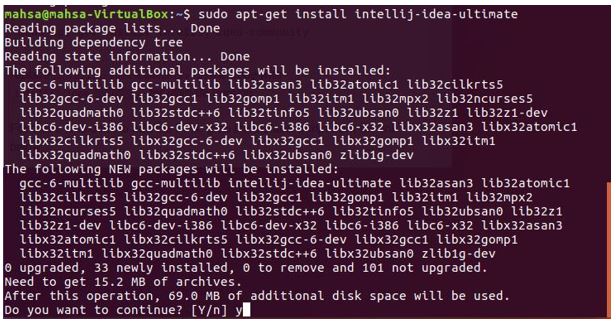

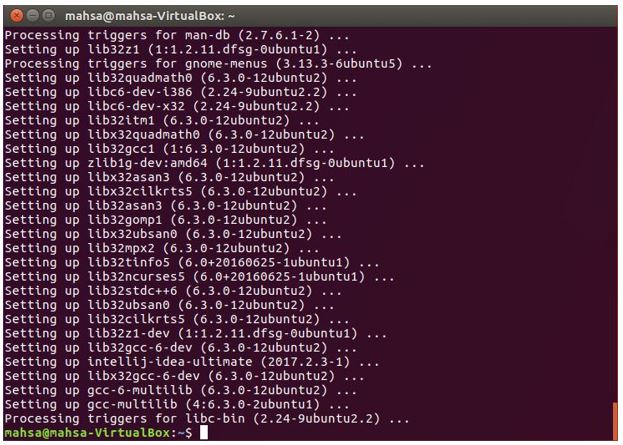

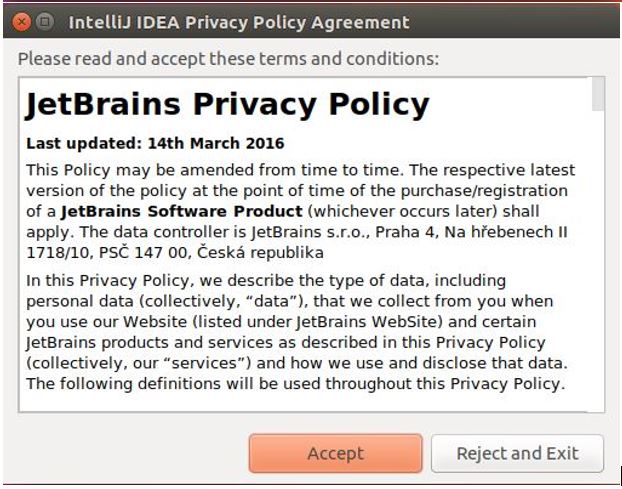

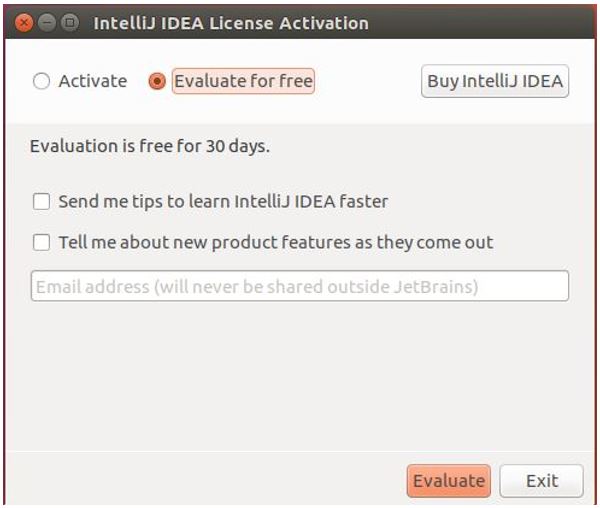

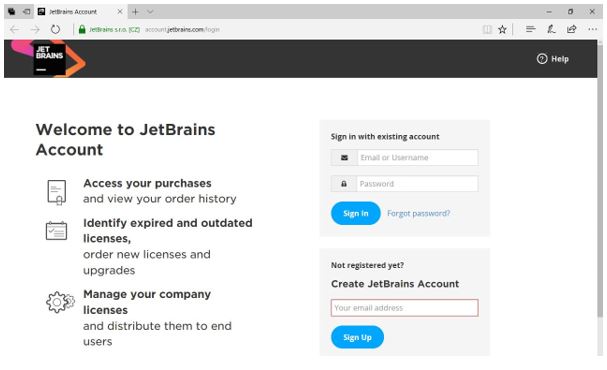

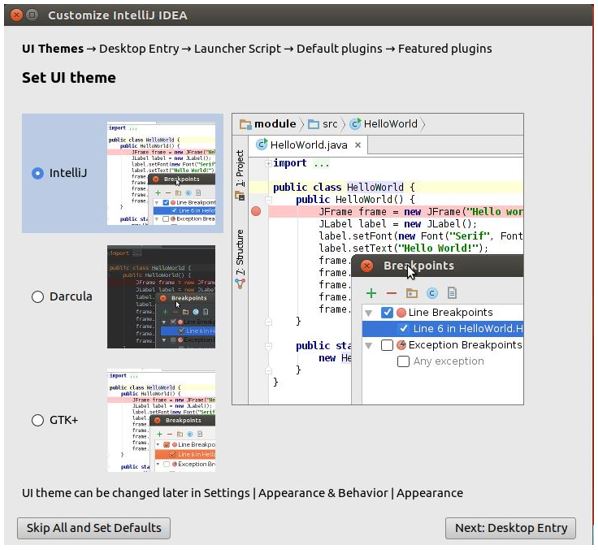

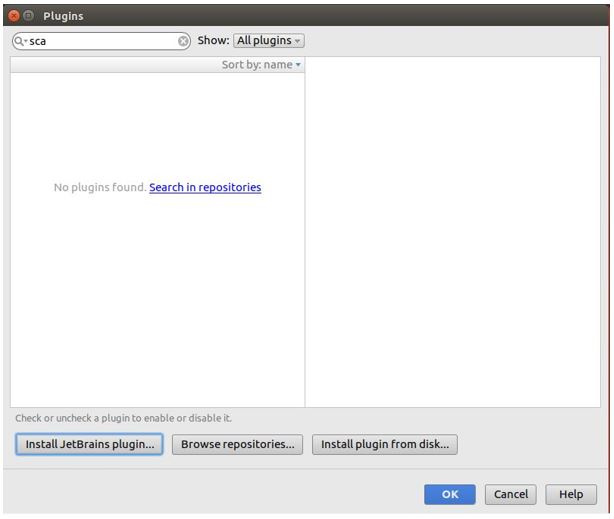

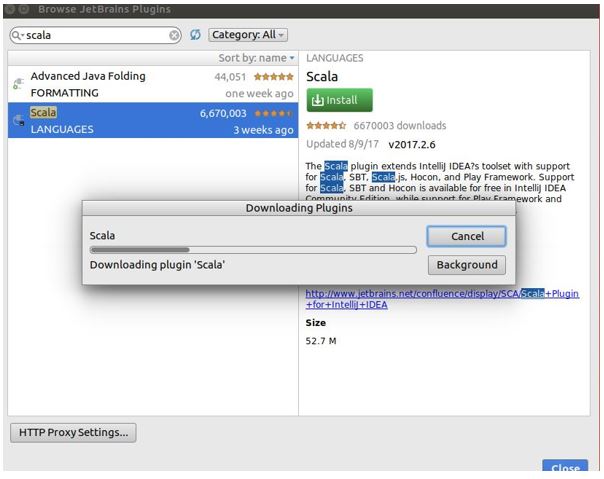

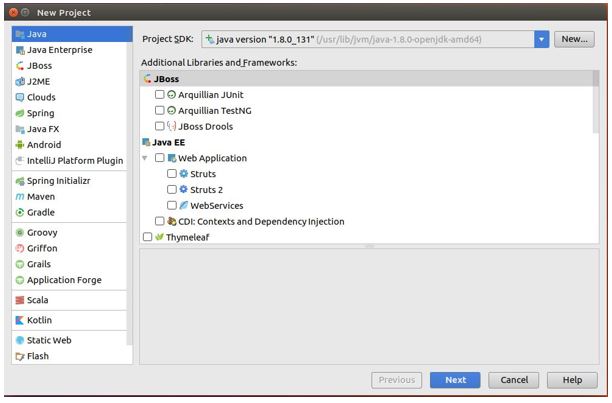

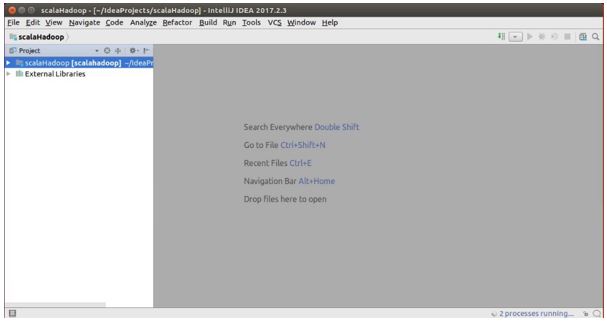

6. Installing Intellij Idea

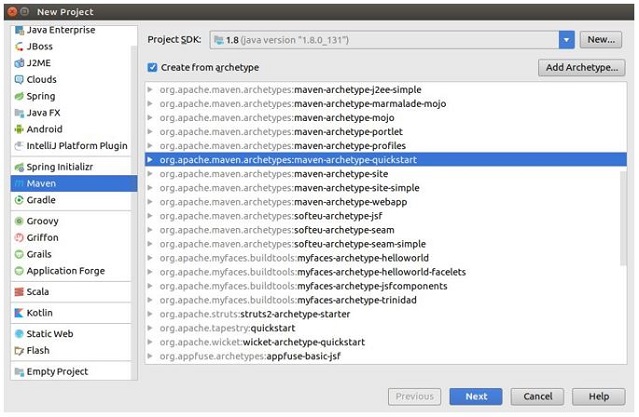

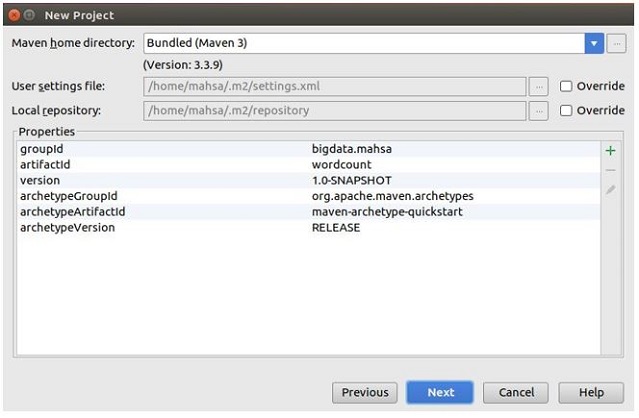

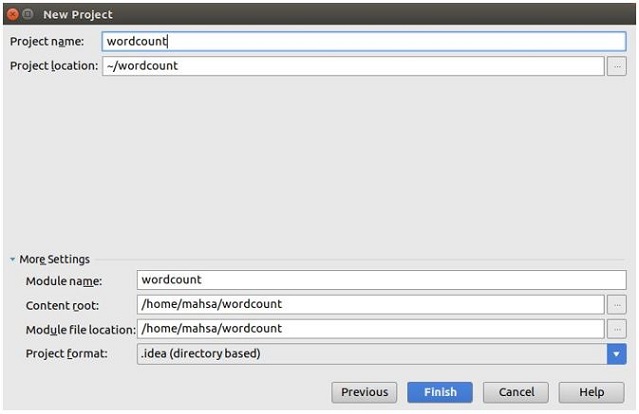

There are two possibilities for MapReduce implementation, one is scala-sbt and other way is maven, I want to describe both of them. First scala-sbt.

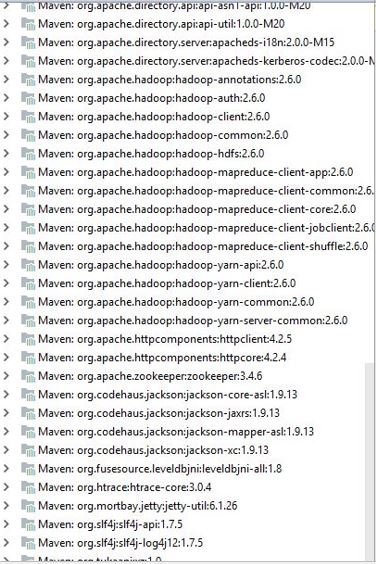

7. Installing Maven

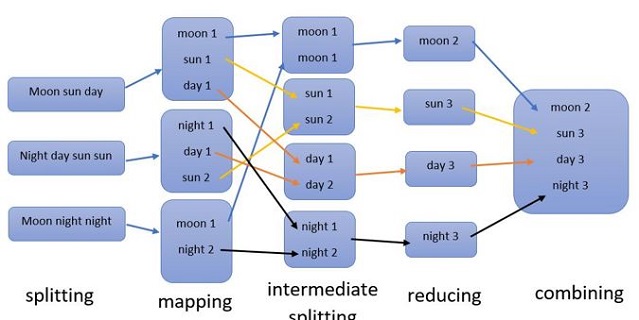

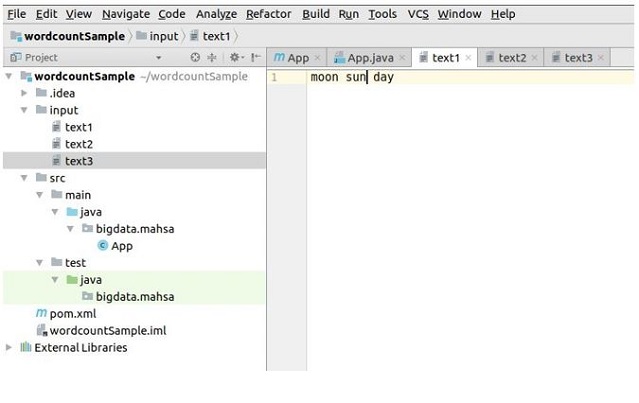

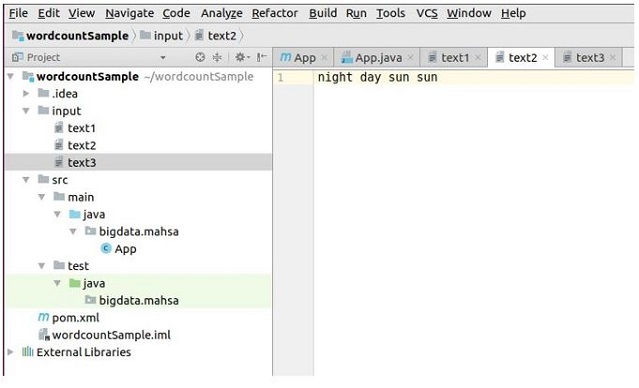

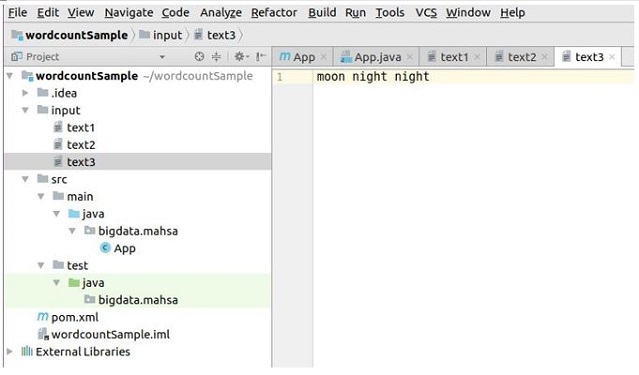

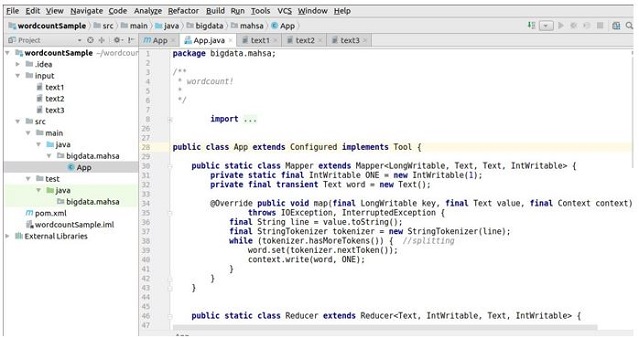

There are three text file at the below picture for practicing word count. MapReduce starts splitting each file to cluster of nodes as I explained at the top of this article. At Mapping phase, each node is responsible to count word. At intermediate splitting in each node is just hommogeous word and each number of that specific word in previous node. Then in reducing phase, each node will be summed up and collect its own result to produce single value.

At below picture that you will see as pop up, you must click to "Enable Auto Import" which will handle all libraries.

Go to Menu "Run" -> Select "Edit Configurations":

- Select "Application"

- Select "Main Class = "App"

- Select arguments as: "input/ output/"

You just need to create "input" as directory and output directory will be created by intellij.

After running application, you will see two files:

- "_SUCCESS"

- "part-r-00000"

If you open "part-r-00000", you will see the result as:

- moon 2

- sun 3

- day 3

- night 3

Using the Code

-package bigdata.mahsa;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.conf.Configured;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

import org.apache.hadoop.util.Tool;

import org.apache.hadoop.util.ToolRunner;

import java.io.IOException;

import java.util.StringTokenizer;

public class App extends Configured implements Tool {

public static class Mapper extends Mapper<LongWritable, Text, Text, IntWritable> {

private static final IntWritable ONE = new IntWritable(1);

private final transient Text word = new Text();

@Override public void map

(final LongWritable key, final Text value, final Context context)

throws IOException, InterruptedException {

final String line = value.toString();

final StringTokenizer tokenizer = new StringTokenizer(line);

while (tokenizer.hasMoreTokens()) {

word.set(tokenizer.nextToken());

context.write(word, ONE);

}

}

}

public static class Reducer extends Reducer<Text, IntWritable, Text, IntWritable> {

@Override

public void reduce(final Text key, final Iterable<IntWritable> values,

final Context context)

throws IOException, InterruptedException {

int sumofword = 0;

for (final IntWritable val : values) {

sum += val.get();

}

context.write(key, new IntWritable(sumofword));

}

}

@Override public int run(final String[] args) throws Exception {

final Configuration conf = this.getConf();

final Job job = Job.getInstance(conf, "Word Count");

job.setJarByClass(WordCount.class);

job.setMapperClass(Mapper.class);

job.setReducerClass(Reducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

job.setInputFormatClass(TextInputFormat.class);

job.setOutputFormatClass(TextOutputFormat.class);

FileInputFormat.addInputPath(job, new Path(args[0]));

FileOutputFormat.setOutputPath(job, new Path(args[1]));

return job.waitForCompletion(true) ? 0 : 1;

}

public static void main(final String[] args) throws Exception {

final int result = ToolRunner.run(new Configuration(), new App(), args);

System.exit(result);

}

}

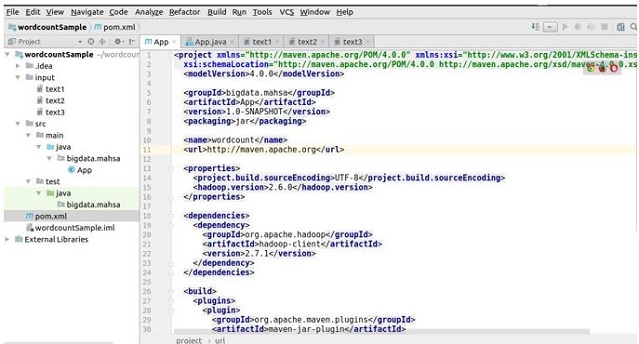

Pom.xml

<project xmlns="<a data-saferedirecturl="https://www.google.com/url?

hl=en&q=http://maven.apache.org/POM/4.0.0&source=gmail&ust=1505079008271000&

usg=AFQjCNELhZ47SF18clzvYehUc2tFtF4L1Q" href="http://maven.apache.org/POM/4.0.0"

target="_blank">http://maven.apache.org/POM/4.0.0</a>"

xmlns:xsi="<a data-saferedirecturl="https://www.google.com/url?

hl=en&q=http://www.w3.org/2001/XMLSchema-instance&source=gmail&

ust=1505079008271000&usg=AFQjCNFD9yHHFr1eQUhTqHt1em3OxoDqEg"

href="http://www.w3.org/2001/XMLSchema-instance" target="_blank">

http://www.w3.org/2001/XMLSchema-instance</a>"

xsi:schemaLocation="<a data-saferedirecturl="https://www.google.com/url?

hl=en&q=http://maven.apache.org/POM/4.0.0&source=gmail&ust=1505079008271000&

usg=AFQjCNELhZ47SF18clzvYehUc2tFtF4L1Q" href="http://maven.apache.org/POM/4.0.0"

target="_blank">http://maven.apache.org/POM/4.0.0</a>

<a data-saferedirecturl="https://www.google.com/url?hl=en&

q=http://maven.apache.org/xsd/maven-4.0.0.xsd&source=gmail&ust=1505079008271000&

usg=AFQjCNF31lT_EYlu0SxGI9EvuhtJcJ9Y0w" href="http://maven.apache.org/xsd/maven-4.0.0.xsd"

target="_blank">http://maven.apache.org/xsd/maven-4.0.0.xsd</a>">

<modelVersion>4.0.0</modelVersion>

<groupId>bigdata.mahsa</groupId>

<artifactId>App</artifactId>

<version>1.0-SNAPSHOT</version>

<packaging>jar</packaging>

<name>wordcount</name>

<url><a data-saferedirecturl="https://www.google.com/url?hl=en&

q=http://maven.apache.org&source=gmail&ust=1505079008271000&

usg=AFQjCNHfdU8bl-1WzHoSKompqgsFvDc6cA" href="http://maven.apache.org/"

target="_blank">http://maven.apache.org</a></url>

<properties>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<hadoop.version>2.6.0</hadoop.version>

</properties>

<dependencies>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>2.7.1</version>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.pluginsplugins</groupId>

<artifactId>maven-jar-plugin</artifactId>

<configuration>

<archive>

<manifest>

<mainClass>bigdata.mahsa.App</mainClass>

</manifest>

</archive>

</configuration>

</plugin>

</plugins>

</build>

</project>

Feedback

Feel free to leave any feedback on this article; it is a pleasure to see your opinions and vote about this code. If you have any questions, please do not hesitate to ask me here.

History

- 9th September, 2017: Initial version