Author: Anthony Daniels

Abstract

A new general purpose Multi-Objective Optimization Engine that uses a Hybrid Genetic Algorithm – Multi Agent System is described. Unlike traditional multi-objective methods, the proposed method transforms the problem into a Fuzzy Programming equivalent, including fuzzy objectives and constraints. The proposed method then performs fuzzy set operations on the objective functions and constraints combining the information into a single objective and single constraint. This single objective and single constraint is then used in sorting the fuzzy Pareto Set and grooming out inferior solutions.

Index Terms—Multi-Objective Optimization, Genetic Algorithms, Multi-Agent Systems, Fuzzy Programming, Open Source, Optimization Solver

I Introduction

In recent years, there has been a large amount of development in Hybrid Evolutionary Algorithms. The introduction of the Hybrid Genetic Algorithm – Multi Agent System (HGA-MAS) [1][2][10] proved that by adding multiple agents representing the interests of the individual variables, one would speed up the convergence of the genetic algorithm dramatically. Convergence speed was orders of magnitude faster than over a traditional genetic algorithm [1] (Please refer to the enclosed technical paper for all references). The reason for this is that the multi-agent system provides localized hill climbing ability for the individual chromosomes of the genetic algorithm.

II HGA-MAS Framework

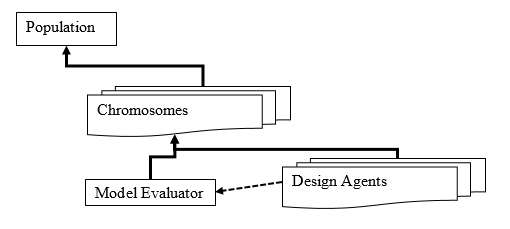

In the proposed HGA-MAS, the population contains a collection of chromosomes (or candidate designs). It should be noted that the chromosomes are real coded and not binary. Each chromosome contains its own model evaluator which is capable of analyzing the chromosome in terms of its objective functions and constraints. By having their own model evaluator, this directly supports multi-threaded parallelism in the optimization kernel. Each chromosome also has a collection of design agents that represent the interests of every individual variable that is part of the chromosomes design description. The proposed system follows the schematic in Figure 1.

Figure 1: HGA-MAS Schematic

In each generation of the HGA-MAS, the solver performs the following operations:

- Perform Design Agent Improvements Repeat N times (user setting)

- Perform Genetic Algorithm Operations (Crossover, Mutation)

- Inject Population into Pareto Set

- Re-Normalize and Evaluate the Pareto Set and Population

- Groom the Pareto Set

The design agent improvement session allows each of the design agents to improve the candidate design based on their own interests. They submit design change requests to the model evaluator, which in turn accepts the changes or denies them. Every design agent gets a turn to improve the design, and this process is repeated N times to give the design agents time to improve the candidate designs before the disruptive genetic algorithm operations take place. For the genetic algorithm phase, it should be noted that Elite protection is given to the chromosome with the highest objective function (more on that will be discussed in the following sections). A number of crossover (tail swapping) and mutation operations are performed. The number of operations is a function of the population size. Then the current chromosomes and the active Pareto Set is renormalized, evaluated, and inferior designs are weeded out in the grooming phase.

III Literature Review

This section will discuss the current state of Multi-Objective Optimization (MOO) from the perspective of the proposed system. In MOO, the scientist is trying to choose a solution or set of solutions that optimize sometimes conflicting objective functions subject to constraints. Often in these scenarios, there is not a single solution, but a set of equally good solutions that are spread across what is known as the Pareto Front. Sometimes, but not always, there is a Pareto Optimal solution that provides the best balance of input variables along the Pareto Front. The mathematical definition for a typical MOO is shown in (1).

In dealing with the multiple objective functions, a number of methods have been approached. One such method is known as the weighted sums method or scalarization. In this method, each fi(x) is multiplied by a scalar value ?i and the individual objective functions are directly summed into one long objective function [8]. The proposed method has the similar goal of reducing the number of equations being worked on, but the author uses Fuzzy Sets to achieve this. Another area of research that is relevant is the area of genetic algorithm non-dominated sorting of candidate solutions (designs).[9] The focus of this method is to provide a robust widely and evenly spread Pareto Front and sort the candidate solutions pairwise based on their objective functions. This pairwise sorting classifies solutions as either dominant, non-dominant, or inferior. From there, the scientist picks solutions that best fit their needs. The proposed method instead utilizes fuzzy sorting to rank solutions.

In the area of Fuzzy Programming, there has been effort to convert both the objective functions and or constraints into Fuzzy Utility Preference Functions [3] [11] [12] [15]. This effort has produced both the implementation of multiple objectives as sets [3], and as individual fuzzy functions that produce a preference surface [15]. Both methods have their merits being the reduction of data (sets) and providing a soft computing objective (surface). For the purposes of this research, the author has chosen to follow the path outlined in Buckley [3], however the fuzzy set operations performed in this research are slightly different and will be outlined in detail in the following section.

IV Fuzzy Programming

Fuzzy Programming, as a method of optimization, takes the existing mathematical formulation of the objectives and constraints and transforms them into fuzzy preference functions [3]. This is best shown by example. As an illustration, take the simple constraint of X1 <= 5. Transformed the constraint looks like the fuzzy utility preference function in Figure 2. Less than or equal to 5, the fuzzy constraint is fully satisfied with a utility of 1.0. This decreases as the constraint is violated until it maintains an unsatisfied value of 0.05. In fuzzy utilities, the fully unsatisfied constraint is a non-zero small number. The reason for this is that 0 utility means fully infeasible. Unfortunately, set operations with fully infeasible values lose all information in the multiplicative set inferences and thus make decision engines for agents ineffective at improving solutions that are temporarily in infeasible regions of the search space.

Figure 2: Example Fuzzy Constraint – Less Than or Equal to

Similarly to constraints, objective functions can also be turned into fuzzy utility functions. This is done through a process where the Pareto set current lower bound and upper bound are converted into a linear fuzzy utility preference function. This again is best illustrated by example. Say we have the objective function max f(x) = x1 + x2^2 and the current Pareto Set of candidate solutions has an objective function value range of [4.3…15.7], then the fuzzy utility would look like Fig. 3. It is important to note that with every generation, the ranges of the individual objective functions change, which is why at the end of every generation, the utility functions are renormalized given the current ranges and the Pareto Set is resorted.

Figure 3: Example Fuzzy Objective Function – Maximize F(x)

Extending this to multi-objective optimization is as simple as performing set operations on the fuzzified objective functions. For example:

Fi(x) is the fuzzy utility function of fi(x) and MIN is the minimum set operator. We are maximizing the minimum of the fuzzy utility functions. In terms of fuzzy set mathematics, this is the same as the AND operator. The proposed system performs the MIN and AVG operators on the set of fuzzy objective functions. This differs from weighted sum multi-objective methods that use scaling factors with summation, and not set operations to combine and reduce the number of objective functions. [8]

Using the set operator in (4) provides upward pressure on both the min and the average of the objective utilities. This can be useful in scenarios where there is an unhappy objective function that is never satisfied. The average operator provides pressure on the group as a whole. Similarly, the same operator is applied to the set of constraints to roll up the collection into a single constraint utility. The renormalization step once a generation on the Pareto Set is a critical operation because it allows for an up to date picture of the candidate solutions and how they compare to one another. The purpose of the fuzzy sorting is to provide relative fitness of a candidate solution, not some absolute value. This differs in approach from traditional non-dominated sorting of genetic algorithms [9].

V Example Problem

For the purposes of example, the following problem has its number of objective functions limited to two so the traditional Pareto Fronts can be plotted and the comparison can be made to the Fuzzy Pareto Set. This example problem has two variables, two objective functions, and a non-convex Pareto Front [16]. The optimization is as follows:

Even though the search space was non-convex, the HGA-MAS found the Pareto Optimal solution. Figure 4 Shows the Pareto Set color mapped to the objective function utility. The equi-utility banding can readily be seen as one moves out from the Pareto Optimal solution.

Figure 4: Example Problem Pareto Front Fuzzy Utility

Figure 5: Example Problem Top Ten Designs

VII Theory Section Conclusion

The combination of a Hybrid Genetic Algorithm – Multi Agent System and Fuzzy Programming has produced a solver that can simplify multi-objective optimization. By transforming the traditional optimization problem into a Fuzzy Programming form, the scientist can reduce the objective functions and constraints to a single element each through set operations. This greatly simplifies the practical optimization of the problem. In addition, one can mix minimization, maximization, and goal seek objective functions heterogeneously without difficulty. In each of the example problems, the solver was able to find the Pareto Optimal solution in 100 generations. This included both convex and non-convex problems. This type of solver has also been successful in the handling of Integer Programming problems such as bin packing [2].

VIII TridentOpt Code Manual

Now that the theory of how TridentOpt works has been discussed, a detailed discussion of the code base is necessary. The GUI is written in QT 5.8, so you will need to install the open source version of that. It should also be mentioned that the TridentOptCore makes heavy use of the HPC Template Library for everything from serialization to random number generation.

At the top of the object hierarchy is the TOptPopulation. The TOptPopulation has two collections of TOptChromosomes, the active population of candidate designs and the Pareto Set. Each TOptChromosome has the necessary information to define the problem being solved. The TOptChromosome has a collection of TOptObjective, a collection of TOptConstraint, a collection of TOptAgent, and a TOptModel. Every generation of the solver, the TOptPopulation goes through the following steps:

void TOptPopulation::ImprovePopulation(void)

{

int numChromo, numPareto;

numChromo = m_arrChromosomes.size();

this->UpdatePopulationUtilities();

this->UpdateTopTenElite();

for (int m = 0; ((m < m_intNumDesignCycle) && (m_blnIsRunning == true)); m++)

{

if (m_blnMultiThreaded)

{

this->ImproveDesignAgentsMT();

}

else

{

this->ImproveDesignAgentsST();

}

}

this->ImproveGeneticAlgorithm();

this->UpdatePopulationUtilities();

this->UpdateTopTenElite();

for (int m = 0; m < numChromo; m++)

{

numPareto = m_arrParetoSet.size();

TOptChromosome * ptrCurr = m_arrChromosomes.at(m);

bool blnAlreadyThere = false;

for (int n = 0; n < numPareto; n++)

{

TOptChromosome * ptrParetoCurr = m_arrParetoSet.at(n);

m_sngEpsilon = 0.001f;

bool blnTest = ptrParetoCurr->IsChromoEqual(ptrCurr, m_sngEpsilon);

if (blnTest == true)

{

blnAlreadyThere = true;

break;

}

}

if (blnAlreadyThere == false)

{ TOptChromosome * ptrNew = new TOptChromosome();

*ptrNew = *ptrCurr;

ptrNew->Set_ptrEvaluator(m_ptrPopEvaluator);

ptrNew->Set_ptrParent(this);

m_arrParetoSet.push_back(ptrNew);

}

}

this->GroomParetoFront(false);

this->SaveParetoSetDump();

std::sort(m_arrChromosomes.m_vect.begin(), m_arrChromosomes.m_vect.end(),

SortChromosomeDescending);

if (numChromo >= 10)

{

int intReseed = round(numChromo * 0.1f);

for (int k = numChromo - 1; k >= numChromo - 1 - intReseed; k--)

{

if ((k >= 0) && (k < numChromo))

{

if (m_arrChromosomes.at(k)->Get_blnEliteChromosome() == false)

{

m_arrChromosomes.at(k)->RandomizeChromosome();

}

else

{

int z = 10; }

}

}

}

else

{

m_arrChromosomes.at(numChromo - 1)->RandomizeChromosome();

}

return;

}

In order to introduce new problems to the solver, the user needs to write a new TOptModel subclass. The code base provides a number of example models including all of the ones in the technical paper in the documentation folder. In order to successfully subclass the TOptModel, one only has to override the virtual functions listed below:

class HTL_DLLAPI TOptSineCos : public TOptModel

{

public:

TOptSineCos();

virtual ~TOptSineCos();

TOptSineCos(const TOptSineCos& rhs);

TOptSineCos & operator = (const TOptSineCos & rhs);

public:

virtual int CreateDesignChromosome(void);

protected:

virtual int CalculateModel(void);

const double m_PI = 3.14159265359f;

};

CreateDesignChromosome function builds the design chromosome for the problem. This is the TOptChromosome that all other chromosomes will be carbon copied from in the TOptPopulation. You have to set up the TOptObjectives, TOptConstraints, and TOptAgents (Variables) for your problem. Agents have two modes Single Step and Gradient mode. Single Step takes a step in either direction and Gradient takes multiple steps in the direction of the derivative until the local optima is found. Use the code examples provided to successfully implement. CalculateModel is where you actually calculate the math model of your optimization problem. This is the meat of the TOptModel. Let's take a look at the TOptSineCos model function.

double phi1(double s1, double s2)

{

return ((1.0 / 2.0) * sin(s1)) - (2 * cos(s1)) + sin(s2) - ((3.0 / 2.0) * cos(s2));

}

double phi2(double s1, double s2)

{

return ((3.0 / 2.0) * sin(s1)) - (cos(s1)) + (2.0*sin(s2)) - ((1.0 / 2.0) * cos(s2));

}

int TOptSineCos::CalculateModel()

{

double s1, s2, J1, J2;

s1 = m_arrVars.at(0);

s2 = m_arrVars.at(1);

J1 = 1.0f + pow(phi1(1.0, 2.0) - phi1(s1, s2), 2) + pow(phi2(1.0, 2.0) - phi2(s1, s2), 2);

J2 = pow(s1 + 3.0, 2) + pow(s2 + 1.0, 2);

m_arrObjs.at(0) = J1; m_arrObjs.at(1) = J2;

m_arrConsts.at(0) = s1; m_arrConsts.at(1) = s1; m_arrConsts.at(2) = s2; m_arrConsts.at(3) = s2; return 1;

}

The reader will notice that the math is the equations outlined in Equation 5 was mentioned earlier in the example problem. The flow is simple. Import the variables, calculate the objectives and constraints, and return those values back to the containers. m_arrVars is the container for variables, m_arrObjs is the container for objectives, and m_arrConsts is the container for constraint values. As long as these inputs and outputs are met, the TridentOpt engine takes care of the rest of the details.

One other detail. In order for your model to be loaded into the system, it has to be registered with the TOptModelFactory. This is done by the following statement in the header file.

static bool blnTOptSineCos_Registered =

HTL::GetBaseFactoryPtr()->Register<TOptSineCos>("TOptSineCos");

static bool blnTOptSineCos_Registered2 =

TridentOptCore::GetModelFactoryPtr()->Register<TOptSineCos>("TOptSineCos");

References

[1] Daniels A. and M. G. Parsons, “An agent based approach to space allocation in general arrangements”, Proceedings of the 9th International Marine Design Conference, Ann Arbor, MI, May 2006.

[2] Daniels A. and M.G. Parsons, “Development of a Hybrid Agent – Genetic Algorithm Approach to General Arrangements”, Proceedings of COMPIT 2007, Cortona, Italy, April 2007. Accepted Journal of Ship Technology Research, 2008.

[3] Buckley J.J., “Fuzzy Programming and the Pareto Optimal Set”, Fuzzy Sets and Systems, Volume 10, page 57-63. 1983.

[4] Unknown Author, modeFrontier, Inc. “A simple multi-objective optimization problem”,

http://www.math.unipd.it/~marcuzzi/DIDATTICA/LEZ&ESE_PIAI_Matematica/3_cones.pdf

For more information, visit: www.esteco.comor send an e-mail to: modeFRONTIER@esteco.com

[5]Unkown Author, “Solving Three-objective Optimization Problems Using Evolutionary Dynamic Weighted Aggregation: Results and Analysis”, Department of Computing, University of Surrey, Guildford, Surrey, GU2 7XH, UK

www.soft-computing.de/moo3D.pdf

[6] R. Viennet, C Fonteix, and I. Marc. “Multi-criteria optimization using genetic algorithms for determining a pareto set”, International Journal of Systems Science, Volume 27(2), page 255-260, 1996.

[7] Zhenan He, Gary G. Yen, and Jun Zhang, “Fuzzy-Based Pareto Optimality for Many-Objective Evolutionary Algorithms” IEEE TRANSACTIONS ON EVOLUTIONARY COMPUTATION, Volume 18, NO. 2, April 2014

[8] Santos Silva Carlos A. “Multi-objective Optimization Overview”, MIT Portugal

https://fenix.tecnico.ulisboa.pt/downloadFile/3779575589383/Class8

[9] Fonseca Carlos M. and Peter J. Fleming “Genetic Algorithms for Multi-objective Optimization: Formulation, Discussion and Generalization”, Genetic Algorithms: Proceedings of the Fifth International Conference (S. Forrest, ed.), San Mateo, CA: Morgan Kaufmann, July 1993.

[10] Adnan Acan , “GAACO: A GA + ACO Hybrid for Faster and Better Search Capability”, International Workshop on Ant Algorithms, pages 300-301. ANTS 2002

[11] Koppen Mario, Raul Vicente-Garcia, and Bertram Nickolay “Fuzzy-Pareto-Dominance and its Application in Evolutionary Multi-Objective Optimization”, Third International Conference, EMO 2005, Guanajuato, Mexico, March 9-11, 2005. Proceedings.

[12] Erfani Tohid and Sergei V.Utyuzhnikov, “Handling Uncertainty and Finding Robust Pareto Frontier in Multi-objective Optimization Using Fuzzy Set Theory”, 51st AIAA/ASME/ASCE/AHS/ASC Structures, Structural Dynamics, and Materials Conference Orlando, Florida. April 2010.

[13] Chase N., M. Rademacher, E. Goodman “A Benchmark Study of Multi-Objective Optimization Methods”

https://www.researchgate.net/publication/242077047_A_Benchmark_Study_of_Multi-Objective_Optimization_Methods

[14] Deb Kalyanmoy, Samir Agrawal, Amrit Pratap, and T Meyarivan, “A Fast Elitist Non-Dominated Sorting Genetic Algorithm for Multi-Objective Optimization: NSGA-II”, International Conference on Parallel Problem Solving from Nature, PPSN 2000: Parallel Problem Solving from Nature PPSN VI pp 849-858.

[15] Ghosh Debdas, Debjani Chakraborty “A method to obtain fuzzy Pareto set of fuzzy multi-criteria quadratic programming problems”, Annals of Fuzzy Mathematics and Informatics, 2013.

[16] Lopeza RafaelH.,T.G.Rittob,RubensSampaioc andJoseEduardoS.deCursid, “A New Multi-Objective Optimization Algorithm For Non-Convex Pareto Fronts and Objective Functions”, Mecánica Computacional Vol XXXII, pages 669-679, Mendoza, Argentina, 19-22 November 2013.