Introduction

This is a PowerShell tool that monitors a set of HTTP hosts and logs the results into an MSSQL database. You can download the latest version here and check the official GitHub repository. It is a good showcase for understanding PowerShell basics and a nice example of how PowerShell can be used to build a quick and dirty application. Moreover, it is a handy tool for monitoring real websites without pain or external services.

Why a Powershell tool to Monitor Http resources

This tool is meant as a Swiss Army knife for monitoring websites without relying on external services or installing complex software. You may need to monitor a few sites, perhaps for testing or debugging, without spending too much time adopting a huge tool. Or you may need a monitoring tool that can be customized to collect complex statistics or to add business logic to the monitoring.

Because this project was built to be as simple as possible, it is just a single PowerShell script. This means you only have to download one file and run it. No need to worry about an install process, frameworks to download or prerequisites. Just download and run. Easy, right? The script can also install itself as a Windows service. All settings can be tuned directly in the script or through an external config file. Once the service is running, it periodically reloads the website list and tries to call each entry, saving the result into the database.

Monitoring

Monitoring is a big topic, and I want to focus on website monitoring, which is the subject of this article. Everyone with a website knows that things can sometimes go wrong. Why? Every web developer knows that even a simple website is more than a set of static resources. In the simplest case there is a web server, an "engine" (PHP, ASP.NET, Java...) that processes requests, and a database... and nowadays this configuration really is the simplest one. Sometimes something goes wrong in the code, in the web server, in the network, in the OS... How can a monitoring system help us? Of course, monitoring does not solve the real problem, but it can help to prevent issues and to resolve them quickly. Do you think this is too much for a simple website? Are you sure? Our website is part of our business as long as customers can reach us through it. Even if your website is up 99% of the time, customers cannot reach you for about 7 hours per month. That doesn't sound good.
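
To make the 99% figure concrete, here is a minimal sketch of the arithmetic. The function name `Get-DowntimeHours` and the 30-day month are assumptions made for illustration; they are not part of http-monitor.

```powershell
# Hypothetical helper: hours of downtime implied by an availability percentage.
function Get-DowntimeHours {
    param(
        [double]$AvailabilityPercent,   # e.g. 99 for "up 99% of the time"
        [int]$Days = 30                 # length of the period (assumed: a 30-day month)
    )
    $totalHours = $Days * 24
    return $totalHours * (100 - $AvailabilityPercent) / 100
}

Get-DowntimeHours -AvailabilityPercent 99      # 7.2 hours per month
Get-DowntimeHours -AvailabilityPercent 99.9    # roughly 0.72 hours per month
```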

The focus of this article is on building an active monitor that can tell when a website is up or down. The tool monitors a standard HTTP(S) service by sending a request and interpreting the result. If the response is not correct (a correct response being HTTP status 200 with a non-empty body), it will alert you and, in any case, a log entry will be written to a database, so you will be able to get statistics or detect website weaknesses. This is called active monitoring because the system injects artificial traffic into the target and checks the results (as opposed to passive monitoring, where probes analyze the results of real traffic).
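
The "correct response" rule can be written down as a tiny predicate. This is only a sketch of the rule as stated here; the function name `Test-ResponseOk` is hypothetical and the real script may combine these checks differently.

```powershell
# Hypothetical predicate: a response counts as correct only when the
# status is 200 and the body is not empty.
function Test-ResponseOk {
    param(
        [int]$StatusCode,
        [long]$ContentLength
    )
    return ($StatusCode -eq 200) -and ($ContentLength -gt 0)
}

Test-ResponseOk -StatusCode 200 -ContentLength 1024   # True
Test-ResponseOk -StatusCode 200 -ContentLength 0      # False (empty body)
Test-ResponseOk -StatusCode 500 -ContentLength 312    # False (error status)
```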

Implementing an Http-Monitor in Powershell

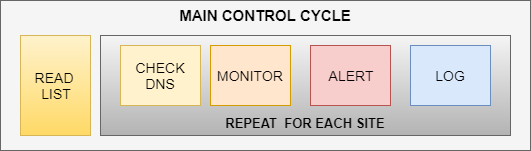

In this chapter I will go through the most important parts of the application. I want to focus on the “non-standard” parts, because I think they are the most interesting. Let’s start by explaining what http-monitor does:

- Read a list of URLs from a configuration file => Input process
- Check where DNS points => Avoiding false positives
- Make an HTTP call and collect the results => Do Check
- Store the result to the db => Result logging
- Send an email in case of error => Alerting

Input process

This is simply implemented with a “Get-Content” on a txt input file. Each line represents a URL to be monitored. Here is the snippet that implements it; I don't think it needs further explanation.

$webSitesToMonitor = Get-Content $dbpath
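
If the input file is edited by hand, it may be worth filtering what comes out of Get-Content. The sketch below is an assumption, not what http-monitor does: the function name `Get-MonitoredUrl` is hypothetical, and dropping blank lines, `#` comments and anything without an http(s) prefix is a convention added for the example.

```powershell
# Hypothetical reader: trims each line and keeps only http(s) URLs,
# which also drops blank lines and '#' comment lines.
function Get-MonitoredUrl {
    param([string]$Path)
    Get-Content -Path $Path |
        ForEach-Object { $_.Trim() } |
        Where-Object { $_ -match '^https?://' }
}

# Demo with a throwaway file in the temp folder
$sample = Join-Path ([System.IO.Path]::GetTempPath()) 'http-monitor-sample.txt'
Set-Content -Path $sample -Value @(
    'https://example.com'
    ''
    '# retired site'
    '  http://example.org/health  '
    'not-a-url'
)
Get-MonitoredUrl -Path $sample   # https://example.com and http://example.org/health
Remove-Item -Path $sample
```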

Avoiding false positives

When you have to monitor a big list of sites or URLs, maintaining that list becomes a big issue. The real problem is that a website may be decommissioned or moved to another server or service supplier. So I introduced the possibility of telling http-monitor which server IPs have to be taken into account. The scenario is the following:

- I have many servers, e.g. SERVER A and SERVER B
- At the beginning I had 1000 sites on these 2 servers
- Now some websites have changed service supplier and I’m no longer responsible for them

The definitive solution would be to edit the input list and remove the entries that are no longer useful, I agree with you. In the real world, telling the system that only the websites hosted on your servers have to be monitored is a good way to avoid monitoring useless things and handling irrelevant alerts. Of course, you can always periodically check which entries are being ignored and update the input list accordingly.

This is implemented through DNS resolution. If the DNS does not point to one of the configured IPs, the site is not monitored.

try

{

$ip=[System.Net.Dns]::GetHostAddresses($line.Substring($line.IndexOf("//")+2)).IPAddressToString

Write-Host $line " respond to ip " $ip

$monitorStatus="OK"

if($monitoring.Length -gt 0)

{

$toMonitor=$toMonitor -and $monitoring.Contains($ip)

if($toMonitor -eq $false)

{

$monitorStatus="SKIPPED"

}

}

}

catch

{

$toMonitor=$false

Write-Warning " $line unable to resolve IP "

# Set the status before rethrowing: statements after throw never run

$monitorStatus="NOT RESOLVED"

throw $_

}
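
One thing to keep in mind with the Substring approach is that it keeps everything after `//`, so a URL with a path is passed to the resolver as `host/path`. A possible alternative, sketched here as an assumption and not as the script's actual code, is to let `System.Uri` extract the host and to keep the IP filter in a small function. Both function names are hypothetical.

```powershell
# Hypothetical helper: extract only the host name from a URL.
function Get-UrlHost {
    param([string]$Url)
    return ([System.Uri]$Url).Host
}

# Hypothetical helper: true when no filter is configured, or when at least
# one resolved address belongs to the list of monitored server IPs.
function Test-IpMonitored {
    param(
        [string[]]$ResolvedIp,
        [string[]]$MonitoredIp
    )
    if ($MonitoredIp.Count -eq 0) { return $true }
    foreach ($ip in $ResolvedIp) {
        if ($MonitoredIp -contains $ip) { return $true }
    }
    return $false
}

Get-UrlHost 'https://www.example.com/shop/index.php?id=3'   # www.example.com
Get-UrlHost 'http://example.org:8080/'                      # example.org

# For comparison, the Substring expression keeps the path
$u = 'https://www.example.com/shop'
$u.Substring($u.IndexOf('//') + 2)                          # www.example.com/shop

Test-IpMonitored -ResolvedIp '10.0.0.1' -MonitoredIp '10.0.0.1', '10.0.0.2'   # True
Test-IpMonitored -ResolvedIp '192.0.2.7' -MonitoredIp '10.0.0.1', '10.0.0.2'  # False
```

The resolved addresses would still come from `[System.Net.Dns]::GetHostAddresses`, called with the output of `Get-UrlHost`.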

Do Check

Do check is the easiest part because PowerShell provides a simple command for it. It returns a response object from which we can read the status, the content length and so on, while the timing is measured around the call.

try

{

$RequestTime = Get-Date

$R = Invoke-WebRequest -URI $line -UserAgent $userAgent

$TimeTaken = ((Get-Date) - $RequestTime).TotalMilliseconds

$status=$R.StatusCode

$len=$R.RawContentLength

}

catch

{

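# Invoke-WebRequest throws on HTTP error codes, so take the timing and the status here

$TimeTaken = ((Get-Date) - $RequestTime).TotalMilliseconds

$status=$_.Exception.Response.StatusCode.Value__

$len=0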

}
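
The timing part of the check can also be isolated in a small wrapper so that the elapsed time is recorded whether the call succeeds or fails. This is a sketch under assumptions: `Invoke-Timed` is a hypothetical name and the script itself uses the Get-Date subtraction shown above.

```powershell
# Hypothetical wrapper: runs a script block, captures a terminating error
# if one occurs, and always reports the elapsed milliseconds.
function Invoke-Timed {
    param([scriptblock]$Action)
    $watch   = [System.Diagnostics.Stopwatch]::StartNew()
    $result  = $null
    $failure = $null
    try {
        $result = & $Action
    } catch {
        $failure = $_
    } finally {
        $watch.Stop()
    }
    [pscustomobject]@{
        Result    = $result
        Error     = $failure
        ElapsedMs = $watch.ElapsedMilliseconds
    }
}

$probe = Invoke-Timed { Start-Sleep -Milliseconds 50; 'done' }
$probe.Result      # done
$probe.ElapsedMs   # elapsed time in milliseconds

$failed = Invoke-Timed { throw 'boom' }
$null -ne $failed.Error   # True
```

In the monitor loop the script block would be the web call, for example `Invoke-Timed { Invoke-WebRequest -Uri $line -UserAgent $userAgent }`.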

Result logging

The best way to store results is a database. I also thought about a CSV file to avoid the database dependency, but CSV data is hard to process: you would have to import it into a database every time you need a complex query. So I decided to use a database (MSSQL, the Express edition is fine!) with the option to disable this function. Writing to the database is very easy and takes me back to the early 2000s, when using plain ADO.NET was common practice.

Function CreateTableIfNotExists

{

[CmdletBinding()]

Param(

[System.Data.SqlClient.SqlConnection]$OpenSQLConnection

)

$script=@"

if not exists (select * from sysobjects where name='logs' and xtype='U')

CREATE TABLE [logs]

( [date] [datetime] NOT NULL DEFAULT (getdate()) ,

[site] [varchar](500) NULL,

[status] [varchar](50) NULL,

[length] [bigint] NULL,

[time] [bigint] NULL,

[ip] [varchar](50) NULL,

[monitored] [varchar](50) NULL

) ON [PRIMARY]

"@

$sqlCommand = New-Object System.Data.SqlClient.SqlCommand

$sqlCommand.Connection = $OpenSQLConnection # use the connection passed as parameter

$sqlCommand.CommandText =$script

$result= $sqlCommand.ExecuteNonQuery()

Write-Warning "Table log created $result"

}

Function WriteLogToDB {

[CmdletBinding()]

Param(

[System.Data.SqlClient.SqlConnection]$OpenSQLConnection,

[string]$site,

[int]$status,

[int]$length,

[int]$time,

[string]$ip,

[string]$monitored

)

$sqlCommand = New-Object System.Data.SqlClient.SqlCommand

# Use the connection passed as parameter

$sqlCommand.Connection = $OpenSQLConnection

$sqlCommand.CommandText =

"INSERT INTO [dbo].[logs] ([site] ,[status] ,[length] ,[time],[ip],[monitored]) "+

" VALUES (@site,@status ,@length ,@time,@ip,@monitored) "

# New-Object is wrapped in parentheses so it can be used as a method argument

$sqlCommand.Parameters.Add((New-Object System.Data.SqlClient.SqlParameter("@site",[Data.SQLDBType]::NVarChar, 500))) | Out-Null

$sqlCommand.Parameters.Add((New-Object System.Data.SqlClient.SqlParameter("@status",[Data.SQLDBType]::NVarChar, 500))) | Out-Null

$sqlCommand.Parameters.Add((New-Object System.Data.SqlClient.SqlParameter("@length",[Data.SQLDBType]::BigInt))) | Out-Null

$sqlCommand.Parameters.Add((New-Object System.Data.SqlClient.SqlParameter("@time",[Data.SQLDBType]::BigInt))) | Out-Null

$sqlCommand.Parameters.Add((New-Object System.Data.SqlClient.SqlParameter("@ip",[Data.SQLDBType]::NVarChar, 500))) | Out-Null

$sqlCommand.Parameters.Add((New-Object System.Data.SqlClient.SqlParameter("@monitored",[Data.SQLDBType]::NVarChar, 500))) | Out-Null

$sqlCommand.Parameters[0].Value = $site

$sqlCommand.Parameters[1].Value = $status

$sqlCommand.Parameters[2].Value = $length

$sqlCommand.Parameters[3].Value = $time

$sqlCommand.Parameters[4].Value = $ip

$sqlCommand.Parameters[5].Value = $monitored

# The INSERT returns no value, so ExecuteNonQuery is enough

$sqlCommand.ExecuteNonQuery() | Out-Null

}

if($writeToDB -eq $true)

{

WriteLogToDB $sqlConnection $line $status $len $TimeTaken $ip $monitored

}
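
Once rows are in the logs table, the statistics mentioned earlier are a matter of grouping them. The sketch below works on rows already read back into PowerShell objects; the sample values are made up, the function name `Get-SiteSummary` is hypothetical, and in practice the same aggregation could be done with a SQL query instead.

```powershell
# Sample rows shaped like the [logs] table (made-up values for illustration)
$rows = @(
    [pscustomobject]@{ site = 'https://example.com'; status = 200; time = 120 }
    [pscustomobject]@{ site = 'https://example.com'; status = 500; time = 30 }
    [pscustomobject]@{ site = 'https://example.com'; status = 200; time = 180 }
    [pscustomobject]@{ site = 'https://example.org'; status = 200; time = 90 }
)

# Hypothetical summary: availability and average response time per site
function Get-SiteSummary {
    param([object[]]$LogRow)
    $LogRow | Group-Object -Property site | ForEach-Object {
        $okCount = @($_.Group | Where-Object { $_.status -eq 200 }).Count
        $average = ($_.Group | Measure-Object -Property time -Average).Average
        [pscustomobject]@{
            Site            = $_.Name
            Checks          = $_.Count
            AvailabilityPct = [math]::Round(100 * $okCount / $_.Count, 1)
            AvgTimeMs       = [math]::Round($average, 1)
        }
    }
}

Get-SiteSummary -LogRow $rows
```

With the sample rows, example.com has 2 successful checks out of 3 and example.org has 1 out of 1.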

Alerting

The simplest alert system I could imagine was an email alert. I know that nowadays we are flooded with thousands of irrelevant emails, so an alert may be hard to spot in the pile. Anyway, without a UI, in a tool designed to be the simplest thing in the world, this is still the best option. I also thought about writing to the event log or sending logs through syslog or a similar system. The problem is that in that scenario we would have to manually set up notifications from those systems to be “physically” notified of an error.

The implementation is quite easy, as PowerShell provides a cmdlet for that:

if($sendEmail -eq $true -and $emailErrorCodes.Length -gt 0 -and $emailErrorCodes.Contains( $R.StatusCode) )

{

$statusEmail=$R.StatusCode

$content=$R.RawContent

$subject="$errorSubject $line"

$body= @".. this value is omitted for readability "@

$attachment="$workingPath\tmp.txt"

Remove-Item $workingPath\tmp.txt -Force -ErrorAction SilentlyContinue

Write-Host $attachment

$content|Set-Content $attachment

Write-Host "Sending mail notification"

Send-MailMessage -From $emailFrom -To $emailTo -Subject $subject -Body $body -Attachments $attachment -Priority High -dno onSuccess, onFailure -SmtpServer $smtpServer

Remove-Item $workingPath\tmp.txt -Force

}
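
The alert condition can be pulled out into a small function, which makes it easy to check on its own. This is a sketch, not the script's code: `Test-ShouldAlert` is a hypothetical name, and it takes the status code as a plain number because Invoke-WebRequest normally throws on HTTP error codes, in which case the code comes from the exception rather than from the response object.

```powershell
# Hypothetical predicate: alert only when email is enabled, at least one
# error code is configured, and the observed status is one of them.
function Test-ShouldAlert {
    param(
        [bool]$SendEmail,
        [int[]]$ErrorCodes,
        [int]$Status
    )
    return $SendEmail -and ($ErrorCodes.Count -gt 0) -and ($ErrorCodes -contains $Status)
}

Test-ShouldAlert -SendEmail $true  -ErrorCodes 500, 503 -Status 503   # True
Test-ShouldAlert -SendEmail $true  -ErrorCodes 500, 503 -Status 200   # False
Test-ShouldAlert -SendEmail $false -ErrorCodes 500, 503 -Status 503   # False
Test-ShouldAlert -SendEmail $true  -ErrorCodes @()      -Status 503   # False
```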

Continuous monitoring

This is the trickiest part. The simplest solution is to schedule this PowerShell script as a Windows task. By playing with the configuration it is possible to run the operation every x minutes and to avoid concurrent instances. I also implemented it as a service. This was more of an exercise than a real need, because a PowerShell script executed as a task is a working solution that can be easily automated using the PowerShell API.

How to set up a PowerShell script as a service

In Windows, as we know, not every executable can be a service. Unlike Linux, where you can run any script as a service, in Windows we need an executable with a given structure. For example, using the .NET Framework, we need to implement a class derived from ServiceBase to expose the Start\Stop functionality. It is much the same in every other language, as the OS needs the Start\Stop APIs to control the service. To do that in PowerShell I found an interesting reference implementation that works as follows:

- Embed a C# class inside the PowerShell script, as a string
- In this class, implement Start\Stop methods that invoke the PowerShell script
- Most parts of the class are dynamic, so details like paths are set during setup
- A setup method, where
  - The class is filled in with the running parameters
  - The class is compiled
  - A service-compatible executable is created (in the same folder as the script)
  - This executable is registered as a service.

$source = @"

using System;

using System.ServiceProcess;

using System.Diagnostics;

using System.Runtime.InteropServices; // SET STATUS

using System.ComponentModel; // SET STATUS

public class Service_$serviceName : ServiceBase {

[DllImport("advapi32.dll", SetLastError=true)] // SET STATUS

private static extern bool SetServiceStatus(IntPtr handle, ref ServiceStatus serviceStatus);

protected override void OnStart(string [] args) {

EventLog.WriteEntry(ServiceName, "$exeName OnStart() // Entry. Starting script '$scriptCopyCname' -Start"); // EVENT LOG

// Set the service state to Start Pending. // SET STATUS [

// Only useful if the startup time is long. Not really necessary here for a 2s startup time.

serviceStatus.dwServiceType = ServiceType.SERVICE_WIN32_OWN_PROCESS;

serviceStatus.dwCurrentState = ServiceState.SERVICE_START_PENDING;

serviceStatus.dwWin32ExitCode = 0;

serviceStatus.dwWaitHint = 2000; // It takes about 2 seconds to start PowerShell

SetServiceStatus(ServiceHandle, ref serviceStatus); // SET STATUS ]

// Start a child process with another copy of this script

try {

Process p = new Process();

// Redirect the output stream of the child process.

p.StartInfo.UseShellExecute = false;

p.StartInfo.RedirectStandardOutput = true;

p.StartInfo.FileName = "PowerShell.exe";

p.StartInfo.Arguments = "-ExecutionPolicy Bypass -c & '$scriptCopyCname' -Start"; // Works if path has spaces, but not if it contains ' quotes.

p.Start();

// Read the output stream first and then wait. (To avoid deadlocks says Microsoft!)

string output = p.StandardOutput.ReadToEnd();

// Wait for the completion of the script startup code, that launches the -Service instance

p.WaitForExit();

if (p.ExitCode != 0) throw new Win32Exception((int)(Win32Error.ERROR_APP_INIT_FAILURE));

// Success. Set the service state to Running. // SET STATUS

serviceStatus.dwCurrentState = ServiceState.SERVICE_RUNNING; // SET STATUS

} catch (Exception e) {

EventLog.WriteEntry(ServiceName, "$exeName OnStart() // Failed to start $scriptCopyCname. " + e.Message, EventLogEntryType.Error); // EVENT LOG

// Change the service state back to Stopped. // SET STATUS [

serviceStatus.dwCurrentState = ServiceState.SERVICE_STOPPED;

Win32Exception w32ex = e as Win32Exception; // Try getting the WIN32 error code

if (w32ex == null) { // Not a Win32 exception, but maybe the inner one is...

w32ex = e.InnerException as Win32Exception;

}

if (w32ex != null) { // Report the actual WIN32 error

serviceStatus.dwWin32ExitCode = w32ex.NativeErrorCode;

} else { // Make up a reasonable reason

serviceStatus.dwWin32ExitCode = (int)(Win32Error.ERROR_APP_INIT_FAILURE);

} // SET STATUS ]

} finally {

serviceStatus.dwWaitHint = 0; // SET STATUS

SetServiceStatus(ServiceHandle, ref serviceStatus); // SET STATUS

EventLog.WriteEntry(ServiceName, "$exeName OnStart() // Exit"); // EVENT LOG

}

}

protected override void OnStop() {

EventLog.WriteEntry(ServiceName, "$exeName OnStop() // Entry"); // EVENT LOG

// Start a child process with another copy of ourselves

Process p = new Process();

// Redirect the output stream of the child process.

p.StartInfo.UseShellExecute = false;

p.StartInfo.RedirectStandardOutput = true;

p.StartInfo.FileName = "PowerShell.exe";

p.StartInfo.Arguments = "-c & '$scriptCopyCname' -Stop"; // Works if path has spaces, but not if it contains ' quotes.

p.Start();

// Read the output stream first and then wait.

string output = p.StandardOutput.ReadToEnd();

// Wait for the PowerShell script to be fully stopped.

p.WaitForExit();

// Change the service state back to Stopped. // SET STATUS

serviceStatus.dwCurrentState = ServiceState.SERVICE_STOPPED; // SET STATUS

SetServiceStatus(ServiceHandle, ref serviceStatus); // SET STATUS

EventLog.WriteEntry(ServiceName, "$exeName OnStop() // Exit"); // EVENT LOG

}

public static void Main() {

System.ServiceProcess.ServiceBase.Run(new Service_$serviceName());

}

}

"@

try {

$pss = Get-Service $serviceName -ea stop

Write-Warning "Service installed nothing to do."

exit 0

} catch {

Write-Debug "Installation is necessary"

}

if (!(Test-Path $installDir)) {

New-Item -ItemType directory -Path $installDir | Out-Null

}

try {

Write-Verbose "Compiling $exeFullName"

Add-Type -TypeDefinition $source -Language CSharp -OutputAssembly $exeFullName -OutputType ConsoleApplication -ReferencedAssemblies "System.ServiceProcess" -Debug:$false

} catch {

$msg = $_.Exception.Message

Write-error "Failed to create the $exeFullName service stub. $msg"

exit 1

}

Write-Verbose "Registering service $serviceName"

$pss = New-Service $serviceName $exeFullName -DisplayName $serviceDisplayName -Description $ServiceDescription -StartupType Automatic

Note: this code is derived from JFLarvoire's script (really great work!) and adapted to the needs of http-monitor. Please refer to the original implementation if you are interested in running a PowerShell script as a Windows service.

Dynamic configuration

This point is important for deploying the script across many servers or monitoring workstations. The easiest approach you can think of is to put a lot of constants at the top of the script and change them during the initial setup. This is very easy to implement but hard to manage as the software changes: since you edited the file, every upgrade requires a merge instead of a simple overwrite. So I looked at the PowerShell data file solution (psd1 files). This would be a great way to store all configuration data externally, except that such data is static. As you can see in this great example, you can store settings in a psd1 file and then load them into the application. But in my case I have a lot of parameters that are dynamic. The most important issue is paths: I want to define a base path and then compute many other paths by concatenating the base path with relative paths. I also want to define the database username\password once and then build the connection string dynamically, with the possibility of adding parameters to the connection string itself.

So, to get this flexibility, I decided to use a simpler solution, maybe a bit homemade, but very functional. The main script defines all parameters itself. This means that if you want to edit them inside the script, making upgrades harder, you still can. After the parameter definitions, the application checks for a settings ps1 script. If found, it is included into the main script, and all variables defined there override the ones in the script. Since the settings file is a “real” PowerShell script, you can use variable concatenation and any other trick you may need.

$writeToDB= $true

$DBServer = "(localdb)\Projects"

$DBName = "httpstatus"

$ConnectionString = "Server=$DBServer;Database=$DBName;Integrated Security=True;"

if ( (Test-Path -Path $workingPath\settings.ps1))

{

Write-Host "Apply external changes"

. ("$workingPath\settings.ps1")

}
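
One detail worth showing is the order of evaluation: the default connection string is built before the settings file runs, so a settings file that changes only `$DBServer` would leave the old string in place. The sketch below demonstrates the override with a throwaway settings.ps1; the server name, the temp folder and the extra `Connect Timeout` parameter are assumptions made for the demo, and dot-sourcing requires an execution policy that allows running scripts.

```powershell
# Defaults, as they would appear at the top of the main script
$DBServer = '(localdb)\Projects'
$DBName   = 'httpstatus'
$ConnectionString = "Server=$DBServer;Database=$DBName;Integrated Security=True;"

# Hypothetical settings.ps1, written to a temp folder only for this demo
$workingPath = Join-Path ([System.IO.Path]::GetTempPath()) 'http-monitor-demo'
New-Item -ItemType Directory -Path $workingPath -Force | Out-Null
$settingsFile = Join-Path $workingPath 'settings.ps1'
Set-Content -Path $settingsFile -Value @'
$DBServer = 'SQL01\SQLEXPRESS'
# Derived values must be rebuilt here: the default was computed before this file ran
$ConnectionString = "Server=$DBServer;Database=$DBName;Integrated Security=True;Connect Timeout=5;"
'@

if (Test-Path -Path $settingsFile) {
    . $settingsFile      # dot-sourcing runs the file in the current scope
}

$ConnectionString   # Server=SQL01\SQLEXPRESS;Database=httpstatus;Integrated Security=True;Connect Timeout=5;
Remove-Item -Path $workingPath -Recurse -Force
```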

See it in action: Setup and usage instructions

How to install

Simply put, the Powershell Http Monitor tool can be used in three ways.

- As a standalone application, to be manually run
- As a scheduled task, to be scheduled periodically
- As a service, to run continuously in background

Run once

With this command the script reads all websites, tries to call them and finally writes the results into the MSSQL database or log files. This usage mode doesn't require any installation. It can also be scheduled using Windows scheduled tasks.

Http-Monitor -Run

Run as scheduled task

It is very easy to configure. Key settings:

See screenshot for all steps:

- Create a new scheduled task
- Setup timing: the trick is to define a task starting once (now) but repeated indefinitely every x minutes.
- Define startup script: this is easy. You only have to enter the following text into the edit box (just replace it with your absolute file path): Powershell -file "<pathtofile>\Http-Monitor.ps1"

Avoid multiple instances

The last step of the wizard is needed to avoid multiple instances running at the same time. Just choose "Do not start a new instance" in the drop-down.
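
The wizard steps above can also be scripted. The following is a sketch, assuming the ScheduledTasks module cmdlets that ship with Windows 8 / Server 2012 and later; the function name, the task name and the 5-minute default are hypothetical. It only runs on Windows, usually from an elevated prompt, and on older Windows versions the trigger may also need an explicit `-RepetitionDuration`.

```powershell
# Hypothetical helper: registers the monitor as a task that starts once (now),
# repeats every N minutes, and never starts a second instance while one runs.
function Register-HttpMonitorTask {
    param(
        [Parameter(Mandatory)][string]$ScriptPath,   # absolute path to Http-Monitor.ps1
        [int]$EveryMinutes = 5,
        [string]$TaskName = 'Http-Monitor'
    )
    $action   = New-ScheduledTaskAction -Execute 'PowerShell.exe' -Argument "-File `"$ScriptPath`""
    $trigger  = New-ScheduledTaskTrigger -Once -At (Get-Date) -RepetitionInterval (New-TimeSpan -Minutes $EveryMinutes)
    $settings = New-ScheduledTaskSettingsSet -MultipleInstances IgnoreNew
    Register-ScheduledTask -TaskName $TaskName -Action $action -Trigger $trigger -Settings $settings
}

# Usage (replace the placeholder with your absolute path):
# Register-HttpMonitorTask -ScriptPath '<pathtofile>\Http-Monitor.ps1' -EveryMinutes 10
```

`-MultipleInstances IgnoreNew` is the scripted counterpart of the drop-down choice described above.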

Run as service

This installs the PowerShell script as a service.

PS> Http-Monitor -Setup

Once it is installed you can control it using the script or services.msc

PS> Http-Monitor -Start

PS> Http-Monitor -Stop

Configure

As Powershell Http Monitor is a bare application with no UI, configuration is the trickiest part, but in the end there are only a few things to learn.

- Application settings: these can be edited inside the main application script or using an external script.
- Input: there is a text file with one host for each row.

Application settings

Input file

The file path must match the path in the application settings. The file must contain the list of websites, with the http or https prefix. This is usually a list of home pages but, by design, Powershell Monitoring Tool can accept any other URL.

Generate website list from IIS

This may be useful to monitor all the sites on an IIS server. To do this, use the appcmd command to dump the bindings into a text file. The output is easy to process with a regex to turn it into a list of site URLs.
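
As a rough sketch of that regex step, the function below turns lines in the style of `appcmd list site` output into URLs. The sample lines, the function name `ConvertFrom-AppCmdSite` and the exact binding layout (`protocol/ip:port:hostname`) are assumptions to verify against your own dump; bindings without a host name are skipped, and IPv6 bindings are not handled.

```powershell
# Hypothetical converter: one URL per http/https binding that has a host name.
function ConvertFrom-AppCmdSite {
    param([string[]]$Line)
    foreach ($entry in $Line) {
        # Assumed binding layout: protocol/ip:port:hostname
        foreach ($m in [regex]::Matches($entry, '(https?)/[^:,]*:(\d+):([^,)\s]+)')) {
            $scheme = $m.Groups[1].Value
            $port   = [int]$m.Groups[2].Value
            $name   = $m.Groups[3].Value
            $isDefaultPort = ($scheme -eq 'http' -and $port -eq 80) -or
                             ($scheme -eq 'https' -and $port -eq 443)
            if ($isDefaultPort) { "${scheme}://$name" } else { "${scheme}://${name}:$port" }
        }
    }
}

# Made-up sample in the assumed appcmd format
$sample = @(
    'SITE "Default Web Site" (id:1,bindings:http/*:80:,state:Started)'
    'SITE "Shop" (id:2,bindings:http/*:80:shop.example.com,https/*:443:shop.example.com,state:Started)'
    'SITE "Intranet" (id:3,bindings:http/10.0.0.5:8080:intranet.example.com,state:Stopped)'
)
ConvertFrom-AppCmdSite -Line $sample
# http://shop.example.com
# https://shop.example.com
# http://intranet.example.com:8080
```

The resulting list can be written to the input file with Set-Content.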

Settings

You can configure application settings in two ways:

- editing the script inline (see section "Application settings")
- managing settings in a separate file (recommended). The application looks for a "settings.ps1" file in the app directory and overrides the default settings with the values found there.

You can start from a copy of the settings file, renamed correctly (download file).

Settings are very easy to understand and with some attention you will be able to get the right tuning.

Conclusion

PowerShell is a powerful tool that helps us build simple solutions very quickly. The fact that you can potentially do everything the .NET Framework offers, in conjunction with a set of built-in functions tightly integrated with the OS, makes it a place where everything is allowed. PowerShell comes with an IDE, which is quite a revolution for anyone used to writing OS-level scripts. It is not Visual Studio, but it is good enough to help you write and debug code. The amount of resources and ready-to-use scripts shared on the internet is also a plus. So, what's the catch?

I repeat that PowerShell is a great tool, but... it is still a scripting tool. So there is a point where it has to stop and give way to other, more structured solutions. This application, in my opinion, reaches that limit. It writes to a db, reads inputs, sends email alerts and installs itself. It was very interesting to push PowerShell to this limit, but looking forward I can say that the next step will be based on another platform, as complexity will grow. Writing to a db without an ORM, reading data without a UI, using email as the only alerting channel... It's great for getting a quick result that is easy to shape around our needs and to share, but as we need more, this is no longer the right tool.

So, about next steps: it would be nice if this tool were useful to others, and I would be proud if someone contributed to it. This could be a good starting point for monitoring websites, but also an interesting experience on which to found a real-world monitoring tool. Maybe with another stack, maybe with a UI, ideally with another technology...

History

- 2017-11-18: published on codeproject

- 2017-10-23: first release on github

- 2017-10-17: start working on it