In the previous post, we saw how to train an NN model using MinibatchSource. That approach is the usual choice when we have a large amount of data. When the data set is small, all of the data can be loaded into memory and passed to the trainer in every iteration. This blog post implements this way of feeding the trainer.

We will reuse the previous implementation, so the starting point can be the previous source code. For data loading, we have to define a new method. The Iris data is stored in text format like the following:

sepal_length,sepal_width,petal_length,petal_width,species

5.1,3.5,1.4,0.2,setosa(1 0 0)

7.0,3.2,4.7,1.4,versicolor(0 1 0)

7.6,3.0,6.6,2.1,virginica(0 0 1)

...

The output column is encoded using the 1-of-N (one-hot) encoding rule we have seen previously, so each species is represented by three 0/1 values.
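
As a small illustration of the 1-of-N rule, the sketch below turns a species name into its 0/1 vector. The `OneHot` class and its `Encode` method are hypothetical helpers, not part of CNTK or of the original source code, and the class order is an assumption taken from the sample rows above.

```csharp
using System;

public static class OneHot
{
    // Assumed class order, taken from the sample rows shown above.
    static readonly string[] Classes = { "setosa", "versicolor", "virginica" };

    // Returns a vector with 1 at the position of the class and 0 elsewhere.
    public static float[] Encode(string species)
    {
        int index = Array.IndexOf(Classes, species);
        if (index < 0)
            throw new ArgumentException($"Unknown species: {species}");

        var vector = new float[Classes.Length]; // all elements start at 0
        vector[index] = 1f;
        return vector;
    }
}
```

For example, `OneHot.Encode("versicolor")` yields the vector 0 1 0, matching the second sample row.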

The method will read all the data from the file, parse the data and create two float arrays:

float[] feature and float[] label

As can be seen, both arrays are 1D: all samples are stored one after another in a single flat array, because CNTK requires it. Since the data is in a 1D array, we also have to provide the dimensionality of the data so that CNTK can resolve the values for each feature. The following listing shows how the Iris data is loaded into two 1D arrays returned as a tuple.

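    // Requires: using System.Collections.Generic; using System.Globalization; using System.IO;
    static (float[], float[]) loadIrisDataset(string filePath, int featureDim, int numClasses)
    {
        var rows = File.ReadAllLines(filePath);
        var features = new List<float>();
        var label = new List<float>();

        // Start at 1 to skip the header row.
        for (int i = 1; i < rows.Length; i++)
        {
            // Skip empty lines, e.g. a trailing newline at the end of the file.
            if (string.IsNullOrWhiteSpace(rows[i]))
                continue;

            var row = rows[i].Split(',');

            // The first featureDim columns are the feature values.
            var input = new float[featureDim];
            for (int j = 0; j < featureDim; j++)
            {
                input[j] = float.Parse(row[j], CultureInfo.InvariantCulture);
            }

            // The next numClasses columns are the one-hot encoded label.
            var output = new float[numClasses];
            for (int k = 0; k < numClasses; k++)
            {
                int oIndex = featureDim + k;
                output[k] = float.Parse(row[oIndex], CultureInfo.InvariantCulture);
            }

            // Append the row to the flat 1D arrays.
            features.AddRange(input);
            label.AddRange(output);
        }

        return (features.ToArray(), label.ToArray());
    }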
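
Because the loader appends the values of each row one after another, value j of sample i should sit at index i * dim + j of the flat array, and the number of samples is the array length divided by dim. The following sketch shows that arithmetic; `FlatBatch`, `SampleCount` and `At` are hypothetical names introduced only for illustration and are not part of CNTK.

```csharp
using System;

public static class FlatBatch
{
    // Number of samples stored in a flat array that holds `dim` values per sample.
    public static int SampleCount(float[] data, int dim)
    {
        if (dim <= 0 || data.Length % dim != 0)
            throw new ArgumentException("Array length must be a multiple of dim.");
        return data.Length / dim;
    }

    // Value j of sample i, assuming samples are stored one after another.
    public static float At(float[] data, int dim, int i, int j)
    {
        return data[i * dim + j];
    }
}
```

For example, with the first two sample rows stored as `{ 5.1f, 3.5f, 1.4f, 0.2f, 7.0f, 3.2f, 4.7f, 1.4f }` and `dim = 4`, `SampleCount` returns 2 and `At(data, 4, 1, 2)` returns `4.7f`, the petal length of the second row.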

Once the data is loaded, we need to change only a small part of the previous code in order to pass the whole data set as one batch instead of using MinibatchSource. At the beginning, we define several variables that describe the NN model structure. Then we call loadIrisDataset and define xValues and yValues, which hold the batch data for the features and labels. We also create the feature and label input variables, and a dictionary which connects those variables with the data values and which we will pass to the trainer later.

The code that creates the NN model, the loss and evaluation functions, and the learning rate is the same as in the previous version.

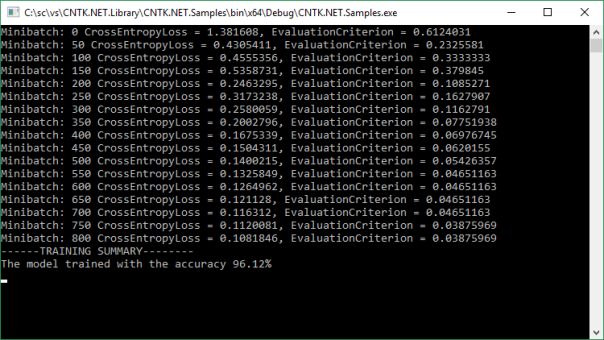

Then we create a loop of 800 iterations. Once the loop reaches the maximum number of iterations, the program outputs the model properties and terminates.

All of this is implemented in the following code:

    // Requires: using System; using System.Collections.Generic; using CNTK;
    // createFFNN and printTrainingProgress come from the previous post.
    public static void TrainIriswithBatch(DeviceDescriptor device)
    {
        var iris_data_file = "Data/iris_with_hot_vector.csv";

        // NN model structure.
        int inputDim = 4;
        int numOutputClasses = 3;
        int numHiddenLayers = 1;
        int hidenLayerDim = 6;
        int sampleSize = 130;

        // Load the whole data set and wrap it in batch values.
        var dataSet = loadIrisDataset(iris_data_file, inputDim, numOutputClasses);
        var xValues = Value.CreateBatch<float>(new NDShape(1, inputDim), dataSet.Item1, device);
        var yValues = Value.CreateBatch<float>(new NDShape(1, numOutputClasses), dataSet.Item2, device);

        // Input variables for the features and labels.
        var feature = Variable.InputVariable(new NDShape(1, inputDim), DataType.Float);
        var label = Variable.InputVariable(new NDShape(1, numOutputClasses), DataType.Float);

        // Connect each input variable with its data; the trainer receives this dictionary.
        var dic = new Dictionary<Variable, Value>();
        dic.Add(feature, xValues);
        dic.Add(label, yValues);

        // Model, loss and evaluation functions, and learner, as in the previous version.
        var ffnn_model = createFFNN(feature, numHiddenLayers, hidenLayerDim,
            numOutputClasses, Activation.Tanh, "IrisNNModel", device);
        var trainingLoss = CNTKLib.CrossEntropyWithSoftmax
            (new Variable(ffnn_model), label, "lossFunction");
        var classError = CNTKLib.ClassificationError
            (new Variable(ffnn_model), label, "classificationError");
        var learningRatePerSample = new TrainingParameterScheduleDouble(0.001125, 1);
        var ll = Learner.SGDLearner(ffnn_model.Parameters(), learningRatePerSample);
        var trainer = Trainer.CreateTrainer(ffnn_model, trainingLoss, classError, new Learner[] { ll });

        // Pass the full batch to the trainer in each of the 800 iterations.
        int epochs = 800;
        int i = 0;
        while (epochs > 0)
        {
            trainer.TrainMinibatch(dic, device);
            printTrainingProgress(trainer, i++, 50);
            epochs--;
        }

        // Accuracy is derived from the evaluation (classification error) of the last batch.
        double acc = Math.Round((1.0 - trainer.PreviousMinibatchEvaluationAverage()) * 100, 2);
        Console.WriteLine("------TRAINING SUMMARY--------");
        Console.WriteLine($"The model trained with the accuracy {acc}%");
    }
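
The last lines of the listing derive the accuracy from the value returned by PreviousMinibatchEvaluationAverage, which for the ClassificationError function should be the fraction of misclassified samples in the last batch. A minimal sketch of that conversion follows; `TrainingSummary` and `AccuracyPercent` are hypothetical names used only for illustration.

```csharp
using System;

public static class TrainingSummary
{
    // Converts an average classification error (between 0 and 1)
    // into an accuracy in percent, rounded to two decimals.
    public static double AccuracyPercent(double averageError)
    {
        if (averageError < 0.0 || averageError > 1.0)
            throw new ArgumentOutOfRangeException(nameof(averageError));
        return Math.Round((1.0 - averageError) * 100, 2);
    }
}
```

For an average error of 0.02, the helper returns an accuracy of 98.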

If we run TrainIriswithBatch, the output will be the same as we got from the previous blog post example.

The full source code with training data can be downloaded here.
