Introduction

In this article, you'll learn how to set up a development environment to use Elasticsearch and Kibana with logging purposes. During this process, you will use Docker, very basic usage, and you will learn, also, how easy it is to use .NET Standard libraries within our Windows Classic Desktop Applications.

Background

I will demonstrate that Elasticsearch and Kibana integration within our systems is quite easy. To do so, I'll set up and configure a Docker network and two Docker Images, one for Elasticsearch and another for Kibana. This infrastructure will be used by a Windows Classic Desktop console that is going to perform some randomized logging. Continuing with what I showed in the last article, regarding proper logging in .NET Core, I'm going to configure three logging outputs, the console, and with Serilog, I'll configure and use a rolling file shink and a Elasticsearch Shink. These Shinks are going to be used within a .NET Standard 2 library, but this library is going to be referenced by the console application, using latest Microsoft Dependency Injection Extensions.

Setting Up the Infrastructure

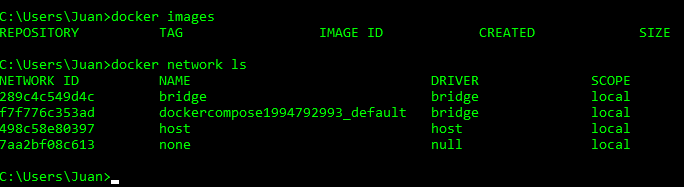

First of all, we need to have Docker installed and well configured in our machine, I'm using W10 with Docker for Windows. (In case you don't have it, you can find it here). We only need to run three commands to set everything with Docker. (This is awesome, isn't it?)

docker network create elk-logging-poc --driver=bridgedocker run -p 5601:5601 --name kibana -d --network elk-logging-poc kibana docker run -p 9200:9200 -p 9300:9300 --name elasticsearch -d --network elk-logging-poc elasticsearch

The first one creates a network to be used in this Proof Of Concept, the second runs Kibana, pulling the image from Docker Hub if it doesn't exist locally and the third one does the same with Elasticsearch.

Initial Status:

- Create Network:

- Install and run Kibana:

At this point, Kibana is installed but if we go to localhost:5601, we can see that Kibana is missing Elasticsearch:

Install Elasticsearch:

And now Kibana is ready to be configured:

Setting Up the Solution

Well, now it is time to set up our solution, and, as I said, I am going to use a Windows Classic Desktop console application and a .NET Standard Library. So, I'll create a new solution and the WCD console project:

Hint: Although it is a POC, I always try to use robust namespacing, I always have in mind that a POC can become a prototype and after that, a product. These things happen.

Now, let's create the .NET Standard library:

And the result will look like:

Console Files

In order to save you time, you need to install the NuGet packages listed in the packages.config to the Console application, and is easier to do it manually.

packages.config

="1.0"="utf-8"

<packages>

<package id="Elasticsearch.Net"

version="5.5.0" targetFramework="net462" />

<package id="Microsoft.DotNet.InternalAbstractions"

version="1.0.0" targetFramework="net462" />

<package id="Microsoft.Extensions.Configuration"

version="2.0.0" targetFramework="net462" />

<package id="Microsoft.Extensions.Configuration.Abstractions"

version="2.0.0" targetFramework="net462" />

<package id="Microsoft.Extensions.Configuration.Binder"

version="2.0.0" targetFramework="net462" />

<package id="Microsoft.Extensions.Configuration.FileExtensions"

version="2.0.0" targetFramework="net462" />

<package id="Microsoft.Extensions.Configuration.Json"

version="2.0.0" targetFramework="net462" />

<package id="Microsoft.Extensions.DependencyInjection"

version="2.0.0" targetFramework="net462" />

<package id="Microsoft.Extensions.DependencyInjection.Abstractions"

version="2.0.0" targetFramework="net462" />

<package id="Microsoft.Extensions.DependencyModel"

version="1.0.0" targetFramework="net462" />

<package id="Microsoft.Extensions.FileProviders.Abstractions"

version="2.0.0" targetFramework="net462" />

<package id="Microsoft.Extensions.FileProviders.Physical"

version="2.0.0" targetFramework="net462" />

<package id="Microsoft.Extensions.FileSystemGlobbing"

version="2.0.0" targetFramework="net462" />

<package id="Microsoft.Extensions.Logging"

version="2.0.0" targetFramework="net462" />

<package id="Microsoft.Extensions.Logging.Abstractions"

version="2.0.0" targetFramework="net462" />

<package id="Microsoft.Extensions.Logging.Console"

version="2.0.0" targetFramework="net462" />

<package id="Microsoft.Extensions.Logging.Debug"

version="2.0.0" targetFramework="net462" />

<package id="Microsoft.Extensions.Options"

version="2.0.0" targetFramework="net462" />

<package id="Microsoft.Extensions.Options.ConfigurationExtensions"

version="2.0.0" targetFramework="net462" />

<package id="Microsoft.Extensions.Primitives"

version="2.0.0" targetFramework="net462" />

<package id="Newtonsoft.Json"

version="10.0.1" targetFramework="net462" />

<package id="Serilog"

version="2.5.0" targetFramework="net462" />

<package id="Serilog.Extensions.Logging"

version="2.0.2" targetFramework="net462" />

<package id="Serilog.Settings.Configuration"

version="2.4.0" targetFramework="net462" />

<package id="Serilog.Sinks.Elasticsearch"

version="5.4.0" targetFramework="net462" />

<package id="Serilog.Sinks.File"

version="3.2.0" targetFramework="net462" />

<package id="Serilog.Sinks.PeriodicBatching"

version="2.1.0" targetFramework="net462" />

<package id="Serilog.Sinks.RollingFile"

version="3.3.0" targetFramework="net462" />

<package id="System.Linq"

version="4.1.0" targetFramework="net462" />

<package id="System.Resources.ResourceManager"

version="4.0.1" targetFramework="net462" />

<package id="System.Runtime"

version="4.1.0" targetFramework="net462" />

<package id="System.Runtime.CompilerServices.Unsafe"

version="4.4.0" targetFramework="net462" />

</packages

appsettings.json

{

"Logging": {

"IncludeScopes": true,

"Debug": {

"LogLevel": {

"Default": "Critical"

}

},

"Console": {

"LogLevel": {

"Microsoft.AspNetCore.Mvc.Razor.Internal": "Warning",

"Microsoft.AspNetCore.Mvc.Razor.Razor": "Debug",

"Microsoft.AspNetCore.Mvc.Razor": "Error",

"Default": "Critical"

}

},

"LogLevel": {

"Default": "Critical"

}

},

"Serilog": {

"WriteTo": [

{

"Name": "Elasticsearch",

"Args": {

"nodeUris": "http://localhost:9200;http://remotehost:9200/",

"indexFormat": "elk-poc-index-{0:yyyy.MM}",

"templateName": "myCustomTemplate",

"typeName": "myCustomLogEventType",

"pipelineName": "myCustomPipelineName",

"batchPostingLimit": 50,

"period": 2000,

"inlineFields": true,

"minimumLogEventLevel": "Trace",

"bufferBaseFilename": "C:/Logs/docker-elk-serilog-web-buffer",

"bufferFileSizeLimitBytes": 5242880,

"bufferLogShippingInterval": 5000,

"connectionGlobalHeaders":

"Authorization=Bearer SOME-TOKEN;OtherHeader=OTHER-HEADER-VALUE"

}

}

],

"LogFile": "C:/Logs/ElasticSearchPoc.log",

"MinimumLevel": "Information"

}

}

Program.cs

using ElasticSearchPoc.Domain.LogProducer;

using Microsoft.Extensions.Configuration;

using Microsoft.Extensions.DependencyInjection;

using Microsoft.Extensions.Logging;

using Serilog;

using System;

namespace ElasticSearchPoc.Presentation.WCD.Console

{

class Program

{

static void Main(string[] args)

{

var serviceCollection = new ServiceCollection();

ConfigureServices(serviceCollection);

var serviceProvider = serviceCollection.BuildServiceProvider();

serviceProvider.GetService<BasicLogProducer>().Run();

}

private static void ConfigureServices(IServiceCollection serviceCollection)

{

var configuration = new ConfigurationBuilder()

.SetBasePath(AppContext.BaseDirectory)

.AddJsonFile("appsettings.json", false)

.Build();

serviceCollection.AddSingleton(new LoggerFactory()

.AddConsole(configuration.GetSection("Logging"))

.AddSerilog()

.AddDebug());

serviceCollection.AddLogging();

Log.Logger = new LoggerConfiguration()

.WriteTo.RollingFile(configuration["Serilog:LogFile"])

.ReadFrom.Configuration(configuration)

.CreateLogger();

serviceCollection.AddSingleton(configuration);

serviceCollection.AddTransient<BasicLogProducer>();

}

}

}

I'd like to add few comments about those files, take a look at the different log levels in appsettings.json and the Elasticsearch options, settings are key on this POC. Also, I'd like to emphasize that the source code of our program is clean and SOLID and I have to publicly say thank you to the .NET foundation and its contributors for these extremely useful libraries and extensions.

Let's take a look at the files in the .NET Standard library, LogProducer.

LogProducer Files

As you will see, its only dependency is with Microsoft.Extensions.Logging...

BasicLogProducer.cs

using Microsoft.Extensions.Logging;

using System;

using System.Threading;

namespace ElasticSearchPoc.Domain.LogProducer

{

public class BasicLogProducer

{

private readonly ILogger<BasicLogProducer> _logger;

public BasicLogProducer(ILogger<BasicLogProducer> logger)

{

_logger = logger;

}

public void Run()

{

var runDate = DateTime.Now;

while (true)

{

Array values = Enum.GetValues(typeof(LogLevel));

Random random = new Random();

LogLevel randomLogLevel = (LogLevel)values.GetValue(random.Next(values.Length));

switch (randomLogLevel)

{

case LogLevel.Trace:

_logger.LogTrace($"RunDate: {runDate};

Message Id: {Guid.NewGuid()}; LogLevel: Trace;

LogLevelValue: {randomLogLevel.ToString("D")}");

break;

case LogLevel.Debug:

_logger.LogDebug($"RunDate: {runDate};

Message Id: {Guid.NewGuid()}; LogLevel: Debug;

LogLevelValue: {randomLogLevel.ToString("D")}");

break;

case LogLevel.Information:

_logger.LogInformation($"RunDate: {runDate};

Message Id: {Guid.NewGuid()}; LogLevel: Information;

LogLevelValue: {randomLogLevel.ToString("D")}");

break;

case LogLevel.Warning:

_logger.LogWarning($"RunDate: {runDate};

Message Id: {Guid.NewGuid()}; LogLevel: Warning;

LogLevelValue: {randomLogLevel.ToString("D")}");

break;

case LogLevel.Error:

_logger.LogError($"RunDate: {runDate};

Message Id: {Guid.NewGuid()}; LogLevel: Error;

LogLevelValue: {randomLogLevel.ToString("D")}");

break;

case LogLevel.Critical:

_logger.LogCritical($"RunDate: {runDate};

Message Id: {Guid.NewGuid()}; LogLevel: Critical;

LogLevelValue: {randomLogLevel.ToString("D")}");

break;

case LogLevel.None:

default:

break;

}

Thread.Sleep(100);

}

}

}

}

Although I always try to write self-explanatory source code, this class deserves a brief explanation. It uses DI (Dependency Injection), the ILogger<T> is being created because the host app registered ILoggingFactory and Serilog so that logger is going to have whatever we have configured in the main application, in this case our three Shinks, console, file and Elasticsearch. The Run method gets initial run date and starts generating logs with a random log level on each iteration. It is very basic but it will do its job.

Configure, Run & Test

I'm not going to give too many details. I guess that with some screenshots, you'll like to try it out by yourself. First thing we have to do is to configure in Kibana the main index pattern and as you can see, that is one of the config parameters we have in our appsettings.json file, concretely. I used "indexFormat": "elk-poc-index-{0:yyyy.MM}", so we have to configure Kibana index like "elk-poc-index-*":

Well, let's launch our app and see what happens...

Console is logging only critical, according to configuration parameters:

We are also logging into a file, as stated in config file and in our Main method, I'll demonstrate with few screenshots how to use best Tail tool I know, Tail Blazer (If you have read until this point and you don't know TailBlazer, then you must go and try it right now because you'll love it):

- Plain output:

- Clicking on top right gear icon, we can add some highlights:

- And also in main window, we can add some filters:

I'll write Fatal so see only entries with Fatal keyword:

And, what about Elasticsearch and Kibana? Well, while I was doing these screenshots, the console application has been generating logs, in the console (Critical only), in files in the local file system with Information Level as we have just seen through TailBlazer, and the program has been sending logs to the Elasticsearch Shink with Trace level, so, if we go to Kibana and select Timelion menu item on the left menu, we should be able to see a graph indicating the number of logs received, something like this:

The graph makes sense because the code is sending around 10 logs per second because there is a Thread.Sleep(100), right? Let's force the application a little and set the sleep to only 10 ms. If I run it again, the timeline looks like this:

And what if I quit the sleep and I force my machine to generate as many logs as possible?

Well, the file log grows extremely fast, as expected (blue means recently created, look at the millis in the timestamp):

The CPU reach 100% (as expected also):

And Kibana receives a peak of 772 logs per second. Not too bad considering that everything is running on the same machine and I'm debugging and monitoring log files, we could probably force it quite a lot more.

And, well, that's all for today, I still don't know how to properly visualize data with Kibana but the POC finishes here because it logs, as expected.

Points of Interest

- .NET standard libraries are the best solution whenever it is possible because they are the most reusable choice nowadays.

- Configure Elasticsearch and Kibana with Docker takes only three steps.

- Logging modern .NET applications has many possibilities and we've learned how to:

- Properly log to the console

- Properly log to log files and how to visualize them in real-time with TailBlazer

- How to configure a Serilog Shink to log to Elasticsearch

History

A Sunday POC at home, probably I won't add anything else, despite some typos corrections or some clarifications if requested.